Some Arguments Are Probably Valid: Syllogistic Reasoning As Communication Michael Tessler, Noah D

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Chapter 2 Introduction to Classical Propositional

CHAPTER 2 INTRODUCTION TO CLASSICAL PROPOSITIONAL LOGIC 1 Motivation and History The origins of classical propositional logic (or classical propositional calculus, as it was, and still often is, called) go back to antiquity and are due to the Stoic school of philosophy (3rd century B.C.), whose most eminent representative was Chrysippus. But the real development of this calculus began only in the mid-19th century and was initiated by the research of the English mathematician G. Boole, who is sometimes regarded as the founder of mathematical logic. The classical propositional calculus was first formulated as a formal axiomatic system by the eminent German logician G. Frege in 1879. The assumptions underlying the formalization of classical propositional calculus are the following. Logical sentences We deal only with sentences that can always be evaluated as true or false. Such sentences are called logical sentences or propositions. Hence the name propositional logic. A statement: 2 + 2 = 4 is a proposition, as we assume that it is a well known and agreed upon truth. A statement: 2 + 2 = 5 is also a proposition (false). A statement: I am pretty is modeled, if needed, as a logical sentence (proposition). We assume that it is false, or true. A statement: 2 + n = 5 is not a proposition; it might be true for some n, for example n = 3, false for other n, for example n = 2, and moreover, we don't know what n is. Sentences of this kind are called propositional functions. We model propositional functions within propositional logic by treating propositional functions as propositions. -

Aristotle's Assertoric Syllogistic and Modern Relevance Logic*

Aristotle’s assertoric syllogistic and modern relevance logic* Abstract: This paper sets out to evaluate the claim that Aristotle’s Assertoric Syllogistic is a relevance logic or shows significant similarities with it. I prepare the grounds for a meaningful comparison by extracting the notion of relevance employed in the most influential work on modern relevance logic, Anderson and Belnap’s Entailment. This notion is characterized by two conditions imposed on the concept of validity: first, that some meaning content is shared between the premises and the conclusion, and second, that the premises of a proof are actually used to derive the conclusion. Turning to Aristotle’s Prior Analytics, I argue that there is evidence that Aristotle’s Assertoric Syllogistic satisfies both conditions. Moreover, Aristotle at one point explicitly addresses the potential harmfulness of syllogisms with unused premises. Here, I argue that Aristotle’s analysis allows for a rejection of such syllogisms on formal grounds established in the foregoing parts of the Prior Analytics. In a final section I consider the view that Aristotle distinguished between validity on the one hand and syllogistic validity on the other. Following this line of reasoning, Aristotle’s logic might not be a relevance logic, since relevance is part of syllogistic validity and not, as modern relevance logic demands, of general validity. I argue that the reasons to reject this view are more compelling than the reasons to accept it and that we can, cautiously, uphold the result that Aristotle’s logic is a relevance logic. Keywords: Aristotle, Syllogism, Relevance Logic, History of Logic, Logic, Relevance 1. -

The Validities of Pep and Some Characteristic Formulas in Modal Logic

THE VALIDITIES OF PEP AND SOME CHARACTERISTIC FORMULAS IN MODAL LOGIC Hong Zhang, Huacan He School of Computer Science, Northwestern Polytechnical University, Xi'an, Shaanxi 710032, P.R. China Abstract: In this paper, we discuss the relationships between some characteristic formulas in normal modal logic and their frames. We find that the validities of the modal formulas are conditional even though some of them are intuitively valid. Finally, we prove the validities of two formulas of the Position-Exchange-Principle (PEP) proposed by papers 1 to 3 by using modal logic and Kripke's semantics of possible worlds. Key words: Characteristic Formulas, Validity, PEP, Frame 1. INTRODUCTION Reasoning about knowledge and belief is an important issue in the research of multi-agent systems, and reasoning about other agents' states and actions, which is set up from the main ideas of situational calculus, is one of the most important directions in AI. Papers [1-3] put forward an axiom scheme for reasoning about others (RAO) in multi-agent systems, and a rule called the Position-Exchange-Principle (PEP), shown as follows, was abstracted: $C(\varphi \to \psi) \to (C\varphi \to C\psi)$, where $C$ is a sequence of belief operators. When the length of $C$ is at least 2, it reflects the mechanism of an agent reasoning about the knowledge of others. For example, if $C$ is replaced with $B_i B_j$, then it should be $B_i B_j(\varphi \to \psi) \to (B_i B_j\varphi \to B_i B_j\psi)$. The axiom schema plays a useful role, like that of modus ponens and axiom K, when simplifying proofs. Though examples were demonstrated as proofs in papers [1-3], from the perspectives of both semantics and syntax, the rule is not so compelling. -

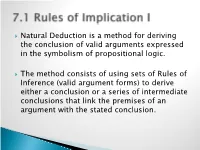

7.1 Rules of Implication I

Natural Deduction is a method for deriving the conclusion of valid arguments expressed in the symbolism of propositional logic. The method consists of using sets of Rules of Inference (valid argument forms) to derive either a conclusion or a series of intermediate conclusions that link the premises of an argument with the stated conclusion.
The First Four Rules of Inference:
◦ Modus Ponens (MP): p ⊃ q, p ∴ q
◦ Modus Tollens (MT): p ⊃ q, ~q ∴ ~p
◦ Pure Hypothetical Syllogism (HS): p ⊃ q, q ⊃ r ∴ p ⊃ r
◦ Disjunctive Syllogism (DS): p v q, ~p ∴ q
Common strategies for constructing a proof involving the first four rules:
◦ Always begin by attempting to find the conclusion in the premises. If the conclusion is not present in its entirety in the premises, look at the main operator of the conclusion. This will provide a clue as to how the conclusion should be derived.
◦ If the conclusion contains a letter that appears in the consequent of a conditional statement in the premises, consider obtaining that letter via modus ponens.
◦ If the conclusion contains a negated letter and that letter appears in the antecedent of a conditional statement in the premises, consider obtaining the negated letter via modus tollens.
◦ If the conclusion is a conditional statement, consider obtaining it via pure hypothetical syllogism.
◦ If the conclusion contains a letter that appears in a disjunctive statement in the premises, consider obtaining that letter via disjunctive syllogism.
Four Additional Rules of Inference:
◦ Constructive Dilemma (CD): (p ⊃ q) • (r ⊃ s), p v r ∴ q v s
◦ Simplification (Simp): p • q ∴ p
◦ Conjunction (Conj): p, q ∴ p • q
◦ Addition (Add): p ∴ p v q
Common Misapplications
Common strategies involving the additional rules of inference:
◦ If the conclusion contains a letter that appears in a conjunctive statement in the premises, consider obtaining that letter via simplification. -
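The eight rules named in this excerpt are valid argument forms, which can be checked by brute force over truth assignments. The following is a minimal sketch, assuming the conditional is read as material implication; the helper names `implies`, `is_valid`, and `RULES` are illustrative and do not come from the excerpt.

```python
from itertools import product


def implies(a, b):
    """Material conditional: false only when a is true and b is false."""
    return (not a) or b


def is_valid(premises, conclusion, num_vars):
    """An argument form is valid iff no truth assignment makes every
    premise true while the conclusion is false."""
    for values in product([True, False], repeat=num_vars):
        if all(prem(*values) for prem in premises) and not conclusion(*values):
            return False
    return True


# Each entry: (premises, conclusion, number of statement letters).
RULES = {
    "MP": ([lambda p, q: implies(p, q), lambda p, q: p],
           lambda p, q: q, 2),
    "MT": ([lambda p, q: implies(p, q), lambda p, q: not q],
           lambda p, q: not p, 2),
    "HS": ([lambda p, q, r: implies(p, q), lambda p, q, r: implies(q, r)],
           lambda p, q, r: implies(p, r), 3),
    "DS": ([lambda p, q: p or q, lambda p, q: not p],
           lambda p, q: q, 2),
    "CD": ([lambda p, q, r, s: implies(p, q) and implies(r, s),
            lambda p, q, r, s: p or r],
           lambda p, q, r, s: q or s, 4),
    "Simp": ([lambda p, q: p and q], lambda p, q: p, 2),
    "Conj": ([lambda p, q: p, lambda p, q: q], lambda p, q: p and q, 2),
    "Add": ([lambda p, q: p], lambda p, q: p or q, 2),
}

results = {name: is_valid(*spec) for name, spec in RULES.items()}

# A classic misapplication for contrast: affirming the consequent
# (from "if p then q" and "q", conclude "p") is not a valid form.
affirming_the_consequent = is_valid(
    [lambda p, q: implies(p, q), lambda p, q: q],
    lambda p, q: p,
    2,
)

if __name__ == "__main__":
    for name, ok in results.items():
        print(f"{name}: {'valid' if ok else 'INVALID'}")
    print("Affirming the consequent valid?", affirming_the_consequent)
```

A truth-table check like this confirms that a form is valid, but it does not produce a derivation; natural deduction is what links premises to conclusion step by step.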

Theorem Proving in Classical Logic

MEng Individual Project Imperial College London Department of Computing Theorem Proving in Classical Logic Supervisor: Dr. Steffen van Bakel Author: David Davies Second Marker: Dr. Nicolas Wu June 16, 2021 Abstract It is well known that functional programming and logic are deeply intertwined. This has led to many systems capable of expressing both propositional and first order logic, that also operate as well-typed programs. What currently ties popular theorem provers together is their basis in intuitionistic logic, where one cannot prove the law of the excluded middle, ‘A ∨ ¬A’ – that any proposition is either true or false. In classical logic this notion is provable, and the corresponding programs turn out to be those with control operators. In this report, we explore and expand upon the research about calculi that correspond with classical logic; and the problems that occur for those relating to first order logic. To see how these calculi behave in practice, we develop and implement functional languages for propositional and first order logic, expressing classical calculi in the setting of a theorem prover, much like Agda and Coq. In the first order language, users are able to define inductive data and record types; importantly, they are able to write computable programs that have a correspondence with classical propositions. Acknowledgements I would like to thank Steffen van Bakel, my supervisor, for his support throughout this project and helping find a topic of study based on my interests, for which I am incredibly grateful. His insight and advice have been invaluable. I would also like to thank my second marker, Nicolas Wu, for introducing me to the world of dependent types, and suggesting useful resources that have aided me greatly during this report. -

John P. Burgess Department of Philosophy Princeton University Princeton, NJ 08544-1006, USA [email protected]

John P. Burgess Department of Philosophy Princeton University Princeton, NJ 08544-1006, USA [email protected] LOGIC & PHILOSOPHICAL METHODOLOGY Introduction For present purposes “logic” will be understood to mean the subject whose development is described in Kneale & Kneale [1961] and of which a concise history is given in Scholz [1961]. As the terminological discussion at the beginning of the latter reference makes clear, this subject has at different times been known by different names, “analytics” and “organon” and “dialectic”, while inversely the name “logic” has at different times been applied much more broadly and loosely than it will be here. At certain times and in certain places — perhaps especially in Germany from the days of Kant through the days of Hegel — the label has come to be used so very broadly and loosely as to threaten to take in nearly the whole of metaphysics and epistemology. Logic in our sense has often been distinguished from “logic” in other, sometimes unmanageably broad and loose, senses by adding the adjectives “formal” or “deductive”. The scope of the art and science of logic, once one gets beyond elementary logic of the kind covered in introductory textbooks, is indicated by two other standard references, the Handbooks of mathematical and philosophical logic, Barwise [1977] and Gabbay & Guenthner [1983-89], though the latter includes also parts that are identified as applications of logic rather than logic proper. The term “philosophical logic” as currently used, for instance, in the Journal of Philosophical Logic, is a near-synonym for “nonclassical logic”. There is an older use of the term as a near-synonym for “philosophy of language”. -

The Substitutional Analysis of Logical Consequence

THE SUBSTITUTIONAL ANALYSIS OF LOGICAL CONSEQUENCE Volker Halbach∗ draft version · please don’t quote 2nd June 2016 Consequentia ‘formalis’ vocatur quae in omnibus terminis valet retenta forma consimili. Vel si vis expresse loqui de vi sermonis, consequentia formalis est cui omnis propositio similis in forma quae formaretur esset bona consequentia [...] Iohannes Buridanus, Tractatus de Consequentiis (Hubien 1976, I.3, p.22f) Abstract A substitutional account of logical truth and consequence is developed and defended. Roughly, a substitution instance of a sentence is defined to be the result of uniformly substituting nonlogical expressions in the sentence with expressions of the same grammatical category. In particular atomic formulae can be replaced with any formulae containing. The definition of logical truth is then as follows: A sentence is logically true iff all its substitution instances are always satisfied. Logical consequence is defined analogously. The substitutional definition of validity is put forward as a conceptual analysis of logical validity at least for sufficiently rich first-order settings. In Kreisel’s squeezing argument the formal notion of substitutional validity naturally slots in to the place of informal intuitive validity. ∗I am grateful to Beau Mount, Albert Visser, and Timothy Williamson for discussions about the themes of this paper. 1 Formal validity At the origin of logic is the observation that arguments sharing certain forms never have true premisses and a false conclusion. Similarly, all sentences of certain forms are always true. Arguments and sentences of this kind are formally valid. From the outset logicians have been concerned with the study and systematization of these arguments, sentences and their forms. -

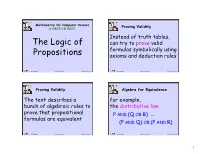

Propositional Logic (PDF)

Mathematics for Computer Science 6.042J/18.062J The Logic of Propositions Albert R Meyer February 14, 2014 Proving Validity: Instead of truth tables, can try to prove valid formulas symbolically using axioms and deduction rules. The text describes a bunch of algebraic rules to prove that propositional formulas are equivalent. Algebra for Equivalence: for example, the distributive law P AND (Q OR R) ≡ (P AND Q) OR (P AND R); for example, DeMorgan’s law NOT(P AND Q) ≡ NOT(P) OR NOT(Q). The set of rules for ≡ in the text are complete: if two formulas are ≡, these rules can prove it. A Proof System: Another approach is to start with some valid formulas (axioms) and deduce more valid formulas using proof rules. Lukasiewicz’ proof system is a particularly elegant example of this idea. It covers formulas whose only logical operators are IMPLIES (→) and NOT. Lukasiewicz’ Proof System Axioms: 1) (¬P → P) → P 2) P → (¬P → Q) 3) (P → Q) → ((Q → R) → (P → R)) The only rule: modus ponens. Prove formulas by starting with axioms and repeatedly applying the inference rule. -
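The equivalences and axioms quoted in this excerpt can be confirmed with the truth-table method that the slides contrast with symbolic proof. The sketch below is an illustration under that assumption, not the course's own code; `is_tautology`, `equivalent`, and `LUKASIEWICZ_AXIOMS` are hypothetical names. It checks that the three Lukasiewicz axioms are valid and that the distributive and De Morgan laws hold.

```python
from itertools import product


def implies(a, b):
    """IMPLIES as the material conditional."""
    return (not a) or b


def assignments(num_vars):
    """All 2**num_vars truth assignments."""
    return product([True, False], repeat=num_vars)


def is_tautology(formula, num_vars):
    """A formula is valid iff it is true under every assignment."""
    return all(formula(*v) for v in assignments(num_vars))


def equivalent(f, g, num_vars):
    """Two formulas are equivalent iff they agree under every assignment."""
    return all(f(*v) == g(*v) for v in assignments(num_vars))


# The three axioms from the excerpt, written over P, Q, R for uniformity.
LUKASIEWICZ_AXIOMS = [
    lambda p, q, r: implies(implies(not p, p), p),            # (¬P → P) → P
    lambda p, q, r: implies(p, implies(not p, q)),            # P → (¬P → Q)
    lambda p, q, r: implies(implies(p, q),
                            implies(implies(q, r), implies(p, r))),
]

axioms_valid = [is_tautology(ax, 3) for ax in LUKASIEWICZ_AXIOMS]

# Distributive law: P AND (Q OR R) ≡ (P AND Q) OR (P AND R)
distributive_holds = equivalent(
    lambda p, q, r: p and (q or r),
    lambda p, q, r: (p and q) or (p and r),
    3,
)

# De Morgan's law: NOT(P AND Q) ≡ NOT(P) OR NOT(Q)
de_morgan_holds = equivalent(
    lambda p, q: not (p and q),
    lambda p, q: (not p) or (not q),
    2,
)

if __name__ == "__main__":
    print("Axioms valid:", axioms_valid)
    print("Distributive law holds:", distributive_holds)
    print("De Morgan's law holds:", de_morgan_holds)
```

Because modus ponens preserves validity, everything derived from valid axioms is itself valid; the check above covers only the starting points, not the derivations.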

A Quasi-Classical Logic for Classical Mathematics

Theses Honors College 11-2014 A Quasi-Classical Logic for Classical Mathematics Henry Nikogosyan University of Nevada, Las Vegas Follow this and additional works at: https://digitalscholarship.unlv.edu/honors_theses Part of the Logic and Foundations Commons Repository Citation Nikogosyan, Henry, "A Quasi-Classical Logic for Classical Mathematics" (2014). Theses. 21. https://digitalscholarship.unlv.edu/honors_theses/21 This Honors Thesis is protected by copyright and/or related rights. It has been brought to you by Digital Scholarship@UNLV with permission from the rights-holder(s). You are free to use this Honors Thesis in any way that is permitted by the copyright and related rights legislation that applies to your use. For other uses you need to obtain permission from the rights-holder(s) directly, unless additional rights are indicated by a Creative Commons license in the record and/or on the work itself. This Honors Thesis has been accepted for inclusion in Theses by an authorized administrator of Digital Scholarship@UNLV. For more information, please contact [email protected]. A QUASI-CLASSICAL LOGIC FOR CLASSICAL MATHEMATICS By Henry Nikogosyan Honors Thesis submitted in partial fulfillment for the designation of Departmental Honors Department of Philosophy Ian Dove James Woodbridge Marta Meana College of Liberal Arts University of Nevada, Las Vegas November, 2014 ABSTRACT Classical mathematics is a form of mathematics that has a large range of application; however, its application has boundaries. In this paper, I show that Sperber and Wilson’s concept of relevance can demarcate classical mathematics’ range of applicability by demarcating classical logic’s range of applicability. -

Saving the Square of Opposition∗

HISTORY AND PHILOSOPHY OF LOGIC, 2021 https://doi.org/10.1080/01445340.2020.1865782 Saving the Square of Opposition∗ PIETER A. M. SEUREN Max Planck Institute for Psycholinguistics, Nijmegen, the Netherlands Received 19 April 2020 Accepted 15 December 2020 Contrary to received opinion, the Aristotelian Square of Opposition (square) is logically sound, differing from standard modern predicate logic (SMPL) only in that it restricts the universe U of cognitively constructible situations by banning null predicates, making it less unnatural than SMPL. U-restriction strengthens the logic without making it unsound. It also invites a cognitive approach to logic. Humans are endowed with a cognitive predicate logic (CPL), which checks the process of cognitive modelling (world construal) for consistency. The square is considered a first approximation to CPL, with a cognitive set-theoretic semantics. Not being cognitively real, the null set Ø is eliminated from the semantics of CPL. Still rudimentary in Aristotle’s On Interpretation (Int), the square was implicitly completed in his Prior Analytics (PrAn), thereby introducing U-restriction. Abelard’s reconstruction of the logic of Int is logically and historically correct; the loca (Leaking O-Corner Analysis) interpretation of the square, defended by some modern logicians, is logically faulty and historically untenable. Generally, U-restriction, not redefining the universal quantifier, as in Abelard and loca, is the correct path to a reconstruction of CPL. Valuation Space modelling is used to compute -

A Validity Framework for the Use and Development of Exported Assessments

A Validity Framework for the Use and Development of Exported Assessments By María Elena Oliveri, René Lawless, and John W. Young. The authors would like to acknowledge Bob Mislevy, Michael Kane, Kadriye Ercikan, and Maurice Hauck for their valuable input and expertise in reviewing various iterations of the framework as well as Priya Kannan, Don Powers, and James Carlson for their input throughout the technical review process. Copyright © 2015 Educational Testing Service. All Rights Reserved. ETS, the ETS logo, GRADUATE RECORD EXAMINATIONS, GRE, LISTENING. LEARNING. LEADING, TOEIC, and TOEFL are registered trademarks of Educational Testing Service (ETS). EXADEP and EXAMEN DE ADMISIÓN A ESTUDIOS DE POSGRADO are trademarks of ETS. Abstract In this document, we present a framework that outlines the key considerations relevant to the fair development and use of exported assessments. Exported assessments are developed in one country and are used in countries with a population that differs from the one for which the assessment was developed. Examples of these assessments include the Graduate Record Examinations® (GRE®) and el Examen de Admisión a Estudios de Posgrado™ (EXADEP™), among others. Exported assessments can be used to make inferences about performance in the exporting country or in the receiving country. To illustrate, the GRE can be administered in India and be used to predict success at a graduate school in the United States, or similarly it can be administered in India to predict success at a graduate school in India. -

The Development of Mathematical Logic from Russell to Tarski: 1900–1935

The Development of Mathematical Logic from Russell to Tarski: 1900–1935
Paolo Mancosu (University of California, Berkeley), Richard Zach (University of Calgary), Calixto Badesa (Universitat de Barcelona)
Final Draft, May 2004. To appear in: Leila Haaparanta, ed., The Development of Modern Logic. New York and Oxford: Oxford University Press, 2004
Contents
Introduction
1 Itinerary I: Metatheoretical Properties of Axiomatic Systems
  1.1 Introduction
  1.2 Peano’s school on the logical structure of theories
  1.3 Hilbert on axiomatization
  1.4 Completeness and categoricity in the work of Veblen and Huntington
  1.5 Truth in a structure
2 Itinerary II: Bertrand Russell’s Mathematical Logic
  2.1 From the Paris congress to the Principles of Mathematics 1900–1903
  2.2 Russell and Poincaré on predicativity
  2.3 On Denoting
  2.4 Russell’s ramified type theory
  2.5 The logic of Principia
  2.6 Further developments
3 Itinerary III: Zermelo’s Axiomatization of Set Theory and Related Foundational Issues
  3.1 The debate on the axiom of choice
  3.2 Zermelo’s axiomatization of set theory
  3.3 The discussion on the notion of “definit”
  3.4 Metatheoretical studies of Zermelo’s axiomatization
4 Itinerary IV: The Theory of Relatives and Löwenheim’s Theorem
  4.1 Theory of relatives and model theory
  4.2 The logic of relatives