Open-Source Coprocessor for Integer Multiple Precision Arithmetic

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

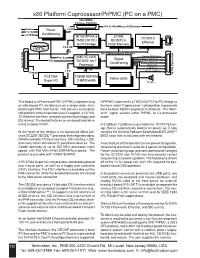

X86 Platform Coprocessor/Prpmc (PC on a PMC)

x86 Platform Coprocessor/PrPMC (PC on a PMC) 32b/33MHz PCI bus PN1/PN2 +5V to Kbd/Mouse/USB power Vcore Power +3.3V +2.5v Conditioning +3.3VIO 1K100 FPGA & 512KB 10/100TX TMS2250 PCI BIOS/PLA Ethernet RJ45 Compact to PCI bridge Flash Memory PLA I/O Flash site 8 32b/33MHz Internal PCI bus Analog SVGA Video Pwr Seq AMD SC2200 Signal COM 1 (RXD/TXD only) IDE "GEODE (tm)" Conditioning COM 2 (RXD/TXD only) Processor USB Port 1 Rear I/O PN4 I/O Rear 64 USB Port 2 LPC Keyboard/Mouse Floppy 36 Pin 36 Pin Connector PC87364 128MB SDRAM Status LEDs Super I/O (16MWx64b) PC Spkr This board is a Processor PMC (PrPMC) implementing A PrPMC implements a TMS2250 PCI-to-PCI bridge to an x86-based PC architecture on a single-wide, stan- the host, and a “Coprocessor” cofniguration implements dard height PMC form factor. This delivers a complete back-to-back 16550 compatible COM ports. The “Mon- x86 platform with comprehensive I/O support, a 10/100- arch” signal selects either PrPMC or Co-processor TX Ethernet interface, and disk access (both floppy and mode. IDE drives). The board features an on-board hard drive using Compact Flash. A 512Kbyte FLASH memory holds the 1K100 PLA im- age that is automatically loaded on power up. It also At the heart of the design is an Advanced Micro De- contains the General Software Embedded BIOS 2000™ vices SC2200 GEODE™ processor that integrates video, BIOS code that is included with each board. -

Convey Overview

THE WORLD’S FIRST HYBRID-CORE COMPUTER. CONVEY HYBRID-CORE COMPUTING Hybrid-core Computing Convey HC-1 High Performance of application- specific hardware Heterogenous solutions • can be much more efficient Performance/ • still hard to program Programmability and Power efficiency deployment ease of an x86 server Application Multicore solutions • don’t always scale well • parallel programming is hard Low Difficult Ease of Deployment Easy 1/22/2010 3 Hybrid-Core Computing Application-Specific Personalities Applications • Extend the x86 instruction set • Implement key operations in Convey Compilers hardware Life Sciences x86-64 ISA Custom ISA CAE Custom Financial Oil & Gas Shared Virtual Memory Cache-coherent, shared memory • Both ISAs address common memory *ISA: Instruction Set Architecture 7/12/2010 4 HC-1 Hardware PCI I/O FPGA FPGA Intel Personalities Chipset FPGA FPGA 8 GB/s 80 GB/s Memory Memory Cache Coherent, Shared Virtual Memory 1/22/2010 5 Using Personalities C/C++ Fortran • Personalities are user specifies reloadable personality at instruction sets Convey Software compile time Development Suite • Compiler instruction generates x86 descriptions and coprocessor instructions from Hybrid-Core Executable P ANSI standard x86-64 and Coprocessor Personalities C/C++ & Fortran Instructions • Executable can run on x86 nodes FPGA Convey HC-1 or Convey Hybrid- bitfiles Core nodes Intel x86 Coprocessor personality loaded at runtime by OS 1/22/2010 6 SYSTEM ARCHITECTURE HC-1 Architecture “Commodity” Intel Server Convey FPGA-based coprocessor Direct -

Digital Electronic Circuits

DIGITAL ELECTRONIC CIRCUITS SUBJECT CODE: PEI4I103 B.Tech, Fourth Semester Prepared By Dr. Kanhu Charan Bhuyan Asst. Professor Instrumentation and Electronics Engineering COLLEGE OF ENGINEERING AND TECHNOLOGY BHUBANESWAR B.Tech (E&IE/AE&I) detail Syllabus for Admission Batch 2015-16 PEI4I103- DIGITAL ELECTRONICS University level 80% MODULE – I (12 Hours)1. Number System: Introduction to various number systems and their Conversion. Arithmetic Operation using 1’s and 2`s Compliments, Signed Binary and Floating Point Number Representation Introduction to Binary codes and their applications. (5 Hours) 2. Boolean Algebra and Logic Gates: Boolean algebra and identities, Complete Logic set, logic gates and truth tables. Universal logic gates, Algebraic Reduction and realization using logic gates (3 Hours) 3. Combinational Logic Design: Specifying the Problem, Canonical Logic Forms, Extracting Canonical Forms, EX-OR Equivalence Operations, Logic Array, K-Maps: Two, Three and Four variable K-maps, NAND and NOR Logic Implementations. (4 Hours) MODULE – II (14 Hours) 4. Logic Components: Concept of Digital Components, Binary Adders, Subtraction and Multiplication, An Equality Detector and comparator, Line Decoder, encoders, Multiplexers and De-multiplexers. (5 Hours) 5. Synchronous Sequential logic Design: sequential circuits, storage elements: Latches (SR,D), Storage elements: Flip-Flops inclusion of Master-Slave, characteristics equation and state diagram of each FFs and Conversion of Flip-Flops. Analysis of Clocked Sequential circuits and Mealy and Moore Models of Finite State Machines (6 Hours) 6. Binary Counters: Introduction, Principle and design of synchronous and asynchronous counters, Design of MOD-N counters, Ring counters. Decade counters, State Diagram of binary counters (4 hours) MODULE – III (12 hours) 7. -

Design and Analysis of Power Efficient PTL Half Subtractor Using 120Nm Technology

International Journal of Computer Trends and Technology (IJCTT) – volume 7 number 4– Jan 2014 Design and Analysis of Power Efficient PTL Half Subtractor Using 120nm Technology 1 2 Pranshu Sharma , Anjali Sharma 1(Department of Electronics & Communication Engineering, APG Shimla University, India) 2(Department of Electronics & Communication Engineering, APG Shimla University, India) ABSTRACT : In the designing of any VLSI System, There are a number of logic styles by which a circuit can arithmetic circuits play a vital role, subtractor circuit is be implemented some of them used in this paper are one among them. In this paper a Power efficient Half- CMOS, TG, PTL. CMOS logic styles are robust against Subtractor has been designed using the PTL technique. voltage scaling and transistor sizing and thus provide a Subtractor circuit using this technique consumes less reliable operation at low voltages and arbitrary transistor power in comparison to the CMOS and TG techniques. sizes. In CMOS style input signals are connected to The proposed Half-Subtractor circuit using the PTL transistor gates only, which facilitate the usage and technique consists of 6 NMOS and 4 PMOS. The characterization of logic cells. Due to the complementary proposed PTL Half-Subtractor is designed and simulated transistor pairs the layout of CMOS gates is not much using DSCH 3.1 and Microwind 3.1 on 120nm. The complicated and is power efficient. The large number of power estimation and simulation of layout has been done PMOS transistors used in complementary CMOS logic for the proposed PTL half-Subtractor design. Power style is one of its major disadvantages and it results in comparison on BSIM-4 and LEVEL-3 has been high input loads [3]. -

Co-Emulation of Scan-Chain Based Designs Utilizing SCE-MI Infrastructure

UNLV Theses, Dissertations, Professional Papers, and Capstones 5-1-2014 Co-Emulation of Scan-Chain Based Designs Utilizing SCE-MI Infrastructure Bill Jason Pidlaoan Tomas University of Nevada, Las Vegas Follow this and additional works at: https://digitalscholarship.unlv.edu/thesesdissertations Part of the Computer Engineering Commons, Computer Sciences Commons, and the Electrical and Computer Engineering Commons Repository Citation Tomas, Bill Jason Pidlaoan, "Co-Emulation of Scan-Chain Based Designs Utilizing SCE-MI Infrastructure" (2014). UNLV Theses, Dissertations, Professional Papers, and Capstones. 2152. http://dx.doi.org/10.34917/5836171 This Thesis is protected by copyright and/or related rights. It has been brought to you by Digital Scholarship@UNLV with permission from the rights-holder(s). You are free to use this Thesis in any way that is permitted by the copyright and related rights legislation that applies to your use. For other uses you need to obtain permission from the rights-holder(s) directly, unless additional rights are indicated by a Creative Commons license in the record and/ or on the work itself. This Thesis has been accepted for inclusion in UNLV Theses, Dissertations, Professional Papers, and Capstones by an authorized administrator of Digital Scholarship@UNLV. For more information, please contact [email protected]. CO-EMULATION OF SCAN-CHAIN BASED DESIGNS UTILIZING SCE-MI INFRASTRUCTURE By: Bill Jason Pidlaoan Tomas Bachelor‟s Degree of Electrical Engineering Auburn University 2011 A thesis submitted -

Exploiting Free Silicon for Energy-Efficient Computing Directly

Exploiting Free Silicon for Energy-Efficient Computing Directly in NAND Flash-based Solid-State Storage Systems Peng Li Kevin Gomez David J. Lilja Seagate Technology Seagate Technology University of Minnesota, Twin Cities Shakopee, MN, 55379 Shakopee, MN, 55379 Minneapolis, MN, 55455 [email protected] [email protected] [email protected] Abstract—Energy consumption is a fundamental issue in today’s data A well-known solution to the memory wall issue is moving centers as data continue growing dramatically. How to process these computing closer to the data. For example, Gokhale et al [2] data in an energy-efficient way becomes more and more important. proposed a processor-in-memory (PIM) chip by adding a processor Prior work had proposed several methods to build an energy-efficient system. The basic idea is to attack the memory wall issue (i.e., the into the main memory for computing. Riedel et al [9] proposed performance gap between CPUs and main memory) by moving com- an active disk by using the processor inside the hard disk drive puting closer to the data. However, these methods have not been widely (HDD) for computing. With the evolution of other non-volatile adopted due to high cost and limited performance improvements. In memories (NVMs), such as phase-change memory (PCM) and spin- this paper, we propose the storage processing unit (SPU) which adds computing power into NAND flash memories at standard solid-state transfer torque (STT)-RAM, researchers also proposed to use these drive (SSD) cost. By pre-processing the data using the SPU, the data NVMs as the main memory for data-intensive applications [10] to that needs to be transferred to host CPUs for further processing improve the system energy-efficiency. -

Design of Adder / Subtractor Circuits Based On

ISSN (Print) : 2320 – 3765 ISSN (Online): 2278 – 8875 International Journal of Advanced Research in Electrical, Electronics and Instrumentation Engineering (An ISO 3297: 2007 Certified Organization) Vol. 2, Issue 8, August 2013 DESIGN OF ADDER / SUBTRACTOR CIRCUITS BASED ON REVERSIBLE GATES V.Kamalakannan1, Shilpakala.V2, Ravi.H.N3 Lecturer, Department of ECE, R.L.Jalappa Institute of Technology, Doddaballapur, Karnataka, India 1 Asst. Professor & HOD, Department of ECE, R.L.Jalappa Institute of Technology, Doddaballapur, Karnataka, India2 Lab Assistant, Dept. of ECE, U.V.C.E, Bangalore Karnataka, India3 ABSTRACT: Reversible logic has extensive applications in quantum computing, it is a unconventional form of computing where the computational process is reversible, i.e., time-invertible. The main motivation behind the study of this technology is aimed at implementing reversible computing where they offer what is predicted to be the only potential way to improve the energy efficiency of computers beyond von Neumann-Landauer limit. It is relatively new and emerging area in the field of computing that taught us to think physically about computation Quantum Computing will be a total change in how the computer will operate and function. The reversible arithmetic circuits are efficient in terms of number of reversible gates, garbage output and quantum cost. In this paper design Reversible Binary Adder- Subtractor- Mux, Adder-Subtractor- TR Gate., Adder-Subtractor- Hybrid are proposed. In all the three design approaches, the Adder and Subtractor are realized in a single unit as compared to only full adder/subtractor in the existing design. The performance analysis is verified using number reversible gates, Garbage input/outputs and Quantum Cost.The reversible 4-bit full adder/ subtractor design unit is compared with conventional ripple carry adder, carry look ahead adder, carry skip adder, Manchester carry adder based on their performance with respect to area, timing and power. -

Area and Power Optimized D-Flip Flop and Subtractor

IT in Industry, Vol. 9, No.1, 2021 Published Online 28-02-2021 AREA AND POWER OPTIMIZED D-FLIP FLOP AND SUBTRACTOR T. Subhashini1, M. Kamaraju2, K. Babulu3 1,2 Department of ECE, Gudlavalleru Engineering College, India 3Department of ECE, UCOE, JNTUK, Kakinada, India Abstract: Low power is essential in today’s technology. It LITERATURE SURVEY is most significant with high speed, small size and stability. So, power reduction is most important in modern In the era of microelectronics, power dissipation is technology using VLSI design techniques. Today most of limiting factor. In any CMOS technology, provides the market necessities require low power, long run time very low Static power dissipation. Due to the and market which also deserve small size and high speed. discharge of capacitance, during the switching In this paper several logic circuits DFF with 5 transistors operation, that cause a power dissipation. Which and sub tractor circuit using powerless XOR gate and increases with the clock frequency. Using Groundless XNOR gates are implemented. In the proposed Transmission Gate Technique Such unnecessary DFF, the area can be decreased by 62% & substarctor discharging can be prevented. Due to the resistance of circuit, area decreased by 80% and power consumption of switches, impartial losses may be occurred for any DFF and subtractor circuit are 15.4µW and 13.76µW logic operation. respectively, but these are very less as compared to existing techniques. To avoid these minor losses keep clock frequency to be small than the technological limit. There are Keyword: D FF, NMOS, PMOS, VLSI, P-XOR, G-XNOR different types of Transmission Gate logic families. -

Simulating Altera Designs

1. Simulating Altera Designs May 2013 QII53025-13.0.0 QII53025-13.0.0 This document describes simulating designs that target Altera® devices. Simulation verifies design behavior before device programming. The Quartus® II software supports RTL and gate level design simulation in third-party EDA simulators. Altera Simulation Overview Simulation involves setting up your simulator working environment, compiling simulation model libraries, and running your simulation. Generate simulation files in an automated or custom flow. Refer to Figure 1–1 and Table 1–3. Figure 1–1. Simulation in Quartus II Design Flow Design Entry (HDL, Qsys, DSP Builder) Altera Simulation RTL Simulation Models Quartus II Design Flow Gate-Level Simulation Post-synthesis functional Post-synthesis Analysis & Synthesis simulation netlist functional simulation EDA Fitter Netlist Post-fit functional Post-fit functional (place-and-route) Writer simulation netlist simulation Post-fit timing TimeQuest Timing Analyzer (Optional)Post-fit timing Post-fit simulation netlist (1) timing simulation simulation (3) Device Programmer (1) Timing simulation is not supported for Arria® V, Cyclone® V, Stratix® V, and newer families. You can use the Quartus II NativeLink feature to automatically generate simulation files and scripts. NativeLink can launch your simulator a from within the Quartus II software. Use a custom flow for more control over all aspects of simulation file generation. © 2013 Altera Corporation. All rights reserved. ALTERA, ARRIA, CYCLONE, HARDCOPY, MAX, MEGACORE, NIOS, QUARTUS and STRATIX words and logos are trademarks of Altera Corporation and registered in the U.S. Patent and Trademark Office and in other countries. All other words and logos identified as trademarks or service marks are the property of their respective holders as described at www.altera.com/common/legal.html. -

CUDA What Is GPGPU

What is GPGPU ? • General Purpose computation using GPU in applications other than 3D graphics CUDA – GPU accelerates critical path of application • Data parallel algorithms leverage GPU attributes – Large data arrays, streaming throughput Slides by David Kirk – Fine-grain SIMD parallelism – Low-latency floating point (FP) computation • Applications – see //GPGPU.org – Game effects (FX) physics, image processing – Physical modeling, computational engineering, matrix algebra, convolution, correlation, sorting Previous GPGPU Constraints CUDA • Dealing with graphics API per thread per Shader Input Registers • “Compute Unified Device Architecture” – Working with the corner cases per Context • General purpose programming model of the graphics API Fragment Program Texture – User kicks off batches of threads on the GPU • Addressing modes Constants – GPU = dedicated super-threaded, massively data parallel co-processor – Limited texture size/dimension Temp Registers • Targeted software stack – Compute oriented drivers, language, and tools Output Registers • Shader capabilities • Driver for loading computation programs into GPU FB Memory – Limited outputs – Standalone Driver - Optimized for computation • Instruction sets – Interface designed for compute - graphics free API – Data sharing with OpenGL buffer objects – Lack of Integer & bit ops – Guaranteed maximum download & readback speeds • Communication limited – Explicit GPU memory management – Between pixels – Scatter a[i] = p 1 Parallel Computing on a GPU Extended C • Declspecs • NVIDIA GPU Computing Architecture – global, device, shared, __device__ float filter[N]; – Via a separate HW interface local, constant __global__ void convolve (float *image) { – In laptops, desktops, workstations, servers GeForce 8800 __shared__ float region[M]; ... • Keywords • 8-series GPUs deliver 50 to 200 GFLOPS region[threadIdx] = image[i]; on compiled parallel C applications – threadIdx, blockIdx • Intrinsics __syncthreads() – __syncthreads .. -

High Performance Full Subtractor Using Floating-Gate MOSFET

Microelectronic Engineering 162 (2016) 75–78 Contents lists available at ScienceDirect Microelectronic Engineering journal homepage: www.elsevier.com/locate/mee Accelerated publication High performance full subtractor using floating-gate MOSFET Roshani Gupta, Rockey Gupta, Susheel Sharma Department of Physics and Electronics, University of Jammu, Jammu 180006, India article info abstract Article history: Low power consumption has become one of the primary requirements in the design of digital VLSI circuits in re- Received 18 March 2016 cent years. With scaling down of device dimensions, the supply voltage also needs to be scaled down for reliable Received in revised form 10 May 2016 operation. The speed of conventional digital integrated circuits is degrading on reducing the supply voltage for a Accepted 11 May 2016 given technology. Moreover, the threshold voltage of MOSFET does not scale down proportionally with its di- Available online 13 May 2016 mensions, thus putting a limitation on its suitability for low voltage operation. Therefore, there is a need to ex- Keywords: plore new methodology for the design of digital circuits well suited for low voltage operation and low power Full subtractor consumption. Floating-gate MOS (FGMOS) technology is one of the design techniques with its attractive features FGMOS of reduced circuit complexity and threshold voltage programmability. It can be operated below the conventional TG threshold voltage of MOSFET leading to wider output signal swing at low supply voltage and dissipates less CPL power as compared to CMOS circuits without much compromise on the device performance. This paper presents GDI the design of full subtractor using FGMOS technique whose performance has been compared with full subtractor Power delay product circuits employing CMOS, transmission gates (TG), complementary pass transistor logic (CPL) and gate diffusion input (GDI). -

Comparing the Power and Performance of Intel's SCC to State

Comparing the Power and Performance of Intel’s SCC to State-of-the-Art CPUs and GPUs Ehsan Totoni, Babak Behzad, Swapnil Ghike, Josep Torrellas Department of Computer Science, University of Illinois at Urbana-Champaign, Urbana, IL 61801, USA E-mail: ftotoni2, bbehza2, ghike2, [email protected] Abstract—Power dissipation and energy consumption are be- A key architectural challenge now is how to support in- coming increasingly important architectural design constraints in creasing parallelism and scale performance, while being power different types of computers, from embedded systems to large- and energy efficient. There are multiple options on the table, scale supercomputers. To continue the scaling of performance, it is essential that we build parallel processor chips that make the namely “heavy-weight” multi-cores (such as general purpose best use of exponentially increasing numbers of transistors within processors), “light-weight” many-cores (such as Intel’s Single- the power and energy budgets. Intel SCC is an appealing option Chip Cloud Computer (SCC) [1]), low-power processors (such for future many-core architectures. In this paper, we use various as embedded processors), and SIMD-like highly-parallel archi- scalable applications to quantitatively compare and analyze tectures (such as General-Purpose Graphics Processing Units the performance, power consumption and energy efficiency of different cutting-edge platforms that differ in architectural build. (GPGPUs)). These platforms include the Intel Single-Chip Cloud Computer The Intel SCC [1] is a research chip made by Intel Labs (SCC) many-core, the Intel Core i7 general-purpose multi-core, to explore future many-core architectures. It has 48 Pentium the Intel Atom low-power processor, and the Nvidia ION2 (P54C) cores in 24 tiles of two cores each.