Checkpointing Under Linux with Berkeley Lab Checkpoint/Restart

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Linux Tutorial for Windows Users (last updated September 29 2021)

VLAAMS SUPERCOMPUTER CENTRUM, Innovative Computing for A Smarter Flanders. Linux Tutorial for Windows Users. Last updated: September 29 2021. Authors: Jasper Devreker (UGent), Ewan Higgs (UGent), Kenneth Hoste (UGent). Acknowledgement: VSCentrum.be. Audience: This document is a hands-on guide for using the Linux command line in the context of the UGent HPC infrastructure. The command line (sometimes called ’shell’) can seem daunting at first, but with a little understanding it can be very easy to use. Everything you do starts at the prompt. Here you have the liberty to type in any commands you want. Soon, you will be able to move past the limited point-and-click interface and express interesting ideas to the computer using the shell. Gaining an understanding of the fundamentals of Linux will help accelerate your research using the HPC infrastructure. You will learn about commands, managing files, and some scripting basics. Notification: In this tutorial specific commands are separated from the accompanying text ("$ commands"): These should be entered by the reader at a command line in a terminal on the UGent-HPC. They appear in all exercises preceded by a $ and printed in bold. You’ll find those actions in a grey frame. “Button” is the notation for menus, buttons or drop-down boxes to be pressed or selected. “Directory” is the notation for directories (called “folders” in Windows terminology) or specific files (e.g., “/user/home/gent/vsc400/vsc40000”). “Text” is the notation for text to be entered. Tip: A “Tip” paragraph is used for remarks or tips. They can also be downloaded from the VSC website at https://www.vscentrum.be.

SUSE Linux Enterprise Server 11 SP4: System Analysis and Tuning Guide

SUSE Linux Enterprise Server 11 SP4, System Analysis and Tuning Guide. Publication Date: September 24, 2021. SUSE LLC, 1800 South Novell Place, Provo, UT 84606, USA. https://documentation.suse.com Copyright © 2006–2021 SUSE LLC and contributors. All rights reserved. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or (at your option) version 1.3; with the Invariant Section being this copyright notice and license. A copy of the license version 1.2 is included in the section entitled “GNU Free Documentation License”. For SUSE trademarks, see http://www.suse.com/company/legal/ . All other third party trademarks are the property of their respective owners. A trademark symbol (®, ™ etc.) denotes a SUSE or Novell trademark; an asterisk (*) denotes a third party trademark. All information found in this book has been compiled with utmost attention to detail. However, this does not guarantee complete accuracy. Neither SUSE LLC, its affiliates, the authors nor the translators shall be held liable for possible errors or the consequences thereof.

Contents

- About This Guide
  - 1 Available Documentation
  - 2 Feedback
  - 3 Documentation Conventions
- I BASICS
  - 1 General Notes on System Tuning
    - 1.1 Be Sure What Problem to Solve
    - 1.2 Rule Out Common Problems
    - 1.3 Finding the Bottleneck
    - 1.4 Step-by-step Tuning
- II SYSTEM MONITORING
  - 2 System Monitoring Utilities
    - 2.1 Multi-Purpose Tools
      - vmstat

Introduction to UNIX Summary of Some Useful Commands

Introduction to UNIX. "...the number of UNIX installations has grown to 10, with more expected..." - Dennis Ritchie and Ken Thompson, June 1972 (Bell Lab.) Universities, research institutes, government bodies and computer companies all began using the powerful UNIX system to develop many of the technologies which today are part of a UNIX system. Guide to UNIX on the beginners level: http://www.ee.surrey.ac.uk/Teaching/Unix/

Summary of some useful commands: some basic UNIX commands

- cd directory_name: change to the directory directory_name
- cd ..: change to the directory above the current directory
- cd ~: change to the home directory
- cp file_1 file_2: copy the file file_1 to the file file_2
- ln -s source linkname: link the file with the name source to the file linkname
- ls directory_name: show the content of the directory directory_name
- ls -l directory_name: show in detail the content of the directory directory_name
- ls -a directory_name: show all files, including hidden files, of the directory directory_name
- mkdir directory_name: create the new directory directory_name
- less file_name: show the content of the file file_name
- tail file_name: show the last part of the file file_name
- head file_name: show the top part of the file file_name
- (x)emacs file_name: edit the file file_name using the editor xemacs
- mv file_1 file_2: change the filename file_1 to file_2
- rm -i file_name: remove the file file_name (the system asks for confirmation)
- rm -ri directory_name: remove all files recursively in the directory directory_name
- rmdir directory_name: remove the directory directory_name

SUSE Linux Enterprise Server 15 SP1: System Analysis and Tuning Guide

SUSE Linux Enterprise Server 15 SP1, System Analysis and Tuning Guide. An administrator's guide for problem detection, resolution and optimization. Find how to inspect and optimize your system by means of monitoring tools and how to efficiently manage resources. Also contains an overview of common problems and solutions and of additional help and documentation resources. Publication Date: September 24, 2021. SUSE LLC, 1800 South Novell Place, Provo, UT 84606, USA. https://documentation.suse.com Copyright © 2006–2021 SUSE LLC and contributors. All rights reserved. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or (at your option) version 1.3; with the Invariant Section being this copyright notice and license. A copy of the license version 1.2 is included in the section entitled “GNU Free Documentation License”. For SUSE trademarks, see https://www.suse.com/company/legal/ . All other third-party trademarks are the property of their respective owners. Trademark symbols (®, ™ etc.) denote trademarks of SUSE and its affiliates. Asterisks (*) denote third-party trademarks. All information found in this book has been compiled with utmost attention to detail. However, this does not guarantee complete accuracy. Neither SUSE LLC, its affiliates, the authors nor the translators shall be held liable for possible errors or the consequences thereof.

Contents

- About This Guide
  - 1 Available Documentation

Cheatsheet V2.4.Pdf

2.4 Edition

Development build and wiki: github.com/volatilityfoundation
Download a stable release: volatilityfoundation.org
Read the book: artofmemoryforensics.com
Development Team Blog: http://volatility-labs.blogspot.com
(Official) Training Contact: [email protected]
Follow: @volatility

Cross reference processes with various lists:
- psxview

Show processes in parent/child tree:
- pstree

Process Information

Specify -o/--offset=OFFSET or -p/--pid=1,2,3

Display DLLs:
- dlllist

Show command line arguments:
- cmdline

Display details on VAD allocations:
- vadinfo [--addr]

Dump allocations to individual files:
- vaddump --dump-dir=PATH [--base]

Dump all valid pages to a single file:
- memdump --dump-dir=PATH

Display open handles:
- handles
  - -t/--object-type=TYPE: Mutant, File, Key, etc…
  - -s/--silent: Hide unnamed handles

Scan a block of code in process or kernel memory for imported APIs:
- impscan
  - -p/--pid=PID: Process ID
  - -b/--base=BASE: Base address to scan
  - -s/--size=SIZE: Size to scan from start of base

Logs / Histories

Recover event logs (XP/2003):
- evtlogs
  - -S/--save-evt: Save raw event logs
  - -D/--dump-dir=PATH: Write to this directory

Recover command history:
- cmdscan and consoles

Recover IE cache/Internet history:
- iehistory

Show running services:
- svcscan
  - -v/--verbose: Show ServiceDll from registry

Networking Information

Active info (XP/2003):
- connections and sockets

Shells and Processes

Shells and Processes. Bryce Boe, 2012/08/08, CS32, Summer 2012

Outline
• Operating Systems and Linux Review
• Shells
• Project 1 Part 1 Overview
• Processes
• Overview for Monday (Sorting Presentations)

OS Review
• Operating systems
  – Manages system resources: CPU, memory, I/O
  – Types: single/multi-user and single/multi-process
  – Provides fairness, security

Self Check Questions
• What is the primary benefit of a multi-process OS over a single process OS? How is this accomplished?
• Explain the difference between multiprogramming and multitasking

Self Check Answers
• What is the primary benefit of a multi-process OS over a single process OS? How is this accomplished?
  – Increased resource utilization (primarily of the CPU), accomplished by scheduling other processes when the currently running process requires I/O
• Explain the difference between multiprogramming and multitasking
  – Multiprogramming systems switch the running process when that process requires I/O.
  – Multitasking systems periodically switch the running process after some (typically minute) period of time

Linux Architecture

Shells: What is a shell?
• A shell is a program that provides the interface between the user and the operating system
• Can be used to tell the OS to:
  – Execute programs (as processes)
  – Stop, or pause processes
  – Create, copy, move, remove files
  – Load or unload device drivers

Types of Shells
• Command line shells:
  – Provide a textual input as the user-interface
  – Bourne shell (sh), C shell (csh), Bourne-Again shell (bash), cmd.exe
• Graphical shells
  – Provide a point-and-click

Full Workload

Command Line: Process Control. LINUXUSER. Process and job control. FULL WORKLOAD. Zweiblum, photocase.com

What is happening on your Linux machine? Various shell commands give you details about system processes and help you control them. BY HEIKE JURZIK

Whenever you or an application launch a program, the operating system starts one or multiple processes. These processes can enter various states: They can be processed, stopped, restarted, and, under normal circumstances, stopped again. Linux has something similar to access controls for processes: Only the user that started a process can stop, restart, or terminate the process. The only exception to this rule is the …

… All other processes share this common “ancestor”: init starts the operating system’s basic programs. pstree shows the relationship between “parent” and “child” processes. This tree structure shows you at a glance who is descended from whom (Figure 1).

Additional Output

The tool gives you more detailed output if you set the -a flag. This tells pstree to show you, in addition, the parameters … All of these parameters can be combined, for example, pstree -apu.

Listing Processes with ps

The ps command gives you a list of the processes currently running on your system. If you do not specify any command-line parameters, the tool will restrict the list to the current shell. If you are interested in all of your processes, call ps with the x option (Listing 1). The tabular output in the shell tells you the following characteristics:

- PID: The process identifier, a unique number that you can use to reference a process individually.
- TTY: The terminal or console on which the process was started.

The Linux Command Line

The Linux Command Line, Second Internet Edition. William E. Shotts, Jr. A LinuxCommand.org Book. Copyright ©2008-2013, William E. Shotts, Jr. This work is licensed under the Creative Commons Attribution-Noncommercial-No Derivative Works 3.0 United States License. To view a copy of this license, visit the link above or send a letter to Creative Commons, 171 Second Street, Suite 300, San Francisco, California, 94105, USA. Linux® is the registered trademark of Linus Torvalds. All other trademarks belong to their respective owners. This book is part of the LinuxCommand.org project, a site for Linux education and advocacy devoted to helping users of legacy operating systems migrate into the future. You may contact the LinuxCommand.org project at http://linuxcommand.org. This book is also available in printed form, published by No Starch Press and may be purchased wherever fine books are sold. No Starch Press also offers this book in electronic formats for most popular e-readers: http://nostarch.com/tlcl.htm

Release History

- 13.07, July 6, 2013: Second Internet Edition.
- 09.12, December 14, 2009: First Internet Edition.
- 09.11, November 19, 2009: Fourth draft with almost all reviewer feedback incorporated and edited through chapter 37.
- 09.10, October 3, 2009: Third draft with revised table formatting, partial application of reviewers' feedback and edited through chapter 18.
- 09.08, August 12, 2009: Second draft incorporating the first editing pass.
- 09.07, July 18, 2009: Completed first draft.

Table of Contents

- Introduction

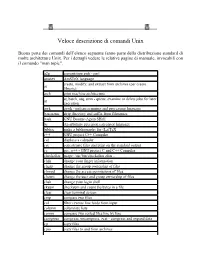

Unix Command

Quick description of Unix commands. Most of the commands in the following list are part of the standard distribution of many Unix architectures. For details, see the corresponding manual pages, which can be invoked with the command "man topic".

- a2p: awk to perl converter
- amstex: AmSTeX language
- ar: create, modify, and extract from archives (used to create libraries)
- arch: print machine architecture
- at: at, batch, atq, atrm - queue, examine or delete jobs for later execution
- awk: gawk - pattern scanning and processing language
- basename: strip directory and suffix from filenames
- bash: GNU Bourne-Again SHell
- bc: An arbitrary precision calculator language
- bibtex: make a bibliography for (La)TeX
- c++: GNU project C++ Compiler
- cal: displays a calendar
- cat: concatenate files and print on the standard output
- cc: gcc, g++ - GNU project C and C++ Compiler
- checkalias: usage: /usr/bin/checkalias alias ..
- chfn: change your finger information
- chgrp: change the group ownership of files
- chmod: change the access permissions of files
- chown: change the user and group ownership of files
- chsh: change your login shell
- cksum: checksum and count the bytes in a file
- clear: clear terminal screen
- cmp: compare two files
- col: filter reverse line feeds from input
- column: columnate lists
- comm: compare two sorted files line by line
- compress: compress, uncompress, zcat - compress and expand data
- cp: copy files
- cpio: copy files to and from archives
- csh: tcsh - C shell with file name completion and command line editing
- csplit: split a file into sections determined by context lines
- cut: remove sections from each

Bash Guide for Beginners

Bash Guide for Beginners. Machtelt Garrels, Xalasys.com <tille wants no spam _at_ xalasys dot com>. Version 1.8, last updated 20060315 Edition.

Table of Contents

- Introduction
  - 1. Why this guide?
  - 2. Who should read this book?
  - 3. New versions, translations and availability
  - 4. Revision History
  - 5. Contributions
  - 6. Feedback
  - 7. Copyright information
  - 8. What do you need?
  - 9. Conventions used in this

Introduction to Linux Basic Commands & Environment

Introduction to Linux: Basic Commands & Environment. Alexander B. Pacheco, Research Computing

Outline
1. Introduction
2. Linux File System Hierarchy
3. Basic Commands
4. Editors
5. File & Job Manipulation
6. Environment & Environment Variables

Introduction: What is Linux?

Linux is an operating system that evolved from a kernel created by Linus Torvalds when he was a student at the University of Helsinki. It's meant to be used as an alternative to other operating systems: Windows, Mac OS, MS-DOS, Solaris and others. Linux is the most popular OS used in a supercomputer.

| OS Family | Count | Share % |
|-----------|-------|---------|
| Linux     | 489   | 97.8    |
| Unix      | 9     | 1.8     |
| Windows   | 1     | 0.2     |
| Mixed     | 1     | 0.2     |

Source: http://www.top500.org/statistics/list/ (June 2015 List)

All Top 500 supercomputers were Linux based in the November 2017 List. If you are using a Supercomputer/High Performance Computer for your research, it will be based on a *nix OS. It is necessary to learn Linux programming (commands, shell scripting) if your research involves use of High Performance Computing or Supercomputing resources.

Where is Linux used?

Linux distributions are tailored to different requirements such as
1. Server
2. Desktop
3. Workstation
4. Routers
5. Embedded devices
6. Mobile devices (Android is a Linux-based OS)

Almost any software that you use on Windows has a roughly equivalent software on Linux, most often multiple equivalents, e.g. Microsoft Office equivalents are OpenOffice.org, LibreOffice, KOffice. For a complete list, visit http://wiki.linuxquestions.org/wiki/Linux_software_equivalent_to_Windows_software

Linux offers you freedom to choose your desktop environment and software.

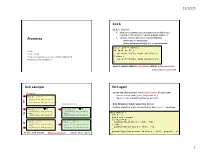

Processes Fork Fork Example 1 2 3 Fork Again

11/12/15

Processes

Focus: Process model. Process management case study: Unix/Linux/Mac OS X. (Windows is a little different.)

fork

pid_t fork()

1. Clone current parent process to create identical child process, including all state (memory, registers, program counter, …).
2. Continue executing both copies with one difference:
   • returns 0 to the child process
   • returns child’s process ID (pid) to the parent process

    pid_t pid = fork();
    if (pid == 0) {
        printf("hello from child\n");
    } else {
        printf("hello from parent\n");
    }

fork is unique: called in one process, returns in two processes! (once in parent, once in child)

fork example

[Diagram, steps 1 to 3: Process n runs the code above; fork() creates Child Process m running the same code; in the parent, pid_t pid = fork() yields m, in the child it yields 0. Output: "hello from parent" and "hello from child". Which prints first?]

fork again

Parent and child continue from private copies of same state: memory contents (code, globals, heap, stack, etc.), register contents, program counter, file descriptors… Only difference: return value from fork(). Relative execution order of parent/child after fork() undefined.

    void fork1() {
        int x = 1;
        pid_t pid = fork();
        if (pid == 0) {
            printf("Child has x = %d\n", ++x);
        } else {
            printf("Parent has x = %d\n", --x);
        }
        printf("Bye from process %d with x = %d\n", getpid(), x);
    }

fork-exec

When you run the command ls in a shell. Exec-ing a new program: fork-exec model: fork() clone current process [Diagram: Stack, Code/state of shell process.]
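The two ideas in this excerpt, private copies of state after `fork()` and the fork-exec model a shell uses to run a command such as `ls`, can be sketched in C as follows. This is an illustrative sketch assuming a POSIX system, not code from the slides: the helper names `child_copy_of_x` and `run_program` are hypothetical, and the exit status 127 for a failed exec is a convention borrowed from common shells.

```c
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Hypothetical helper: the child increments its private copy of x and
 * reports it through its exit status; the parent decrements its own copy.
 * Returns the child's x (or -1 on error) and stores the parent's x. */
int child_copy_of_x(int *parent_x)
{
    int x = 1;
    int status;
    pid_t pid = fork();

    if (pid < 0)
        return -1;
    if (pid == 0) {
        ++x;                    /* changes only the child's copy: x == 2 */
        _exit(x);
    }
    if (waitpid(pid, &status, 0) != pid || !WIFEXITED(status))
        return -1;
    --x;                        /* parent's copy was still 1, now 0 */
    *parent_x = x;
    return WEXITSTATUS(status);
}

/* Hypothetical helper for the fork-exec model: clone the current process,
 * replace the child's program image with argv[0], and wait for it.
 * Returns the program's exit status, or -1 on error. */
int run_program(char *const argv[])
{
    int status;
    pid_t pid = fork();

    if (pid < 0)
        return -1;
    if (pid == 0) {
        execvp(argv[0], argv);  /* returns only if the exec failed */
        _exit(127);
    }
    if (waitpid(pid, &status, 0) != pid || !WIFEXITED(status))
        return -1;
    return WEXITSTATUS(status);
}
```

A call such as `run_program((char *const[]){"ls", NULL})` mirrors what a shell does when you type `ls`. Waiting with `waitpid` also removes the ordering question raised in the slides, because the parent continues only after the child has finished.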