Comparisons of Performance Between Quantum and Classical Machine Learning

Christopher Havenstein, Southern Methodist University, [email protected]

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Implementing Grover's Algorithm on the IBM Quantum Computers

Implementing Grover’s Algorithm on the IBM Quantum Computers. Aamir Mandviwalla*, Keita Ohshiro* and Bo Ji. Abstract: This paper focuses on testing the current viability of using quantum computers for the processing of data-driven tasks fueled by emerging data science applications. We test the publicly available IBM quantum computers using Grover’s algorithm, a well-known quantum search algorithm, to obtain a baseline for the general evaluations of these quantum devices and to investigate the impacts of various factors such as number of quantum bits (or qubits), qubit choice, and device choice. The main contributions of this paper include a new 4-qubit implementation of Grover’s algorithm and test results showing the current capabilities of quantum computers. Our study indicates that quantum computers can currently only be used accurately for solving simple problems with very small amounts of data. There are also notable differences between different selections of the qubits in the implementation design and between different … Introduction (excerpt): Our goal in this paper is to study how close we are hardware-wise to realistically being able to exploit the potential benefits of quantum algorithms. Prior work is rather limited for this subject. There exist articles proclaiming that the age of quantum computing is only a year or two away along with less positive articles that do not expect major advancements for at least ten years [7], [8]. Instead of providing a prediction for the future, in this paper we aim to evaluate the accuracy of quantum computers that are publicly available now. To accomplish this goal, we run tests using Grover’s algorithm implemented in a variety of different ways on the publicly available IBM computers: IBM Q 5 Tenerife (5-qubit chip) and IBM Q 16 Rüschlikon (16-qubit chip) [9].
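For orientation, the search primitive tested in the paper above can be simulated classically at this scale. The following is a minimal sketch, not the authors' circuit: it applies the textbook oracle and diffusion steps to an ideal, noise-free statevector with NumPy. The function names (`grover_probabilities`, `optimal_iterations`) and the marked index are hypothetical choices made for illustration.

```python
import numpy as np


def optimal_iterations(n_qubits: int) -> int:
    """Textbook iteration count, floor(pi/4 * sqrt(N)), for one marked item."""
    return int(np.floor(np.pi / 4.0 * np.sqrt(2 ** n_qubits)))


def grover_probabilities(n_qubits: int, marked: int, iterations: int) -> np.ndarray:
    """Return measurement probabilities after Grover iterations (ideal, no noise)."""
    dim = 2 ** n_qubits
    # Uniform superposition: the result of a Hadamard on every qubit of |0...0>.
    state = np.full(dim, 1.0 / np.sqrt(dim))
    for _ in range(iterations):
        # Oracle: flip the sign of the marked basis state's amplitude.
        state[marked] *= -1.0
        # Diffusion: reflect every amplitude about the mean amplitude.
        state = 2.0 * state.mean() - state
    return state ** 2


# Hypothetical 4-qubit search for the basis state 1010.
probs = grover_probabilities(4, marked=0b1010, iterations=optimal_iterations(4))
print(f"success probability: {probs[0b1010]:.3f}")
```

This idealized run is only a reference point. Given the abstract's finding that current devices are accurate only for very small problems, measured success rates on hardware should be expected to fall short of it.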

Simulating Quantum Field Theory with a Quantum Computer

Simulating quantum field theory with a quantum computer. John Preskill, Lattice 2018, 28 July 2018. This talk has two parts: (1) Near-term prospects for quantum computing. (2) Opportunities in quantum simulation of quantum field theory. Exascale digital computers will advance our knowledge of QCD, but some challenges will remain, especially concerning real-time evolution and properties of nuclear matter and quark-gluon plasma at nonzero temperature and chemical potential. Digital computers may never be able to address these (and other) problems; quantum computers will solve them eventually, though I’m not sure when. The physics payoff may still be far away, but today’s research can hasten the arrival of a new era in which quantum simulation fuels progress in fundamental physics. Frontiers of Physics: short distance (Higgs boson, Neutrino masses, Supersymmetry, Quantum gravity, String theory); long distance (Large scale structure, Cosmic microwave background, Dark matter, Dark energy, Gravitational waves); complexity (“More is different”, Many-body entanglement, Phases of quantum matter, Quantum computing, Quantum spacetime). Images: particle collision, molecular chemistry, entangled electrons, superconductor, black hole, early universe. A quantum computer can simulate efficiently any physical process that occurs in Nature. (Maybe. We don’t actually know for sure.) Two fundamental ideas: (1) Quantum complexity: why we think quantum computing is powerful. (2) Quantum error correction: why we think quantum computing is scalable. A complete description of a typical quantum state of just 300 qubits requires more bits than the number of atoms in the visible universe. Why we think quantum computing is powerful: we know examples of problems that can be solved efficiently by a quantum computer, where we believe the problems are hard for classical computers.
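The 300-qubit claim in the slides above follows from a short order-of-magnitude calculation. As a sketch, assuming the commonly quoted estimate of roughly $10^{80}$ atoms in the visible universe (a figure not given in the slides) and counting one amplitude per basis state of 300 qubits:

$$
2^{300} = 10^{300 \log_{10} 2} \approx 10^{90.3} \approx 2 \times 10^{90} \gg 10^{80}.
$$

Even at a single bit per amplitude, the description would need about ten orders of magnitude more bits than that atom count.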

Information Scrambling in Computationally Complex Quantum Circuits

Information Scrambling in Computationally Complex Quantum Circuits Xiao Mi,1, ∗ Pedram Roushan,1, ∗ Chris Quintana,1, ∗ Salvatore Mandr`a,2, 3 Jeffrey Marshall,2, 4 Charles Neill,1 Frank Arute,1 Kunal Arya,1 Juan Atalaya,1 Ryan Babbush,1 Joseph C. Bardin,1, 5 Rami Barends,1 Andreas Bengtsson,1 Sergio Boixo,1 Alexandre Bourassa,1, 6 Michael Broughton,1 Bob B. Buckley,1 David A. Buell,1 Brian Burkett,1 Nicholas Bushnell,1 Zijun Chen,1 Benjamin Chiaro,1 Roberto Collins,1 William Courtney,1 Sean Demura,1 Alan R. Derk,1 Andrew Dunsworth,1 Daniel Eppens,1 Catherine Erickson,1 Edward Farhi,1 Austin G. Fowler,1 Brooks Foxen,1 Craig Gidney,1 Marissa Giustina,1 Jonathan A. Gross,1 Matthew P. Harrigan,1 Sean D. Harrington,1 Jeremy Hilton,1 Alan Ho,1 Sabrina Hong,1 Trent Huang,1 William J. Huggins,1 L. B. Ioffe,1 Sergei V. Isakov,1 Evan Jeffrey,1 Zhang Jiang,1 Cody Jones,1 Dvir Kafri,1 Julian Kelly,1 Seon Kim,1 Alexei Kitaev,1, 7 Paul V. Klimov,1 Alexander N. Korotkov,1, 8 Fedor Kostritsa,1 David Landhuis,1 Pavel Laptev,1 Erik Lucero,1 Orion Martin,1 Jarrod R. McClean,1 Trevor McCourt,1 Matt McEwen,1, 9 Anthony Megrant,1 Kevin C. Miao,1 Masoud Mohseni,1 Wojciech Mruczkiewicz,1 Josh Mutus,1 Ofer Naaman,1 Matthew Neeley,1 Michael Newman,1 Murphy Yuezhen Niu,1 Thomas E. O'Brien,1 Alex Opremcak,1 Eric Ostby,1 Balint Pato,1 Andre Petukhov,1 Nicholas Redd,1 Nicholas C. -

Quantum Permutation Synchronization

Quantum Permutation Synchronization. Tolga Birdal (1), Vladislav Golyanik (2), Christian Theobalt (2), Leonidas Guibas (1). (1) Stanford University, (2) Max Planck Institute for Informatics, SIC. Abstract: We present QuantumSync, the first quantum algorithm for solving a synchronization problem in the context of computer vision. In particular, we focus on permutation synchronization which involves solving a non-convex optimization problem in discrete variables. We start by formulating synchronization into a quadratic unconstrained binary optimization problem (QUBO). While such formulation respects the binary nature of the problem, ensuring that the result is a set of permutations requires extra care. Hence, we: (i) show how to insert permutation constraints into a QUBO problem and (ii) solve the constrained QUBO problem on the current generation of the adiabatic quantum computers D-Wave. Thanks to the quantum annealing, we guarantee global optimality with high probability while sampling the energy landscape to yield confidence estimates. Our proof-of-concepts realization on the adiabatic D-Wave computer demonstrates that quantum machines offer a promising way … Figure 1. Overview of QuantumSync, with stages (I) QUBO Preparation, (II) Quantum Annealing, (III) Global Synchronization, and blocks for problem formulation, logical QUBO, embedding, quantum annealing, unembedding, and solution. QuantumSync formulates permutation synchronization as a QUBO and embeds its logical instance on a quantum computer. After running multiple anneals, it selects the lowest energy solution as the global optimum. Introduction (excerpt): … reconstruction and multi-shape analysis pipelines [86, 23, 25] because it heavy-lifts the global constraint satisfaction while respecting the geometry of the parameters. In fact, most of the multiview-consistent inference problems can be expressed as some form of a synchronization [108, 15].
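One common way to express a permutation constraint in a QUBO is a quadratic penalty that forces every row and column of a binary matrix to sum to one. The sketch below illustrates that general idea only. It is not the QuantumSync formulation: the objective is a toy linear assignment cost, the penalty weight is chosen by hand, and an exhaustive search stands in for the annealer. The names `permutation_qubo` and `brute_force_minimum` are hypothetical.

```python
import itertools

import numpy as np


def permutation_qubo(cost: np.ndarray, penalty: float) -> np.ndarray:
    """Upper-triangular QUBO for min sum(cost * X) with X an n x n permutation.

    Each constraint (sum of a row or column - 1)^2 expands, using x*x = x,
    to -x on every variable in it plus 2*x*y on every pair in it (constant dropped).
    """
    n = cost.shape[0]
    Q = np.zeros((n * n, n * n))
    for i, j in itertools.product(range(n), repeat=2):
        a = i * n + j
        # Linear terms: assignment cost, minus penalty once for the row
        # constraint and once for the column constraint.
        Q[a, a] += cost[i, j] - 2.0 * penalty
        for k in range(j + 1, n):   # pairs sharing row i
            Q[a, i * n + k] += 2.0 * penalty
        for k in range(i + 1, n):   # pairs sharing column j
            Q[a, k * n + j] += 2.0 * penalty
    return Q


def brute_force_minimum(Q: np.ndarray):
    """Exhaustively minimize x^T Q x over binary x (stand-in for an annealer)."""
    best_x, best_e = None, np.inf
    for bits in itertools.product((0, 1), repeat=Q.shape[0]):
        x = np.array(bits)
        e = float(x @ Q @ x)
        if e < best_e:
            best_x, best_e = x, e
    return best_x, best_e


# Toy 3 x 3 instance with hand-picked costs and penalty weight.
cost = np.array([[4.0, 1.0, 3.0], [2.0, 0.0, 5.0], [3.0, 2.0, 2.0]])
penalty = 10.0
x, energy = brute_force_minimum(permutation_qubo(cost, penalty))
X = x.reshape(3, 3)
print(X)
```

The penalty weight has to outweigh any cost saving gained by violating a constraint. Here a value of 10 is enough because every infeasible matrix breaks at least two constraints, while the best feasible assignment costs only 5.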

Quantum Machine Learning: Benefits and Practical Examples

Quantum Machine Learning: Benefits and Practical Examples. Frank Phillipson1[0000-0003-4580-7521]. 1 TNO, Anna van Buerenplein 1, 2595 DA Den Haag, The Netherlands, [email protected]. Abstract. A quantum computer that is useful in practice, is expected to be developed in the next few years. An important application is expected to be machine learning, where benefits are expected on run time, capacity and learning efficiency. In this paper, these benefits are presented and for each benefit an example application is presented. A quantum hybrid Helmholtz machine use quantum sampling to improve run time, a quantum Hopfield neural network shows an improved capacity and a variational quantum circuit based neural network is expected to deliver a higher learning efficiency. Keywords: Quantum Machine Learning, Quantum Computing, Near Future Quantum Applications. 1 Introduction. Quantum computers make use of quantum-mechanical phenomena, such as superposition and entanglement, to perform operations on data [1]. Where classical computers require the data to be encoded into binary digits (bits), each of which is always in one of two definite states (0 or 1), quantum computation uses quantum bits, which can be in superpositions of states. These computers would theoretically be able to solve certain problems much more quickly than any classical computer that use even the best currently known algorithms. Examples are integer factorization using Shor's algorithm or the simulation of quantum many-body systems. This benefit is also called ‘quantum supremacy’ [2], which only recently has been claimed for the first time [3]. There are two different quantum computing paradigms.

A Scanning Transmon Qubit for Strong Coupling Circuit Quantum Electrodynamics

ARTICLE. Received 8 Mar 2013 | Accepted 10 May 2013 | Published 7 Jun 2013. DOI: 10.1038/ncomms2991. A scanning transmon qubit for strong coupling circuit quantum electrodynamics. W. E. Shanks1, D. L. Underwood1 & A. A. Houck1. Like a quantum computer designed for a particular class of problems, a quantum simulator enables quantitative modelling of quantum systems that is computationally intractable with a classical computer. Superconducting circuits have recently been investigated as an alternative system in which microwave photons confined to a lattice of coupled resonators act as the particles under study, with qubits coupled to the resonators producing effective photon–photon interactions. Such a system promises insight into the non-equilibrium physics of interacting bosons, but new tools are needed to understand this complex behaviour. Here we demonstrate the operation of a scanning transmon qubit and propose its use as a local probe of photon number within a superconducting resonator lattice. We map the coupling strength of the qubit to a resonator on a separate chip and show that the system reaches the strong coupling regime over a wide scanning area. 1 Department of Electrical Engineering, Princeton University, Olden Street, Princeton 08550, New Jersey, USA. Correspondence and requests for materials should be addressed to W.E.S. (email: [email protected]). NATURE COMMUNICATIONS | 4:1991 | DOI: 10.1038/ncomms2991 | www.nature.com/naturecommunications. © 2013 Macmillan Publishers Limited. All rights reserved. Introduction (excerpt): Over the past decade, the study of quantum physics using superconducting circuits has seen rapid advances in sample design and measurement techniques [1–3]. … In this work, we describe a scanning superconducting qubit and demonstrate its coupling to a superconducting CPWR …

COVID-19 Detection on IBM Quantum Computer with Classical-Quantum Transfer Learning

medRxiv preprint doi: https://doi.org/10.1101/2020.11.07.20227306; this version posted November 10, 2020. The copyright holder for this preprint (which was not certified by peer review) is the author/funder, who has granted medRxiv a license to display the preprint in perpetuity. It is made available under a CC-BY-NC-ND 4.0 International license. Turk J Elec Eng & Comp Sci, © TÜBİTAK, doi:10.3906/elk-. COVID-19 detection on IBM quantum computer with classical-quantum transfer learning. Erdi ACAR1*, İhsan YILMAZ2. 1Department of Computer Engineering, Institute of Science, Çanakkale Onsekiz Mart University, Çanakkale, Turkey. 2Department of Computer Engineering, Faculty of Engineering, Çanakkale Onsekiz Mart University, Çanakkale, Turkey. Received: .201 Accepted/Published Online: .201 Final Version: ..201 Abstract: Diagnose the infected patient as soon as possible in the coronavirus 2019 (COVID-19) outbreak which is declared as a pandemic by the world health organization (WHO) is extremely important. Experts recommend CT imaging as a diagnostic tool because of the weak points of the nucleic acid amplification test (NAAT). In this study, the detection of COVID-19 from CT images, which give the most accurate response in a short time, was investigated in the classical computer and firstly in quantum computers. Using the quantum transfer learning method, we experimentally perform COVID-19 detection in different quantum real processors (IBMQx2, IBMQ-London and IBMQ-Rome) of IBM, as well as in different simulators (Pennylane, Qiskit-Aer and Cirq). By using a small number of data sets such as 126 COVID-19 and 100 Normal CT images, we obtained a positive or negative classification of COVID-19 with 90% success in classical computers, while we achieved a high success rate of 94-100% in quantum computers.


Open Source Software in Quantum Computing (arXiv:1812.09167v1 [quant-ph], 21 Dec 2018)

Open source software in quantum computing Mark Fingerhutha,1, 2 Tomáš Babej,1 and Peter Wittek3, 4, 5, 6 1ProteinQure Inc., Toronto, Canada 2University of KwaZulu-Natal, Durban, South Africa 3Rotman School of Management, University of Toronto, Toronto, Canada 4Creative Destruction Lab, Toronto, Canada 5Vector Institute for Artificial Intelligence, Toronto, Canada 6Perimeter Institute for Theoretical Physics, Waterloo, Canada Open source software is becoming crucial in the design and testing of quantum algorithms. Many of the tools are backed by major commercial vendors with the goal to make it easier to develop quantum software: this mirrors how well-funded open machine learning frameworks enabled the development of complex models and their execution on equally complex hardware. We review a wide range of open source software for quantum computing, covering all stages of the quantum toolchain from quantum hardware interfaces through quantum compilers to implementations of quantum algorithms, as well as all quantum computing paradigms, including quantum annealing, and discrete and continuous-variable gate-model quantum computing. The evaluation of each project covers characteristics such as documentation, licence, the choice of programming language, compliance with norms of software engineering, and the culture of the project. We find that while the diversity of projects is mesmerizing, only a few attract external developers and even many commercially backed frameworks have shortcomings in software engineering. Based on these observations, we highlight the best practices that could foster a more active community around quantum computing software that welcomes newcomers to the field, but also ensures high-quality, well-documented code. INTRODUCTION Source code has been developed and shared among enthusiasts since the early 1950s. -


Quantum Adiabatic Machine Learning with Zooming (arXiv:1908.04480v2 [quant-ph], 23 Oct 2020)

Quantum adiabatic machine learning with zooming. Alexander Zlokapa,1 Alex Mott,2 Joshua Job,3 Jean-Roch Vlimant,1 Daniel Lidar,4 and Maria Spiropulu1. 1Division of Physics, Mathematics & Astronomy, Alliance for Quantum Technologies, California Institute of Technology, Pasadena, CA 91125, USA. 2DeepMind Technologies, London, UK. 3Lockheed Martin Advanced Technology Center, Sunnyvale, CA 94089, USA. 4Departments of Electrical and Computer Engineering, Chemistry, and Physics & Astronomy, and Center for Quantum Information Science & Technology, University of Southern California, Los Angeles, CA 90089, USA. Recent work has shown that quantum annealing for machine learning, referred to as QAML, can perform comparably to state-of-the-art machine learning methods with a specific application to Higgs boson classification. We propose QAML-Z, a novel algorithm that iteratively zooms in on a region of the energy surface by mapping the problem to a continuous space and sequentially applying quantum annealing to an augmented set of weak classifiers. Results on a programmable quantum annealer show that QAML-Z matches classical deep neural network performance at small training set sizes and reduces the performance margin between QAML and classical deep neural networks by almost 50% at large training set sizes, as measured by area under the ROC curve. The significant improvement of quantum annealing algorithms for machine learning and the use of a discrete quantum algorithm on a continuous optimization problem both opens a new class of problems that can be solved by quantum annealers and suggests the approach in performance of near-term quantum machine learning towards classical benchmarks. I. INTRODUCTION: Machine learning has gained an increasingly important … problem Hamiltonian, ensuring that the system remains in the ground state if the system is perturbed slowly enough, as given by the energy gap between the ground state and the first excited state [36–38].

Simulating Quantum Field Theory with a Quantum Computer

Simulating quantum field theory with a quantum computer. John Preskill, Bethe Lecture, Cornell, 12 April 2019. Frontiers of Physics: short distance (Higgs boson, Neutrino masses, Supersymmetry, Quantum gravity, String theory); long distance (Large scale structure, Cosmic microwave background, Dark matter, Dark energy, Gravitational waves); complexity (“More is different”, Many-body entanglement, Phases of quantum matter, Quantum computing, Quantum spacetime). Images: particle collision, molecular chemistry, entangled electrons, superconductor, black hole, early universe. A quantum computer can simulate efficiently any physical process that occurs in Nature. (Maybe. We don’t actually know for sure.) Opportunities in quantum simulation of quantum field theory: Exascale digital computers will advance our knowledge of QCD, but some challenges will remain, especially concerning real-time evolution and properties of nuclear matter and quark-gluon plasma at nonzero temperature and chemical potential. Digital computers may never be able to address these (and other) problems; quantum computers will solve them eventually, though I’m not sure when. The physics payoff may still be far away, but today’s research can hasten the arrival of a new era in which quantum simulation fuels progress in fundamental physics. Collaborators: Stephen Jordan, Keith Lee, Hari Krovi. arXiv: 1111.3633, 1112.4833, 1404.7115, 1703.00454, 1811.10085. Work in progress: Alex Buser, Junyu Liu, Burak Sahinoglu. Quantum Supremacy! Quantum computing in the NISQ Era: The (noisy) 50-100 qubit quantum computer is coming soon. (NISQ = noisy intermediate-scale quantum.) NISQ devices cannot be simulated by brute force using the most powerful currently existing supercomputers. Noise limits the computational power of NISQ-era technology.

A Functional Architecture for Scalable Quantum Computing

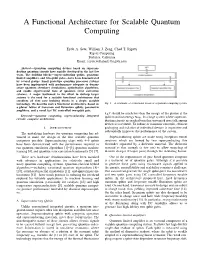

A Functional Architecture for Scalable Quantum Computing. Eyob A. Sete, William J. Zeng, Chad T. Rigetti. Rigetti Computing, Berkeley, California. Email: {eyob,will,[email protected]. Abstract: Quantum computing devices based on superconducting quantum circuits have rapidly developed in the last few years. The building blocks (superconducting qubits, quantum-limited amplifiers, and two-qubit gates) have been demonstrated by several groups. Small prototype quantum processor systems have been implemented with performance adequate to demonstrate quantum chemistry simulations, optimization algorithms, and enable experimental tests of quantum error correction schemes. A major bottleneck in the effort to develop larger systems is the need for a scalable functional architecture that combines all the core building blocks in a single, scalable technology. We describe such a functional architecture, based on a planar lattice of transmon and fluxonium qubits, parametric amplifiers, and a novel fast DC controlled two-qubit gate. Keywords: quantum computing, superconducting integrated circuits, computer architecture. I. INTRODUCTION: The underlying hardware for quantum computing has advanced to make the design of the first scalable quantum computers possible. Superconducting chips with 4–9 qubits have been demonstrated with the performance required to … Fig. 1. A schematic of a functional layout of a quantum computing system. … $k_B T$ should be much less than the energy of the photon at the qubit transition energy $\hbar\omega_{01}$. In a large system where superconducting circuits are packed together, unwanted crosstalk among devices is inevitable. To reduce or minimize crosstalk, efficient packaging and isolation of individual devices is imperative and substantially improves the performance of the system. Superconducting qubits are made using Josephson tunnel junctions which are formed by two superconducting thin electrodes separated by a dielectric material.

Explorations in Quantum Neural Networks with Intermediate Measurements

ESANN 2020 proceedings, European Symposium on Artificial Neural Networks, Computational Intelligence and Machine Learning. Online event, 2-4 October 2020, i6doc.com publ., ISBN 978-2-87587-074-2. Available from http://www.i6doc.com/en/. Explorations in Quantum Neural Networks with Intermediate Measurements. Lukas Franken and Bogdan Georgiev ∗. Fraunhofer IAIS - Research Center for ML and ML2R, Schloss Birlinghoven - 53757 Sankt Augustin. Abstract. In this short note we explore a few quantum circuits with the particular goal of basic image recognition. The models we study are inspired by recent progress in Quantum Convolution Neural Networks (QCNN) [12]. We present a few experimental results, where we attempt to learn basic image patterns motivated by scaling down the MNIST dataset. 1 Introduction. The recent demonstration of Quantum Supremacy [1] heralds the advent of the Noisy Intermediate-Scale Quantum (NISQ) [2] technology, where signs of superiority of quantum over classical machines in particular tasks may be expected. However, one should keep in mind the limitations of NISQ-devices when studying and developing quantum-algorithmic solutions - among other things, these include limits on the number of gates and qubits. At the same time the interaction of quantum computing and machine learning is growing, with a vast amount of literature and new results. To name a few applications, the well-known HHL algorithm [3], quantum phase estimation [5] and inner products speed-up techniques lead to further advances in Support Vector Machines [4] and Principal Component Analysis [6, 7]. Intensive progress and ongoing research has also been made towards quantum analogues of Neural Networks (QNN) [8, 9, 10].