Navigation Filter Best Practices

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

A Neural Implementation of the Kalman Filter

A Neural Implementation of the Kalman Filter Robert C. Wilson Leif H. Finkel Department of Psychology Department of Bioengineering Princeton University University of Pennsylvania Princeton, NJ 08540 Philadelphia, PA 19103 [email protected] Abstract Recent experimental evidence suggests that the brain is capable of approximating Bayesian inference in the face of noisy input stimuli. Despite this progress, the neural underpinnings of this computation are still poorly understood. In this pa- per we focus on the Bayesian filtering of stochastic time series and introduce a novel neural network, derived from a line attractor architecture, whose dynamics map directly onto those of the Kalman filter in the limit of small prediction error. When the prediction error is large we show that the network responds robustly to changepoints in a way that is qualitatively compatible with the optimal Bayesian model. The model suggests ways in which probability distributions are encoded in the brain and makes a number of testable experimental predictions. 1 Introduction There is a growing body of experimental evidence consistent with the idea that animals are some- how able to represent, manipulate and, ultimately, make decisions based on, probability distribu- tions. While still unproven, this idea has obvious appeal to theorists as a principled way in which to understand neural computation. A key question is how such Bayesian computations could be per- formed by neural networks. Several authors have proposed models addressing aspects of this issue [15, 10, 9, 19, 2, 3, 16, 4, 11, 18, 17, 7, 6, 8], but as yet, there is no conclusive experimental evidence in favour of any one and the question remains open. -

Kalman and Particle Filtering

Abstract: The Kalman and Particle filters are algorithms that recursively update an estimate of the state and find the innovations driving a stochastic process given a sequence of observations. The Kalman filter accomplishes this goal by linear projections, while the Particle filter does so by a sequential Monte Carlo method. With the state estimates, we can forecast and smooth the stochastic process. With the innovations, we can estimate the parameters of the model. The article discusses how to set a dynamic model in a state-space form, derives the Kalman and Particle filters, and explains how to use them for estimation. Kalman and Particle Filtering The Kalman and Particle filters are algorithms that recursively update an estimate of the state and find the innovations driving a stochastic process given a sequence of observations. The Kalman filter accomplishes this goal by linear projections, while the Particle filter does so by a sequential Monte Carlo method. Since both filters start with a state-space representation of the stochastic processes of interest, section 1 presents the state-space form of a dynamic model. Then, section 2 intro- duces the Kalman filter and section 3 develops the Particle filter. For extended expositions of this material, see Doucet, de Freitas, and Gordon (2001), Durbin and Koopman (2001), and Ljungqvist and Sargent (2004). 1. The state-space representation of a dynamic model A large class of dynamic models can be represented by a state-space form: Xt+1 = ϕ (Xt,Wt+1; γ) (1) Yt = g (Xt,Vt; γ) . (2) This representation handles a stochastic process by finding three objects: a vector that l describes the position of the system (a state, Xt X R ) and two functions, one mapping ∈ ⊂ 1 the state today into the state tomorrow (the transition equation, (1)) and one mapping the state into observables, Yt (the measurement equation, (2)). -

1D Atmospheric Study of the Temperate Sub-Neptune K2-18B D

A&A 646, A15 (2021) Astronomy https://doi.org/10.1051/0004-6361/202039072 & © D. Blain et al. 2021 Astrophysics 1D atmospheric study of the temperate sub-Neptune K2-18b D. Blain, B. Charnay, and B. Bézard LESIA, Observatoire de Paris, PSL Research University, CNRS, Sorbonne Université, Université de Paris, 92195 Meudon, France e-mail: [email protected] Received 30 July 2020 / Accepted 13 November 2020 ABSTRACT Context. The atmospheric composition of exoplanets with masses between 2 and 10 M is poorly understood. In that regard, the sub-Neptune K2-18b, which is subject to Earth-like stellar irradiation, offers a valuable opportunity⊕ for the characterisation of such atmospheres. Previous analyses of its transmission spectrum from the Kepler, Hubble (HST), and Spitzer space telescopes data using both retrieval algorithms and forward-modelling suggest the presence of H2O and an H2–He atmosphere, but have not detected other gases, such as CH4. Aims. We present simulations of the atmosphere of K2-18 b using Exo-REM, our self-consistent 1D radiative-equilibrium model, using a large grid of atmospheric parameters to infer constraints on its chemical composition. Methods. We compared the transmission spectra computed by our model with the above-mentioned data (0.4–5 µm), assuming an H2–He dominated atmosphere. We investigated the effects of irradiation, eddy diffusion coefficient, internal temperature, clouds, C/O ratio, and metallicity on the atmospheric structure and transit spectrum. Results. We show that our simulations favour atmospheric metallicities between 40 and 500 times solar and indicate, in some cases, the formation of H2O-ice clouds, but not liquid H2O clouds. -

Planet Hunters. VI: an Independent Characterization of KOI-351 and Several Long Period Planet Candidates from the Kepler Archival Data

Accepted to AJ Planet Hunters VI: An Independent Characterization of KOI-351 and Several Long Period Planet Candidates from the Kepler Archival Data1 Joseph R. Schmitt2, Ji Wang2, Debra A. Fischer2, Kian J. Jek7, John C. Moriarty2, Tabetha S. Boyajian2, Megan E. Schwamb3, Chris Lintott4;5, Stuart Lynn5, Arfon M. Smith5, Michael Parrish5, Kevin Schawinski6, Robert Simpson4, Daryll LaCourse7, Mark R. Omohundro7, Troy Winarski7, Samuel Jon Goodman7, Tony Jebson7, Hans Martin Schwengeler7, David A. Paterson7, Johann Sejpka7, Ivan Terentev7, Tom Jacobs7, Nawar Alsaadi7, Robert C. Bailey7, Tony Ginman7, Pete Granado7, Kristoffer Vonstad Guttormsen7, Franco Mallia7, Alfred L. Papillon7, Franco Rossi7, and Miguel Socolovsky7 [email protected] ABSTRACT We report the discovery of 14 new transiting planet candidates in the Kepler field from the Planet Hunters citizen science program. None of these candidates overlapped with Kepler Objects of Interest (KOIs) at the time of submission. We report the discovery of one more addition to the six planet candidate system around KOI-351, making it the only seven planet candidate system from Kepler. Additionally, KOI-351 bears some resemblance to our own solar system, with the inner five planets ranging from Earth to mini-Neptune radii and the outer planets being gas giants; however, this system is very compact, with all seven planet candidates orbiting . 1 AU from their host star. A Hill stability test and an orbital integration of the system shows that the system is stable. Furthermore, we significantly add to the population of long period 1This publication has been made possible through the work of more than 280,000 volunteers in the Planet Hunters project, whose contributions are individually acknowledged at http://www.planethunters.org/authors. -

Lecture 8 the Kalman Filter

EE363 Winter 2008-09 Lecture 8 The Kalman filter • Linear system driven by stochastic process • Statistical steady-state • Linear Gauss-Markov model • Kalman filter • Steady-state Kalman filter 8–1 Linear system driven by stochastic process we consider linear dynamical system xt+1 = Axt + But, with x0 and u0, u1,... random variables we’ll use notation T x¯t = E xt, Σx(t)= E(xt − x¯t)(xt − x¯t) and similarly for u¯t, Σu(t) taking expectation of xt+1 = Axt + But we have x¯t+1 = Ax¯t + Bu¯t i.e., the means propagate by the same linear dynamical system The Kalman filter 8–2 now let’s consider the covariance xt+1 − x¯t+1 = A(xt − x¯t)+ B(ut − u¯t) and so T Σx(t +1) = E (A(xt − x¯t)+ B(ut − u¯t))(A(xt − x¯t)+ B(ut − u¯t)) T T T T = AΣx(t)A + BΣu(t)B + AΣxu(t)B + BΣux(t)A where T T Σxu(t) = Σux(t) = E(xt − x¯t)(ut − u¯t) thus, the covariance Σx(t) satisfies another, Lyapunov-like linear dynamical system, driven by Σxu and Σu The Kalman filter 8–3 consider special case Σxu(t)=0, i.e., x and u are uncorrelated, so we have Lyapunov iteration T T Σx(t +1) = AΣx(t)A + BΣu(t)B , which is stable if and only if A is stable if A is stable and Σu(t) is constant, Σx(t) converges to Σx, called the steady-state covariance, which satisfies Lyapunov equation T T Σx = AΣxA + BΣuB thus, we can calculate the steady-state covariance of x exactly, by solving a Lyapunov equation (useful for starting simulations in statistical steady-state) The Kalman filter 8–4 Example we consider xt+1 = Axt + wt, with 0.6 −0.8 A = , 0.7 0.6 where wt are IID N (0, I) eigenvalues of A are -

Sirius Astronomer

FEBRUARY 2013 Free to members, subscriptions $12 for 12 Volume 40, Number 2 Jupiter is featured often in this newsletter because it is one of the most appealing objects in the night sky even for very small telescopes. The king of Solar System planets is prominent in the night sky throughout the month, located near Aldebaran in the constellation Taurus. Pat Knoll took this image on January 18th from his observing site at Kearney Mesa, California, using a Meade LX200 Classic at f/40 with a 4X Powermate. The image was compiled from a two-minute run with an Imaging Source DFK 21AU618.AS camera . OCA CLUB MEETING STAR PARTIES COMING UP The free and open club meeting The Black Star Canyon site will open The next session of the Beginners will be held February 8 at 7:30 PM on February 2. The Anza site will be Class will be held at the Heritage in the Irvine Lecture Hall of the open on February 9. Members are en- Museum of Orange County at Hashinger Science Center at couraged to check the website calen- 3101 West Harvard Street in San- Chapman University in Orange. dar for the latest updates on star par- ta Ana on February 1st. The fol- This month, UCSD’s Dr. Burgasser ties and other events. lowing class will be held March will present “The Coldest Stars: Y- 1st Dwarfs and the Fuzzy Border be- Please check the website calendar for tween Stars and Planets.” the outreach events this month! Volun- GOTO SIG: TBA teers are always welcome! Astro-Imagers SIG: Feb. -

Antanamo Bay 'Ocr'' Er T "

1 rC'y!0'% %04 -0 Guantanamo Bay 'ocr'' er t ". ~ O " ~al kr "" Vol. 58 No. 3 Friday, January 19, 2001 Base residents take part Lassiter, Kochan selected in Candlelight March Naval Base SOY, JSOY GUANTANAMO BAY - EM1(SW) Wilbur A. Lasseter from the U.S. Naval Brig was recently selected as 2000 Sailor of the Year for Naval Station and Naval Base. He was also selected as Sailor of the Quarter for the fourth quarter for Naval Station and Naval Base. Lasseter will now compete for Southeast Region Sailor of the Year in Jacksonville the week of Feb. 11. Lasseter served as brig guard and recently elevated to brig administra- tive supervisor because of his un- matched leadership and managerial a skills. Through his superb leadership and hard work, Lasseter achieved a Com- bined Federal Campaign contribution for the Naval Station in excess of $21,000, 55 percent of the Naval +. Base's total goal of $38,000. As a brig career counselor, his efforts yielded three reenlistments, three Overseas Tour Extension Incen- tive Program requests and one con- version package. In addition, Lasseter performed a host of formal and HMI Tim Hill delivers a "moving" version of Dr Martin Luther informal counseling sessions. King famous "IHave A Dream" speech at POW/MIA Memorial - continued on page two (top). More than 320 GTMO residents marched in the 2nd annual CandlelightMarch in honor ofDr Martin Luther King Jr. GUANTANAMO BAY - BU3 Joseph J. Kochan was recently se- Photos by JOC Walter T Ham IV (top) lected as the Naval Station and Naval Base 2000 Junior Sailor of the and PH2 Emnitt J Hawks (bottom) Year and the 4th quarter Sailor of the Quarter. -

Kalman-Filter Control Schemes for Fringe Tracking

A&A 541, A81 (2012) Astronomy DOI: 10.1051/0004-6361/201218932 & c ESO 2012 Astrophysics Kalman-filter control schemes for fringe tracking Development and application to VLTI/GRAVITY J. Menu1,2,3,, G. Perrin1,3, E. Choquet1,3, and S. Lacour1,3 1 LESIA, Observatoire de Paris, CNRS, UPMC, Université Paris Diderot, Paris Sciences et Lettres, 5 place Jules Janssen, 92195 Meudon, France 2 Instituut voor Sterrenkunde, KU Leuven, Celestijnenlaan 200D, 3001 Leuven, Belgium e-mail: [email protected] 3 Groupement d’Intérêt Scientifique PHASE (Partenariat Haute résolution Angulaire Sol Espace) between ONERA, Observatoire de Paris, CNRS and Université Paris Diderot, France Received 31 January 2012 / Accepted 28 February 2012 ABSTRACT Context. The implementation of fringe tracking for optical interferometers is inevitable when optimal exploitation of the instrumental capacities is desired. Fringe tracking allows continuous fringe observation, considerably increasing the sensitivity of the interfero- metric system. In addition to the correction of atmospheric path-length differences, a decent control algorithm should correct for disturbances introduced by instrumental vibrations, and deal with other errors propagating in the optical trains. Aims. In an effort to improve upon existing fringe-tracking control, especially with respect to vibrations, we attempt to construct con- trol schemes based on Kalman filters. Kalman filtering is an optimal data processing algorithm for tracking and correcting a system on which observations are performed. As a direct application, control schemes are designed for GRAVITY, a future four-telescope near-infrared beam combiner for the Very Large Telescope Interferometer (VLTI). Methods. We base our study on recent work in adaptive-optics control. -

The Unscented Kalman Filter for Nonlinear Estimation

The Unscented Kalman Filter for Nonlinear Estimation Eric A. Wan and Rudolph van der Merwe Oregon Graduate Institute of Science & Technology 20000 NW Walker Rd, Beaverton, Oregon 97006 [email protected], [email protected] Abstract 1. Introduction The Extended Kalman Filter (EKF) has become a standard The EKF has been applied extensively to the field of non- technique used in a number of nonlinear estimation and ma- linear estimation. General application areas may be divided chine learning applications. These include estimating the into state-estimation and machine learning. We further di- state of a nonlinear dynamic system, estimating parame- vide machine learning into parameter estimation and dual ters for nonlinear system identification (e.g., learning the estimation. The framework for these areas are briefly re- weights of a neural network), and dual estimation (e.g., the viewed next. Expectation Maximization (EM) algorithm) where both states and parameters are estimated simultaneously. State-estimation This paper points out the flaws in using the EKF, and The basic framework for the EKF involves estimation of the introduces an improvement, the Unscented Kalman Filter state of a discrete-time nonlinear dynamic system, (UKF), proposed by Julier and Uhlman [5]. A central and (1) vital operation performed in the Kalman Filter is the prop- (2) agation of a Gaussian random variable (GRV) through the system dynamics. In the EKF, the state distribution is ap- where represent the unobserved state of the system and proximated by a GRV, which is then propagated analyti- is the only observed signal. The process noise drives cally through the first-order linearization of the nonlinear the dynamic system, and the observation noise is given by system. -

1 the Kalman Filter

Macroeconometrics, Spring, 2019 Bent E. Sørensen August 20, 2019 1 The Kalman Filter We assume that we have a model that concerns a series of vectors αt, which are called \state vectors". These variables are supposed to describe the current state of the system in question. These state variables will typically not be observed and the other main ingredient is therefore the observed variables yt. The first step is to write the model in state space form which is in the form of a linear system which consists of 2 sets of linear equations. The first set of equations describes the evolution of the system and is called the \Transition Equation": αt = Kαt−1 + Rηt ; where K and R are matrices of constants and η is N(0;Q) and serially uncorrelated. (This setup allows for a constant also.) The second set of equations describes the relation between the state of the system and the observations and is called the \Measurement Equation": yt = Zαt + ξt ; where ξt is N(0;H), serially uncorrelated, and E(ξtηt−j) = 0 for all t and j. It turns out that a lot of models can be put in the state space form with a little imagination. The main restriction is of course on the linearity of the model while you may not care about the normality condition as you will still be doing least squares. The state-space model as it is defined here is not the most general possible|it is in principle easy to allow for non-stationary coefficient matrices, see for example Harvey(1989). -

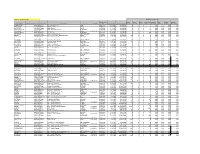

Basin Name 8-Digit HUC/ Service Area Owner Type Project Name County Corps AID No

updated February 28, 2021 Mitigation Requirements DWR Permit Warm Cool Cold Unspecified Riparian Non- Coastal Riparian Basin Name 8-digit HUC/ Service Area Owner Type Project Name County Corps AID No. Payment Date Payment amount Stream Stream Stream Thermal Wetland Riparian Wetland Buffer (sqft) PASQUOTANK 03010205 Private Barrier Island Station DARE 1996-0710 6 /23/1997 $18,000.00 0 0 0 0.00 1.44 0.00 0.00 CAPE FEAR 03030002 Private Panther Creek WAKE 1996-0320 8 /10/1997 $18,000.00 0 0 0 0.61 0.00 0.00 0.00 CAPE FEAR 03030003 Private Bailey Farms WAKE 1995-0102 9 /3 /1997 $12,000.00 0 0 0 0.40 0.00 0.00 0.00 FRENCH BROAD 06010105 DOT DOT - Widening of NC 151 BUNCOMBE 1997-0440 10/2 /1997 $32,500.00 0 0 0 260 0.00 0.00 0.00 0.00 CAPE FEAR 03030002 Private Kit Creek WAKE 1997-08149 1997-0729 10/14/1997 $6,000.00 0 0 0 0.25 0.00 0.00 0.00 FRENCH BROAD 06010105 Private Nasty Branch BUNCOMBE 1997-0123 11/13/1997 $37,500.00 0 0 0 300 0.00 0.00 0.00 0.00 CAPE FEAR 03030005 Private Motts Creek Apartments NEW HANOVER 1997-0075 12/11/1997 $44,280.00 0 0 0 0.00 3.69 0.00 0.00 NEUSE 03020201 Government Town of Cary - Cary Parkway Extension WAKE 1997-0227 1 /30/1998 $56,250.00 450 0 0 0 0.00 0.00 0.00 0.00 CAPE FEAR 03030002 Private Amberly Development CHATHAM 1995-0410 2 /2 /1998 $30,000.00 240 0 0 0 0.00 0.00 0.00 0.00 CATAWBA 03050103 Private McKee Road Apartments MECKLENBURG 1997-0809 2 /13/1998 $27,500.00 220 0 0 0 0.00 0.00 0.00 0.00 CAPE FEAR 03030002 Private Northeast Creek Parkway DURHAM 1997-0575 3 /19/1998 $22,750.00 182 0 0 0 0.00 0.00 -

BDS/GPS Multi-System Positioning Based on Nonlinear Filter Algorithm

Global Journal of Computer Science and Technology: G Interdisciplinary Volume 16 Issue 1 Version 1.0 Year 2016 Type: Double Blind Peer Reviewed International Research Journal Publisher: Global Journals Inc. (USA) Online ISSN: 0975-4172 & Print ISSN: 0975-4350 BDS/GPS Multi-System Positioning based on Nonlinear Filter Algorithm By JaeHyok Kong, Xuchu Mao & Shaoyuan Li Shanghai Jiao Tong University Abstract- The Global Navigation Satellite System can provide all-day three-dimensional position and speed information. Currently, only using the single navigation system cannot satisfy the requirements of the system's reliability and integrity. In order to improve the reliability and stability of the satellite navigation system, the positioning method by BDS and GPS navigation system is presented, the measurement model and the state model are described. Furthermore, Unscented Kalman Filter (UKF) is employed in GPS and BDS conditions, and analysis of single system/multi-systems’ positioning has been carried out respectively. The experimental results are compared with the estimation results, which are obtained by the iterative least square method and the extended Kalman filtering (EFK) method. It shows that the proposed method performed high-precise positioning. Especially when the number of satellites is not adequate enough, the proposed method can combine BDS and GPS systems to carry out a higher positioning precision. Keywords: global navigation satellite system (GNSS), positioning algorithm, unscented kalman filter (UKF), beidou navigation system (BDS). GJCST-G Classification : G.1.5, G.1.6, G.2.1 BDSGPSMultiSystemPositioningbasedonNonlinearFilterAlgorithm Strictly as per the compliance and regulations of: © 2016. JaeHyok Kong, Xuchu Mao & Shaoyuan Li. This is a research/review paper, distributed under the terms of the Creative Commons Attribution-Noncommercial 3.0 Unported License http://creativecommons.org/ licenses/by-nc/3.0/), permitting all non- commercial use, distribution, and reproduction in any medium, provided the original work is properly cited.