Summer 2020 Vol

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Open Source Used in Influx1.8 Influx 1.9

Open Source Used In Influx1.8 Influx 1.9. Cisco Systems, Inc., www.cisco.com. Cisco has more than 200 offices worldwide. Addresses, phone numbers, and fax numbers are listed on the Cisco website at www.cisco.com/go/offices. Text Part Number: 78EE117C99-1178791953. This document contains licenses and notices for open source software used in this product. With respect to the free/open source software listed in this document, if you have any questions or wish to receive a copy of any source code to which you may be entitled under the applicable free/open source license(s) (such as the GNU Lesser/General Public License), please contact us at [email protected]. In your requests please include the following reference number: 78EE117C99-1178791953. Contents: 1.1 golang-protobuf-extensions v1.0.1; 1.1.1 Available under license; 1.2 prometheus-client v0.2.0; 1.2.1 Available under license; 1.3 gopkg.in-asn1-ber v1.0.0-20170511165959-379148ca0225; 1.3.1 Available under license; 1.4 influxdata-raft-boltdb v0.0.0-20210323121340-465fcd3eb4d8; 1.4.1 Available under license; 1.5 fwd v1.1.1; 1.5.1 Available under license; 1.6 jaeger-client-go v2.23.0+incompatible; 1.6.1 Available under license; 1.7 golang-genproto v0.0.0-20210122163508-8081c04a3579; 1.7.1 Available under license; 1.8 influxdata-roaring v0.4.13-0.20180809181101-fc520f41fab6; 1.8.1 Available under license; 1.9 influxdata-flux v0.113.0; 1.9.1 Available under license; 1.10 apache-arrow-go-arrow v0.0.0-20200923215132-ac86123a3f01; 1.10.1 Available under

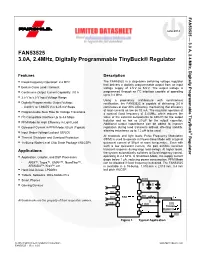

FAN53525 3.0 A, 2.4 MHz, Digitally Programmable TinyBuck® Regulator

FAN53525 3.0 A, 2.4 MHz, Digitally Programmable TinyBuck® Regulator (June 2014). Features: Fixed-Frequency Operation: 2.4 MHz; Best-in-Class Load Transient; Continuous Output Current Capability: 3.0 A; 2.5 V to 5.5 V Input Voltage Range; Digitally Programmable Output Voltage: 0.600 V to 1.39375 V in 6.25 mV Steps; Programmable Slew Rate for Voltage Transitions; I2C-Compatible Interface Up to 3.4 Mbps; PFM Mode for High Efficiency in Light Load; Quiescent Current in PFM Mode: 50 µA (Typical); Input Under-Voltage Lockout (UVLO); Thermal Shutdown and Overload Protection. Description: The FAN53525 is a step-down switching voltage regulator that delivers a digitally programmable output from an input voltage supply of 2.5 V to 5.5 V. The output voltage is programmed through an I2C interface capable of operating up to 3.4 MHz. Using a proprietary architecture with synchronous rectification, the FAN53525 is capable of delivering 3.0 A continuous at over 80% efficiency, maintaining that efficiency at load currents as low as 10 mA. The regulator operates at a nominal fixed frequency of 2.4 MHz, which reduces the value of the external components to 330 nH for the output inductor and as low as 20 µF for the output capacitor. Additional output capacitance can be added to improve regulation during load transients without affecting stability, allowing inductance up to 1.2 µH to be used. At moderate and light loads, Pulse Frequency Modulation (PFM) is used to operate in Power-Save Mode with a typical
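The output range quoted in the FAN53525 feature list (0.600 V to 1.39375 V in 6.25 mV steps) implies 128 evenly spaced settings, which fits a 7-bit code. The Python sketch below works through that arithmetic. The linear mapping with code 0 at 0.600 V, and the names `code_to_volts` and `volts_to_code`, are assumptions made for illustration; the snippet does not show the device's actual register layout.

```python
# Values taken from the feature list above.
V_MIN = 0.600      # volts, lowest programmable output
V_MAX = 1.39375    # volts, highest programmable output
V_STEP = 0.00625   # volts per step (6.25 mV)

# Number of distinct settings: 127 steps above V_MIN, plus V_MIN itself.
N_CODES = round((V_MAX - V_MIN) / V_STEP) + 1


def code_to_volts(code: int) -> float:
    """Map a step index to an output voltage (assumed linear mapping)."""
    if not 0 <= code < N_CODES:
        raise ValueError(f"code must be in 0..{N_CODES - 1}")
    return V_MIN + code * V_STEP


def volts_to_code(volts: float) -> int:
    """Map a target voltage to the nearest step index (assumed linear mapping)."""
    if not V_MIN <= volts <= V_MAX:
        raise ValueError("voltage outside the programmable range")
    return round((volts - V_MIN) / V_STEP)
```

For instance, under these assumptions a 1.0 V target sits 0.4 V above the minimum, which is 64 steps of 6.25 mV.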

An Emerging Architecture in Smart Phones

International Journal of Electronic Engineering and Computer Science, Vol. 3, No. 2, 2018, pp. 29-38 (http://www.aiscience.org/journal/ijeecs). ARM Processor Architecture: An Emerging Architecture in Smart Phones. Naseer Ahmad, Muhammad Waqas Boota*, Department of Computer Science, Virtual University of Pakistan, Lahore, Pakistan. Abstract: ARM is a 32-bit RISC processor architecture. It is developed and licensed by the British company ARM Holdings. ARM Holdings does not manufacture or sell CPU devices; it only licenses the processor architecture to interested parties. There are two main types of licenses: implementation licenses and architecture licenses. ARM processors have a unique combination of features; for example, the ARM core is very simple compared to general-purpose processors. An ARM chip has several peripheral controllers, a digital signal processor, and an ARM core. ARM processors consume less power but provide high performance. Nowadays, the ARM Cortex series is very popular in smartphone devices. We will also see the important characteristics of the Cortex series. We discuss the ARM processor and system on a chip (SoC) designs, which include the Qualcomm Snapdragon, nVidia Tegra, and Apple systems on chips. In this paper, we discuss the features of the ARM processor and the Intel Atom processor and see which processor is best. Finally, we will discuss the future of the ARM processor in smartphone devices. Keywords: RISC, ISA, ARM Core, System on a Chip (SoC). Received: May 6, 2018 / Accepted: June 15, 2018 / Published online: July 26, 2018. © 2018 The Authors. Published by American Institute of Science. This Open Access article is under the CC BY license.

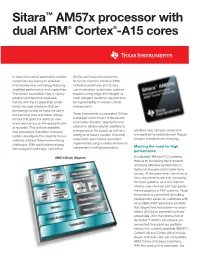

Sitara™ AM57x Processor with Dual ARM® Cortex®-A15 Cores

Sitara™ AM57x processor with dual ARM® Cortex®-A15 cores. In today’s industrial automation market, consumers are seeing an evolution that requires new technology featuring amplified performance and capabilities. The factory automation floor is rapidly advancing to become more user friendly with the incorporation of elements like user interfaces that are increasingly similar to those we use in our everyday lives and video competencies that grant the ability to view machines running on the opposite side of factories. This shift necessitates new processors that afford industrial system developers the capacity to successfully address these ever-evolving challenges. With applications ranging from programmable logic controllers (PLCs) and industrial computers to human machine interface (HMI), industrial peripherals and factory communication, automation systems require cutting-edge technologies to meet stringent customer requirements for high reliability in mission-critical environments. Texas Instruments Incorporated (TI) has a strategic commitment to the factory automation industry, ranging from an extensive, reliable solution portfolio to a long product life supply as well as a strong local-based support. Industrial automation applications have been implemented using a variety of external components making yesterday’s solutions very complex, expensive and resistant to evolution even though industry standards are changing. Meeting the need for high performance: In industrial HMI and PLC systems, there is an increasing trend towards achieving x86-level performance in fanless enclosures and smaller form factors. At the same time, communications requirements are ever increasing for these systems, as is the need for intuitive user interface and high-performance graphics in HMI systems. [Figure: AM57x block diagram]

Quick Install for AWS EMR

Quick Install for AWS EMR. Version: 6.8. Doc Build Date: 01/21/2020. Copyright © Trifacta Inc. 2020 - All Rights Reserved. CONFIDENTIAL. These materials (the “Documentation”) are the confidential and proprietary information of Trifacta Inc. and may not be reproduced, modified, or distributed without the prior written permission of Trifacta Inc. EXCEPT AS OTHERWISE PROVIDED IN AN EXPRESS WRITTEN AGREEMENT, TRIFACTA INC. PROVIDES THIS DOCUMENTATION AS-IS AND WITHOUT WARRANTY AND TRIFACTA INC. DISCLAIMS ALL EXPRESS AND IMPLIED WARRANTIES TO THE EXTENT PERMITTED, INCLUDING WITHOUT LIMITATION THE IMPLIED WARRANTIES OF MERCHANTABILITY, NON-INFRINGEMENT AND FITNESS FOR A PARTICULAR PURPOSE AND UNDER NO CIRCUMSTANCES WILL TRIFACTA INC. BE LIABLE FOR ANY AMOUNT GREATER THAN ONE HUNDRED DOLLARS ($100) BASED ON ANY USE OF THE DOCUMENTATION. For third-party license information, please select About Trifacta from the Help menu. Contents: 1. Release Notes; 1.1 Changes to System Behavior; 1.1.1 Changes to the Language; 1.1.2 Changes to the APIs; 1.1.3 Changes to Configuration; 1.1.4 Changes to the Object Model; 1.2 Release Notes 6.8; 1.3 Release Notes 6.4; 1.4 Release Notes 6.0; 1.5 Release Notes 5.1; 2. Quick Start; 2.1 Install from AWS Marketplace with EMR; 2.2 Upgrade for AWS Marketplace with EMR; 3. Configure; 3.1 Configure for AWS; 3.1.1 Configure for EC2 Role-Based Authentication; 3.1.2 Enable S3 Access; 3.1.2.1 Create Redshift Connections; 3.1.3 Configure for EMR

OMAP 3 Family of Multimedia Applications

OMAP™ 3 family of multimedia applications processors: Revolutionizing entertainment and productivity in wireless handheld communications. Product Bulletin. Key features: (1) Combines mobile entertainment and high-performance productivity applications. (2) Integrates the advanced Superscalar ARM Cortex-A8 RISC core, enabling up to 3x gain in performance versus ARM11. (3) Designed in 45-nm (OMAP36x platform) and 65-nm (OMAP34x platform) CMOS process technologies for less power consumption and increased device performance. (4) Includes integrated IVA hardware accelerators to enable multi-standard encode/decode up to HD resolution. (5) Available integrated image signal processor (ISP) enables faster, higher quality image capture, lower system cost and lower power consumption. (6) Provides seamless connectivity to hard disk drive (HDD) devices for mass storage. The OMAP™ 3 family of multimedia applications processors from Texas Instruments (TI) introduces a new level of performance that enables laptop-like productivity and advanced entertainment in multimedia-enabled handsets. OMAP 3 devices support all levels of handsets, from the entry-level multimedia-enabled handsets to high-end Mobile Internet Devices (MIDs). Entry-level multimedia-enabled handsets: OMAP3410, OMAP3610. Mid-level multimedia-enabled handsets: OMAP3420, OMAP3620. High-end multimedia-enabled handsets: OMAP3430/3440, OMAP3630/3640. TI’s OMAP 3 family of applications processors integrate the ARM Cortex-A8 superscalar microprocessor [...] These devices can operate at a higher frequency than previous-generation OMAP

EasyBuild Documentation Release 20210907.0

EasyBuild Documentation, Release 20210907.0. Ghent University. Tue, 07 Sep 2021 08:55:41. Contents: 1 What is EasyBuild?; 2 Concepts and terminology; 2.1 EasyBuild framework; 2.2 Easyblocks; 2.3 Toolchains; 2.3.1 system toolchain; 2.3.2 dummy toolchain (DEPRECATED); 2.3.3 Common toolchains; 2.4 Easyconfig files; 2.5 Extensions; 3 Typical workflow example: building and installing WRF; 3.1 Searching for available easyconfigs files; 3.2 Getting an overview of planned installations; 3.3 Installing a software stack; 4 Getting started; 4.1 Installing EasyBuild; 4.1.1 Requirements; 4.1.2 Using pip to Install EasyBuild; 4.1.3 Installing EasyBuild with EasyBuild; 4.1.4 Dependencies; 4.1.5 Sources; 4.1.6 In case of installation issues; 4.2 Configuring EasyBuild; 4.2.1 Supported configuration

Delft University of Technology ArrowSAM In-Memory Genomics

Delft University of Technology. ArrowSAM: In-Memory Genomics Data Processing Using Apache Arrow. Ahmad, Tanveer; Ahmed, Nauman; Peltenburg, Johan; Al-Ars, Zaid. DOI: 10.1109/ICCAIS48893.2020.9096725. Publication date: 2020. Document Version: Accepted author manuscript. Published in: 2020 3rd International Conference on Computer Applications & Information Security (ICCAIS). Citation (APA): Ahmad, T., Ahmed, N., Peltenburg, J., & Al-Ars, Z. (2020). ArrowSAM: In-Memory Genomics Data Processing Using Apache Arrow. In 2020 3rd International Conference on Computer Applications & Information Security (ICCAIS): Proceedings (pp. 1-6). [9096725] IEEE. https://doi.org/10.1109/ICCAIS48893.2020.9096725 Important note: To cite this publication, please use the final published version (if applicable). Please check the document version above. Copyright: Other than for strictly personal use, it is not permitted to download, forward or distribute the text or part of it, without the consent of the author(s) and/or copyright holder(s), unless the work is under an open content license such as Creative Commons. Takedown policy: Please contact us and provide details if you believe this document breaches copyrights. We will remove access to the work immediately and investigate your claim. This work is downloaded from Delft University of Technology. For technical reasons the number of authors shown on this cover page is limited to a maximum of 10. © 2020 IEEE. Personal use of this material is permitted. Permission from IEEE must be obtained for all other uses, in any current or future media, including reprinting/republishing this material for advertising or promotional purposes, creating new collective works, for resale or redistribution to servers or lists, or reuse of any copyrighted component of this work in other works.

Bringing Probabilistic Programming to Scientific Simulators at Scale

Etalumis: Bringing Probabilistic Programming to Scientific Simulators at Scale. Atılım Güneş Baydin, Lei Shao, Wahid Bhimji, Lukas Heinrich, Lawrence Meadows, Jialin Liu, Andreas Munk, Saeid Naderiparizi, Bradley Gram-Hansen, Gilles Louppe, Mingfei Ma, Xiaohui Zhao, Philip Torr, Victor Lee, Kyle Cranmer, Prabhat, and Frank Wood. SC19, Denver, CO, United States, 19 November 2019. Simulation and HPC: Computational models and simulation are key to scientific advance at all scales (particle physics, nuclear physics, material design, drug discovery, weather, climate science, cosmology). Introducing a new way to use existing simulators: probabilistic programming (machine learning), simulation, supercomputing. Simulators: parameters, simulator, outputs (data). Prediction: ● Simulate forward evolution of the system ● Generate samples of output. WE NEED THE INVERSE! Inference: ● Find parameters that can produce (explain) observed data ● Inverse problem ● Often a manual process. Simulators: inferred parameters, simulator, observed data; example: gene network, gene expression.
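The prediction versus inference distinction in the Etalumis slides can be made concrete with a toy Python sketch. It uses plain rejection sampling on a hypothetical one-parameter simulator, which is not the Etalumis method; it is only the simplest way to illustrate "find parameters that can produce (explain) observed data". The simulator, the uniform prior range, the tolerance, and all names are assumptions invented for this example.

```python
import random


def simulator(theta: float, rng: random.Random) -> float:
    """Hypothetical forward model: the output is the parameter plus Gaussian noise."""
    return theta + rng.gauss(0.0, 0.1)


def infer_by_rejection(observed: float, n_draws: int = 20000,
                       tolerance: float = 0.2, seed: int = 0) -> list:
    """Keep the prior draws whose simulated output lands close to the observation."""
    rng = random.Random(seed)
    accepted = []
    for _ in range(n_draws):
        theta = rng.uniform(0.0, 5.0)          # assumed prior over the parameter
        output = simulator(theta, rng)         # prediction: run the simulator forward
        if abs(output - observed) < tolerance:
            accepted.append(theta)             # inference: theta can explain the data
    return accepted


samples = infer_by_rejection(observed=2.0)
posterior_mean = sum(samples) / len(samples)
```

The accepted values approximate the parameters consistent with the observation; in this toy setup they cluster around the observed value because the simulator only adds small noise.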

Advances in Electron Microscopy with Deep Learning

Advances in Electron Microscopy with Deep Learning, by Jeffrey Mark Ede. Thesis to be submitted to the University of Warwick for the degree of Doctor of Philosophy in Physics. Department of Physics, January 2021. Contents: List of Abbreviations; List of Figures; List of Tables; Acknowledgments; Declarations; Research Training; Abstract; Preface; I Initial Motivation; II Thesis Structure; III Connections; Chapter 1 Review: Deep Learning in Electron Microscopy; 1.1 Scientific Paper; 1.2 Reflection; Chapter 2 Warwick Electron Microscopy Datasets; 2.1 Scientific Paper; 2.2 Amendments and Corrections; 2.3 Reflection; Chapter 3 Adaptive Learning Rate Clipping Stabilizes Learning; 3.1 Scientific Paper; 3.2 Amendments and Corrections; 3.3 Reflection; Chapter 4 Partial Scanning Transmission Electron Microscopy with Deep Learning; 4.1 Scientific Paper; 4.2 Amendments and Corrections; 4.3 Reflection

Nomadik Application Processor Andrea Gallo Giancarlo Asnaghi ST Is #1 World-Wide Leader in Digital TV and Consumer Audio

Nomadik Application Processor. Andrea Gallo, Giancarlo Asnaghi. ST is #1 world-wide leader in Digital TV and Consumer Audio: MP3 Portable Player, Digital Satellite Radio, Set Top Box, Digital Car Radio, DVD Player. MMDSP+ inside more than 200 million produced chips. January 14, 2009. ST leader in mobile phone chips. Nomadik is based on this heritage, providing: unrivalled multimedia performances; very low power consumption; scalable performances. Best Application Processor 2004: lowest power consumption; scalable performance; video/audio quality; cost-effective. Nominees: Intel XScale PXA260, NeoMagic MiMagic 6, Nvidia MQ-9000, STMicroelectronics Nomadik STn8800, Texas Instruments OMAP 1611. Nomadik is a family of Application Processors: distributed processing architecture, ARM9 + multiple Smart Accelerators; support of a wide range of OS and applications; seamless integration in the OS through standard API drivers and MM framework. roadmap ... Some Nomadik products on the market... STn8815 block diagram. Nomadik: a true real time multiprocessor platform. [Block diagram; recoverable labels: ARM926 (L1 + L2), General Purpose, Master OS, SDRAM (unlimited space, limited bandwidth), SRAM (Level 2 Cache System for Video), DMA, Memory Controller, Peripherals, multi-layer AHB bus, RTOS, Multi-thread (Scheduler FSM), NAND Flash (unlimited space, "no" bandwidth, mass storage), MMDSP+ Video, MMDSP+ Audio, 66 MHz 16-bit, 133 MHz 24-bit]

Master Thesis

Master thesis. To obtain a Master of Science Degree in Informatics and Communication Systems from the Merseburg University of Applied Sciences. Subject: Tunisian truck license plate recognition using an Android Application based on Machine Learning as a detection tool. Author: Achraf Boussaada, Matr.-Nr.: 23542. Supervisor: Prof.Dr.-Ing. Rüdiger Klein, Prof.Dr. Uwe Schröter. Table of contents: Chapter 1: Introduction; 1.1 General Introduction; 1.2 Problem formulation; 1.3 Objective of Study; Chapter 2: Analysis; 2.1 Methodological approaches; 2.1.1 Actual approach; 2.1.2 Image Processing with OCR