Slide Guitar Synthesizer with Gestural Control

Total Pages: 16

File Type: pdf, Size: 1020 KB

Recommended publications

BOSS Introduces SY-1000 Guitar Synthesizer

Press Release, FOR IMMEDIATE RELEASE. BOSS Introduces SY-1000 Guitar Synthesizer: Next-Generation GK Synthesizer and Instrument Modeling Processor for Guitar and Bass. Los Angeles, California, December 5, 2019. BOSS introduces the SY-1000 Guitar Synthesizer, an advanced synthesizer and modeling processor for guitar and bass. Opening a bold new chapter in BOSS guitar synth innovation, the SY-1000 features a newly developed Dynamic Synth and refreshed versions of historic BOSS/Roland instrument modeling and synthesizer technologies. Backed by a cutting-edge sound engine, high-speed DSP, and evolved GK technology, the SY-1000 delivers the finest performance and most organic playing experience yet. BOSS and its parent company, Roland, have been at the forefront of guitar synthesizer development since 1977, when the landmark GR-500 first introduced guitar synthesis to the world. The SY-1000 is the most powerful guitar/bass synth processor to date, fusing decades of R&D with the latest software and hardware advancements. Leveraging custom DSP and GK independent string processing, the SY-1000 brings numerous musical advantages to players, including ultra-articulate tracking, lightning-fast response, instantly variable tuning, sound panning/layering, and more. SY-1000 users can build patches with three simultaneous instruments (each with a number of distinctive types to choose from) and combine them for an endless range of sounds. Fed by the processor’s 13-pin GK input, every instrument offers an extensive set of parameters for tone shaping, mixing, and tuning. A normal ¼-inch input is also available to blend in regular guitar/bass pickup sounds. Deep and expressive, the SY-1000’s Dynamic Synth takes guitar synthesis to a new level, allowing players to craft sounds never before possible.

Guitar Electronics Input/Output Structures Modify: Fender Amp MXR Phase 100 More GR-500 The Ultimate Keyboard

July-Aug. 1981. POLYPHONY. $2.50. ELECTRONIC MUSIC & HOME RECORDING. ISSN: 0163-4534. GUITAR ELECTRONICS. INPUT/OUTPUT STRUCTURES. MODIFY: FENDER AMP, MXR PHASE 100. MORE GR-500.

THE ULTIMATE KEYBOARD. The Prophet-10 is the most complete keyboard instrument available today. The Prophet is a true polyphonic programmable synthesizer with 10 complete voices and 2 manuals. Each 5 voice keyboard has its own programmer allowing two completely different sounds to be played simultaneously. All ten voices can also be played from one keyboard program. Each voice has 2 voltage controlled oscillators, a mixer, a four pole low pass filter, two ADSR envelope generators, a final VCA and independent modulation capabilities.

The Prophet-10’s total capabilities are too numerous to mention here, but some of the features include:
* Assignable voice modes (normal, single, double, alternate)
* Stereo and mono balanced and unbalanced outputs
* Pitch bend and modulation wheels
* Polyphonic modulation section
* Voice defeat system
* Two assignable & programmable control voltage pedals which can act on each manual independently
* Three-band programmable equalization

The Prophet-10 has an optional polyphonic sequencer that can be installed when the Prophet is ordered, or at a later date in the field. It fits completely within the main unit and operates on the lower manual. Various features of the sequencer are:
* Simplicity; just play normally & record exactly what you play.
* 2500 note capability, and 6 memory banks.
* Built-in micro-cassette deck for both sequence and program storage.
* Extensive editing & overdubbing facilities.
* Exact timing can be programmed, and an external clock can be used.

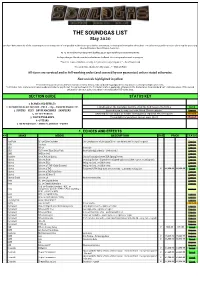

Soundgas Stock List

THE SOUNDGAS LIST, May 2020. We don't have prices for all the incoming items: in many cases it’s impossible to determine price before assessment, servicing and testing have taken place. Preorders are possible on some of our regular pieces (e.g. Binson Echorecs, Space Echoes, Junos etc). As-is: we need to clear our service backlog so are open to offers on unserviced items. We hope that you like the new list and welcome feedback: this is very much a work in progress. “Your list is one of the best, it really is. I just want everything on it.” - Pete Townshend. “I’m on the list, thanks. It’s like crack …” - Michael Price. All items are serviced and in full working order (and covered by our guarantee) unless stated otherwise. New arrivals highlighted in yellow. Prices (where quoted) are in £GBP and exclude delivery. Debit/Credit Card and Paypal payments may incur a surcharge on high value items. *VAT (Sales Tax): Customers in USA/Canada/Australia pay the tax-free price shown in the first column where applicable. All prices in the first column show standard VAT-exclusive prices; if the second column has the same price, then there’s no reclaimable VAT on the item. SECTION GUIDE: 1. ECHOES AND EFFECTS 2. RECORDING GEAR: MIXERS - PRES - EQs - COMPRESSORS ETC. 3. SYNTHS - KEYS - DRUM MACHINES - SAMPLERS 4. EFFECT PEDALS. STATUS KEY: Listed: Listed now on the Soundgas website, click the link to go to the listing. Enquire: Arrived or on its way, yet to be listed. Please enquire. Reserved for our studio or further investigation required.

Harmonic Octave Generator – Guitar Synthesizer

HOG2 Harmonic Octave Generator – Guitar Synthesizer. Operating Instructions. Congratulations on your purchase of the HOG2! The HOG2 is an Octave and Harmonic Generator/Guitar Synthesizer that can simultaneously generate multiple octaves and harmonics from your input signal. Whether you play single notes, arpeggios or full chords, the HOG2 will track every note you play. Built-in to the HOG2 are 7 Expression Modes that enable you to modify your sounds using a standard Expression Pedal, a MIDI Controller, or the Expression Button on the HOG2 itself. Further sound sculpting is achieved through an Amplitude Envelope Generator and a 2nd Order Low Pass Resonant Filter. The HOG2 can save and load up to 100 user presets using the optional EHX HOG2 Foot Controller accessory. The presets are saved inside the HOG2 but are accessed either by connecting the HOG2 Foot Controller accessory from EHX or through MIDI. Using just the HOG2 by itself, 1 preset can be stored and loaded. WARNING: Your HOG2 comes equipped with an Electro-Harmonix 9.6DC-200BI power supply (same as used by Boss® & Ibanez®: 9.6 Volts DC 200mA). The HOG2 requires 170mA at 9VDC with a center negative plug. The HOG2 does not take batteries. Using the wrong adapter may damage your unit and void the warranty. Audio Connections and Controls: MASTER VOL Slider – Sets the overall output volume of the HOG2. All voices including the DRY OUTPUT signal are affected by the MASTER VOL slider. The HOG2’s output volume will increase as this slider is pushed upward. DRY OUTPUT Slider – Controls the output volume of your original, unaffected DRY signal before it exits the HOG2.

Guitar Effects Guide Book

Guitar & Bass Effects / Tuners / Metronomes. GUITAR EFFECTS GUIDE BOOK Vol.19. Commitment to Quality and Innovation. BOSS forges into 2005 with a rock-solid family of effects and accessories. Technical innovation and tank-tough construction make BOSS products the most respected and sought-after tone tools in the world. Players who want the best plug into BOSS. INDEX: The Many Roles of Guitar Effects 4; Bass Effect Units 43; AB-2 2-Way Selector 51; DB-30 Dr. Beat 78; GE-7 Equalizer 34; OS-2 OverDrive/Distortion 13; AC-2 Acoustic Simulator 36; DB-60 Dr. Beat 78; GEB-7 Bass Equalizer 46; PH-3 Phase Shifter 31; History of BOSS 6; Reduce Noise 49; ACA-Series AC Adaptors 79; DB-90 Dr. Beat 78; GT-6B Bass Effects Processor 72; PS-5 SUPER Shifter 41; Add Distortion 8; Change Connections 50; AD-3 Acoustic Instrument Processor 65; DD-3 Digital Delay 24; GT-8 Guitar Effects Processor 72; PSA-Series AC Adaptors 79; AD-5 Acoustic Instrument Processor 65; DD-6 Digital Delay 23; LMB-3 Bass Limiter Enhancer 47; PW-10 V-Wah® 62; Boost Tips 18; Next-Generation Pedals 53; AD-8 Acoustic Guitar Processor 64; DD-20 Giga Delay 58; LS-2 Line Selector 50; RC-20XL Loop Station™ 61; Guitar Amp Settings 20; Acoustic Processors 64; AW-3 Dynamic Wah 35; DS-1 Distortion 14; MD-2 Mega Distortion 17; RV-5 Digital Reverb 25; BCB-60 Pedal Board 74; DS-2 TURBO Distortion 15; ME-50 Guitar Multiple Effects 73; SD-1 SUPER OverDrive 11; Add Acoustic Dimensions 22; Challenge Yourself 66; BD-2 Blues Driver® 12; EQ-20 Advanced EQ 60; ME-50B

G3 Operation Manual (17 MB Pdf)

OPERATION MANUAL. Thank you very much for purchasing the ZOOM G3. Please read this manual carefully to learn about all the functions of the G3 so that you will be able to use it fully for a long time. Keep this manual in a convenient place for reference when necessary. Contents: Usage and safety precautions 2; Introduction 3; Terms Used in This Manual 3; Part Names 4; Turn the power on and play 6; Adjusting effects 8; Selecting Patches 10; Storing Patches 12; Setting specific patch parameters 14; Changing Various Settings 18; Using the Tuner 22; Using Rhythms 24; Using the Looper 26; Updating the firmware 30; Restoring the G3 to its factory default settings 31; Using Audio Interface Functions 32; Effect Types and Parameters 33; Troubleshooting 49; Specifications 50; Rhythm List 51. © ZOOM CORPORATION Copying or reproduction of this document

BX-13 V3 Manual .Cwk

BX-13 V3 Expanded Bus Converter version 3, OWNER’S MANUAL.

DESCRIPTION: Congratulations on your purchase of the BX-13 V3 Expanded Bus Converter. The BX-13 V3 combines the technology found in the Roland BC-13 bus converter but with expanded foot switch controls, guitar volume pedal input, and EV-5 standard control output. Like a BC-13 Bus Converter, the BX-13 V3 can convert the vintage 24-pin style signals into modern, 13-pin control signals, enabling the vintage guitar synth enthusiast access to the latest in guitar technology. Your BX-13 V3 has been hand-built and hand-wired using quality components for reliable operation and is backed by a one-year parts and labor warranty. Please read this owner’s manual carefully before using the BX-13 V3.

IMPORTANT NOTES:
• When connecting a guitar and synthesizer to the BX-13 V3, be sure that power to the synthesizer is switched off.
• The 13-pin connector used with the BX-13 V3 is locking style, and therefore cables cannot be disconnected unless the locking pin is released.

PANEL DESCRIPTIONS:
1. 24-Pin GR Input Connector: Input for 24-pin style G-Series guitar cable.
2. 13-Pin GK Output Connector: The modern, 13-pin synthesizer output connector.
… much louder than the standard (humbucker) sound, and the Guitar Volume knob may need to be adjusted to a lower output level. The hex fuzz sound is only available on guitars with hex fuzz, like the Roland G-202, G-303, G-505, and G-808.

On the Folk Circuit, Actor Jeff Daniels Aims to Be Fingerpickin' Good

On the folk circuit, actor Jeff Daniels aims to be fingerpickin’ good. By Lauren Daley, GLOBE CORRESPONDENT. AUGUST 02, 2018. (Photo: Luke Pline. Jeff Daniels.)

When you hear that an actor is venturing into music, you don’t expect to be wowed. … on the Leno show/ Singing some song that nobody knows/ He’s got an Oscar, now he’s got a guitar/ Just like that, he’s a rock-and-roll star.” So it’s meant as a compliment to say that you’d likely never guess it was Daniels playing if you heard one of his folk tunes on the radio. People tend to get “a bit of shock” when they hear him for the first time, says Daniels, an able fingerpicker who has a Martin guitar named after him (the Martin OM Jeff Daniels Custom Signature Edition), nine albums on Spotify, and a penchant for mixing witty wordplay with serious heartache. His jukebox heartbreaker “She Don’t Love Me,” for example, is straight from the John Prine school of songwriting (“It’s not that she don’t love me, she just don’t like me anymore.”) The Emmy- and Golden Globe-winning, Tony-nominated actor is known to fans, depending on the fan, as the guy from “Something Wild” or “Dumb and Dumber” or “The Squid and the Whale” or “Terms of Endearment” or HBO’s “The Newsroom.” The genre-blurring list goes on. Daniels recently earned two more Emmy nods: supporting actor in a limited series in the Netflix western “Godless,” and lead actor in a limited series in Hulu’s “The Looming Tower.” Yet bonafide musicians, it would seem, have welcomed Daniels into their tribe: He’s shared the stage with Lyle Lovett, John Hiatt, Joe Ely, Guy Clark,

2007 Vol 20 Pedal Guide

10,000,000 pedals sold and going strong ... BOSS is the number one choice for musicians who want the best of the best. Carefully selected parts, uncompromised circuit design, road-tough construction: everything on a BOSS pedal is made with the professional in mind. Musicians who demand the best choose BOSS. INDEX: The Many Roles of Guitar Effects 3; Accessories 80; DB-30 Dr. Beat 84; FV-500H Foot Volume 81; MT-2 Metal Zone® 17; RT-20 Rotary Ensemble 59; History of BOSS 5; AB-2 2-Way Selector 80; DB-60 Dr. Beat 84; FV-500L Foot Volume 81; NS-2 Noise Suppressor 48; RV-5 Digital Reverb 27; Add Distortion 7; AC-3 Acoustic Simulator 39; DB-90 Dr. Beat 84; FZ-5 Fuzz

G3X Operation Manual

OPERATION MANUAL. Thank you very much for purchasing the ZOOM / . Please read this manual carefully to learn about all the functions of the / so that you will be able to use it fully for a long time. Keep this manual in a convenient place for reference when necessary. Contents: Usage and safety precautions 2; Introduction 3; Terms Used in This Manual 3; Part Names 4; Turning the power on 6; Adjusting effects 8; Selecting Patches 12; Storing Patches 14; Setting patch-specific parameters 16; Changing Various Settings 20; Using the Tuner 26; Using Rhythms 28; Using the Looper 30; Using Audio Interface Functions 36; Adjusting the expression pedal 37; Updating the firmware 38; Restoring the / to its factory default settings 39; Effect Types and Parameters 40; Troubleshooting 58; Specifications 59; Rhythm List 59

GR-55 Leaflet

Amazing sound at your fingertips! Express your originality and imagination with the new generation of GR.

New Sound Possibilities. Infinite possibilities from two types of synth tones + COSM guitar modeling. Loaded with sounds that respond to every turn of your imagination, including never-experienced heavy and thick tones, astral sounds, and the ultimate in clear tone. The GR-55 blends two types of synth sounds with COSM guitar modeling to reproduce a broad range of guitar tones and expressive effects.

GR-55 Bass mode delivers amazing tone and expressiveness to bassists. Attach the GK-3B divided pickup to your bass, and you can play superb synth sounds with COSM bass-guitar and -amp modeling.

COSM Amp Models and Effects. Perfection in creating authentic guitar tones. The GR-55 features a world-class lineup of COSM models that replicate vintage masterpiece guitar amps, plus effects equal to BOSS's flagship GT class.

Options (sold separately)
Divided Pickup for Bass GK-3B:
• Simple to install with included holder
• Accommodates a variety of bass-guitar types, as it can be attached to "float" above the bridge
• The GK-3B can adjust to specific string positions, supporting both 5- and 6-string basses
*GK-3 divided pickup (for guitar) also sold separately; purchase extra GK-3 pickups to install on the guitars of your choice.
USB Flash Memory M-UF2G: 2 GB USB memory key
Unit Selector US-20: Allows a single guitar to control two GK-compatible units
GK Cable GKC-5 (5 m/196-7/8 inch)
GK Cable GKC-10 (10 m/393-3/4 inch)

Samash Fall 2008 Gear Guide.Pdf

SAM ASH® 45-DAY SATISFACTION GUARANTEE: If you aren’t satisfied with your purchase for any reason, just return it within 30 days for a full refund or within 45 days for a full exchange. Returns must be in original condition with your Sam Ash receipt, all original packaging, accessories, manuals, and blank warranty cards. Limitations: For health reasons we do not accept returns of harmonicas and internal earphones. Please contact the manufacturer if defective. We do not accept returns of open computer software, books, sheet music, and other easily copied materials. We do not accept returns of opened packages of strings, cartridges, styli, fog fluid, tubes, and cleaning products. Exceptions: There is a 15-day return period and a 15% restocking fee on microphones, mixers, speakers, power amps, rack effects, computers, recording, DJ, lighting, karaoke equipment, and pro workstations. There is no restocking fee on exchanges for like items. We do not accept returns on special order merchandise. We reserve the right to require ID and deny return privileges to abusers of our policy. Payment of Refunds: Refunds are paid by the same method of payment as the original purchase whenever possible. Allow 15 business days for refund checks from our main office. SAM ASH® Best In The Business 50 State/60 Day BEST PRICE Guarantee: We guarantee you the lowest price on your Sam Ash purchase. Sam Ash always beats the competition! Within 60 days, show us a lower price on the same product advertised by a US authorized dealer who has the product in stock, and we will beat that deal.