Intuitive Time-Series-Analysis-Toolbox for Inexperienced Data Scientists

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Some Touching News: The New Rules of Digital Media

Josh Clark (@globalmoxie). I'm an interaction designer, but also what might be called a design strategist. I work mainly in mobile, and I wrote a book called Tapworthy about designing iPhone apps. I fill my days helping people and companies think through crafting terrific experiences for non-desktop systems. That means figuring out how phones and tablets fit into the overall digital diet. But it's also about how these devices are creating entirely new kinds of interaction, new kinds of digital products and interfaces.

That's what I'm going to talk about today: how this explosion of new devices means we have to rethink how we approach design. I'm especially excited about the possibilities of touch interfaces. I believe touch forces (or should force) important, FUNDAMENTAL changes in how we approach the design of these interfaces. When you get rid of the mouse and cursor, the prosthetics we've been using to point at stuff for 25 years, you get a VERY different experience, and it suggests entirely new interfaces. Touch will help us sweep away decades of buttons, menus, folders, tabs, and other administrative debris to work directly with content. This is very different from what came before, and certainly VERY different from print.

I'm going to talk about two things today:
1. How we should and shouldn't go about conceiving entirely new interfaces for news, particularly its relationship to print.
2. Nitty-gritty techniques for pushing touch interfaces in exciting new directions.

The iPad in particular is giving many of us the opportunity to experiment. That's EXCITING, but it also means we see a lot of misfires.

Package 'yonder'

Package 'yonder', January 10, 2020
Type: Package
Title: A Reactive Web Framework Built on 'shiny'
Version: 0.2.0
Description: Build 'shiny' applications with the latest Bootstrap components and design utilities. Includes refreshed reactive inputs and outputs. Use responsive layouts to design and construct applications for devices of all sizes.
License: GPL-3
URL: https://nteetor.github.io/yonder
BugReports: https://github.com/nteetor/yonder/issues
Encoding: UTF-8
LazyData: true
RoxygenNote: 7.0.2
Depends: R (>= 3.2), shiny (>= 1.4.0)
Imports: htmltools (>= 0.4.0), magrittr, utils
Suggests: testthat (>= 2.1.0)
NeedsCompilation: no
Author: Nathan Teetor [aut, cre], The Bootstrap Authors [cph] (Bootstrap library), Twitter, Inc [cph] (Bootstrap library), JS Foundation [cph] (jQuery library), Federico Zivolo [ctb, cph] (popper.js library), Johann Servoire [ctb, cph] (bs-custom-file-input library)
Maintainer: Nathan Teetor
Repository: CRAN
Date/Publication: 2020-01-10 21:20:07 UTC

R topics documented: yonder-package, active, affix, alert, background, badge, blockquote, border, buttonGroupInput, buttonInput, card, checkbarInput, checkboxInput, chipInput, collapsePane, column, d1, display, dropdown, fieldset, fileInput, flex

MerlinX Extension

MerlinX Extension For Adobe Creative Cloud Applications
MerlinOne Inc., 17 Whitney Road, Quincy, MA 02169. T (617) 328-6645. http://www.merlinone.com
MerlinX Extension (Wednesday, August 29, 2018)

Table of Contents: Introduction; Installing the Extension; Logging In; Accessing the Extension; Collapsing and Docking the Extension; How to Log In; Specifying a Merlin Server; Specifying Your Name & Password; Connecting; How to Log Out; Overview; Getting Around; Locating Assets; Search; Collections; Saved Searches; Customizing the Display; Thumbnail Grid; Thumbnail Size; Thumbnail Info; Working With Digital Assets; Opening Assets; Asset Versions; Checking Out; Checking In; Reverting; Placing Assets in InDesign; Automatic Update of Placed Assets; Sending Assets to Your Merlin Server; Modified Assets; New Assets

Introduction
The MerlinX Extension is an Adobe Extension that makes it easy to access your MerlinX Digital Asset Management system from within your favorite Adobe Creative Cloud application. The extension allows you to locate assets either by searching or through user-defined asset collections. In addition to helping you find assets, the extension also helps you work on them. As your creation evolves, you can periodically send it to your Merlin server through a process called "checking it in". The server keeps track of each version of the asset you check in, so it is possible to revert changes that are not desired.
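The check-in model described in the introduction (the server keeps every checked-in version, so undesired changes can be reverted) can be illustrated with a small sketch. This is a hypothetical Python model, not the MerlinX API: `AssetHistory`, `check_in`, `current`, and `revert` are illustrative names, and modeling a revert as checking in a copy of an earlier version (rather than deleting later versions) is an assumption the manual excerpt does not confirm.

```python
from dataclasses import dataclass, field


@dataclass
class AssetHistory:
    """Hypothetical, simplified model of per-asset version tracking.

    Each check-in appends a new version; nothing is overwritten. Reverting
    is modeled (as an assumption) by checking in a copy of an earlier
    version, so the undesired versions remain in the history.
    """

    versions: list[bytes] = field(default_factory=list)

    def check_in(self, content: bytes) -> int:
        """Record a new version and return its 1-based version number."""
        self.versions.append(content)
        return len(self.versions)

    def current(self) -> bytes:
        """Return the content of the most recent version."""
        if not self.versions:
            raise LookupError("asset has not been checked in yet")
        return self.versions[-1]

    def revert(self, version: int) -> int:
        """Restore an earlier version by checking it in again as the newest one."""
        if not 1 <= version <= len(self.versions):
            raise ValueError(f"no such version: {version}")
        return self.check_in(self.versions[version - 1])


history = AssetHistory()
history.check_in(b"layout v1")
history.check_in(b"layout v2 (undesired edit)")
history.revert(1)
print(len(history.versions), history.current())  # prints: 3 b'layout v1'
```

Keeping the history append-only means a revert can itself be undone, since no version is ever discarded.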

Reviewer's Guide: Overview

What is OmniOutliner?
OmniOutliner for iPad is a professional-grade outlining application to easily capture, compose, and organize text and data. It's feature-rich enough to see a novel from outline to print and simple enough to create a grocery list in a snap.

What makes OmniOutliner different from other iPad outlining apps?
OmniOutliner includes everything you'd expect in a premier outlining application: fast, easy capture; intuitive editing; diverse templates; and robust styles. If all you'd like to do with your outline is prepare a grocery list or balance your checkbook, OmniOutliner for iPad can help you do that. If you're looking for something a bit more complex, OmniOutliner is designed to expand organically with your needs. Advanced options are there when you need them and stay out of your way when you don't. Everything has been designed with iPad, and your fingertips, in mind: flexible style options; intelligent row creation; notes; links and attachments; sharing; and more. Start your outlines on the iPad and continue on the desktop, or vice versa. OmniOutliner combines the functionality of a desktop app with the powerful mobile experience of iPad. It's a powerful system created by a company that has been in the Mac business, and providing free customer support, for over 15 years.

Who uses OmniOutliner?
Business professionals, writers, students, parents, home users, and educators all rely on OmniOutliner for its unparalleled task management functionality. From complicated and intricate papers to a quick to-do list, some common-use examples include:
• Restructuring an essay on the fly
• Creating a number column to keep track of finances
• Adding "Buy milk" to a grocery list
• Using notes to expand on a principal idea
• Creating visual allure with styles
• Tapping checkboxes to keep track of completed agenda items

The Toolbar & Editbar
When you launch OmniOutliner for the first time, you can start from scratch or begin working with one of the built-in templates.

Help Link at the Top of Any Page to Open This Document

CAMD Business System (CBS) Tutorial
Welcome to the CAMD Business System. The purpose of this document is to guide CBS users through the modules in the updated CAMD Business System. You may use the CAMD Business System to:
1. View and modify your user profile;
2. Manage general accounts;
3. Transfer allowances;
4. Submit annual compliance information;
5. Manage agent relationships;
6. Manage feedback recipient relationships;
7. Manage Certificates of Representation, including information regarding plants, units, designated representatives, owners/operators, and generators; and
8. Manage responsible officials.
You can jump to any topic you are interested in by clicking on the topic in the table of contents. Within the application, you may select the Help Link at the top of any page to open this document.
June 16, 2021

Table of Contents: Login; Your Profile; Reset Your Password; Manage General Accounts; Allowance Transfers; Compliance; Edit Contact Information

Apollo Twin USB Software Manual

HIGH-RESOLUTION INTERFACE with Realtime UAD Processing
Apollo Twin USB Software Manual
UAD Software Version 9, Manual Version 210429
www.uaudio.com

Table of Contents
About This Manual: For Apollo Twin USB
Introduction: Welcome To Apollo!; Apollo Software Features; Apollo Documentation Overview; Apollo Software Overview
Installation & Setup: System Requirements; Setup Overview; Software Installation; Windows Setup

Apollo Thunderbolt Console Software Manual

HIGH-RESOLUTION INTERFACE with Realtime UAD Processing
Apollo Thunderbolt Software Manual
UAD Software Version 9.11.0, Manual Version 191031
www.uaudio.com

Table of Contents
About This Manual: For Apollo models connected via Thunderbolt
Introduction: Welcome To Apollo!; Apollo Software Features; Apollo Documentation Overview; Additional Resources & Technical Support; Apollo Software Overview
Installation & Setup: Apollo Thunderbolt System Requirements; Software Setup; Windows Setup; What Next?

Predix Design System Contents

Predix Design System Overview: Create Modern Web Applications; About the Predix Design System; Application Development with the Predix Design System; Supported Browsers for Web Applications; Predix Design System Glossary
Use the Predix Design System: Using the Predix Design System; Setting Up the Predix Design System Developer Environment
Migrate to Predix Design System Cirrus: Migrating to Predix Design System Cirrus; New Predix UI Components for Predix Design System Cirrus; Deprecated Predix UI Components for Predix Design System Cirrus; Predix Design System Cirrus Design Changes; Predix Design System Cirrus API Changes
Get Started with Predix UI Components: About Predix UI Components; Getting Started with Predix UI Components; Using a Predix UI Component in a Web Application; Predix UI Basics; Predix UI Templates; Predix UI Components; Predix UI Datetime Components; Predix UI Mobile Components; Predix UI Data Visualization Components; Predix UI Vis Framework
Localize Predix UI Components: Localizing Predix UI Components; Localizing Text Strings; Localizing with the Moment.js Library; Localizing with the D3.js Library; Custom Locale Support
Theme Web Applications: Theming Web Applications; Styling a Predix UI Component; Applying a Theme to a Web Application; CSS Custom Properties Overview; CSS Custom Properties Reference
Get Started with Predix UI CSS Modules: About Predix UI CSS Modules; Getting Started with Predix UI CSS Modules; Predix UI CSS Visual Library; Predix UI CSS Layout Library; Predix UI CSS Utilities Library; Predix UI CSS Module Overview
Predix Design System Release Notes: Predix Design System Release Notes

Predix Design System Overview
Create Modern Web Applications
Web applications have evolved to implement many coordinated user functions and tasks traditionally associated with desktop software (for example, Google Docs and Microsoft Office).

Design and Testing of a Mobile Touchscreen Interface for Multi-Modal Biometric Capture

This publication is available free of charge from http://dx.doi.org/10.6028/NIST.IR.8003
NISTIR 8003
Design and Testing of a Mobile Touchscreen Interface for Multi-Modal Biometric Capture
Kristen K. Greene, Ross J. Micheals, Kayee Kwong, Gregory P. Fiumara
Information Access Division, Information Technology Laboratory
May 2014
U.S. Department of Commerce, Penny Pritzker, Secretary
National Institute of Standards and Technology, Patrick D. Gallagher, Under Secretary of Commerce for Standards and Technology and Director

DISCLAIMER
Certain commercial entities, equipment, or materials may be identified in this document in order to describe an experimental procedure or concept adequately. Such identification is not intended to imply recommendation or endorsement by the National Institute of Standards and Technology, nor is it intended to imply that the entities, materials, or equipment are necessarily the best available for the purpose.

EXECUTIVE SUMMARY
In this report, we describe in detail the design and usability testing of a touchscreen interface for multimodal biometric capture, an application called WSABI, Web Services for Acquiring Biometric Information.

HEALTHe NL Quick Reference Guide

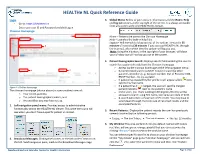

Login
- Go to: https://healthenl.ca
- Enter your user ID and Password and click Log In.

Clinician Homepage
Figure 1: Clinician Homepage
The clinician homepage (shown above) is a personalized view of:
- Your recent patients;

3. Global Menu: Refers to your account information and the Home, Help, and Logout options at the top right of the screen. It is always accessible from any screen within the HEALTHe NL Viewer.
- Home: Returns the user to the Clinician Homepage.
- Help: Launches the built-in help files.
- Logout: Immediately logs you out of the system. Timeout is 35 minutes of inactivity (30 minutes if you accessed HEALTHe NL through the internet), after which the system will log you out.
Note: Using the X button in the top right of your browser will close your window but will not log you out of the system.

4. Patient Demographic Search: Displays search fields enabling the user to search for a patient directly from the Clinician Homepage.
- Access it via the clinician homepage or the left navigation menu.
- Searching by MCP is recommended; however, searching by other patient identifiers (e.g. Account number, Out of Province HCN, RMCP Number) is also available.
- If a patient has masked his/her profile, a lock icon will appear next to his/her name.
- If a patient has been merged, the user will see the merged patient indicator next to the patient's name.
- Some users (e.g. users working in Emergency Rooms) will be allowed to search using first name, last name, and date of birth.
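The two inactivity limits in the guide (35 minutes, or 30 minutes when HEALTHe NL is accessed through the internet) amount to a simple rule. The following is a hypothetical Python sketch, not HEALTHe NL code: `is_logged_out` and the constant names are illustrative, and treating a session as expired at exactly the limit (`>=`) is an assumption the guide does not settle. Closing the browser window is not modeled, since the guide notes that it does not end the session.

```python
from datetime import datetime, timedelta

# Inactivity limits as stated in the quick reference guide.
# Constant names are hypothetical.
DIRECT_TIMEOUT = timedelta(minutes=35)    # access not made through the internet
INTERNET_TIMEOUT = timedelta(minutes=30)  # access through the internet


def is_logged_out(last_activity: datetime, now: datetime, via_internet: bool) -> bool:
    """Return True once the applicable inactivity limit has elapsed.

    Assumption: the session counts as expired as soon as the full limit
    has passed (>=); the guide does not define the exact boundary.
    """
    limit = INTERNET_TIMEOUT if via_internet else DIRECT_TIMEOUT
    return now - last_activity >= limit


start = datetime(2024, 1, 1, 9, 0)
print(is_logged_out(start, start + timedelta(minutes=32), via_internet=False))  # False
print(is_logged_out(start, start + timedelta(minutes=32), via_internet=True))   # True
```

After 32 idle minutes, the same user is still logged in on a direct connection but already logged out when connected through the internet.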

Creating User Interfaces Using Web-Based Technologies to Support Rapid Prototyping in a Desktop Astrovisualization Software (Klas Eskilson)

LiU-ITN-TEK-A--17/062--SE
Creating User Interfaces Using Web-based Technologies to Support Rapid Prototyping in a Desktop Astrovisualization Software
Klas Eskilson
2017-11-28
Department of Science and Technology, Linköping University, SE-601 74 Norrköping, Sweden

Master's thesis in Computer Engineering carried out at the Institute of Technology, Linköping University. Supervisor: Emil Axelsson. Examiner: Anders Ynnerman. Norrköping, 2017-11-28.

Copyright
This document is kept available on the Internet, or its future replacement, for a considerable time from the date of publication, provided that no extraordinary circumstances arise. Access to the document implies permission for anyone to read, download, and print single copies for personal use, and to use it unchanged for non-commercial research and for teaching. A subsequent transfer of copyright cannot revoke this permission. All other use of the document requires the author's consent. Technical and administrative solutions are in place to guarantee the authenticity, security, and accessibility of the document. The author's moral rights include the right to be named as the author to the extent that good practice requires when the document is used as described above, as well as protection against the document being altered or presented in a form or context that is offensive to the author's literary or artistic reputation or distinctiveness. For further information about Linköping University Electronic Press, see the publisher's website http://www.ep.liu.se/

NIST Special Publication

NISTIR 8003
Design and Testing of a Mobile Touchscreen Interface for Multi-Modal Biometric Capture
Kristen K. Greene, Ross J. Micheals, Kayee Kwong, Gregory P. Fiumara
Information Technology Laboratory
http://dx.doi.org/10.6028/NIST.IR.8003
May 2014
U.S. Department of Commerce, Penny Pritzker, Secretary
National Institute of Standards and Technology, Patrick D. Gallagher, Under Secretary of Commerce for Standards and Technology and Director

DISCLAIMER
Certain commercial entities, equipment, or materials may be identified in this document in order to describe an experimental procedure or concept adequately. Such identification is not intended to imply recommendation or endorsement by the National Institute of Standards and Technology, nor is it intended to imply that the entities, materials, or equipment are necessarily the best available for the purpose.

EXECUTIVE SUMMARY
In this report, we describe in detail the design and usability testing of a touchscreen interface for multimodal biometric capture, an application called WSABI, Web Services for Acquiring Biometric Information. The application code is publicly available online at https://github.com/NIST-BWS/wsabi2. The interface is a tablet-based reference application for the Web Services for Biometric Devices (WS-BD) protocol. Just as WS-BD specifies a method of communication between client and sensors (i.e., machine-to-machine communication), WSABI provides a consistent and modality-independent method of interaction between human operators and sensors (i.e., human-to-machine communication).