Volume 2 ~ Issue 3

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

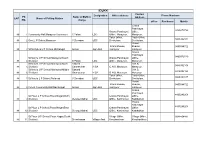

IDUKKI Contact Designation Office Address Phone Numbers PS Name of BLO in LAC Name of Polling Station Address NO

IDUKKI

| LAC No. | PS No. | Name of Polling Station | Name of BLO in charge | Designation | Office address | Contact address | Phone number |
|---|---|---|---|---|---|---|---|
| 88 | 1 | Community Hall, Marayoor Grammam | S.Palani | LDC | Grama Panchayat Office, Marayoor | Grama Panchayat Office, Marayoor | 9495879720 |
| 88 | 2 | Govt. L P School, Marayoor | V Devadas | UDC | Taluk Office, Devikulam | Taluk Office, Devikulam | 9446342837 |
| 88 | 3 | St.Michale's L P School, Michalegiri | Annas | Agri.Asst | Krishi Bhavan, marayoor | Krishi Bhavan, marayoor | 9495044722 |
| 88 | 4 | St.Mary's U P School, Marayoor (South Division) | S.Palani | LDC | Grama Panchayat Office, Marayoor | Grama Panchayat Office, Marayoor | 9495879720 |
| 88 | 5 | St.Mary's U P School, Marayoor (North Division) | Edward Gnanasekar | H SA | G.H.S, Marayoor | G.H.S, Marayoor | 9446392168 |
| 88 | 6 | St.Mary's U P School, Marayoor (Middle Division) | Edward Gnanasekar | H SA | G.H.S, Marayoor | G.H.S, Marayoor | 9446392168 |
| 88 | 7 | St.Mary's L P School, Pallanad | V Devadas | UDC | Taluk Office, Devikulam | Taluk Office, Devikulam | 9446342837 |
| 88 | 8 | Forest Community Hall, Nachivayal | Annas | Agri.Asst | Krishi Bhavan, marayoor | Krishi Bhavan, marayoor | 9495044722 |
| 88 | 9 | St.Pious L P School, Pious Nagar (North Division) | George Mathai | UDC | Grama Panchayat Office, Kanthalloor | Grama Panchayat Office, Kanthalloor | 4865246208 |
| 88 | 10 | St.Pious L P School, Pious Nagar (East Division) | George Mathai | UDC | Grama Panchayat Office, Kanthalloor | Grama Panchayat Office, Kanthalloor | 4865246208 |
| 88 | 11 | St.Pious U P School, Pious Nagar (South Division) | Sreenivasan | Village Asst. | Village Office, Keezhanthoor | Village Office, Keezhanthoor | 9048404481 |

Grama -

List of Biogas Plants Installed in Kerala During 2008-09

LIST OF BIOGAS PLANTS INSTALLED IN KERALA DURING 2008-09

| Sr. No | District | Beneficiary Name & Address | Block | Village | Category | Size | Model | Latrine | Subsidy amount | Functioning date | Technical Guidance | Inspected by |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Trivandrum | Vijayakumar.N, S/o Neyyadan Nadar, Vijaya Bhavan, Neduvanvila, Parassala P.O & Pancht, Neyyattinkara Tq-695502 | Neyyattinkara | Parassala | HA | 2m3 | KVIC | 0 | 3500 | 26.11.08 | K.Somasekharan Pillai (BT) | P.Sanjeev, ADO |
| 2 | Trivandrum | Sabeena Beevi, Kunnuvila Puthenveedu, Edakarickam, Thattathumala.P.O, Pazhayakunnummel Pancht, Chirayinkeezhu Tq | Kilimanoor | Pazhayakunnummel | GEN | 3m3 | KVIC | 0 | 2700 | 28.10.08 | K.Somasekharan Pillai (BT) | P.Sanjeev, ADO |
| 3 | Trivandrum | Anilkumar.B.K, S/o Balakrishnan, Therivila House, Kamukinkode, Kodangavila.P.O, Athiyannur Pancht, Neyyattinkara Tq | Athiyannur | Athiyannur | HA | 2m3 | DB | 0 | 3500 | 17.01.09 | K.Somasekharan Pillai (BT) | P.Sanjeev ADO |
| 4 | Trivandrum | Sathyaraj.I, S/o Issac, kodannoor Mele Puthenveedu, Punnaikadu, Perumpaxhuthoor.P.O, Neyyattinkara Pancht & Tq | Perumkadavila | Perumpazhuthoor | HA | 2m3 | DB | 0 | 3500 | 18.01.09 | K.Somasekharan Pillai (BT) | P.Sanjeev ADO |
| 5 | Trivandrum | Balavan.R.P, S/o Rayappan, 153, Paduva House, Kamukincode, Kodungavila.P.O, Athiyannur Pancht, Neyyattinkara Tq-695123 | Neyyattinkara | Athiyannur | HA | 2m3 | DB | 0 | 3500 | 04.02.09 | K.Somasekharan Pillai (BT) | P.Sanjeev ADO |
| 6 | Trivandrum | Ani.G, S/o Govindan.K, Karakkattu Puthenveedu, Avanakuzhy, Thannimoodu.P.O, Athiyannur Pancht, Neyyattinakara Tq | Athiyannur | Athiyannur | HA | 2m3 | DB | 0 | 3500 | 08.02.09 | K.Somasekharan Pillai - | P.Sanjeev ADO |

2004-05 - Term Loan

KERALA STATE BACKWARD CLASSES DEVELOPMENT CORPORATION LTD. (A Govt. of Kerala Undertaking)

KSBCDC 2004-05 - Term Loan

| Sl No. | LoanNo | Name of Beneficiary | Address | Family Annual Income | Community | Gender | R/U | Activity | Sector | Project Cost | NMDFC Share | Date | Inst. No |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | 010105768 | Asha S R | Keezhay Kunnathu Veedu, Irayam Codu, Cheriyakonni | 0 | | F | R | Textile Business | Business Sector | 52632 | 44737 | 21/05/2004 | 2 |
| | 010105770 | Aswathy Sarath | Tc/ 24/1074, Y.M.R Jn., Nandancodu, Kowdiar | 0 | | F | U | Bakery Unit | Business Sector | 52632 | 44737 | 21/05/2004 | 2 |
| | 010106290 | Beena | Kanjiramninna Veedu, Chowwera, Chowara Trivandrum | 0 | | F | R | Readymade | Business Sector | 52632 | 44737 | 26/04/2004 | 2 |
| | 010106299 | Binu Kumar B | Kunnuvila Veedu, Thozhukkal, Neyyattinkara | 0 | | M | U | Dtp Centre | Service Sector | 52632 | 44737 | 22/04/2004 | 2 |
| 1 | 010106426 | Satheesan | Poovan Veedu, Tc 11/1823, Mavarthalakonam, Nalanchira | 0 | | M | U | Cement Business | Business Sector | 52632 | 44737 | 19/04/2004 | 1 |
| 2 | 010106429 | Chandrika | Charuninnaveedu, Puthenkada, Thiruppuram | 0 | | F | R | Copra Unit | Business Sector | 15789 | 13421 | 22/04/2004 | 1 |
| 3 | 010106430 | Saji | Shaji Bhavan, Mottamoola, Veeranakavu | 0 | | M | R | Provision Store | Business Sector | 52632 | 44737 | 22/04/2004 | 1 |
| 4 | 010106431 | Anil Kumar | Varuvilakathu Pankaja Mandiram, Ooruttukala, Neyyattinkara | 0 | | M | R | Timber Business | Business Sector | 52632 | 44737 | 22/04/2004 | 1 |
| 5 | 010106432 | Satheesh | Panavilacode Thekkarikath Veedu, Mullur, Mullur | 0 | | M | R | Ready Made Garments | Business Sector | 52632 | 44737 | 22/04/2004 | 1 |
| 6 | 010106433 | Raveendran | R.S.Bhavan, Manthara, Perumpazhuthur | 0 | C | M | U | Provision Store | Business Sector | 52632 | 44737 | 22/04/2004 | 1 |

010106433 Raveendran R.S.Bhavan,Manthara,Perumpazhuthur -

Aiswarya Spices & Dream Land

+91-8048371874 Aiswarya Spices & Dream Land https://www.indiamart.com/aiswarya-spices/ Are you crushed and jammed in fast-paced modern life? Want to plan a holiday to enjoy and experience the real thrill and joy of life? Then take the right step with Aiswarya Spices. About Us: Aiswarya Spices, the most fascinating tourist destination in God’s Own Country, Kerala, is located in Anakkara, an upcoming spice tourism destination. The heavenly Thekkady, the snow-white Munnar and the dreamy Ramakkalmedu are just a few of the countless destinations in our Kerala Tourism Program. Thekkady, India’s largest tourist destination, is a real storehouse of spices such as cardamom, pepper, clove, coffee and many more. The long chains of hills and spice plantations are waiting to give you magic moments of mountain walks and trekking. Periyar Wildlife Sanctuary in Thekkady is one of the greatest wildlife reserves in India, and its tiger reserve is a refuge for endangered tigers. The sanctuary gives you the opportunity to watch wild animals at very close range. It is a golden chance for skilled photographers to capture the wild manifestations of nature. Above all, Thekkady provides you with world-class homestay facilities. The Mullaperiyar dam across the Periyar River, with its silver waves, will fill your heart and treat your eyes. We help you explore the most charming and captivating beauty of nature along with the fun of travel adventures. Yes, we are here to provide you the real charm and glory of a fantasy world. -

A Report on "Educational Tour to Southern India" for 1st Year M.Tech

A Report on "Educational Tour to Southern India" for 1st Year M.Tech Renewable Energy & Green Technology Students, Bhopal, M.P. - 462003 (Date: 28/03/2016 to 06/04/2016). [1] Preamble: The Energy Centre, Maulana Azad National Institute of Technology, organized a one-week educational tour/industrial visit to Southern India from 28/03/2016 to 06/04/2016 for M.Tech Renewable Energy and Green Technology students. The visit was organized with the prior permission (MANIT Office Order: Estt./2016/3647 dated 18.03.2016, without any financial liability on the part of the Institute) and guidance of the Hon. Director, Dr. Appu Kuttan K.K., and the HOD of the Energy Centre, Prof. A. Rehman. M.Tech students, especially Purva Sahu, Areena Mahilong, Sreenath Sukumaran, Samrat Kunal and Deepak Bisoyi, took the initiative and worked hard under the continuous guidance of Dr. K. Sudhakar, M.Tech Course Coordinator and Tour In-charge, which made the visit a grand success. In total, 16 students, 1 faculty member and 1 teaching assistant joined the industrial visit. Objectives of the educational tour: to spread awareness about reducing carbon footprint and Swachh Bharat (Clean and Green India); to collaborate with the Department of Energy and Environment, NIT Trichy, and other academic institutions in the field of renewable energy; to explore the possibilities of exploiting renewable energy sources in agro-based food processing industries (tea, cashew, coffee, chocolate, spices, herbs, etc.); to interact with, learn about and understand the traditional cultures and lifestyle of South India, especially the ancient temples, which stand for vernacular architecture; to study the effect of climate change and sea level rise in the coastal regions of Tamil Nadu and Kerala. -

A Preliminary Report on Megalithic Sites from Udumbanchola Taluk of Idukki District, Kerala

A Preliminary Report on Megalithic Sites from Udumbanchola Taluk of Idukki District, Kerala. Sandra M. S.¹, Ajit Kumar¹, Rajesh S.V.¹ and Abhayan G. S.¹ ¹Department of Archaeology, University of Kerala, Kariavattom Campus, Thiruvananthapuram – 695 581, Kerala, India (Email: [email protected], [email protected], [email protected]; [email protected]). Received: 15 November 2017; Revised: 02 December 2017; Accepted: 29 December 2017. Heritage: Journal of Multidisciplinary Studies in Archaeology 5 (2017): 519-527. Abstract: As part of the MA dissertation work, the first author explored Udumbanchola taluk for megaliths. The exploration was productive and 41 new megalithic sites were located. This article is a preliminary report on some interesting megalithic artifacts found during the exploration and also lists the newly discovered sites. Keywords: Megalithic, Udumbanchola, Kallimali, Pot, Goblet, Neolithic Celt, Crucible. Introduction: Udumbanchola taluk (09° 53′ 57.68″ N and 077° 10′ 53.33″ E) is located in Idukki district. Nedumkandam is the major town and administrative headquarters of the taluk, which borders Tamil Nadu on the east. Owing to large-scale migration from other parts of Kerala and from Tamil Nadu, the taluk is a melting pot of Kerala and Tamil cultures. The area is also inhabited by various ethnic tribes such as the Mannan, Muthuvan, Urali, Paliyan, Hilpulayan, Malapandaram, Ulladan and Malayan. Some archaeological explorations have been conducted in the taluk in the past, and 16 sites have been reported from here. Though the prehistory of Idukki is still shrouded in obscurity, there is evidence of megalithic and early historic cultures from Idukki district. -

Idukki District, Kerala State

CONSERVE WATER – SAVE LIFE. GOVERNMENT OF INDIA, MINISTRY OF WATER RESOURCES, CENTRAL GROUND WATER BOARD, KERALA REGION. GROUND WATER INFORMATION BOOKLET OF IDUKKI DISTRICT, KERALA STATE. Thiruvananthapuram, December 2013. By Singadurai S., Scientist B. KERALA REGION: Kedaram, Pattom PO, Thiruvananthapuram – 695 004; TEL: 0471-2442175; FAX: 0471-2442191. BHUJAL BHAVAN: NH-IV, Faridabad, Haryana – 121 001; TEL: 0129-12419075; FAX: 0129-2142524. TABLE OF CONTENTS: DISTRICT AT A GLANCE; 1.0 INTRODUCTION; 2.0 RAINFALL & CLIMATE; 3.0 GEOMORPHOLOGY AND SOIL TYPES; 4.0 GROUND WATER SCENARIO; 6.0 GROUND WATER RELATED ISSUES AND PROBLEMS; 7.0 AWARENESS AND TRAINING ACTIVITY -

Government of India Ministry of MSME

GOVERNMENT OF INDIA, MINISTRY OF MSME. STATE PROFILE OF KERALA 2016-17 (State Industrial Profile, 2016-17). Carried out by MSME – Development Institute, Thrissur (Government of India, Ministry of Micro, Small & Medium Enterprises), Kanjani Road, Ayyanthole P.O., Thrissur, Kerala – 680003. Email: [email protected] Website: www.msmsedithrissur.gov.in Phone: 0487-2360536, Fax: 0487-2360216. FOREWORD: MSME-Development Institute, Ministry of MSME, Government of India, Thrissur, Kerala has prepared the updated edition of the State Profile as part of the MSME-DO action plan for the year 2016-17. The report provides an insight into various aspects of the State, such as general characteristics, resources (both material and human), available infrastructure, etc. The findings of a study on the status of traditional, small, medium and large scale industries in Kerala have also been incorporated. Other areas covered in the report are the functions and services rendered by various State and Central government institutions/agencies engaged in the industrial development of the State, schemes and incentives for the MSME sector, policies of the Government of Kerala relating to the industrial environment, statutory formalities, a gist of key economic parameters, etc. -

ANNEXURE 10.1 CHAPTER X, PARA 17 ELECTORAL ROLL - 2017 State (S11) KERALA No

ANNEXURE 10.1 (CHAPTER X, PARA 17)

ELECTORAL ROLL - 2017, State (S11) KERALA

No., Name and Reservation Status of Assembly Constituency: 89 UDUMBANCHOLA. Last Part: 176.

No., Name and Reservation Status of Parliamentary Constituency in which the Assembly Constituency is located: 13 IDUKKI.

Service Electors

1. DETAILS OF REVISION

Year of Revision: 2017. Type of Revision: SPECIAL SUMMARY REVISION. Qualifying Date: 01-01-2017. Date of Final Publication: 14-01-2017.

2. SUMMARY OF SERVICE ELECTORS

A) NUMBER OF ELECTORS

1. Classified by Type of Service

| Name of Service | Members | Wives | Total |
|---|---|---|---|
| A) Defence Services | 168 | 70 | 238 |
| B) Armed Police Force | 9 | 4 | 13 |
| C) Foreign Services | 0 | 0 | 0 |
| Total in part (A+B+C) | 177 | 74 | 251 |

2. Classified by Type of Roll

| Roll Type | Roll Identification | Members | Wives | Total |
|---|---|---|---|---|
| I Original | Mother Roll: Draft Roll-2017 | 177 | 74 | 251 |
| II Additions List | Supplement 1: Summary revision of last part of Electoral Roll | 0 | 0 | 0 |
| | Supplement 2: Continuous revision of last part of Electoral Roll | 0 | 0 | 0 |
| | Sub Total | 177 | 74 | 251 |
| III Deletions List | Supplement 1: Summary revision of last part of Electoral Roll | 0 | 0 | 0 |
| | Supplement 2: Continuous revision of last part of Electoral Roll | 0 | 0 | 0 |
| | Sub Total | 0 | 0 | 0 |
| Net Electors in the Roll after (I+II-III) | | 177 | 74 | 251 |

B) NUMBER OF CORRECTIONS

| Roll Type | Roll Identification | No. of Electors |
|---|---|---|
| Supplement 1 | Summary revision of last part of Electoral Roll | 0 |
| Supplement 2 | Continuous revision of last part of Electoral Roll | 0 |
| Total | | 0 |

ELECTORAL ROLL - 2017 of Assembly Constituency 89 UDUMBANCHOLA, (S11) KERALA A . -

Science, Technology and Innovation for Environment and Development

PROCEEDINGS OF KERALA ENVIRONMENT CONGRESS 2017. FOCAL THEME: SCIENCE, TECHNOLOGY AND INNOVATION FOR ENVIRONMENT AND DEVELOPMENT. 6th to 8th December, 2017 at Energy Management Centre - Kerala, Thiruvananthapuram. Organised by CENTRE FOR ENVIRONMENT AND DEVELOPMENT, Thiruvananthapuram. In Association with ENERGY MANAGEMENT CENTRE – KERALA. Sponsored by Kerala State Council For Science, Technology & Environment; Kerala State Biodiversity Board; Agency For Non-Conventional Energy and Rural Technology; GJ Nature Care & Energy. Proceedings of the Kerala Environment Congress - 2017. Editors: Dr Vinod T R, Dr T Sabu, Jayanthi T A, Dr Babu Ambat. Published by Centre for Environment and Development, Thozhuvancode, Vattiyoorkavu, Thiruvananthapuram, Kerala, India-695013. Design & Pre-press: Godfrey’s Graphics, Sasthamangalam, Thiruvananthapuram. Printed at Newmulti Offset, Thiruvananthapuram. Directorate of Environment & Climate Change, Government of Kerala, Thiruvananthapuram - 695001. Ph: (off) 0471-2742554, 0471-2742264 (fax) 0471-2742554 E-mail: [email protected] Padma Mahanti IFS, Director. Date: 28.11.2017. FOREWORD: Debates on how best to promote sustainable and inclusive development are incomplete without a full consideration of issues of science, technology and innovation (STI). Access to new and appropriate technologies promotes steady improvements in living conditions, which can be lifesaving for the most vulnerable populations, and drives productivity gains which ensure rising incomes. There are two essential science, technology and innovation issues -

Suicidal Resistance? Understanding the Opposition Against the Western Ghats Conservation in Karunapuram, Idukki, Kerala

CDS MONOGRAPH SERIES: ECOLOGICAL CHALLENGES & LOCAL SELF-GOVERNMENT RESPONSES 02. Suicidal resistance: Understanding the opposition against the Western Ghats conservation in Karunapuram, Idukki, Kerala. Dr. Lavanya Suresh, M. Suchitra. First Edition 2021. ISBN 978-81-948195-4-7 (e-book), ISBN 978-81-948195-5-4 (Paperback). Ecology/Development/History. Published by the Director, Centre for Development Studies, Prasanth Nagar, Thiruvananthapuram, 695 011, Kerala, India. This work is licensed under Creative Commons Attribution-NonCommercial-ShareAlike 4.0 India. To view a copy of this license, visit: https://creativecommons.org/share-your-work/licensing-types-examples/#by-nc-sa or write to Centre for Development Studies, Prasanth Nagar Road, Medical College PO, Thiruvananthapuram 695 011, Kerala, India. Responsibility for the contents and opinions expressed rests solely with the authors. Design: B. Priyaranjanlal. Printed at St Joseph’s Press, Thiruvananthapuram. Funded by The Research Unit on Local Self Governments (RULSG), Centre for Development Studies (CDS), Thiruvananthapuram. Contents: M. Suchitra, NALPATHAM NUMBER MAZHA: A GENTLE RAIN THAT VANISHED FROM THE MOUNTAINS; Lavanya Suresh, WHO WANTS TO CONSERVE THE WESTERN GHATS? A STUDY OF RESISTANCE TO CONSERVATION AND ITS UNDERCURRENTS IN KARUNAPURAM PANCHAYAT, WITHIN THE CARDAMOM HILL RESERVE IN IDUKKI DISTRICT; INTERVIEW: Dr. Madhav Gadgil / M. Suchitra, “STRENGTHEN DEMOCRACY; THAT’S ALL WE CAN DO”; Vidhya C K, M. -

Kerala Development Report

GOVERNMENT OF KERALA. KERALA DEVELOPMENT REPORT: INITIATIVES, ACHIEVEMENTS, AND CHALLENGES. KERALA STATE PLANNING BOARD, FEBRUARY 2021. CONTENTS: List of Tables; List of Figures; List of Boxes; Foreword; 1 Introduction and Macro Developments; 2 Agriculture and Allied Activities (Agriculture in Kerala: An Assessment of Progress and a Road Map for the Future; Fisheries in Kerala: Prospects for Growth of Production and Income; Animal Resources in Kerala: An Assessment of Progress and a Road Map for the Future; Forest Governance: Initiatives and Priorities); 3 Food Security and Public Distribution in Kerala; 4 Water for Kerala's Development: Status, Challenges, and the Future; 5 Industry: A New Way Forward (Structure and Growth of the Industrial Sector; Sources of Growth; Industrial Policy and Agencies for Transformation; Industrial Sector in Kerala: Major Achievements in the 13th Plan); 6 Information Technology: The Highway to Growth; 7 Leveraging Science and Technology for Development; 8 Tourism in Kerala; 9 Cooperatives in Kerala: The Movement, Positioning, and the Way Forward; 10 Human Development (School Education Surges Ahead; Higher Education; Kerala's Health: Some Perspectives); 11 Infrastructure (Bridging the Infrastructure Gap in Transport Sector; Sustainable Energy Infrastructure); 12 Environment and Growth: Achieving the Balance; 13 Local Governments; Decentralisation