Expressive AI: Games and Artificial Intelligence

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

When Was Pac Man Released

Toru Iwatani, Creator of Pac-Man, Will Be at Barcelona Games World: The Video Game Genius Will Receive an Honorary Career Award This October

Madrid, June 29, 2017. Bandai Namco Entertainment Iberica is proud to announce that Toru Iwatani, creator of the famous arcade game Pac-Man, will attend Barcelona Games World as guest of honor. The event takes place October 5 to 8 at the Fira de Barcelona. Iwatani, currently a professor at Tokyo Polytechnic University, will receive an honorary award for his career and his contribution to the world of video games. In 1980, three years after joining the video game company Namco, the young Toru Iwatani presented a simple but nonetheless revolutionary idea: a small, gluttonous yellow disc chased by colorful ghosts. Pac-Man quickly became a global hit, and more than 35 years later it remains an icon recognized around the world. With a far more lighthearted tone than the arcade games of its day, Pac-Man entered the Guinness Book of Records as the most successful arcade game in history, thanks to nearly 300,000 machines sold in 6 years. Over these 35 years Pac-Man has starred in board games, television series, films, comics, and even one of Google's worldwide doodles on April Fools' Day. "I feel truly honored to have been invited to Barcelona Games World, which enjoys strong support from the city of Barcelona.

The Pac-Man Dossier

http://home.comcast.net/~jpittman2/pacman/pacmandossier.html, version 1.0.26, June 16, 2011. Foreword: Welcome to The Pac-Man Dossier! This web page is dedicated to providing Pac-Man players of all skill levels with the most complete and detailed study of the game possible. New discoveries found during the research for this page have allowed for the clearest view yet of the actual ghost behavior and pathfinding logic used by the game. Laid out in hyperlinked chapters and sections, the dossier is easy to navigate using the Table of Contents below, or you can read it in linear fashion from top to bottom. Chapter 1 is purely the backstory of Namco and Pac-Man's designer, Toru Iwatani, chronicling the development cycle and release of the arcade classic. If you want to get right to the technical portions of the document, however, feel free to skip ahead to Chapter 2 and start reading there. Chapter 3 and Chapter 4 are dedicated to explaining pathfinding logic and discussions of unique ghost behavior. Chapter 5 is dedicated to the "split screen" level, and several Appendices follow, offering reference tables, an easter egg, vintage guides, a glossary, and more. Lastly, if you are unable to find what you're looking for or something seems unclear in the text, please feel free to contact me ([email protected]) and ask! If you enjoy the information presented on this website, please consider contributing a small donation to support it and defray the time/maintenance costs associated with keeping it online and updated.

Pac-Mania: How Pac-Man and Friends Became Pop Culture Icons

Sarah Risken, SUID 4387361, Case History, STS 145. They were the most fashionable couple of the early 1980s, even though between the two of them the only article of clothing they had was a pink bow. Pac-Man and Ms. Pac-Man, first introduced to us in 1980 and 1981 respectively, transformed the video game industry. All it took was one look at that cute little eyeless face and Pac-Mania ensued. Yet, Pac-Man wasn't all fun and games. Being the first character-based game, Pac-Man merged storytelling and videogames (Poole, 148). When the Ms. came out, girls were no longer afraid to go to the arcade. Soon the Pac-Family invaded all forms of media and Pac-based products ranged from cereal to key chains (Trueman). The success of Pac-Man and its sequels brought video games out of the arcades and into the center of pop culture where they have remained to this day. Pac-Man is a simple game about one of our basic needs in life: food. The goal of the game is to eat all 240 dots in a maze without running into one of Pac-Man's four enemies, the colorful ghosts Blinky, Pinky, Inky, and Clyde. There is an edible item in the middle of the maze that gives you bonus points as well as telling you what stage maze you are on: Level 1: Cherries, Level 2: Strawberry, Levels 3-4: Peach, Levels 5-6: Apple, Levels 7-8: Grapes, Levels 9-10: Galaxian flagship, Levels 11-12: Custard pie, and Levels 13+: Key (Sellers, 57).

Learning to Simulate Dynamic Environments with GameGAN (arXiv:2005.12126v1 [cs.CV], 25 May 2020)

Seung Wook Kim (NVIDIA, University of Toronto, Vector Institute), Yuhao Zhou (University of Toronto), Jonah Philion (NVIDIA, University of Toronto, Vector Institute), Antonio Torralba (MIT), Sanja Fidler (NVIDIA, University of Toronto, Vector Institute). fseungwookk,jphilion,[email protected] [email protected] [email protected] https://nv-tlabs.github.io/gameGAN

Abstract: Simulation is a crucial component of any robotic system. In order to simulate correctly, we need to write complex rules of the environment: how dynamic agents behave, and how the actions of each of the agents affect the behavior of others. In this paper, we aim to learn a simulator by simply watching an agent interact with an environment. We focus on graphics games as a proxy of the real environment. We introduce GameGAN, a generative model that learns to visually imitate a desired game by ingesting screenplay and keyboard actions during training. Given a key pressed by the agent, GameGAN "renders" the next screen using a carefully designed generative adversarial network. Our approach offers key advantages over existing work: we design a memory module that builds an internal map of the environment, allowing for the agent to return to previously visited locations with high visual consistency.

Figure 1: If you look at the person on the top-left picture, you might think she is playing Pacman of Toru Iwatani, but she is not! She is actually playing with a GAN generated version of Pacman. In this paper, we introduce GameGAN that learns to reproduce games by just observing lots of playing rounds. Moreover, our model can disentangle background from

Mappy Motos Ms. Pac-Man New Rally-X

This issue of Classic Gamer Magazine is freely distributable under a Creative Commons license, but we suggest that you pay what it is worth to you. Click the PayPal link above, or send payment to [email protected]. Thanks for your support.

Classic Gamer Magazine, Vol. 3, Issue #1. Editor-in-Chief: Chris Cavanaugh. Managing Editors: Scott Marriott, Skyler Miller. Layout and Design: Skyler Miller. Contributors: Mark Androvich, Chris Brown, Jason Buchanan, Chris Cavanaugh, Scott Marriott, Skyler Miller, Evan Phelps, Kyle Snyder, Jonathan Sutyak, Brett Weiss. Contact Information: feedback@classicgamer.com. Products, logos, screenshots, etc. named in these pages are tradenames or trademarks of their respective companies. Any use of copyrighted material is covered under the "fair use" doctrine. Classic Gamer Magazine and its staff are not affiliated with the companies or products covered.

Contents: Cover Story: The Pac-Man Legacy; Staff; Classic Gaming Expo 2010; Nintendo @ E3; Batter Up! A Visual History of NES Baseball; Super NES vs. Sega Genesis; Bringing the Past Back to Life; It's War! Classics vs. Remakes; Five Atari 2600 Games Worse Than E.T.; Podcast Review: RetroGaming Roundup; Revenge of the Birds; Shovelware Alert! Astro Invaders; Pixel Memories; Prince of Persia: The Forgotten Sands; Arcade ... To Go; Another Trip Inside Haunted House; 3D Dot Game Heroes Review; What's New in Arcades?; Words from the Weiss; Scott Pilgrim vs. CGM; Happy Birthday Pac-Man!

Staff: Mark Androvich has written over 13 video game strategy guides, been a contributor to Hardcore Magazine, reviews writer and editor for PSE2 Magazine, and the first U.S.

The Rise and Infiltration of Pac-Man and Street Fighter

Portland State University, PDXScholar. University Honors Theses, University Honors College, 2-27-2020. The Rise and Infiltration of Pac-Man and Street Fighter, by Angelic Phan, Portland State University. Follow this and additional works at: https://pdxscholar.library.pdx.edu/honorstheses. Part of the Computer Sciences Commons, and the Marketing Commons. Let us know how access to this document benefits you.

Recommended Citation: Phan, Angelic, "The Rise and Infiltration of Pac-Man and Street Fighter" (2020). University Honors Theses. Paper 829. https://doi.org/10.15760/honors.848

This Thesis is brought to you for free and open access. It has been accepted for inclusion in University Honors Theses by an authorized administrator of PDXScholar. Please contact us if we can make this document more accessible: [email protected].

An undergraduate honors thesis submitted in partial fulfillment of the requirements for the degree of Bachelor of Science in University Honors and Computer Science. Thesis Advisor: Ellie Harmon, Ph.D. Portland State University, 2020.

Table of Contents: 1. Introduction; 2. Discourse Community; 3. Methodology; 4. An Introduction to the Two Games; 4.1 Pac-Man; 4.2 Street Fighter; 5. Elements of Success; 5.1 The Revolution; 5.1.1 Pac-Man: Expanding the Scope of the Video Game Industry; 5.1.2 Street Fighter: Contributing to the Advancement of Fighting Games; 5.2 Gameplay Mechanics; 5.2.1 Pac-Man: A Simple and Cute Game to Learn, but Hard to Master; 5.2.2 Street Fighter: A Simple Concept, but Complex Gameplay; 5.3 Relatability and Involvement; 5.3.1 Pac-Man: A Reflection of Ourselves; 5.3.2 Street Fighter: A Community of Fighters; 6.

45 Years of Arcade Gaming

Old School Gamer Magazine, Issue #2, January 2018. www.oldschoolgamermagazine.com. Midwest Gaming Classic: midwestgamingclassic.com. CTGamerCon: ctgamercon.com.

Contents: Event Update: Portland Classic Gaming Expo, by Ryan Burger; We Dropped By: Old School Pinball and Arcade in Grimes, IA, by Ryan Burger; The Walter Day Report: When President Ronald Reagan Almost Came To Twin Galaxies, by Walter Day; News: 2018 Old School Event Calendar, by Ryan Burger; Review: Nintendo 64 Anthology, by Kelton Shiffer; We Stopped By: A Gamer's Paradise in Las Vegas, by Old School Gamer Staff; Game and Market Watch, by Dan Loosen; Event Update: Free Play Florida, by Old School Gamer Staff; We Stopped By: The Pinball Hall of Fame, by Old School Gamer Staff; Michael Thomasson's Just 4 Qix: How High Can You Get?, by Michael Thomasson; King of Kong/Ottumwa, IA: Where It All Began, by Shawn Paul Jones + Walter Day; King of Kong/Ottumwa, IA: King of Kong Movie Review, by Brad Feingold; Brett's Bargain Bin: Donkey Kong and Beauty and the Beast, by Old School Gamer Staff; Feature: 45 Years of Arcade Gaming: 1980-1983, by Adam Pratt; The Game Scholar: The Nintendo Odyssey??, by Leonard Herman; Review: I Didn't Know My Retro Console Could Do That!, by Old School Gamer Staff; Feature: Inside the Play Station, Enter the Dragon, by Antoine Clerc-Renaud; Feature: Controlling the Dragon, by Antoine Clerc-Renaud; PureGaming.org Info: Playstation 1 Pricer, by PureGaming.org.

Masthead: Publisher: Ryan Burger. Business Manager: Aaron Burger. Further roles listed: Design Director, Design Assistant, Art Director, Circulation Manager, Issue Writers, Editorial Board. Names listed: Kelton Shiffer, Jacy Leopold, Ryan Burger, Michael Thomasson, Antoine Clerc-Renaud, Brett Weiss, Marc Burger, Walter Day, Brad Feingold, Todd Friedman, Dan Loosen, Thor Thorvaldson, Leonard Herman, Doc Mack, Billy Mitchell, Kitty Harr, Shawn Paul Jones, Adam Pratt.

How to reach Old School Gamer: Tel / Fax: 515-986-3344. Web: www.oldschoolgamermagazine.com. Postage paid at Grimes, IA and additional mailing locations.

Pac-Man's Influence on Capitalism Revealed in New Book 18 September 2018

18 September 2018. New research into one of the most influential videogame characters of all time reveals as much about contemporary capitalism as it does about the history of gaming. From consumption to addiction, and from dance music to the welfare state, the work by Dr. Alex Wade at Birmingham City University offers a guide through the maze of western society through the lens of Pac-Man. Published by Zero Books, The Pac-Man Principle: A User's Guide to Capitalism, focuses on the iconic [...] Mario.

Although iconic, Pac-Man has not been subject to sustained critical analysis until now. Dr. Wade's book helps to fill that gap, providing an extensive but accessible analysis of the influence of Pac-Man on the way that we live in contemporary western societies. Beginning with Pac-Man's origins (including dispelling the urban myth around a pizza slice being an inspiration to the character's origins), Wade reveals why Pac-Man was as appealing to women as well as men and how its maze structure pulls on classical stories from the Minotaur to "Hansel and Gretel."

Dr. Alex Wade, Senior Research Fellow, Birmingham City University, said: "Pac-Man arises primarily from consumption and, as we all need to eat, it's easy to see why the traditional distinctions between work and play, private and public, black and white, and male and female do not apply to this character. Despite this seemingly diverse approach to gaming, the pursuit of equality through the pleasure principle of consumption is also good for profits.

"My research also focuses on Pac-Man's antagonists, the ghosts.

UNIVERSITY of HERTFORDSHIRE Faculty of Science Technology and Creative Arts

University of Hertfordshire, Faculty of Science Technology and Creative Arts. Modular BSc Honours in Computer Science. 6COM0282: Computer Science Project, Final Report, April 2014. Artificially Intelligent Pac-Man. A M Waterhouse, 10219812. Supervised by: Neil Davey.

Table of Contents. Attached below is a table of contents to aid navigation throughout this project. The project has been sectioned according to the steps of the System Life Cycle, starting with an introduction and abstract behind the project. Abstract; Acknowledgements; 1 Introduction to the Project; 1.1 Background of NetLogo and Intelligent Agents; 2. Why Do We Play Games?; 2.1 What Is a Game?; 2.2 Why Do We Play?; 2.3 Maslow's Hierarchy of Needs

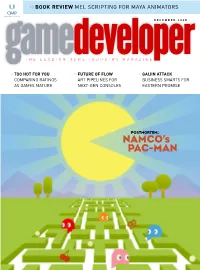

Game Developer's Editors Have Written a Third-Party Postmortem of the Entire Franchise, Trying to Pin Down Pac-Man's Continuing Appeal

Game Developer: The Leading Game Industry Magazine, December 2005, Volume 12, Number 11. Display until January 16, 2006. Cover lines: Book Review: MEL Scripting for Maya Animators; Too Hot for You: Comparing Ratings as Games Mature; Future of Flow: Art Pipelines for Next-Gen Consoles; Gaijin Attack: Business Smarts for Eastern Promise; Postmortem: Namco's Pac-Man.

Contents, Features. Rated and Willing: As video game violence turns more and more heads as a hot-button issue, it has become clear that the majority of the anti-video game lobby, and indeed a portion of the development community, is under-informed about just how the ratings systems work for games. In this feature, we dissect the ratings systems across four major territories (the U.S., the U.K., Germany, and Australia) to give a better impression of how the ratings boards work to keep games in the right hands.

Video Game Creators

Copyright © 2018 by Duo Press, LLC. All rights reserved. No portion of this book may be reproduced in any form or by any means, electronic or mechanical, including photocopying, recording, or by any information storage and retrieval system now known or hereafter invented without written permission from the publisher. Library of Congress Cataloging-in-Publication Data available upon request. ISBN: 978-1-947458-22-2. duopress books are available at special discounts when purchased in bulk for sales promotions as well as for fund-raising or educational use. Special editions can be created to specification. Contact us at [email protected] for more information.

This book is an independent, unauthorized, and unofficial biography and account of the people involved in the development and history of video games and is not affiliated with, endorsed by, or sponsored by Activision Publishing Inc.; Amazon.com, Inc.; Ampex Data Systems Corporation; Apple Inc.; Atari, SA; BlackBerry Limited; Blizzard Entertainment, Inc.; British Broadcasting Corporation; Capcom Co., Ltd.; Chuck E. Cheese's; Commodore International; Dave & Buster's, Inc.; EA Maxis; Entertainment Software Rating Board; General Motors Company; Gillette; Google LLC; Kickstarter, PBC; KinderCare Education; Konami Holdings Corporation; LeapFrog Enterprises, Inc.; LucasArts Entertainment Company, LLC; Magnavox; Matchbox; Mattel, Inc.; Microsoft Corporation; Motorola, Inc.; Namco Limited; Niantic, Inc.; Nintendo Co., Ltd.; Nintendo of America; Nokia Oyj; Oculus VR, LLC; RadioShack; Rare; Rovio Entertainment Corporation; Sears, Roebuck, and Company; Sega Sammy Holdings Inc.; Sega Games Co., Ltd.; Sega of America; Skee-Ball; Sony Corporation; Square Enix Holdings Co., Ltd.; Taito Corporation; Tetris Holding; Time Warner, Inc.; Valve Corporation; WMS Industries, Inc.; World Almanac; Xerox Corporation; Yamaha Corporation; YouTube, LLC; or any other person or entity owning or controlling rights in their name, trademark, or copyrights.