Logic and the Foundations of Game and Decision Theory (LOFT 7)

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Uniqueness and Symmetry in Bargaining Theories of Justice

Philos Stud DOI 10.1007/s11098-013-0121-y Uniqueness and symmetry in bargaining theories of justice John Thrasher © Springer Science+Business Media Dordrecht 2013 Abstract For contractarians, justice is the result of a rational bargain. The goal is to show that the rules of justice are consistent with rationality. The two most important bargaining theories of justice are David Gauthier’s and those that use Nash’s bargaining solution. I argue that both of these approaches are fatally undermined by their reliance on a symmetry condition. Symmetry is a substantive constraint, not an implication of rationality. I argue that using symmetry to generate uniqueness undermines the goal of bargaining theories of justice. Keywords David Gauthier · John Nash · John Harsanyi · Thomas Schelling · Bargaining · Symmetry Throughout the last century and into this one, many philosophers modeled justice as a bargaining problem between rational agents. Even those who did not explicitly use a bargaining problem as their model, most notably Rawls, incorporated many of the concepts and techniques from bargaining theories into their understanding of what a theory of justice should look like. This allowed them to use the powerful tools of game theory to justify their various theories of distributive justice. The debates between partisans of different theories of distributive justice have tended to be over the respective benefits of each particular bargaining solution and whether or not the solution to the bargaining problem matches our pre-theoretical intuitions about justice. There is, however, a more serious problem that has effectively been ignored since economists originally J. -

Implementation Theory*

Chapter 5 IMPLEMENTATION THEORY* ERIC MASKIN Institute for Advanced Study, Princeton, NJ, USA TOMAS SJÖSTRÖM Department of Economics, Pennsylvania State University, University Park, PA, USA
Contents
Abstract
Keywords
1. Introduction
2. Definitions
3. Nash implementation
3.1. Definitions
3.2. Monotonicity and no veto power
3.3. Necessary and sufficient conditions
3.4. Weak implementation
3.5. Strategy-proofness and rich domains of preferences
3.6. Unrestricted domain of strict preferences
3.7. Economic environments
3.8. Two agent implementation
4. Implementation with complete information: further topics
4.1. Refinements of Nash equilibrium
4.2. Virtual implementation
4.3. Mixed strategies
4.4. Extensive form mechanisms
4.5. Renegotiation
4.6. The planner as a player
5. Bayesian implementation
5.1. Definitions
5.2. Closure
5.3. Incentive compatibility
5.4. Bayesian monotonicity
5.5. Non-parametric, robust and fault tolerant implementation
6. Concluding remarks
References
* We are grateful to Sandeep Baliga, Luis Corchón, Matt Jackson, Byungchae Rhee, Ariel Rubinstein, Ilya Segal, Hannu Vartiainen, Masahiro Watabe, and two referees, for helpful comments. Handbook of Social Choice and Welfare, Volume 1, Edited by K.J. Arrow, A.K. Sen and K. Suzumura © 2002 Elsevier Science B.V. All rights reserved
Abstract The implementation problem is the problem of designing a mechanism (game form) such that the equilibrium outcomes satisfy a criterion of social optimality embodied in a social choice rule. -

Matchings and Games on Networks

Matchings and games on networks by Linda Farczadi A thesis presented to the University of Waterloo in fulfilment of the thesis requirement for the degree of Doctor of Philosophy in Combinatorics and Optimization Waterloo, Ontario, Canada, 2015 © Linda Farczadi 2015 Author's Declaration I hereby declare that I am the sole author of this thesis. This is a true copy of the thesis, including any required final revisions, as accepted by my examiners. I understand that my thesis may be made electronically available to the public. Abstract We investigate computational aspects of popular solution concepts for different models of network games. In chapter 3 we study balanced solutions for network bargaining games with general capacities, where agents can participate in a fixed but arbitrary number of contracts. We fully characterize the existence of balanced solutions and provide the first polynomial time algorithm for their computation. Our methods use a new idea of reducing an instance with general capacities to an instance with unit capacities defined on an auxiliary graph. This chapter is an extended version of the conference paper [32]. In chapter 4 we propose a generalization of the classical stable marriage problem. In our model the preferences on one side of the partition are given in terms of arbitrary binary relations, that need not be transitive nor acyclic. This generalization is practically well-motivated, and as we show, encompasses the well studied hard variant of stable marriage where preferences are allowed to have ties and to be incomplete. Our main result shows that deciding the existence of a stable matching in our model is NP-complete. -

John Von Neumann Between Physics and Economics: a Methodological Note

Review of Economic Analysis 5 (2013) 177–189 1973-3909/2013177 John von Neumann between Physics and Economics: A methodological note LUCA LAMBERTINI∗† University of Bologna A methodological discussion is proposed, aiming at illustrating an analogy between game theory in particular (and mathematical economics in general) and quantum mechanics. This analogy relies on the equivalence of the two fundamental operators employed in the two fields, namely, the expected value in economics and the density matrix in quantum physics. I conjecture that this coincidence can be traced back to the contributions of von Neumann in both disciplines. Keywords: expected value, density matrix, uncertainty, quantum games JEL Classifications: B25, B41, C70 1 Introduction Over the last twenty years, a growing amount of attention has been devoted to the history of game theory. Among other reasons, this interest can largely be justified on the basis of the Nobel prize to John Nash, John Harsanyi and Reinhard Selten in 1994, to Robert Aumann and Thomas Schelling in 2005 and to Leonid Hurwicz, Eric Maskin and Roger Myerson in 2007 (for mechanism design). However, the literature dealing with the history of game theory mainly adopts an inner perspective, i.e., an angle that allows us to reconstruct the developments of this sub-discipline under the general headings of economics. My aim is different, to the extent that I intend to propose an interpretation of the formal relationships between game theory (and economics) and the hard sciences. Strictly speaking, this view is not new, as the idea that von Neumann’s interest in mathematics, logic and quantum mechanics is critical to our understanding of the genesis of ∗I would like to thank Jurek Konieczny (Editor), an anonymous referee, Corrado Benassi, Ennio Cavazzuti, George Leitmann, Massimo Marinacci, Stephen Martin, Manuela Mosca and Arsen Palestini for insightful comments and discussion. -

Koopmans in the Soviet Union

Koopmans in the Soviet Union A travel report of the summer of 1965 Till Düppe December 2013 Abstract: Travelling is one of the oldest forms of knowledge production combining both discovery and contemplation. Tjalling C. Koopmans, research director of the Cowles Foundation of Research in Economics, the leading U.S. center for mathematical economics, was the first U.S. economist after World War II who, in the summer of 1965, travelled to the Soviet Union for an official visit of the Central Economics and Mathematics Institute of the Soviet Academy of Sciences. Koopmans left with the hope to learn from the experiences of Soviet economists in applying linear programming to economic planning. Would his own theories, as discovered independently by Leonid V. Kantorovich, help increase allocative efficiency in a socialist economy? Koopmans even might have envisioned a research community across the iron curtain. Yet he came home with the discovery that learning about Soviet mathematical economists might be more interesting than learning from them. On top of that, he found the Soviet scene trapped in the same deplorable situation he knew all too well from home: that mathematicians are the better economists. Key-Words: mathematical economics, linear programming, Soviet economic planning, Cold War, Central Economics and Mathematics Institute, Tjalling C. Koopmans, Leonid V. Kantorovich. Word-Count: 11.000 Author note: Assistant Professor, Department of economics, Université du Québec à Montréal, Pavillon des Sciences de la gestion, 315, Rue Sainte-Catherine Est, Montréal (Québec), H2X 3X2, Canada, e-mail: [email protected]. A former version has been presented at the conference “Social and human sciences on both sides of the ‘iron curtain’”, October 17-19, 2013, in Moscow. -

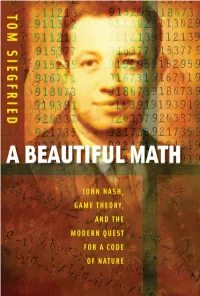

A Beautiful Math : John Nash, Game Theory, and the Modern Quest for a Code of Nature / Tom Siegfried

A BEAUTIFUL MATH JOHN NASH, GAME THEORY, AND THE MODERN QUEST FOR A CODE OF NATURE TOM SIEGFRIED JOSEPH HENRY PRESS Washington, D.C. Joseph Henry Press • 500 Fifth Street, NW • Washington, DC 20001 The Joseph Henry Press, an imprint of the National Academies Press, was created with the goal of making books on science, technology, and health more widely available to professionals and the public. Joseph Henry was one of the founders of the National Academy of Sciences and a leader in early American science. Any opinions, findings, conclusions, or recommendations expressed in this volume are those of the author and do not necessarily reflect the views of the National Academy of Sciences or its affiliated institutions. Library of Congress Cataloging-in-Publication Data Siegfried, Tom, 1950- A beautiful math : John Nash, game theory, and the modern quest for a code of nature / Tom Siegfried. — 1st ed. p. cm. Includes bibliographical references and index. ISBN 0-309-10192-1 (hardback) — ISBN 0-309-65928-0 (pdfs) 1. Game theory. I. Title. QA269.S574 2006 519.3—dc22 2006012394 Copyright 2006 by Tom Siegfried. All rights reserved. Printed in the United States of America. Preface Shortly after 9/11, a Russian scientist named Dmitri Gusev proposed an explanation for the origin of the name Al Qaeda. He suggested that the terrorist organization took its name from Isaac Asimov’s famous 1950s science fiction novels known as the Foundation Trilogy. After all, he reasoned, the Arabic word “qaeda” means something like “base” or “foundation.” And the first novel in Asimov’s trilogy, Foundation, apparently was titled “al-Qaida” in an Arabic translation. -

Nine Takes on Indeterminacy, with Special Emphasis on the Criminal Law

University of Pennsylvania Carey Law School Penn Law: Legal Scholarship Repository Faculty Scholarship at Penn Law 2015 Nine Takes on Indeterminacy, with Special Emphasis on the Criminal Law Leo Katz University of Pennsylvania Carey Law School Follow this and additional works at: https://scholarship.law.upenn.edu/faculty_scholarship Part of the Criminal Law Commons, Law and Philosophy Commons, and the Public Law and Legal Theory Commons Repository Citation Katz, Leo, "Nine Takes on Indeterminacy, with Special Emphasis on the Criminal Law" (2015). Faculty Scholarship at Penn Law. 1580. https://scholarship.law.upenn.edu/faculty_scholarship/1580 This Article is brought to you for free and open access by Penn Law: Legal Scholarship Repository. It has been accepted for inclusion in Faculty Scholarship at Penn Law by an authorized administrator of Penn Law: Legal Scholarship Repository. For more information, please contact [email protected]. ARTICLE NINE TAKES ON INDETERMINACY, WITH SPECIAL EMPHASIS ON THE CRIMINAL LAW LEO KATZ†
INTRODUCTION
I. TAKE 1: THE COGNITIVE THERAPY PERSPECTIVE
II. TAKE 2: THE MORAL INSTINCT PERSPECTIVE
III. TAKE 3: THE CORE–PENUMBRA PERSPECTIVE
IV. TAKE 4: THE SOCIAL CHOICE PERSPECTIVE
V. TAKE 5: THE ANALOGY PERSPECTIVE
VI. TAKE 6: THE INCOMMENSURABILITY PERSPECTIVE
VII. TAKE 7: THE IRRATIONALITY-OF-DISAGREEMENT PERSPECTIVE
VIII. TAKE 8: THE SMALL WORLD/LARGE WORLD PERSPECTIVE
IX. TAKE 9: THE RESIDUALIST PERSPECTIVE
CONCLUSION
INTRODUCTION The claim that legal disputes have no determinate answer is an old one.
The worry is one that assails every first-year law student at some point. -

Matching with Renegotiation

MATCHING WITH RENEGOTIATION AKIHIKO MATSUI AND MEGUMI MURAKAMI Abstract. The present paper studies the deferred acceptance algorithm (DA) with renegotiation. After DA assigns objects to players, the players may renegotiate. The final allocation is prescribed by a renegotiation mapping, of which value is a strong core given an allocation induced by DA. We say that DA is strategy-proof with renegotiation if for any true preference profile and any (potentially false) report, an optimal strategy for each player is to submit the true preference. We then have the first theorem: DA is not strategy-proof with renegotiation for any priority structure. The priority structure is said to be unreversed if there are two players who have higher priority than the others at any object, and the other players are aligned in the same order across objects. Then, the second theorem states that the DA rule is implemented in Nash equilibrium with renegotiation, or equivalently, any Nash equilibrium of DA with renegotiation induces the same allocation as the DA rule, if and only if the priority structure is unreversed. Keywords: deferred acceptance algorithm (DA), indivisible object, renegotiation mapping, strong core, strategy-proofness with renegotiation, Nash implementation with renegotiation, unreversed priority JEL Classification Numbers: C78, D47 1. Introduction Deferred acceptance algorithm (DA) has been studied since the seminal paper by Gale and Shapley (1962). A class of problems investigated therein includes college admissions and office assignments. In these problems, the centralized mechanism assigns objects to players based on their preferences over objects and priorities for the objects over the players. Two notable properties of DA are stability and strategy-proofness (Gale & Shapley, 1962; Dubins & Freedman, 1981; Roth, 1982). -
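For readers unfamiliar with DA, the assignment step described in the snippet above can be sketched as follows. This is a minimal illustration of plain player-proposing deferred acceptance with one copy of each object, not the paper's model: it has no renegotiation stage, and the function name, the data layout, and the three-player example are assumptions made here for illustration.

```python
def deferred_acceptance(preferences, priorities):
    """Player-proposing deferred acceptance, one copy of each object.

    preferences: dict player -> list of objects, most preferred first
    priorities:  dict object -> list of players, highest priority first
    Assumes every player who may propose to an object appears in that
    object's priority list (an assumption of this sketch).
    """
    # Lower rank number means higher priority at that object.
    rank = {obj: {p: i for i, p in enumerate(order)}
            for obj, order in priorities.items()}
    next_choice = {p: 0 for p in preferences}  # index of next object to try
    holder = {}                                # object -> tentative holder
    free = list(preferences)                   # players without an object

    while free:
        p = free.pop()
        if next_choice[p] >= len(preferences[p]):
            continue  # p has been rejected everywhere and stays unassigned
        obj = preferences[p][next_choice[p]]
        next_choice[p] += 1
        current = holder.get(obj)
        if current is None:
            holder[obj] = p                    # object was free: hold p
        elif rank[obj][p] < rank[obj][current]:
            holder[obj] = p                    # p displaces the current holder
            free.append(current)
        else:
            free.append(p)                     # p is rejected and tries again

    return {p: obj for obj, p in holder.items()}


# Hypothetical example data, not taken from the paper.
prefs = {"a": ["x", "y", "z"], "b": ["x", "z", "y"], "c": ["y", "x", "z"]}
prios = {"x": ["b", "a", "c"], "y": ["a", "c", "b"], "z": ["c", "b", "a"]}
assignment = deferred_acceptance(prefs, prios)
```

Acceptances are only tentative until no player is left proposing, which is what "deferred" refers to. In this example player c ends up with its third choice because a and b hold higher priority at c's preferred objects.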

Handbook of Game Theory, Vol. 3 Edited by Robert Aumann and Sergiu Hart, Elsevier, New York, 2002

Games and Economic Behavior 46 (2004) 215–218 www.elsevier.com/locate/geb Handbook of Game Theory, Vol. 3 Edited by Robert Aumann and Sergiu Hart, Elsevier, New York, 2002. This is the final volume of three that organize and summarize the game theory literature, a task that becomes more necessary as the field grows. In the 1960s the main actors could have met in a large room, but today there are several national conferences with concurrent sessions, and the triennial World Congress. MathSciNet lists almost 700 pieces per year with game theory as their primary or secondary classification. The problem is not quite that the yearly output is growing—this number is only a bit higher than two decades ago— but simply that it is accumulating. No one can keep up with the whole literature, so we have come to recognize these volumes as immensely valuable. They are on almost everyone’s shelf. The initial four chapters discuss the basics of the Nash equilibrium. Van Damme focuses on the mathematical properties of refinements, and Hillas and Kohlberg work up to the Nash equilibrium from below, discussing its rationale with respect to dominance concepts, rationalizability, and correlated equilibria. Aumann and Heifetz treat the foundations of incomplete information, and Raghavan looks at the properties of two-person games that do not generalize. There is less overlap among the four chapters than one would expect, and it is valuable to get these parties’ views on the basic questions. It strikes me as lively and healthy that after many years the field continues to question and sometimes modify its foundations. -

Whither Game Theory? Towards a Theory of Learning in Games

Whither Game Theory? Towards a Theory of Learning in Games The MIT Faculty has made this article openly available. Please share how this access benefits you. Your story matters. Citation Fudenberg, Drew, and David K. Levine. “Whither Game Theory? Towards a Theory of Learning in Games.” Journal of Economic Perspectives, vol. 30, no. 4, Nov. 2016, pp. 151–70. As Published http://dx.doi.org/10.1257/jep.30.4.151 Publisher American Economic Association Version Final published version Citable link http://hdl.handle.net/1721.1/113940 Terms of Use Article is made available in accordance with the publisher's policy and may be subject to US copyright law. Please refer to the publisher's site for terms of use. Journal of Economic Perspectives—Volume 30, Number 4—Fall 2016—Pages 151–170 Whither Game Theory? Towards a Theory of Learning in Games Drew Fudenberg and David K. Levine When we were graduate students at MIT (1977–81), the only game theory mentioned in the first-year core was static Cournot (1838) oligopoly, although Eric Maskin taught an advanced elective on game theory and mechanism design. Just ten years later, game theory had invaded mainstream economic research and graduate education, and instructors had a choice of three graduate texts: Fudenberg and Tirole (1991), Osborne and Rubinstein (1994), and Myerson (1997). Game theory has been successful in economics because it works empirically in many important circumstances. Many of the theoretical applications in the 1980s involved issues in industrial organization, like commitment and timing in patent races and preemptive investment. -

Chronology of Game Theory

Chronology of Game Theory http://www.econ.canterbury.ac.nz/personal_pages/paul_walker/g... A Chronology of Game Theory by Paul Walker September 2012
0-500AD The Babylonian Talmud is the compilation of ancient law and tradition set down during the first five centuries A.D. which serves as the basis of Jewish religious, criminal and civil law. One problem discussed in the Talmud is the so-called marriage contract problem: a man has three wives whose marriage contracts specify that in the case of his death they receive 100, 200 and 300 respectively. The Talmud gives apparently contradictory recommendations. Where the man dies leaving an estate of only 100, the Talmud recommends equal division. However, if the estate is worth 300 it recommends proportional division (50,100,150), while for an estate of 200, its recommendation of (50,75,75) is a complete mystery. This particular Mishna has baffled Talmudic scholars for two millennia. In 1985, it was recognised that the Talmud anticipates the modern theory of cooperative games. Each solution corresponds to the nucleolus of an appropriately defined game.
1713 In a letter dated 13 November 1713 Francis Waldegrave provided the first known minimax mixed strategy solution to a two-person game. Waldegrave wrote the letter, about a two-person version of the card game le Her, to Pierre-Rémond de Montmort who in turn wrote to Nicolas Bernoulli, including in his letter a discussion of the Waldegrave solution. -
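The three Talmudic divisions quoted above can be reproduced with a short computation. The sketch below does not compute a nucleolus directly; it uses the division rule usually credited to Aumann and Maschler's 1985 analysis (often called the Talmud rule), which is known to coincide with the nucleolus of the associated bankruptcy game. The function names are chosen here for illustration only.

```python
def constrained_equal_awards(claims, estate):
    """Split `estate` as equally as possible without exceeding any claim."""
    awards = [0.0] * len(claims)
    remaining = float(estate)
    # Serve the smallest claims first so that capped shares are redistributed.
    order = sorted(range(len(claims)), key=lambda i: claims[i])
    for k, i in enumerate(order):
        equal_share = remaining / (len(claims) - k)
        awards[i] = min(claims[i], equal_share)
        remaining -= awards[i]
    return awards


def talmud_rule(claims, estate):
    """Talmud rule for an estate no larger than the sum of the claims.

    Small estates: share gains equally, capped at half of each claim.
    Large estates: share losses equally, capped at half of each claim.
    """
    half = [c / 2 for c in claims]
    if estate <= sum(half):
        return constrained_equal_awards(half, estate)
    losses = constrained_equal_awards(half, sum(claims) - estate)
    return [c - loss for c, loss in zip(claims, losses)]


claims = [100, 200, 300]            # the three marriage contracts
for estate in (100, 200, 300):
    print(estate, talmud_rule(claims, estate))
```

With these claims the rule gives equal division for an estate of 100, (50, 75, 75) for 200, and (50, 100, 150) for 300, matching the recommendations in the entry.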

Perfect Bidder Collusion Through Bribe and Request*

Perfect bidder collusion through bribe and request* Jingfeng Lu† Zongwei Lu ‡ Christian Riis§ May 31, 2021 Abstract We study collusion in a second-price auction with two bidders in a dynamic environment. One bidder can make a take-it-or-leave-it collusion proposal, which consists of both an offer and a request of bribes, to the opponent. We show that there always exists a robust equilibrium in which the collusion success probability is one. In the equilibrium, for each type of initiator the expected payoff is generally higher than the counterpart in any robust equilibria of the single-option model (Eső and Schummer (2004)) and any other separating equilibria in our model. Keywords: second-price auction, collusion, multidimensional signaling, bribe JEL codes: D44, D82 arXiv:1912.03607v2 [econ.TH] 28 May 2021 *We are grateful to the editors in charge and an anonymous reviewer for their insightful comments and suggestions, which greatly improved the quality of our paper. Any remaining errors are our own. †Department of Economics, National University of Singapore. Email: [email protected]. ‡School of Economics, Shandong University. Email: [email protected]. §Department of Economics, BI Norwegian Business School. Email: [email protected]. 1 Introduction Under standard assumptions, auctions are a simple yet effective economic institution that allows the owner of a scarce resource to extract economic rents from buyers. Collusion among bidders, on the other hand, can ruin the rents and hence should be one of the main issues that are always kept in the minds of auction designers with a revenue-maximizing objective.