The Cell Broadband Engine: Exploiting Multiple Levels of Parallelism in a Chip Multiprocessor



Recommended publications


Wind Rose Data Comes in the Form of >200,000 Wind Rose Images

Making Wind Speed and Direction Maps. Rich Stromberg, Alaska Energy Authority, [email protected]/907-771-3053. 6/30/2011, Wind Direction Maps. Wind rose data comes in the form of >200,000 wind rose images across Alaska. Wind rose data is quantified in very large Excel™ spreadsheets for each region of the state. • Fields: X Y X_1 Y_1 FILE FREQ1 FREQ2 FREQ3 FREQ4 FREQ5 FREQ6 FREQ7 FREQ8 FREQ9 FREQ10 FREQ11 FREQ12 FREQ13 FREQ14 FREQ15 FREQ16 SPEED1 SPEED2 SPEED3 SPEED4 SPEED5 SPEED6 SPEED7 SPEED8 SPEED9 SPEED10 SPEED11 SPEED12 SPEED13 SPEED14 SPEED15 SPEED16 POWER1 POWER2 POWER3 POWER4 POWER5 POWER6 POWER7 POWER8 POWER9 POWER10 POWER11 POWER12 POWER13 POWER14 POWER15 POWER16 WEIBC1 WEIBC2 WEIBC3 WEIBC4 WEIBC5 WEIBC6 WEIBC7 WEIBC8 WEIBC9 WEIBC10 WEIBC11 WEIBC12 WEIBC13 WEIBC14 WEIBC15 WEIBC16 WEIBK1 WEIBK2 WEIBK3 WEIBK4 WEIBK5 WEIBK6 WEIBK7 WEIBK8 WEIBK9 WEIBK10 WEIBK11 WEIBK12 WEIBK13 WEIBK14 WEIBK15 WEIBK16. The data set is thinned down to wind power density. • Fields: X Y POWER1 POWER2 POWER3 POWER4 POWER5 POWER6 POWER7 POWER8 POWER9 POWER10 POWER11 POWER12 POWER13 POWER14 POWER15 POWER16 • POWER1 is the wind power density coming from the north (0 degrees), POWER2 is wind power from 22.5 degrees, … POWER9 is south (180 degrees), etc. Spreadsheet calculations: X Y POWER1 POWER2 POWER3 POWER4 POWER5 POWER6 POWER7 POWER8 POWER9 POWER10 POWER11 POWER12 POWER13 POWER14 POWER15 POWER16 Max Wind Dir Prim 2nd Wind Dir Sec -132.7365 54.4833 0.643 0.767 1.911 4.083
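The sector convention in the excerpt above (POWER1 is north at 0 degrees, each later field is 22.5 degrees further round, POWER9 is south at 180 degrees) can be sketched in Python. This is a minimal illustration, not the authors' spreadsheet logic: the function names and the sample values are made up, and reading the "Max", "Wind Dir Prim", "2nd" and "Wind Dir Sec" columns as the largest and second-largest sector powers with their bearings is an assumption.

```python
SECTOR_WIDTH_DEG = 22.5  # 360 degrees split into 16 sectors, per the excerpt


def sector_direction(index):
    """Bearing in degrees for a 1-based POWER field index (POWER1 = north = 0)."""
    if not 1 <= index <= 16:
        raise ValueError("index must be between 1 and 16")
    return (index - 1) * SECTOR_WIDTH_DEG


def primary_and_secondary(powers):
    """Return ((max_power, bearing), (second_power, bearing)).

    `powers` holds the 16 values POWER1..POWER16 in order. Treating the
    spreadsheet columns as "largest and second-largest sector" is an
    assumption made for this sketch.
    """
    if len(powers) != 16:
        raise ValueError("expected 16 sector values")
    # Rank 0-based sector positions from highest to lowest power density.
    ranked = sorted(range(16), key=lambda i: powers[i], reverse=True)
    first, second = ranked[0], ranked[1]
    return (
        (powers[first], sector_direction(first + 1)),
        (powers[second], sector_direction(second + 1)),
    )


# Hypothetical values for illustration only; not taken from the Alaska data set.
example = [1.0, 2.0, 3.0, 9.0, 4.0, 1.0, 0.5, 0.5,
           7.5, 2.0, 1.0, 1.0, 0.5, 0.5, 0.5, 0.5]
primary, secondary = primary_and_secondary(example)
print(primary)    # (9.0, 67.5)
print(secondary)  # (7.5, 180.0)
```

In the made-up row, POWER4 is the largest value, so the primary direction is 67.5 degrees; POWER9 is next, giving a secondary direction of 180 degrees (south).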

Quietrock Case Study | Sony Computer Entertainment America

Studios & Entertainment: Quiet® Success Story. Project: Sony Computer Entertainment America, LLC. Location: San Mateo, California. General Contractor: Magnum Drywall. Products: QuietRock® FLAME CURB®. ‘Sh-h-h-h!’ PlayStation® 4 game-making in progress! New sound studios spearhead renovations for Sony Computer Entertainment America, with an acoustical assist from Magnum Drywall and PABCO® Gypsum’s QuietRock®. San Mateo, California: The Sound of Silence means a lot to engineers producing video games for Sony PlayStation®4 (PS4™) enthusiasts. At Sony Computer Entertainment America LLC headquarters in San Mateo, California, producers’ tolerance for intrusive noise from beyond studio walls is zero. Only the intense action on-screen matters while creating audio effects that dramatize, punctuate and heighten the deeply immersive experience for video gamers. In these studios Sony Entertainment sound engineers make the most of that capability while keeping the PlayStation® pipeline full for weekly launches of new games. The gaming experience draws players to PS4™ and its predecessor PlayStation® consoles, and PS4™ elevates 3D excitement ever higher. It’s the world’s most powerful games console, with a Graphics Processing Unit (GPU) able to perform 1.843 teraflops*. “When sound is important, we prefer to submit QuietRock as a good solution for the architect and the owner. There’s nothing else on the market that’s comparable. I even used it in my own home movie theatre.” – Gary Robinson, Owner, Magnum Drywall. what the job demands. Visit www.QuietRock.com or call 1.800.797.8159 for more information. On top of its introductory lineup in November 2013, over 180 PS4™ games are in development, including “Be the Batman”, the epic conclusion of the “Batman: Arkham Knight” trilogy, that is due out in June 2015.

History of AI at IBM and How IBM Is Leveraging Watson for Intellectual Property

History of AI at IBM and How IBM is Leveraging Watson for Intellectual Property. 2019 ECC Conference, June 9-11 at Marist College. IBM Intellectual Property Management Solutions, © 2017-2019 IBM Corporation. Who are We? Tom Fleischman ([email protected]): At IBM for 37 years. I currently work in the Technology and Intellectual Property organization, a combination of CHQ and Research. I have worked as an engineer in Procurement, Testing, MLC Packaging, and now T&IP. Currently Lead Architect on IP Advisor with Watson, a Watson-based Patent and Intellectual Property Analytics tool. • Master Inventor • Number of patents filed ~ 24+ • Number of submissions in progress ~ 4+ • Consult/Educate outside companies on all things IP (from strategy to commercialization, including IP 101) • Technical background: Semiconductors, Computers, Programming/Software, Intellectual Property and Analytics. Sue Hallen ([email protected]): Is the manager of the Intellectual Property Management Solutions team in CHQ under the Technology and Intellectual Property group. Current OM for IP Advisor with Watson application, used internally and externally. Past Global Business Services in the PLM and Supply Chain practices. • Number of patents filed – 2 (2018) • Number of submissions in progress - 2 • Consult/Educate outside companies on all things IP (from strategy to commercialization, including IP 101) • Schaumburg SLE • Technical background: Registered Professional Engineer in Illinois, Structural Engineer by degree, lots of software development and implementation for PLM clients. How does IBM define AI? IBM refers to it as Augmented Intelligence. • Not artificial or meant to replace human thinking; it augments your work. AI Terminology: Machine Learning • Provides computers with the ability to continue learning without being pre-programmed.

USE CASE Requirements

Ref. Ares(2017)2745733 - 31/05/2017. USE CASE Requirements. Co-funded by the Horizon 2020 Framework Programme of the European Union. Deliverable number: D2.1. Deliverable title: USE CASE Requirements. Responsible author: CSI Piemonte. OPERA: LOw Power Heterogeneous Architecture for Next Generation of SmaRt Infrastructure and Platform in Industrial and Societal Applications. Grant agreement N.: 688386. Project ref. no: H2020 - 688386. Project acronym: OPERA. Project full name: LOw Power Heterogeneous Architecture for Next Generation of SmaRt Infrastructure and Platform in Industrial and Societal Applications. Starting date (dur.): 01/12/2015. Ending date: 30/11/2018. Project website: www.operaproject.eu. Workpackage N. | Title: WP2 | Low Power Computing Requirements and Innovation Engineering. Workpackage leader: ISMB. Deliverable N. | Title: D2.1 | USE CASE Requirements. Responsible author: Luca Scanavino – CSI Piemonte. Date of delivery (contractual): M10. Date of delivery (submitted): M10. Version | Status: V2.0 (update). Nature: R (Report). Dissemination level: PU (Public). Authors (partner): CSI PIEMONTE, DEPARTMENT DE L’ISERE, ISMB. VERSION MODIFICATION(S) DATE AUTHOR(S) Luca Scanavino – CSI Jean-Christophe 0.1 All Document 12/09/2016 Maisonobe – LD38 Pietro Ruiu – ISMB Alberto Scionti - ISMB Finalization of the 1.0 15/09/2016 Giulio URLINI (ST) document Update based on the Luca Scanavino – CSI feedback

List of Notable Handheld Game Consoles (Source

List of notable handheld game consoles (source: http://en.wikipedia.org/wiki/Handheld_game_console#List_of_notable_handheld_game_consoles)

* Milton Bradley Microvision (1979)
* Epoch Game Pocket Computer (1984) - Japanese only; not a success
* Nintendo Game Boy (1989) - First internationally successful handheld game console
* Atari Lynx (1989) - First backlit/color screen, first hardware capable of accelerated 3D drawing
* NEC TurboExpress (1990, Japan; 1991, North America) - Played HuCard (TurboGrafx-16/PC Engine) games, first console/handheld intercompatibility
* Sega Game Gear (1991) - Architecturally similar to Sega Master System, notable accessory firsts include a TV tuner
* Watara Supervision (1992) - First handheld with TV-OUT support; although the Super Game Boy was only a compatibility layer for the preceding Game Boy.
* Sega Mega Jet (1992) - No screen, made for Japan Air Lines (first handheld without a screen)
* Mega Duck/Cougar Boy (1993) - 4 level grayscale 2,7" LCD - Stereo sound - rare, sold in Europe and Brazil
* Nintendo Virtual Boy (1994) - Monochromatic (red only) 3D goggle set, only semi-portable; first 3D portable
* Sega Nomad (1995) - Played normal Sega Genesis cartridges, albeit at lower resolution
* Neo Geo Pocket (1996) - Unrelated to Neo Geo consoles or arcade systems save for name
* Game Boy Pocket (1996) - Slimmer redesign of Game Boy
* Game Boy Pocket Light (1997) - Japanese only backlit version of the Game Boy Pocket
* Tiger game.com (1997) - First touch screen, first Internet support (with use of sold-separately

Copyrighted Material

CHAPTER 1: MULTI- AND MANY-CORES, ARCHITECTURAL OVERVIEW FOR PROGRAMMERS. Lasse Natvig, Alexandru Iordan, Mujahed Eleyat, Magnus Jahre and Jorn Amundsen. 1.1 INTRODUCTION. 1.1.1 Fundamental Techniques. Parallelism has been used since the early days of computing to enhance performance. From the first computers to the most modern sequential processors (also called uniprocessors), the main concepts introduced by von Neumann [20] are still in use. However, the ever-increasing demand for computing performance has pushed computer architects toward implementing different techniques of parallelism. The von Neumann architecture was initially a sequential machine operating on scalar data with bit-serial operations [20]. Word-parallel operations were made possible by using more complex logic that could perform binary operations in parallel on all the bits in a computer word, and it was just the start of an adventure of innovations in parallel computer architectures. (Programming Multicore and Many-core Computing Systems, First Edition. Edited by Sabri Pllana and Fatos Xhafa. © 2017 John Wiley & Sons, Inc. Published 2017 by John Wiley & Sons, Inc.) Prefetching is a 'look-ahead technique' that was introduced quite early and is a way of parallelism that is used at several levels and in different components of a computer today. Both data and instructions are very often accessed sequentially. Therefore, when accessing an element (instruction or data) at address k, an automatic access to address k+1 will bring the element to where it is needed before it is accessed and thus eliminates or reduces waiting time.
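The next-address prefetching idea in the excerpt above can be illustrated with a toy Python model. This is a sketch under simplifying assumptions that are not in the source: the cache is an unbounded set, a prefetch completes instantly, and the function name `count_misses` is hypothetical.

```python
def count_misses(addresses, prefetch_next):
    """Count accesses that miss in a toy cache.

    When `prefetch_next` is True, every access to address k also brings
    address k + 1 into the cache, mimicking the look-ahead in the excerpt.
    Assumptions for this sketch: unbounded cache, zero prefetch latency.
    """
    cache = set()
    misses = 0
    for k in addresses:
        if k not in cache:
            misses += 1       # the element was not there when it was needed
            cache.add(k)
        if prefetch_next:
            cache.add(k + 1)  # automatic access to the next address
    return misses


sequential = list(range(100))     # addresses 0, 1, 2, ..., 99
strided = list(range(0, 100, 2))  # addresses 0, 2, 4, ..., 98

print(count_misses(sequential, prefetch_next=False))  # 100
print(count_misses(sequential, prefetch_next=True))   # 1
print(count_misses(strided, prefetch_next=True))      # 50
```

For the sequential stream only the first access misses, which matches the "eliminates or reduces waiting time" claim. For the stride-2 stream the k+1 prefetch never fetches an address that is later used, so every access still misses in this model.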

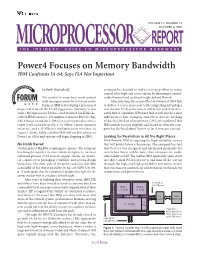

Power4 Focuses on Memory Bandwidth IBM Confronts IA-64, Says ISA Not Important

Microprocessor Report: The Insiders’ Guide to Microprocessor Hardware. Volume 13, Number 13, October 6, 1999. Power4 Focuses on Memory Bandwidth: IBM Confronts IA-64, Says ISA Not Important. By Keith Diefendorff. Not content to wrap sheet metal around Intel microprocessors for its future server business, IBM is developing a processor it hopes will fend off the IA-64 juggernaut. Speaking at this week’s Microprocessor Forum, chief architect Jim Kahle described IBM’s monster 170-million-transistor Power4 chip, which boasts two 64-bit 1-GHz five-issue superscalar cores, a triple-level cache hierarchy, a 10-GByte/s main-memory interface, and a 45-GByte/s multiprocessor interface, as Figure 1 shows. Kahle said that IBM will see first silicon on Power4 in 1Q00, and systems will begin shipping in 2H01. No Holds Barred: With Power4, IBM is targeting the high-reliability servers that will power future e-businesses. … company has decided to make a last-gasp effort to retain control of its high-end server silicon by throwing its considerable financial and technical weight behind Power4. After investing this much effort in Power4, if IBM fails to deliver a server processor with compelling advantages over the best IA-64 processors, it will be left with little alternative but to capitulate. If Power4 fails, it will also be a clear indication to Sun, Compaq, and others that are bucking IA-64, that the days of proprietary CPUs are numbered. But IBM intends to resist mightily, and, based on what the company has disclosed about Power4 so far, it may just succeed. Looking for Parallelism in All the Right Places

Big Blue in the Bottomless Pit: the Early Years of IBM Chile

Big Blue in the Bottomless Pit: The Early Years of IBM Chile. Eden Medina, Indiana University. In examining the history of IBM in Chile, this article asks how IBM came to dominate Chile’s computer market and, to address this question, emphasizes the importance of studying both IBM corporate strategy and Chilean national history. The article also examines how IBM reproduced its corporate culture in Latin America and used it to accommodate the region’s political and economic changes. Thomas J. Watson Jr. was skeptical when he first heard his father’s plan to create an international subsidiary. “We had endless opportunity and little risk in the US,” he wrote, “while it was hard to imagine us getting anywhere abroad. Latin America, for example seemed like a bottomless pit.”1 However, the senior Watson had a different sense of the potential for profit within the world market and believed that one day IBM’s sales abroad would surpass its growing domestic business. In 1949, he created the IBM World Trade Corporation to coordinate the company’s activities outside the US and appointed his younger son, Arthur K. … The history of IBM has been documented from a number of perspectives. Former employees, management experts, journalists, and historians of business, technology, and computing have all made important contributions to our understanding of IBM’s past.3 Some works have explored company operations outside the US in detail.4 However, most of these studies do not address company activities in regions of the developing world, such as Latin America.5 Chile, a slender South American country bordered by the Pacific Ocean on one side and the Andean cordillera on the other, offers a rich site for studying IBM

The Evolution of IBM Research: Looking Back at 50 Years of Scientific Achievements and Innovations

FEATURES. THE EVOLUTION OF IBM RESEARCH: LOOKING BACK AT 50 YEARS OF SCIENTIFIC ACHIEVEMENTS AND INNOVATIONS. Chris Sciacca and Christophe Rossel – IBM Research – Zurich, Switzerland – DOI: 10.1051/epn/2014201. By the mid-1950s IBM had established laboratories in New York City and in San Jose, California, with San Jose being the first one apart from headquarters. This provided considerable freedom to the scientists and with its success IBM executives gained the confidence they needed to look beyond the United States for a third lab. The choice wasn’t easy, but Switzerland was eventually selected based on the same blend of talent, skills and academia that IBM uses today, most recently for its decision to open new labs in Ireland, Brazil and Australia. EPN 45/2. Article available at http://www.europhysicsnews.org or http://dx.doi.org/10.1051/epn/2014201. The Computing-Tabulating-Recording Company (C-T-R), the precursor to IBM, was founded on 16 June 1911. It was initially a merger of three manufacturing businesses, which were eventually molded into the $100 billion innovator in technology, science, management and culture known as IBM. With the success of C-T-R after World War I came … sorting and disseminating information was going to be a big business, requiring investment in research and development. He began hiring the country’s top engineers, led by one of the world’s most prolific inventors at the time: James Wares Bryce. Bryce was given the task to invent and build the best tabulating, sorting and keypunch machines.

14789093.Pdf

INIS-mf--8658. THE INFLUENCE OF COLLISIONS WITH NOBLE GASES ON SPECTRAL LINES OF HYDROGEN ISOTOPES. Thesis for the degree of Doctor in Mathematics and Natural Sciences at the Rijksuniversiteit te Leiden, on the authority of the Rector Magnificus Dr. A.A.H. Kassenaar, professor in the Faculty of Medicine, by decision of the Board of Deans, to be defended on Wednesday 10 November 1982 at 14:15 by Peter Willem Hermans, born in Rotterdam in 1952. 1982, Drukkerij J.H. Pasmans B.V., 's-Gravenhage. Promotor: Prof. dr. J.J.M. Beenakker. The research was carried out partly under the responsibility of the late prof. dr. H.F.P. Knaap. Aan mijn oudeva. The research described in this thesis was carried out as part of the programme of the working group for Molecular Physics of the Stichting voor Fundamenteel Onderzoek der Materie (FOM) and was made possible by financial support from the Nederlandse Organisatie voor Zuiver-Wetenschappelijk Onderzoek (ZWO). CONTENTS: PREFACE. CHAPTER I: THEORY OF THE COLLISIONAL BROADENING AND SHIFT OF SPECTRAL LINES OF HYDROGEN INFINITELY DILUTED IN NOBLE GASES. 1. Introduction. 2. General theory: a. Rotational Raman; b. Depolarized Rayleigh. 3. Experimental preview: a. Rotational Raman lines; broadening and shift; b. Depolarized Rayleigh line. CHAPTER II: EXPERIMENTAL DETERMINATION OF LINE BROADENING AND SHIFT CROSS SECTIONS OF HYDROGEN-NOBLE GAS MIXTURES. 1. Introduction. 2. Experimental setup: 2.1 Laser; 2.2 Scattering cell; 2.3 Analyzing system; 2.4 Detection system. 3. Measuring technique: 3.1 Width measurement; 3.2 Shift measurement; 3.3 Gases. 4.

IBM Research AI Residency Program

IBM Research AI Residency Program. The IBM Research™ AI Residency Program is a 13-month program that provides opportunity for scientists, engineers, domain experts and entrepreneurs to conduct innovative research and development on important and emerging topics in Artificial Intelligence (AI). The program aims at creating and investigating novel approaches in AI that progress capabilities towards significant technical and real-world challenges. AI Residents work closely with IBM Research scientists and are expected to fully complete a project within the 13-month residency. The results of the project may include publications in top AI conferences and journals, development of prototypes demonstrating important new AI functionality and fielding of working AI systems. As part of the selection process, candidates must submit a 500-word statement of research interest and goals. Topics of focus include: – Trust in AI (Causal modeling, fairness, explainability, robustness, transparency, AI ethics) – Natural Language Processing and Understanding (Question and answering, reading comprehension, language embeddings, dialog, multi-lingual NLP) – Knowledge and Reasoning (Knowledge/graph embeddings, neuro-symbolic reasoning) – AI Automation, Scaling, and Management (Automated data science, neural architecture search, AutoML, transfer learning, few-shot/one-shot/meta learning, active learning, AI planning, parallel and distributed learning) – AI and Software Engineering (Big code analysis and understanding, software life cycle including modernize, build, debug, test and manage, software synthesis including refactoring and automated programming) – Human-Centered AI (HCI of AI, human-AI collaboration, AI interaction models and modalities, conversational AI, novel AI experiences, visual AI and data visualization). Deadline to apply: January 31, 2021. Earliest start date: June 1, 2021. Duration: 13 months. Locations: IBM Thomas J.

POWER® Processor-Based Systems

IBM® Power® Systems RAS: Introduction to IBM® Power® Reliability, Availability, and Serviceability for POWER9® processor-based systems using IBM PowerVM™, with updates covering the latest 4+ socket Power10 processor-based systems. IBM Systems Group. Daniel Henderson, Irving Baysah. Trademarks, Copyrights, Notices and Acknowledgements. Trademarks: IBM, the IBM logo, and ibm.com are trademarks or registered trademarks of International Business Machines Corporation in the United States, other countries, or both. These and other IBM trademarked terms are marked on their first occurrence in this information with the appropriate symbol (® or ™), indicating US registered or common law trademarks owned by IBM at the time this information was published. Such trademarks may also be registered or common law trademarks in other countries. A current list of IBM trademarks is available on the Web at http://www.ibm.com/legal/copytrade.shtml. The following terms are trademarks of the International Business Machines Corporation in the United States, other countries, or both: Active AIX® POWER® POWER Power Power Systems Memory™ Hypervisor™ Systems™ Software™ Power® POWER POWER7 POWER8™ POWER® PowerLinux™ 7® +™ POWER® PowerHA® POWER6 ® PowerVM System System PowerVC™ POWER Power Architecture™ ® x® z® Hypervisor™. Additional trademarks may be identified in the body of this document. Other company, product, or service names may be trademarks or service marks of others. Notices: The last page of this document contains copyright information, important notices, and other information. Acknowledgements: While this whitepaper has two principal authors/editors, it is the culmination of the work of a number of different subject matter experts within IBM who contributed ideas, detailed technical information, and the occasional photograph and section of description.