02-50-Woźniak

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Plw Sc/Nt/Ntr/Ls

Service Source: PLW SC/NT/NTR/LS. Personal LaserWriter SC, Personal LaserWriter NT, Personal LaserWriter NTR, Personal LaserWriter LS, Personal LaserWriter LS/L. Basics: Product Information. The printers covered in this manual are • Personal LaserWriter SC • Personal LaserWriter NT • Personal LaserWriter NTR • Personal LaserWriter LS • Personal LaserWriter LS/L. Compatibility: Not all parts are compatible among the five models. Refer to Illustrated Parts for compatibility cross references. The cassette feeder tray and its associated parts are optional on the LS, LS/L, and NTR models. Paper Paths: There are four paper paths in the Personal LaserWriter. Paper is fed from the cassette or multipurpose tray and delivered to the face-down or face-up delivery trays. Note: Face signifies image side. Default delivery is face-down at the top of the printer. LS–LS/L Identification: The LS/L is a cost-reduced version of the LS but is sold and packaged under the same LS name. Parts are not necessarily interchangeable between the two models. External distinguishing characteristics: • LS: The power switch is on the left rear of the printer; the rear cover has an opening for an I/O board bracket and displays the family number M2000. • LS/L: The power switch is on the right rear of the printer; the rear cover is solid plastic and displays the family number M2002. Sensing System Theory: There are six sensors in the Personal LaserWriter: four paper sensors and two printer-open sensors.

Advanced Bash-Scripting Guide

Advanced Bash-Scripting Guide: An in-depth exploration of the art of shell scripting. Mendel Cooper. 2.2, 31 October 2003. Revision History: Revision 0.1 (14 June 2000, revised by mc): Initial release. Revision 0.2 (30 October 2000, revised by mc): Bugs fixed, plus much additional material and more example scripts. Revision 0.3 (12 February 2001, revised by mc): Another major update. Revision 0.4 (08 July 2001, revised by mc): More bugfixes, much more material, more scripts; a complete revision and expansion of the book. Revision 0.5 (03 September 2001, revised by mc): Major update. Bugfixes, material added, chapters and sections reorganized. Revision 1.0 (14 October 2001, revised by mc): Bugfixes, reorganization, material added. Stable release. Revision 1.1 (06 January 2002, revised by mc): Bugfixes, material and scripts added. Revision 1.2 (31 March 2002, revised by mc): Bugfixes, material and scripts added. Revision 1.3 (02 June 2002, revised by mc): 'TANGERINE' release: A few bugfixes, much more material and scripts added. Revision 1.4 (16 June 2002, revised by mc): 'MANGO' release: Quite a number of typos fixed, more material and scripts added. Revision 1.5 (13 July 2002, revised by mc): 'PAPAYA' release: A few bugfixes, much more material and scripts added. Revision 1.6 (29 September 2002, revised by mc): 'POMEGRANATE' release: some bugfixes, more material, one more script added. Revision 1.7 (05 January 2003, revised by mc): 'COCONUT' release: a couple of bugfixes, more material, one more script. Revision 1.8 (10 May 2003, revised by mc): 'BREADFRUIT' release: a number of bugfixes, more scripts and material.

System Analysis and Tuning Guide: SUSE Linux Enterprise Server 15 SP1

SUSE Linux Enterprise Server 15 SP1: System Analysis and Tuning Guide. An administrator's guide for problem detection, resolution and optimization. Find how to inspect and optimize your system by means of monitoring tools and how to efficiently manage resources. Also contains an overview of common problems and solutions and of additional help and documentation resources. Publication Date: September 24, 2021. SUSE LLC, 1800 South Novell Place, Provo, UT 84606, USA. https://documentation.suse.com Copyright © 2006–2021 SUSE LLC and contributors. All rights reserved. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or (at your option) version 1.3; with the Invariant Section being this copyright notice and license. A copy of the license version 1.2 is included in the section entitled “GNU Free Documentation License”. For SUSE trademarks, see https://www.suse.com/company/legal/ . All other third-party trademarks are the property of their respective owners. Trademark symbols (®, ™ etc.) denote trademarks of SUSE and its affiliates. Asterisks (*) denote third-party trademarks. All information found in this book has been compiled with utmost attention to detail. However, this does not guarantee complete accuracy. Neither SUSE LLC, its affiliates, the authors nor the translators shall be held liable for possible errors or the consequences thereof. Contents: About This Guide; 1 Available Documentation

Mostly-Static Decentralized Information Flow Control by Andrew C

Mostly-Static Decentralized Information Flow Control, by Andrew C. Myers. Submitted to the Department of Electrical Engineering and Computer Science on January 7, 1999, in partial fulfillment of the requirements for the degree of Doctor of Philosophy at the Massachusetts Institute of Technology, February 1999. © Massachusetts Institute of Technology 1999. All rights reserved. Author: Department of Electrical Engineering and Computer Science, January 7, 1999. Certified by: Barbara Liskov, Ford Professor of Engineering, Thesis Supervisor. Accepted by: Arthur C. Smith, Chairman, Departmental Committee on Graduate Students. Abstract: The growing use of mobile code in downloaded programs such as applets and servlets has increased interest in robust mechanisms for ensuring privacy and secrecy. Common security mechanisms such as sandboxing and access control are either too restrictive or too weak: they prevent applications from sharing data usefully, or allow private information to leak. For example, security mechanisms in Java prevent many useful applications while still permitting Trojan horse applets

07 07 Unixintropart2 Lucio Week 3

Unix Basics: Command line tools. Daniel Lucio. Overview: • Where to use it? • Command syntax • What are commands? • Where to get help? • Standard streams (stdin, stdout, stderr) • Pipelines (Power of combining commands) • Redirection • More Information. Introduction to Unix. Where to use it? • Login to a Unix system like 'kraken' or any other NICS/UT/XSEDE resource. • Download and boot from a Linux LiveCD either from a CD/DVD or USB drive. • http://www.puppylinux.com/ • http://www.knopper.net/knoppix/index-en.html • http://www.ubuntu.com/ • Install Cygwin: a collection of tools which provide a Linux look and feel environment for Windows. • http://cygwin.com/index.html • https://newton.utk.edu/bin/view/Main/Workshop0InstallingCygwin • Online terminal emulator • http://bellard.org/jslinux/ • http://cb.vu/ • http://simpleshell.com/ Command syntax: $ command [<options>] [<file> | <argument> ...] Example: cp [-R [-H | -L | -P]] [-fi | -n] [-apvX] source_file target_file. What are commands? • An executable program (date) • A command built into the shell itself (cd) • A shell program/function • An alias. Bash commands (Linux): alias, crontab, false, if, mknod, ram, strace, unshar, apropos, csplit, fdformat, ifconfig, more, rcp, su, until, apt-get, cut, fdisk, ifdown, mount, read, sudo, uptime, aptitude, date, fg, ifup, mtools, readarray, sum, useradd, aspell, dc, fgrep, import, mtr, readonly, suspend, userdel, awk, dd, file, install, mv, reboot, symlink

UNIX System Services Z/OS Version 1 Release 7 Implementation

UNIX System Services z/OS Version 1 Release 7 Implementation. z/OS UNIX overview. z/OS UNIX setup. z/OS UNIX usage. Paul Rogers, Theodore Antoff, Patrick Bruinsma, Paul-Robert Hering, Lutz Kühner, Neil O’Connor, Lívio Sousa. ibm.com/redbooks. International Technical Support Organization. March 2006. SG24-7035-01. Note: Before using this information and the product it supports, read the information in “Notices” on page xiii. Second Edition (March 2006). This edition applies to Version 1 Release 7 of z/OS (5637-A01), and Version 1, Release 7 of z/OS.e (5655-G52), and to all subsequent releases and modifications until otherwise indicated in new editions. © Copyright International Business Machines Corporation 2003, 2006. All rights reserved. Note to U.S. Government Users Restricted Rights -- Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp. Contents: Notices; Trademarks; Preface; The team that wrote this redbook; Become a published author; Comments welcome; Chapter 1. UNIX overview; 1.1 UNIX fundamentals; 1.1.1 UNIX objectives; 1.1.2 What people like about UNIX; 1.1.3 What people don’t like about UNIX; 1.1.4 UNIX operating system; 1.1.5 UNIX file system; 1.1.6 Parameter files; 1.1.7 Daemons; 1.1.8 Accessing UNIX; 1.1.9 UNIX standards; 1.1.10 MVS and UNIX functional comparison; 1.2 z/OS UNIX System Services fundamentals

(12) United States Patent (10) Patent No.: US 7,283,777 B2 Russel et al.

US007283777B2. (12) United States Patent, Russel et al. (10) Patent No.: US 7,283,777 B2. (45) Date of Patent: Oct. 16, 2007. (54) SYSTEM AND METHOD FOR CHARACTERIZING FUSER STRIPPING PERFORMANCE. (75) Inventors: Steven M. Russel, Pittsford, NY (US); Mansour Messalti, Sherwood, OR (US); Jeremy Christopher deJong, Orchard Park, NY (US); Anthony S. Condello, Webster, NY (US); Daniel James McVeigh, Webster, NY (US); Donald M. Bott, Rochester, NY (US); James Joseph Padula, Webster, NY (US). (73) Assignee: Xerox Corporation, Stamford, CT (US). (*) Notice: Subject to any disclaimer, the term of this patent is extended or adjusted under 35 U.S.C. 154(b) by 0 days. (21) Appl. No.: 11/343,672. (22) Filed: Jan. 31, 2006. (58) Field of Classification Search: 399/323, 399/33, 315, 21, 324, 322. See application file for complete search history. (56) References Cited, U.S. PATENT DOCUMENTS: 4,952,982 A * 8/1990 Tabuchi, 399/22; 5,282,009 A * 1/1994 Derimiggio, 399/46; 5,406,363 A * 4/1995 Siegel et al., 399/323; 6,795,677 B2 9/2004 Berkes et al. (* cited by examiner). Primary Examiner: David M. Gray. Assistant Examiner: Geoffrey T Evans. (74) Attorney, Agent, or Firm: Duane C. Basch; Basch & Nickerson LLP. (57) ABSTRACT: Disclosed herein are several embodiments to facilitate the characterization of fuser stripping performance. Recognizing that the characteristics of a substrate exiting a fusing nip are indicative of the operation of the nip and the stripping operation itself, several contact and non-contact sensing methods are described to detect or predict degraded strip (65) Prior Publication Data: US 2007/0177913 A1 Aug.

Centos Cheat Sheet Cheat Sheet by Bromono Via Cheatography.Com/20940/Cs/3795

CentOS Cheat Sheet by bromono via cheatography.com/20940/cs/3795/ Help Commands: whatis: Search whatis database for complete words; used to find short descriptions of system commands. which: Shows the full path to shell commands. whereis: Locate binary, source and man pages for a command. apropos: Search through a database of short descriptions to find help and man pages containing certain terms and commands. man: Manual pages for commands. Partitions and Disk Management: df: Report file system disk space usage. mount: Show what's mounted or mount a file system. umount: Unmount a file system. fuser: Identifies processes using files or sockets. lsof: List open files on the system. File Operations (cont): less: View a file one page at a time, allows for going backwards. head: Print the first 10 lines of a file. tail: Print the last 10 lines of a file. wc: Count the number of words or characters in a file. stat: Display file or file system status. cut: Remove sections from lines of input. paste: Merge lines of files. ls Options: -a: Show all (including hidden). -R: Recursive list. -r: Reverse order. -t: Sort by last modified. -S: Sort by file size. -l: Long listing format. -1: One file per line. -m: Comma-separated output. -Q: Quoted output. grep Options: -i: Case insensitive search. -r: Recursive search. -v: Inverted search. -o: Show matched part of file only. Directory Operations: clear: Clears your screen. pwd: Shows current directory. cd: Change directories. Searching Files: grep: Search text files for lines containing a matching pattern. Process Management: Report on current Bash

Socomec Sirco M and Sirco MV Load Break Switches - Product Brochure

Socomec Sirco M and Sirco MV load break switches: product brochure. Uni-Jet. SIRCO M - https://www.uni-jet.com/catalog/commutation/vyiklyuchateli-nagruzki/socomec-sirco-m/ SIRCO MV - https://www.uni-jet.com/catalog/commutation/vyiklyuchateli-nagruzki/socomec-sirco-mv/ SIRCO M and MV: Modular universal switches from 16 to 160 A. SOCOMEC: Innovators in LV switching solutions. As a world leader in electrical switching and protection systems, SOCOMEC launches a new generation of load break and changeover switches for applications from 16 to 160 A. SIRCO M and MV: Total integration of all electrical functions: isolating, control and changeover switching. SOCOMEC has developed a new technical concept that combines the features of a load break switch and a changeover switch in order to propose a highly innovative modular design. SIRCO M and MV: Full compliance with IEC, EN 60947-3 or UL 508 standards. The new SIRCO M and MV range of load break switches has been designed, qualified and tested according to the criteria defined by the IEC 60947-3 standard. This process guarantees a high quality level for the product which is fully adapted to arduous operating environments when operating in the field. ADDITIONAL SOCOMEC SWITCHING SOLUTIONS: > SIRCO load break switches up to 5000 A > Manual and motorised changeover switch > Fuse combination switches FUSERBLOC - Front or right side operation. SIRCO M: Modular universal switches, the versatile solution. Use standard components to create a solution that perfectly suits your application. SWITCHING & DISCONNECTION: For safe and optimised operation of LV; CHANGEOVER SWITCHING: Two mechanically interlocked switches; ADAPTABLE: To all motor or distribution load applications.

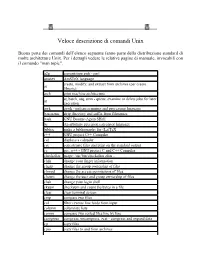

Unix Command

Quick description of Unix commands. Most of the commands in the following list are part of the standard distribution of many Unix architectures. For details, see the relevant manual pages, which can be invoked with the command "man topic". a2p: awk-to-perl converter; amstex: AmSTeX language; ar: create, modify, and extract from archives (used to create libraries); arch: print machine architecture; at: at, batch, atq, atrm - queue, examine or delete jobs for later execution; awk: gawk - pattern scanning and processing language; basename: strip directory and suffix from filenames; bash: GNU Bourne-Again SHell; bc: an arbitrary precision calculator language; bibtex: make a bibliography for (La)TeX; c++: GNU project C++ Compiler; cal: displays a calendar; cat: concatenate files and print on the standard output; cc: gcc, g++ - GNU project C and C++ Compiler; checkalias: usage: /usr/bin/checkalias alias ..; chfn: change your finger information; chgrp: change the group ownership of files; chmod: change the access permissions of files; chown: change the user and group ownership of files; chsh: change your login shell; cksum: checksum and count the bytes in a file; clear: clear terminal screen; cmp: compare two files; col: filter reverse line feeds from input; column: columnate lists; comm: compare two sorted files line by line; compress: compress, uncompress, zcat - compress and expand data; cp: copy files; cpio: copy files to and from archives; csh: tcsh - C shell with file name completion and command line editing; csplit: split a file into sections determined by context lines; cut: remove sections from each

Oracle Universal Installer and Opatch User's Guide for Windows and UNIX

Oracle® Universal Installer and OPatch User’s Guide 11g Release 2 (11.2) for Windows and UNIX E12255-11 March 2011 Oracle Universal Installer and OPatch User’s Guide, 11g Release 2 (11.2) for Windows and UNIX E12255-11 Copyright © 1996, 2011, Oracle and/or its affiliates. All rights reserved. Primary Author: Michael Zampiceni Contributor: Smitha Viswanathan, Sumant Sankaran, Phi Nguyen This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited. The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing. If this software or related documentation is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, the following notice is applicable: U.S. GOVERNMENT RIGHTS Programs, software, databases, and related documentation and technical data delivered to U.S. Government customers are "commercial computer software" or "commercial technical data" pursuant to the applicable Federal Acquisition Regulation and agency-specific supplemental regulations. As such, the use, duplication, disclosure, modification, and adaptation shall be subject to the restrictions and license terms set forth in the applicable Government contract, and, to the extent applicable by the terms of the Government contract, the additional rights set forth in FAR 52.227-19, Commercial Computer Software License (December 2007). -

POSIX-2017 Shell & Utilities Utilities

POSIX-2017 Shell & Utilities: Utilities. admin - create and administer SCCS files (DEVELOPMENT); alias - define or display aliases; ar - create and maintain library archives; asa - interpret carriage-control characters; at - execute commands at a later time; awk - pattern scanning and processing language; basename - return non-directory portion of a pathname; batch - schedule commands to be executed in a batch queue; bc - arbitrary-precision arithmetic language; bg - run jobs in the background; c99 - compile standard C programs; cal - print a calendar; cat - concatenate and print files; cd - change the working directory; cflow - generate a C-language flowgraph (DEVELOPMENT); chgrp - change the file group ownership; chmod - change the file modes; chown - change the file ownership; cksum - write file checksums and sizes; cmp - compare two files; command - execute a simple command; ex - text editor; expand - convert tabs to spaces; expr - evaluate arguments as an expression; false - return false value; fc - process the command history list; fg - run jobs in the foreground; file - determine file type; find - find files; fold - filter for folding lines; fort77 - FORTRAN compiler (FORTRAN); fuser - list process IDs of all processes that have one or more files open; gencat - generate a formatted message catalog; getconf - get configuration values; get - get a version of an SCCS file (DEVELOPMENT); getopts - parse utility options; grep - search a file for a pattern; hash - remember or report utility locations; head - copy the first part of files; iconv - codeset conversion; id - return