Bayesian Networks for Regulatory Network Learning

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Molecular and Cellular Signaling

Martin Beckerman. Molecular and Cellular Signaling. With 227 Figures. AIP Press, Springer. Contents: Series Preface; Preface; Guide to Acronyms. 1. Introduction: 1.1 Prokaryotes and Eukaryotes; 1.2 The Cytoskeleton and Extracellular Matrix; 1.3 Core Cellular Functions in Organelles; 1.4 Metabolic Processes in Mitochondria and Chloroplasts; 1.5 Cellular DNA to Chromatin; 1.6 Protein Activities in the Endoplasmic Reticulum and Golgi Apparatus; 1.7 Digestion and Recycling of Macromolecules; 1.8 Genomes of Bacteria Reveal Importance of Signaling; 1.9 Organization and Signaling of Eukaryotic Cell; 1.10 Fixed Infrastructure and the Control Layer; 1.11 Eukaryotic Gene and Protein Regulation; 1.12 Signaling Malfunction Central to Human Disease; 1.13 Organization of Text. 2. The Control Layer: 2.1 Eukaryotic Chromosomes Are Built from Nucleosomes; 2.2 The Highly Organized Interphase Nucleus; 2.3 Covalent Bonds Define the Primary Structure of a Protein; 2.4 Hydrogen Bonds Shape the Secondary Structure; 2.5 Structural Motifs and Domain Folds: Semi-Independent Protein Modules; 2.6 Arrangement of Protein Secondary Structure Elements and Chain Topology; 2.7 Tertiary Structure of a Protein: Motifs and Domains; 2.8 Quaternary Structure: The Arrangement of Subunits; 2.9 Many Signaling Proteins Undergo Covalent Modifications; 2.10 Anchors Enable Proteins to Attach to Membranes; 2.11 Glycosylation Produces Mature Glycoproteins; 2.12 Proteolytic Processing Is Widely Used in Signaling; 2.13 Reversible Addition and Removal of Phosphoryl Groups; 2.14 Reversible Addition and Removal of Methyl and Acetyl Groups; 2.15 Reversible Addition and Removal of SUMO Groups; 2.16 Post-Translational Modifications to Histones.

GENE REGULATION Differences Between Prokaryotes & Eukaryotes

GENE REGULATION: Differences between prokaryotes & eukaryotes. Gene function. Description of Prokaryotic Chromosome and E. coli Review. Differences between Prokaryotic & Eukaryotic Chromosomes: four differences. Eukaryotic Chromosomes: form; length in single human chromosome; length in single diploid cell; proteins beside histones. Proportion of DNA that codes for protein in prokaryotes, eukaryotes, humans. Regulation of Gene Expression in Prokaryotes. Terms: promoter, structural gene, operator, operon, regulator, repressor, corepressor, inducer. The lac operon, background: E. coli behavior in the presence of lactose and absence of lactose; behavior of mutants; outcome of mutants. The lac operon regulates production of β-galactosidase: http://www.sumanasinc.com/webcontent/animations/content/lacoperon.html The trp operon regulates the production of the enzyme for tryptophan synthesis: http://bcs.whfreeman.com/thelifewire/content/chp13/1302002.html General Summary: During transcription, RNA remains briefly bound to the DNA template. Structural genes coding for polypeptides with related functions often occur in sequence. Two kinds of regulatory control: positive & negative. General Summary: Regulatory efficiency is increased because mRNA is translated into protein immediately and broken down rapidly. 75 different operons comprising 260 structural genes in E. coli. Gene Regulation in Eukaryotes: some regulation occurs because as little as 1% of DNA is expressed. Gene Expression and Differentiation: Characteristic proteins are produced at different stages of differentiation, producing cells with their own characteristic structure and function. Therefore not all genes are expressed at the same time. As differentiation proceeds, some genes are permanently “turned off”. Example: different types of hemoglobin are produced during development and in adults. DNA is expressed at a precise time and sequence in time.

What Makes the Lac-Pathway Switch: Identifying the Fluctuations That Trigger Phenotype Switching in Gene Regulatory Systems

What makes the lac-pathway switch: identifying the fluctuations that trigger phenotype switching in gene regulatory systems. Prasanna M. Bhogale1†, Robin A. Sorg2†, Jan-Willem Veening2*, Johannes Berg1*. 1University of Cologne, Institute for Theoretical Physics, Zülpicher Straße 77, 50937 Köln, Germany. 2Molecular Genetics Group, Groningen Biomolecular Sciences and Biotechnology Institute, Centre for Synthetic Biology, University of Groningen, Nijenborgh 7, 9747 AG, Groningen, The Netherlands. † these authors contributed equally. * correspondence to [email protected] and [email protected]. Multistable gene regulatory systems sustain different levels of gene expression under identical external conditions. Such multistability is used to encode phenotypic states in processes including nutrient uptake and persistence in bacteria, fate selection in viral infection, cell cycle control, and development. Stochastic switching between different phenotypes can occur as the result of random fluctuations in molecular copy numbers of mRNA and proteins arising in transcription, translation, transport, and binding. However, which component of a pathway triggers such a transition is generally not known. By linking single-cell experiments on the lactose-uptake pathway in E. coli to molecular simulations, we devise a general method to pinpoint the particular fluctuation driving phenotype switching and apply this method to the transition between the uninduced and induced states of the lac genes. We find that the transition to the induced state is not caused only by the single event of lac-repressor unbinding, but depends crucially on the time period over which the repressor remains unbound from the lac-operon. We confirm this notion in strains with a high expression level of the repressor (leading to shorter periods over which the lac-operon remains unbound), which show a reduced switching rate.

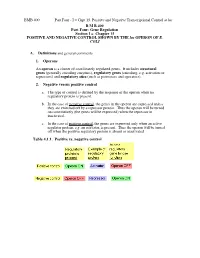

I = Chpt 15. Positive and Negative Transcriptional Control at Lac BMB

BMB 400 Part Four - I = Chpt 15. Positive and Negative Transcriptional Control at lac. BMB 400 Part Four: Gene Regulation. Section I = Chapter 15. POSITIVE AND NEGATIVE CONTROL SHOWN BY THE lac OPERON OF E. COLI. A. Definitions and general comments. 1. Operons. An operon is a cluster of coordinately regulated genes. It includes structural genes (generally encoding enzymes), regulatory genes (encoding, e.g., activators or repressors) and regulatory sites (such as promoters and operators). 2. Negative versus positive control. a. The type of control is defined by the response of the operon when no regulatory protein is present. b. In the case of negative control, the genes in the operon are expressed unless they are switched off by a repressor protein. Thus the operon will be turned on constitutively (the genes will be expressed) when the repressor is inactivated. c. In the case of positive control, the genes are expressed only when an active regulator protein, e.g. an activator, is present. Thus the operon will be turned off when the positive regulatory protein is absent or inactivated. Table 4.1.1. Positive vs. negative control. 3. Catabolic versus biosynthetic operons. a. Catabolic pathways catalyze the breakdown of nutrients (the substrate for the pathway) to generate energy, or more precisely ATP, the energy currency of the cell. In the absence of the substrate, there is no reason for the catabolic enzymes to be present, and the operon encoding them is repressed. In the presence of the substrate, when the enzymes are needed, the operon is induced or de-repressed.

Teaching Gene Regulation in the High School Classroom, AP Biology, Stefanie H

Wofford College, Digital Commons @ Wofford. Arthur Vining Davis High Impact Fellows Projects, High Impact Curriculum Fellows. 4-30-2014. Teaching Gene Regulation in the High School Classroom, AP Biology. Stefanie H. Baker, Wofford College, [email protected]; Marie Fox, Broome High School; Leigh Smith, Wofford College. Follow this and additional works at: http://digitalcommons.wofford.edu/avdproject Part of the Biology Commons, and the Genetics Commons. Recommended Citation: Baker, Stefanie H.; Fox, Marie; and Smith, Leigh, "Teaching Gene Regulation in the High School Classroom, AP Biology," (2014). Arthur Vining Davis High Impact Fellows Projects. Paper 22. http://digitalcommons.wofford.edu/avdproject/22 This Article is brought to you for free and open access by the High Impact Curriculum Fellows at Digital Commons @ Wofford. It has been accepted for inclusion in Arthur Vining Davis High Impact Fellows Projects by an authorized administrator of Digital Commons @ Wofford. For more information, please contact [email protected]. High Impact Fellows Project Overview. Project Title, Course Name, Grade Level: Teaching Gene Regulation in the High School Classroom, AP Biology, Grades 9-12. Team Members: Student: Leigh Smith; High School Teacher: Marie Fox; School: Broome High School; Wofford Faculty: Dr. Stefanie Baker; Department: Biology. Brief Description of Project: This project sought to enhance high school students’ understanding of gene regulation as taught in an Advanced Placement Biology course. We accomplished this by designing and implementing a lab module that included a pre-lab assessment, a hands-on classroom experiment, and a post-lab assessment in the form of a lab poster. Students developed lab skills while simultaneously learning about course content.

Modeling Network Dynamics: the Lac Operon, a Case Study

JCB Mini-Review. Modeling network dynamics: the lac operon, a case study. José M.G. Vilar,1 Călin C. Guet,1,2 and Stanislas Leibler1. 1The Rockefeller University, New York, NY 10021. 2Department of Molecular Biology, Princeton University, Princeton, NJ 08544. We use the lac operon in Escherichia coli as a prototype system to illustrate the current state, applicability, and limitations of modeling the dynamics of cellular networks. We integrate three different levels of description (molecular, cellular, and that of cell population) into a single model, which seems to capture many experimental aspects of the system. Modeling has had a long tradition, and a remarkable success, in disciplines such as engineering and physics. In biology, however, the situation has been different. The enormous complexity of living systems and the lack of reliable quantitative information have precluded a similar success. […] components to entire cells. In contrast to what this widespread use might indicate, such modeling has many limitations. On the one hand, the cell is not a well-stirred reactor. It is a highly heterogeneous and compartmentalized structure, in which phenomena like molecular crowding or channeling are present (Ellis, 2001), and in which the discrete nature of the molecular components cannot be neglected (Kuthan, 2001). On the other hand, so few details about the actual in vivo processes are known that it is very difficult to proceed without numerous, and often arbitrary, assumptions about the nature of the nonlinearities and the values of the parameters governing the reactions. Understanding these limitations, and ways to overcome them, will become increasingly impor[…]

A Biochemical Mechanism for Nonrandom Mutations and Evolution

University of Montana, ScholarWorks at University of Montana. Biological Sciences Faculty Publications, Biological Sciences. 6-2000. A Biochemical Mechanism for Nonrandom Mutations and Evolution. Barbara E. Wright, University of Montana - Missoula, [email protected]. Follow this and additional works at: https://scholarworks.umt.edu/biosci_pubs Part of the Biology Commons. Let us know how access to this document benefits you. Recommended Citation: Wright, Barbara E., "A Biochemical Mechanism for Nonrandom Mutations and Evolution" (2000). Biological Sciences Faculty Publications. 263. https://scholarworks.umt.edu/biosci_pubs/263 This Article is brought to you for free and open access by the Biological Sciences at ScholarWorks at University of Montana. It has been accepted for inclusion in Biological Sciences Faculty Publications by an authorized administrator of ScholarWorks at University of Montana. For more information, please contact [email protected]. JOURNAL OF BACTERIOLOGY, June 2000, p. 2993–3001, Vol. 182, No. 11. 0021-9193/00/$04.00+0. Copyright © 2000, American Society for Microbiology. All Rights Reserved. MINIREVIEW. A Biochemical Mechanism for Nonrandom Mutations and Evolution. BARBARA E. WRIGHT*, Division of Biological Sciences, The University of Montana, Missoula, Montana. As this minireview is concerned with the importance of the environment in directing evolution, it is appropriate to remember that Lamarck was the first to clearly articulate a consistent theory of gradual evolution from the simplest of species to the most complex, culminating in the origin of mankind (71). […]nario begins with the starvation of a self-replicating unit for its precursor, metabolite A, utilized by enzyme 1 encoded by gene 1. When metabolite A is depleted, a mutation in a copy of gene 1 gives rise to gene 2 and allows enzyme 2 to use metabolite B […]

Genes, History and Detail

BOOKS. Genes, history and detail. Molecular Biology of the Gene, Fifth Edition, by James D. Watson, Tania A. Baker, Stephen P. Bell, Alexander Gann, Michael Levine and Richard Losick. Cold Spring Harbor Laboratory Press/Benjamin Cummings • 2004. $123/£38.99. Peter F. R. Little. To teach any biological science today is to steer a delicate course between science as history and science as research. […]ject well. This is achieved through a very careful structure, new to edition five, that separates the book into five distinct parts: chemistry and genetics, maintenance of the genome, expression of the genome, regulation, and finally methods. There are two reasons why this is critical to the success of the book: chemistry and genetics is the place for history, and methods is the place to understand the technological drivers of molecular biology and the model organisms we use. At a stroke, this frees the author from being held hostage by some of the detail because, as they state in the introduction to the first edition: “Given the choice between deleting an important principle or giving experimental detail, I am inclined to state the principle”. The coverage of the key topics within each of these parts is comprehensive and beautifully illustrated with numerous figures that are also available in the accompanying CD, a very useful feature that will provide key resources for both teachers and students. Particularly amusing are the pictures of key individuals, drawn from Cold Spring Harbor’s wonderful archives, including a bevy of very youthful Nobel Laureates and my favourite image from 1961 of Monod and Szilard, […]

The Cost of Expression of Escherichia Coli Lac Operon Proteins Is in the Process, Not in the Products

Copyright © 2008 by the Genetics Society of America. DOI: 10.1534/genetics.107.085399. The Cost of Expression of Escherichia coli lac Operon Proteins Is in the Process, Not in the Products. Daniel M. Stoebel,*,1 Antony M. Dean† and Daniel E. Dykhuizen*. *Department of Ecology and Evolution, Stony Brook University, Stony Brook, New York 11794 and †Department of Ecology, Evolution and Behavior and the BioTechnology Institute, University of Minnesota, St. Paul, Minnesota 55108. Manuscript received December 9, 2007. Accepted for publication January 9, 2008. ABSTRACT: Transcriptional regulatory networks allow bacteria to express proteins only when they are needed. Adaptive hypotheses explaining the evolution of regulatory networks assume that unneeded expression is costly and therefore decreases fitness, but the proximate cause of this cost is not clear. We show that the cost in fitness to Escherichia coli strains constitutively expressing the lactose operon when lactose is absent is associated with the process of making the lac gene products, i.e., associated with the acts of transcription and/or translation. These results reject the hypotheses that regulation exists to prevent the waste of amino acids in useless protein or the detrimental activity of unnecessary proteins. While the cost of the process of protein expression occurs in all of the environments that we tested, the expression of the lactose permease could be costly or beneficial, depending on the environment. Our results identify the basis of a single selective pressure likely acting across the entire E. coli transcriptome. […] central tenet of evolutionary biology is that trade- […] ever, unable to determine the source of the cost.

Review Session

REVIEW SESSION: Wednesday, September 15, 5:30 PM, SHANTZ 242 E. Gene Regulation. Gene expression can be turned on, turned off, turned up or turned down! For example, as test time approaches, some of you may note that stomach acid production increases dramatically… due to regulation of the genes that control synthesis of HCl by cells within the gastric pits of the stomach lining. Gene Regulation in Prokaryotes. Prokaryotes may turn genes on and off depending on metabolic demands and requirements for respective gene products. NOTE: For prokaryotes, “turning on/off” refers almost exclusively to stimulating or repressing transcription. Inducible/Repressible gene products: those produced only when specific chemical substrates are present/absent. Constitutive gene products: those produced continuously, regardless of chemical substrates present. Regulation may be Negative: gene expression occurs unless it is shut off by a regulator molecule; or Positive: gene expression only occurs when a regulator molecule turns it on. Operons. In prokaryotes, genes that code for enzymes all related to a single metabolic process tend to be organized into clusters within the genome, called operons. An operon is usually controlled by a single regulatory unit. Regulatory Elements. cis-acting element: the regulatory region of the DNA that binds the molecules that influence expression of the genes in the operon. It is almost always upstream (5’) to the genes in the operon. trans-acting element: the molecule(s) that interact with the cis-element and influence expression of the genes in the operon. The lac operon. The lac operon contains the genes that must be expressed if the bacterium is to use the disaccharide lactose as the primary energy source.

Regulation of Gene Expression

CAMPBELL BIOLOGY IN FOCUS, SECOND EDITION. URRY • CAIN • WASSERMAN • MINORSKY • REECE. 15. Regulation of Gene Expression. Lecture Presentations by Kathleen Fitzpatrick and Nicole Tunbridge, Simon Fraser University. © 2016 Pearson Education, Inc. Overview: Beauty in the Eye of the Beholder. Prokaryotes and eukaryotes alter gene expression in response to their changing environment. Multicellular eukaryotes also develop and maintain multiple cell types. Concept 15.1: Bacteria often respond to environmental change by regulating transcription. Natural selection has favored bacteria that produce only the gene products needed by the cell. A cell can regulate the production of enzymes by feedback inhibition or by gene regulation. Gene expression in bacteria is controlled by a mechanism described as the operon model. [Figure 15.2: (a) Regulation of enzyme activity; (b) Regulation of enzyme production. Labels: precursor; genes trpE, trpD, trpC, trpB, trpA that encode enzymes 1, 2, and 3; feedback inhibition; regulation of gene expression; tryptophan.] Operons: The Basic Concept. A group of functionally related genes can be coordinately controlled by a single “on-off switch”. The regulatory “switch” is a segment of DNA called an operator, usually positioned within the promoter. An operon is the entire stretch of DNA that includes the operator, the promoter, and the genes that they control. The operon can be switched off by a protein repressor. The repressor prevents gene transcription by binding to the operator and blocking RNA polymerase. The repressor is the product of a separate regulatory gene.

Ch. 18 Regulation of Gene Expression

Ch. 18 Regulation of Gene Expression. Human genome has around 23,688 genes (Scientific American 2/2006). Essential Questions: How is transcription regulated? How are genes expressed? Bacteria regulate transcription based on their environment: 1. They can adjust the activity of enzymes already present (e.g. enzyme 1 is inhibited by the final product). 2. They can adjust the level of certain enzymes by regulating the genes that code for the enzyme. Operon model, the tryptophan example: the five genes that code for the subunits of the enzymes are clustered together. Grouping genes that are related is advantageous: only one "switch" is needed to turn them on or off. The "switch" is the operator, a segment of DNA located within the promoter that controls RNA polymerase's access to the genes. Operon = the operator, the promoter, and the genes they control. The trp operon is an example in E. coli. An operon can be switched off by a repressor protein, which binds to the operator and blocks attachment of RNA polymerase to the promoter. The trp repressor is made from a regulatory gene called trpR. trpR has its own promoter. Regulatory genes are expressed continuously; binding of repressors to operators is reversible. The trp repressor is an allosteric protein: it has active and inactive shapes. The trp repressor is synthesized in inactive form. If tryptophan binds to the trp repressor at an allosteric site, the repressor becomes active and can attach to the operator. In this case tryptophan is a corepressor, a small molecule that cooperates with a repressor protein to switch an operon off. Two types of negative gene regulation: Repressible operons: transcription is usually on, but is inhibited by the corepressor. Ex.