Essential Laboratory Equipments

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


A Method of Detecting Viral Contamination in Parenteral Solutions

University of the Pacific Scholarly Commons University of the Pacific Theses and Dissertations Graduate School 1978 A Method Of Detecting Viral Contamination In Parenteral Solutions. Joseph Alexander Woelfel University of the Pacific Follow this and additional works at: https://scholarlycommons.pacific.edu/uop_etds Part of the Pharmacy and Pharmaceutical Sciences Commons Recommended Citation Woelfel, Joseph Alexander. (1978). A Method Of Detecting Viral Contamination In Parenteral Solutions. University of the Pacific, Dissertation. https://scholarlycommons.pacific.edu/uop_etds/3240 This Dissertation is brought to you for free and open access by the Graduate School at Scholarly Commons. It has been accepted for inclusion in University of the Pacific Theses and Dissertations by an authorized administrator of Scholarly Commons. For more information, please contact [email protected]. A METHOD OF DETECTING VIRAL CONTAMINATION IN PARENTERAL SOLUTIONS A Dissertation Presented to the Faculty of the School of Pharmacy the University of the Pacific In Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy by Joseph Alexander Woelfel 1 July 1978 Copyright © 1978 Joseph Alexander Woelfel All Rights Reserved This dissertation, written and submitted by JOSEPH ALEXANDER WOELFEL is approved for recommendation to the Committee on Graduate Studies, University of the Pacific Dean of the School or Department Chairman: Dissertation Committee: Chairman Dated A METHOD OF DETECTING VIRAL CONTAMINATION IN PARENTERAL SOLUTIONS Abstract of Dissertation The presence of contaminants in parenteral solutions is a constant nemesis against which pharmaceutical manufacturers, as well as medical, pharmacy, and nursing practitioners must vigilantly struggle to provide quality health care. -

Application of Machine Learning to Toolmarks: Statistically Based Methods for Impression Pattern Comparisons

The author(s) shown below used Federal funds provided by the U.S. Department of Justice and prepared the following final report: Document Title: Application of Machine Learning to Toolmarks: Statistically Based Methods for Impression Pattern Comparisons Author: Nicholas D. K. Petraco, Ph.D.; Helen Chan, B.A.; Peter R. De Forest, D.Crim.; Peter Diaczuk, M.S.; Carol Gambino, M.S.; James Hamby, Ph.D.; Frani L. Kammerman, M.S.; Brooke W. Kammrath, M.A., M.S.; Thomas A. Kubic, M.S., J.D., Ph.D.; Loretta Kuo, M.S.; Patrick McLaughlin; Gerard Petillo, B.A.; Nicholas Petraco, M.S.; Elizabeth W. Phelps, M.S.; Peter A. Pizzola, Ph.D.; Dale K. Purcell, M.S.; Peter Shenkin, Ph.D. Document No.: 239048 Date Received: July 2012 Award Number: 2009-DN-BX-K041 This report has not been published by the U.S. Department of Justice. To provide better customer service, NCJRS has made this Federally-funded grant final report available electronically in addition to traditional paper copies. Opinions or points of view expressed are those of the author(s) and do not necessarily reflect the official position or policies of the U.S. Department of Justice. This document is a research report submitted to the U.S. Department of Justice. This report has not been published by the Department. Report Title: Application of Machine Learning to Toolmarks: Statistically Based Methods for Impression Pattern Comparisons Award Number: 2009-DN-BX-K041 Authors: Nicholas D. -

Forensic Laboratories: Handbook for Facility Planning, Design, Construction, and Moving

Forensic Laboratories: Handbook for Facility Planning, Design, Construction, and Moving Some figures, charts, forms, and tables are not included in this PDF file. To view this document in its entirety, order a photocopy from NCJRS. U.S. Department of Justice Office of Justice Programs National Institute of Justice Forensic Laboratories: Handbook for Facility Planning, Design, Construction, and Moving RESEARCH REPORT National Institute of Justice, Department of Justice National Institute of Standards and Technology, Department of Commerce U.S. Department of Justice Office of Justice Programs 810 Seventh Street N.W. Washington, DC 20531 Janet Reno Attorney General U.S. Department of Justice Raymond C. Fisher Associate Attorney General Laurie Robinson Assistant Attorney General Noël Brennan Deputy Assistant Attorney General Jeremy Travis Director, National Institute of Justice Office of Justice Programs World Wide Web Site: http://www.ojp.usdoj.gov National Institute of Justice World Wide Web Site: http://www.ojp.usdoj.gov/nij U.S. Department of Justice Office of Justice Programs National Institute of Justice Forensic Laboratories: Handbook for Facility Planning, Design, Construction, and Moving Law Enforcement and Corrections Standards and Testing Program Coordinated by Office of Law Enforcement Standards National Institute of Standards and Technology Gaithersburg, MD 20899–0001 April 1998 NCJ 168106 National Institute of Justice Jeremy Travis Director This technical effort to develop this report was conducted under Interagency Agreement No. -

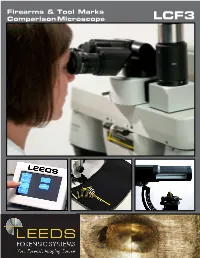

Firearms & Tool Marks Comparison Microscope

Firearms & Tool Marks Comparison Microscope LCF3 The LCF3 Firearms & Tool Marks Comparison Microscope is a robust and powerful system offering outstanding performance, ergonomic comfort, and incredible versatility. Leeds has designed the system incorporating feedback from the first-hand experience of forensic examiners. PERFORMANCE • The LCF3 is built with world-class Olympus apochromatically corrected optics, providing crisp, aberration-free, high-resolution images. The macro bodies, with a 16:1 zoom ratio and built-in aperture diaphragms, provide the examiner with 14 matched magnification positions to choose from. The optics are parcentric and parfocal throughout the zoom range. Leeds’ technicians align all 14 of the click stop settings to assure that the magnifications of the right and left zoom bodies are matched. This matching is completed using N.I.S.T. (National Institute of Standards and Technology) traceable standards and includes an ISO 17025 accredited Certificate of Calibration with each LCF3. Optional objectives are available to provide alternate magnification ranges and working distances for the examiners. • Low profile stages help maintain an ergonomic viewing position and place the X and Y controls near the coarse and fine focus knobs. These mechanical stages and focus units are positioned to minimize repetitive hand-over-wrist motions. The stage mounts are placed on adjustable posts, allowing the stages to be removed from the work surface to accommodate large samples. • The LCF3 optical bridge produces an erect, un-reversed image with a large 22mm field of view. Compared images can be viewed as 100% right, 100% left, and divided or overlapped into any ratio. -

Physical Evidence Manual

If you have issues viewing or accessing this file contact us at NCJRS.gov. City of Phoenix Physical Evidence Manual 142520 U.S. Department of Justice National Institute of Justice This document has been reproduced exactly as received from the person or organization originating it. Points of view or opinions stated in this document are those of the authors and do not necessarily represent the official position or policies of the National Institute of Justice. Permission to reproduce this copyrighted material has been granted by Phoenix Police Department (AZ) to the National Criminal Justice Reference Service (NCJRS). Further reproduction outside of the NCJRS system requires permission of the copyright owner. Phoenix Police Department Crime Detection Laboratory CITY OF PHOENIX POLICE DEPARTMENT Crime Detection Laboratory WILLIAM J. COLLIER Director Edited by Raymond Gieszl 1990 CONTENTS I. Introduction 3-4; II. Laboratory 5; III. Function and Services 6-7; IV. General Instruction for Collection and Preservation of Physical Evidence 8-10; V. Crime Scene Processing and Reconstruction 11-15; VI. Marijuana, Narcotics and Dangerous Drugs 16-18; VII. Prescription Only Drugs 19-20; VIII. Toxicology 21-22; IX. Blood stains 23-29; X. Hair 30-32; XI. Seminal -

Forensic Applications of Microscopy: Fibers 99

© Darren Baker/ShutterStock, Inc. © Jones & Bartlett Learning, LLC. NOT FOR SALE OR DISTRIBUTION. CHAPTER The Microscope -

High School Science Materials Ordering List 2017

Featuring 2017 Grades 9–12 High School Science Materials Ordering List Biology: Pages 3–10; Chemistry: Pages 11–15; Earth Science: Pages 16–19; Environmental Science: Pages 20–22; Physics: Pages 23–27; STEM: Pages 28–33. We are your essential resource for a well-stocked science classroom. www.freyscientific.com 800-225-3739 Ordering Information EASY ORDERING Phone: 800-225-3739 Call toll-free from 8:00am to 5:30pm (EST), Monday through Friday. Please have your credit card or purchase order number handy. From outside the U.S. call: 603-579-3400 Fax: 877-256-3739 Fax your order form or authorized purchase order toll-free 24 hours a day, 7 days a week. From outside the U.S. fax to: 603-886-4632 Order by mail Send your authorized purchase order to: Frey Scientific P.O. Box 3000 Nashua NH 03061-3000 SHIPPING AND HANDLING A shipping and handling charge of 12% will be added to all orders, with a minimum charge of $5.00. Standard Delivery: US 12%; International 30%. Minimum Charge: Continental US $5.00; AK, HI and Int’l $15.00. Standard delivery is UPS Ground or equivalent. Overnight, second-day, and other shipping methods are available at an additional cost. Damages Please call customer service within 5 days of delivery to report damaged shipments. All carriers reserve the right to inspect damaged shipments. We suggest that visibly damaged shipments be refused at the time of delivery. EASY PAYMENT Credit Card and P-Card Please include credit card name, number, expiration date and authorized signature. Call us for more information if you would -

An Access-Dictionary of Internationalist High Tech Latinate English

An Access-Dictionary of Internationalist High Tech Latinate English Excerpted from Word Power, Public Speaking Confidence, and Dictionary-Based Learning, Copyright © 2007 by Robert Oliphant, columnist, Education News Author of The Latin-Old English Glossary in British Museum MS 3376 (Mouton, 1966) and A Piano for Mrs. Cimino (Prentice Hall, 1980) INTRODUCTION Strictly speaking, this is simply a list of technical terms: 30,680 of them presented in an alphabetical sequence of 52 professional subject fields ranging from Aeronautics to Zoology. Practically considered, though, every item on the list can be quickly accessed in the Random House Webster’s Unabridged Dictionary (RHU), updated second edition of 2007, or in its CD-ROM WordGenius® version. So what’s here is actually an in-depth learning tool for mastering the basic vocabularies of what today can fairly be called American-Pronunciation Internationalist High Tech Latinate English. Dictionary authority. This list, by virtue of its dictionary link, has far more authority than a conventional professional-subject glossary, even the one offered online by the University of Maryland Medical Center. American dictionaries, after all, have always assigned their technical terms to professional experts in specific fields, identified those experts in print, and in effect held them responsible for the accuracy and comprehensiveness of each entry. Even more important, the entries themselves offer learners a complete sketch of each target word (headword). Memorization. For professionals, memorization is a basic career requirement. Any physician will tell you how much of it is called for in medical school and how hard it is, thanks to thousands of strange, exotic shapes like <myocardium> that have to be taken apart in the mind and reassembled like pieces of an unpronounceable jigsaw puzzle. -

JAPSON Analytical Products Spectrum

Analytical Product Spectrum JAMBU PERSHAD & SONS Address : 6275/22 Nicholson Road, Ambala Cantt. -133 001 INDIA Phone : 0091-171-4006897, 4006441 | Fax : 0091-171-2602666, 4005444 E-mail : [email protected], [email protected] | Website : www.japson.com "Innovation is a key for turning ideas into reality" JAPSON is a company established in 1946 and dedicated to the field of Scientific, Laboratory, School, Education, Dairy, Hospital and Analytical Equipment. It was one of the first companies to start catering to Schools, Colleges, Universities and Research Laboratories in the local markets of India and then to institutions in Pakistan, Ceylon and Burma. Subsequently, in the seventies, JAPSON made forays into the South-East Asian markets of Singapore, Malaysia and Thailand. After the early Eighties the customer base spread all across the globe and Jambu Pershad and Sons was awarded International Contracts in the Philippines, Oman, Uganda, Sudan, Ethiopia, Kuwait, Yemen, Jordan, Kenya, Iraq (UN Oil for Food program), Zambia, Ghana, Sri Lanka and the Caribbean. All the contracts have been successfully executed and completed to the full satisfaction of the respective Ministries of Education, United Nations bodies, World Bank projects and the Asian Development Bank. Other than global contracts, JAPSON has a wide dealer network in all parts of the globe, including major parts of Africa, Asia, the Middle East, Europe and the Caribbean, resulting in a healthy understanding of each market. JAPSON also acts as an OEM manufacturer for various European suppliers. Since the Nineties JAPSON, with its growing team of Engineers, Science graduates and Diploma Holders, has forayed into the field of Analytical and Research Equipment. We at JAPSON work towards understanding the end customer's needs and providing the complete solution for University, Industry, Research and Analytical Laboratories. -

Best Practice Manual for the Microscopic Examination of Human

BPM for the Microscopic Examination and Comparison of Human and Animal Hair ENFSI-BPM-THG-03 (vs.01) Best Practice Manual for the Microscopic Examination and Comparison of Human and Animal Hair ENFSI-BPM-THG-03 Version 01 - November 2015 With the financial support of the Prevention of and Fight against Crime Programme European Commission - Directorate-General Home Affairs Road Accident Reconstruction Background This Best Practice Manual (BPM) belongs to a series of 10 BPMs issued by the European Network of Forensic Science Institutes (ENFSI) in November 2015. The series covers the following forensic disciplines: 1. Forensic Examination of Digital Technology 2. Forensic Examination of Handwriting 3. Chemographic Methods in Gunshot Residue Analysis 4. Road Accident Reconstruction 5. Microscopic Examination and Comparison of Human and Animal Hair 6. Fingerprint Examination 7. DNA Pattern Recognition and Comparison 8. Application of Molecular Methods for the Forensic Examination of Non-Human Biological Traces 9. Forensic Recovery, Identification and Analysis of Explosives Traces 10. Forensic Investigation of Fire Scenes which have resulted in Fatalities* 11. Forensic Investigation of Fire Scenes which involve the Clandestine Manufacture of Improvised or Homemade Explosive Devices* 12. Forensic Investigation of Fire Scenes which Involve the Clandestine Manufacture of Illicit Synthetic Drugs* * The three specific areas on Forensic Investigation of Fire Scenes (numbers 10-12) were combined into one BPM ‘Investigation of Fire Scenes’. In the years 2014 and 2015, so-called Activity Teams have - in parallel - developed the 10 BPMs. The activities were performed within the project ‘Towards European Forensic Standardisation through Best Practice Manuals (TEFSBPM)’ and co-ordinated by the ENFSI Quality and Competence Committee. -

Bullet Castings: Recovery of Striations Kristen Fowble Forensic Science Department/Forensic Science and Chemistry

Bullet Castings: Recovery of Striations Kristen Fowble Forensic Science Department/Forensic Science and Chemistry Abstract The objective of this research was to determine the best casting material and method to cast fired bullets. In the forensic firearms field, the comparison of fired bullets using a comparison microscope is the most useful way to match a fired bullet to a particular firearm. Fired bullets can be traced back to the gun from which they were fired by careful examination and comparison of the tiny scratches on their surfaces known as striations. These striations are used to compare a recovered bullet from a scene to a bullet fired from a suspected weapon at the crime laboratory. Images of bullet striations can be entered into the National Integrated Ballistic Information Network (NIBIN) and searched against known and unsolved firearms cases in the database. Instead of sending the questioned bullet from one jurisdiction to another for comparison, castings can be made. This allows one jurisdiction to keep the original evidence and maintain chain of custody, while still providing assistance to another jurisdiction. Further, multiple castings can be made so multiple jurisdictions can conduct their own analyses simultaneously. The first casts were made with Mikrosil® and TRITECHFORENSICS Silicone Casting Material. The casts produced were negative images of the bullets. This means that the striations on the cast were backwards and orientated the opposite way of the bullet’s striations. Although the casts were negative images of the bullet, they still produced quality images of the fired bullet. Since they are negative images of the bullet it is not possible to compare the casts with the bullet; only cast-to-cast comparisons can be done. -

Military Occupational Classification of Enlisted Personnel

MHI Copy 1 WAR DEPARTMENT TECHNICAL MANUAL MILITARY OCCUPATIONAL CLASSIFICATION OF ENLISTED PERSONNEL WAR DEPARTMENT • 12 JULY 1944 WAR DEPARTMENT TECHNICAL MANUAL TM 12-427 This manual together with TM 12-426 (when published) supersedes AR 615-26, 15 September 1942, including C 1, 30 January 1943 and C 2, 22 April 1943; Memorandum W615-29-43, 11 March 1943; Memorandum W615-40-43, 11 April 1943; Memorandum W615-45-43, 13 May 1943; Memorandum W615-60-43, 17 July 1943; Memorandum W615-63-43, 24 August 1943; Memorandum W615-65-43, 26 August 1943; W615-44, 22 March 1944; AC letter 201.6 (18 Oct 43) OC-A-KZ-MB-A, 1 November 1943; section I, Circular No. 43, War Department, 1943; and paragraph 3, Circular No. 141, War Department, 1944. MILITARY OCCUPATIONAL CLASSIFICATION OF ENLISTED PERSONNEL WAR DEPARTMENT 12 JULY 1944 United States Government Printing Office Washington: 1944 TM 12-427 C 1 TECHNICAL MANUAL MILITARY OCCUPATIONAL CLASSIFICATION OF ENLISTED PERSONNEL CHANGES No. 1 WAR DEPARTMENT WASHINGTON 25, D. C., 12 April 1945 TM 12-427, 12 July 1944, is changed as follows: SECTION I. INTRODUCTION TO MILITARY OCCUPATIONAL CLASSIFICATION OF ENLISTED PERSONNEL 1. Insert page 2A containing changes to section I. 2. Delete paragraph 5, Determination of Soldier's … Page 11, under 5a, General. Change "821 Quartermaster Supply Technician" to read "821 Supply Noncommissioned Officer." Page 12, under 6c, Airplane Maintenance and Repair.