Proceedings of the 2nd Annual Digital TV Applications Software Environment



Recommended publications


Interactive Applications for Digital TV Using Internet Legacy – a Successful Case Study

Interactive Applications for Digital TV Using Internet Legacy – A Successful Case Study. Márcio Gurjão Mesquita, Osvaldo de Souza. Abstract: This paper describes the development of an interactive application for Digital TV. The architectural decisions, drawbacks, solutions, advantages and disadvantages of Interactive Digital TV development are discussed. The application, an e-mail client and its server, are presented and the main considerations about its development are shown, especially a protocol developed for communication between Internet systems and Digital TV systems. Index Terms: Protocols, Digital TV, Electronic mail, Interactive TV. I. INTRODUCTION. Developing software applications has always been a hard task. When new paradigms appear, new difficulties come along and software designers must deal with it in order to succeed and, maybe, establish new design and programming paradigms. This paper describes the [...] B. The Big Players. Today there are three main Digital TV standards in the world. DVB is the European standard, ATSC is the American one and ISDB is the Japanese standard. Each one has its own characteristics, advantages and disadvantages. The European standard offers good options for interactive applications, the Japanese is very good on mobile applications and the American is strong on high quality image. C. The Brazilian Digital Television Effort. Television is one of the main communication media in Brazil. More than 90% of Brazilian homes have a TV set, most of which receive only analog signals [1]-[2]. The TV plays an important role as a communication medium integrator in a country of continental size and huge social differences as Brazil, taking information, news and entertainment to the whole country.

Dp15647eng Web Om

Model No: DP15647. Owner's Manual. If you need additional assistance, call toll free 1.800.877.5032. We can help! Table of Contents; Frequently Asked Questions (FAQ). © 2007 Sanyo Manufacturing Corporation. TO THE OWNER: Welcome to the World of Sanyo. Thank you for purchasing this Sanyo High-Definition Digital Television. You made an excellent choice for Performance, Reliability, Features, Value, and Styling. Important Information: Before installing and operating this DTV, read this manual thoroughly. This DTV provides many convenient features and functions. Operating the DTV properly enables you to manage those features and maintain it in good condition for many years to come. If your DTV seems to operate improperly, read this manual again, check operations and cable connections and try the solutions in the "Helpful Hints" sections of this manual. If the problem still persists, please call 1-800-877-5032. We can help! CAUTION: RISK OF ELECTRIC SHOCK. DO NOT OPEN. CAUTION: TO REDUCE THE RISK OF ELECTRIC SHOCK, DO NOT REMOVE COVER (OR BACK). NO USER-SERVICEABLE PARTS INSIDE. REFER SERVICING TO QUALIFIED SERVICE PERSONNEL. THIS SYMBOL INDICATES THAT DANGEROUS VOLTAGE CONSTITUTING A RISK OF ELECTRIC SHOCK IS PRESENT WITHIN THIS UNIT. THIS SYMBOL INDICATES THAT THERE ARE IMPORTANT OPERATING AND MAINTENANCE INSTRUCTIONS IN THE LITERATURE ACCOMPANYING THIS UNIT. WARNING: TO REDUCE THE RISK OF FIRE OR ELECTRIC SHOCK, DO NOT EXPOSE THIS APPLIANCE TO RAIN OR MOISTURE. IMPORTANT SAFETY INSTRUCTIONS. CAUTION: PLEASE ADHERE TO ALL WARNINGS ON THE PRODUCT AND IN THE OPERATING INSTRUCTIONS. BEFORE OPERATING THE PRODUCT, PLEASE READ ALL OF THE SAFETY AND OPERATING INSTRUCTIONS.

MTS400 Series MPEG Test Systems User Manual I Table of Contents

User Manual. MTS400 Series MPEG Test Systems. Volume 1 of 2. 071-1507-05. This document applies to firmware version 1.4 and above. www.tektronix.com Copyright © Tektronix. All rights reserved. Licensed software products are owned by Tektronix or its subsidiaries or suppliers, and are protected by national copyright laws and international treaty provisions. Tektronix products are covered by U.S. and foreign patents, issued and pending. Information in this publication supersedes that in all previously published material. Specifications and price change privileges reserved. TEKTRONIX and TEK are registered trademarks of Tektronix, Inc. Contacting Tektronix: Tektronix, Inc., 14200 SW Karl Braun Drive, P.O. Box 500, Beaverton, OR 97077, USA. For product information, sales, service, and technical support: in North America, call 1-800-833-9200; worldwide, visit www.tektronix.com to find contacts in your area. Warranty 2: Tektronix warrants that this product will be free from defects in materials and workmanship for a period of one (1) year from the date of shipment. If any such product proves defective during this warranty period, Tektronix, at its option, either will repair the defective product without charge for parts and labor, or will provide a replacement in exchange for the defective product. Parts, modules and replacement products used by Tektronix for warranty work may be new or reconditioned to like new performance. All replaced parts, modules and products become the property of Tektronix. In order to obtain service under this warranty, Customer must notify Tektronix of the defect before the expiration of the warranty period and make suitable arrangements for the performance of service.

ANSI/CTA Standard

ANSI/CTA Standard. Line 21 Data Services. ANSI/CTA-608-E R-2014 (Formerly ANSI/CEA-608-E R-2014). April 2008. NOTICE: Consumer Technology Association (CTA)™ Standards, Bulletins and other technical publications are designed to serve the public interest through eliminating misunderstandings between manufacturers and purchasers, facilitating interchangeability and improvement of products, and assisting the purchaser in selecting and obtaining with minimum delay the proper product for his particular need. Existence of such Standards, Bulletins and other technical publications shall not in any respect preclude any member or nonmember of the Consumer Technology Association from manufacturing or selling products not conforming to such Standards, Bulletins or other technical publications, nor shall the existence of such Standards, Bulletins and other technical publications preclude their voluntary use by those other than Consumer Technology Association members, whether the standard is to be used either domestically or internationally. Standards, Bulletins and other technical publications are adopted by the Consumer Technology Association in accordance with the American National Standards Institute (ANSI) patent policy. By such action, the Consumer Technology Association does not assume any liability to any patent owner, nor does it assume any obligation whatever to parties adopting the Standard, Bulletin or other technical publication. Note: The user's attention is called to the possibility that compliance with this standard may require use of an invention covered by patent rights. By publication of this standard, no position is taken with respect to the validity of this claim or of any patent rights in connection therewith. The patent holder has, however, filed a statement of willingness to grant a license under these rights on reasonable and nondiscriminatory terms and conditions to applicants desiring to obtain such a license.

Semi-Automated Mobile TV Service Generation

IEEE Transactions on Broadcasting. Semi-automated Mobile TV Service Generation. Dr Moxian Liu, Member, IEEE, Dr Emmanuel Tsekleves, Member, IEEE, and Prof. John P. Cosmas, Senior Member, IEEE. Abstract: Mobile Digital TV (MDTV), the hybrid of Digital Television (DTV) and mobile devices (such as mobile phones), has introduced a new way for people to watch DTV and has brought new opportunities for development in the DTV industry. Nowadays, the development of the next generation MDTV service has progressed in terms of both hardware layers and software, with interactive services/applications becoming one of the future MDTV service trends. However, current MDTV interactive services still lack in terms of attracting the consumers and the service creation and implementation process relies too much on commercial solutions, resulting in most parts of the process being proprietary. In addition, this has increased the technical demands for developers as well as has increased substantially the cost of producing and maintaining MDTV services. [...] environments from both hardware and software perspectives. The development results on the hardware environment and lower layer protocols are promising with reliable solutions (broadcast networks such as DVB-H and mobile convergence networks such as 3G) being formed. However the implementation of higher layer components is running relatively behind with several fundamental technologies (specifications, protocols, middleware and software) still being under development. The vast majority of current commercial MDTV service applications include free-to-air, Pay Television (PayTV) and Video-on-Demand (VoD) services. When contrasted with the various service types of conventional DTV, MDTV services are still unidirectional, offering basic services that lack in [...]

Z86129/130/131 NTSC Line 21 Decoder

Z86129/130/131 NTSC LINE 21 DECODER

FEATURES

| Device | Speed (MHz) | Pin Count/Package Types | Standard Temp. Range | On-Screen Display & Closed Captioning | Automatic Data Extraction: V-Chip | Automatic Data Extraction: Time of Day |
|---|---|---|---|---|---|---|
| Z86129 | 12 | 18-Pin DIP, SOIC | 0° to +70°C | Yes | Yes | Yes |
| Z86130 | 12 | 18-Pin DIP, SOIC | 0° to +70°C | No | Yes | Yes |
| Z86131 | 12 | 18-Pin DIP, SOIC | 0° to +70°C | No | No | Yes |

■ Complete Stand-Alone Line 21 Decoder for Closed-Captions and Extended Data Services (XDS).
■ Preprogrammed to Provide Full Compliance with EIA-608 Specifications for Extended Data Services.
■ Automatic Extraction and Serial Output of Special XDS Packets such as Time of Day, Local Time Zone, and Program Rating (V-Chip).
■ Cost-Effective Solution for NTSC Violence Blocking inside Picture-in-Picture (PiP) Windows.
■ Minimal Communications and Control Overhead Provides Simple Implementation of Violence Block, Closed Caption, and Auto Clock Set Features.
■ Programmable, Full Screen On-Screen Display (OSD) for Creating OSD or Captions inside a Picture-in-Picture (PiP) Window (Z86129 only).
■ I²C Serial Data and Control Communication
■ User-Programmable Horizontal Display Position for easy OSD Centering and Adjustment (Z86129 only).

GENERAL DESCRIPTION

The Z86129/130/131 is a stand-alone integrated circuit, capable of processing Vertical Blanking Interval (VBI) data from both fields of the video frame in data conforming to the transmission format defined in the Television Decoder Circuits Act of 1990 and in accordance with the Electronics Industry Association specification 608 (EIA-608). [...]bility, but are ideally suited for Line 21 data slicer applications. The Z86129/130/131 can recover and output to a host processor via the I²C serial bus any XDS data packet defined [...]

Downloads and Documentation Are 12 Implementation Details

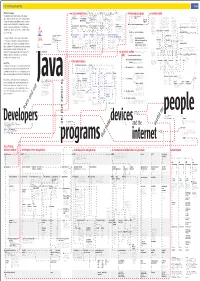

Java™ Technology Concept Map. What is Java Technology? This diagram is a model of Java™ technology. The diagram [...] explains Java technology by placing it in the context of related [...] Legible node text from the diagram: Sun works with companies within the context of the Java Community Process (JCP). Members join the JCP by signing the JSPA. The Java Specification Participation Agreement is a one-year renewable agreement that allows signatories to become members of the JCP. Alternatively, developers can sign the more limited Individual Expert [...] The Java programming language is defined by the Java Language Specification and is used to write programs and class libraries. Class libraries are organized collections of prebuilt classes and functions used to [...] An application programming interface is the written or understood specification of how a piece of software interacts with the outside world. Particular to Java, interfaces are source code files that define a set of [...] Abstract classes permit child classes to inherit a defined method or to create groups of related [...] The Java language has roots in C, Objective C, and [...]

Preparing for the Broadcast Analog Television Turn-Off: How to Keep Cable Subscribers’ TVs from Going Dark

White Paper. Preparing for the Broadcast Analog Television Turn-Off: How to Keep Cable Subscribers’ TVs from Going Dark. Learn about the NTSC-to-ATSC converter/receiver and how TANDBERG Television’s RX8320 ATSC Broadcast Receiver solves the Analog Turn-Off issues discussed in this paper. Matthew Goldman, Vice President of Technology, TANDBERG Television, part of the Ericsson Group. First presented at SCTE Cable-Tec Expo® 2008 in Philadelphia, Pennsylvania. © TANDBERG Television 2008. All rights reserved. Table of Contents: 1. The “Great Analog Television Turn-Off”; 1.1 Receiving Over-the-Air TV Transmissions; 1.2 ATSC DTV to NTSC Analog Conversion; 2. Video Down-Conversion; 2.1 Active Format Description; 2.2 Bar Data; 2.3 Color Space Correction; 3. Audio Processing

Interview, Promotions, Events Calendar

INTERVIEW: Bjarne Stroustrup, the creator of C++. PROMOTIONS. EVENTS CALENDAR. http://revista.espiritolivre.org | #024 | March 2011. Programming Languages. Digital Wiretaps, p. 21. Internet TV on Ubuntu, p. 70. Table of Contents and Page Numbering in LibreOffice, p. 57. Browsing on Small Devices, p. 74. Penetration Testing with Free Software, p. 65. Accessible Linux, p. 88. Changing MAC Addresses, p. 69. Women and IT: Be One of Them Too, p. 90. LICENSE. Revista Espírito Livre | March 2011 | http://revista.espiritolivre.org | 02. EDITORIAL / MASTHEAD. Programming your life... This March, Revista Espírito Livre takes on a subject that many people regard as a seven-headed beast: programming languages. Whether or not you are a developer, programming is a daily act. Our relatives program their chores, your child programs to pass the university entrance exam, you program yourself to meet your obligations. Programming oneself is an everyday act, and not one exclusive to software developers. So why do countless people see "their worst nightmares" take shape in programming? Is it really that complex? Is it perhaps too easy? There are those who say so and even dismiss the difficulties many people encounter in this branch of computing, which is always short of qualified labor for the market. Students in various computing courses find in this part of the [...] MASTHEAD. General Director: João Fernando Costa Júnior. Editor: João Fernando Costa Júnior. Proofreading: Aécio Pires, Alessandro Ferreira Leite, Alexandre A. Borba, Carlos Alberto V. Loyola Júnior, Daniel Bessa, Eduardo Charquero, Felipe Buarque de Queiroz, Fernando Mercês, Larissa Ventorim Costa, Murilo Machado, Otávio Gonçalves de Santana, Rodolfo M. [...]

7760CCM-HD HD/SD-SDI Closed Caption CEA-608/CEA-708 Translator, Decoder & Analyzer

7700 MultiFrame Manual. 7760CCM-HD HD/SD-SDI Closed Caption CEA-608/CEA-708 Translator, Decoder & Analyzer. TABLE OF CONTENTS: 1. OVERVIEW; 2. INSTALLATION; 2.1. VIDEO IN AND OUT; 2.2. GENERAL PURPOSE INPUTS AND OUTPUTS; 2.3. 7760CCM-HD HD/SD-SDI CLOSED CAPTION CEA-608/CEA-708 TRANSLATOR, DECODER & ANALYZER CABLE; 2.3.1. AUX I/O Cable End; 2.3.2. DB-9 Communication and GPI/O Cable End; 2.3.3. Cable Connections; 3. SPECIFICATIONS; 3.1. HD/SD SERIAL DIGITAL INPUT; 3.2. RECLOCKED OUTPUT

Java TV API Technical Overview

Java TV™ API Technical Overview: The Java TV API Whitepaper. Version 1.0, November 14, 2000. Authors: Bart Calder, Jon Courtney, Bill Foote, Linda Kyrnitszke, David Rivas, Chihiro Saito, James Van Loo, Tao Ye. Sun Microsystems, Inc. Copyright © 1998, 1999, 2000 Sun Microsystems, Inc. 901 San Antonio Road, Palo Alto, CA 94303 USA. All rights reserved. This product or document is protected by copyright and distributed under licenses restricting its use, copying, distribution, and decompilation. No part of this product or document may be reproduced in any form by any means without prior written authorization of Sun and its licensors, if any. Third-party software, including font technology, is copyrighted and licensed from Sun suppliers. Sun, Sun Microsystems, the Sun Logo, Java, Java TV, JavaPhone, PersonalJava and all Java-based marks, are trademarks or registered trademarks of Sun Microsystems, Inc. in the U.S. and other countries. All SPARC trademarks are used under license and are trademarks or registered trademarks of SPARC International, Inc. in the U.S. and other countries. Products bearing SPARC trademarks are based upon an architecture developed by Sun Microsystems, Inc. UNIX is a registered trademark in the U.S. and other countries, exclusively licensed through X/Open Company, Ltd. The OPEN LOOK and Sun™ Graphical User Interface was developed by Sun Microsystems, Inc. for its users and licensees. Sun acknowledges the pioneering efforts of Xerox in researching and developing the concept of visual or graphical user interfaces for the computer industry. Sun holds a non-exclusive license from Xerox to the Xerox Graphical User Interface, which license also covers Sun's licensees who implement OPEN LOOK GUIs and otherwise comply with Sun's written license agreements.

Object-Oriented Programming (Objektorienteeritud programmeerimine)

Object-Oriented Programming. 12 February 2018. Marina Lepp.

Which of the following bands do you like most? 1. Curly Strings 2. Patune pool 3. Trad.Attack! 4. Miljardid 5. Metsatöll 6. Smilers 7. Terminaator 8. Winny Puhh 9. Can't say. (Poll chart; bar values 17%, 15%, 14%, 13%, 11%, 9%, 9%, 8%, 5%; the mapping of values to options is not recoverable.)

Today's plan • Introduction – organizational • Why? • How? • When? • Whether? – to the topic • Java • First program • … • …

Why OOP? • Broader horizons, worldview – objects, subjects • Learning – a continuation of first-semester programming • Programmeerimine (Programming) • Programmeerimise alused, Programmeerimise alused II (Basics of Programming, Basics of Programming II)

A prerequisite for several courses • LTAT.03.005 Algorithms and Data Structures • LTAT.05.003 Software Engineering • LTAT.05.004 Web Application Development • MTAT.03.032 User Interface Design • MTAT.03.158 Programming in C++

Language • Java – popularity • http://www.tiobe.com/index.php/content/paperinfo/tpci/index.html – job advertisements • http://www.cvkeskus.ee • http://www.cv.ee/too/infotehnoloogia/q-java

People are different • There may be people here who – study an entirely different field – program every day with great enthusiasm – received grade E in Programming – have successfully taken part in an (international) informatics olympiad – communicate far more fluently in some programming language than in any human language – are regulars of this course – …

Which faculty are you from? 1. Arts and Humanities 2. Social Sciences 3. Medicine 4. Science and Technology 5. Other. (Poll chart; values 90%, 3%, 3%, 1%, 2%; the mapping of values to options is not recoverable.)

The Programming (Basics of Programming II) course was: 1. very easy 2. easy 3. just right 4. difficult 5. very difficult. (Poll chart; values 31%, 29%, 21%, 12%, 7%; the mapping of values to options is not recoverable.)

What grade did you get in the Programming (Basics of Programming II) course? A. A B. [...]