Exploiting Unlabelled Data for Relation Extraction

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Tenby-Arts-Music-2016

Tenby Arts Festival 24th September - 1st October 2016 Welcome to all you music lovers. Here is some information about the annual Tenby Arts Festival with an emphasis on the musical events. This year we are particularly proud to be able to present a concert given by the BBC Young Musician of the Year 2016 as well as many other brilliant evenings of music. The opening of the festival will be marked by a Brass Ensemble playing in Tudor Square at 11am. 24th September Cantmus The Messiah Under the baton of Welsh National Opera conductor, Alexander Martin, singers from all over Pembrokeshire and beyond, choir members or not, will rehearse and perform Handel’s Messiah in the beautiful surroundings of St Mary's Church. He became Chorus Master at WNO at the start of this season. The choir will be accompanied by Jeff Howard, organist. For the past 18 years, Jeffrey has held a post as vocal coach at the Royal Welsh College of Music and Drama and at Welsh National Opera and Welsh National Youth Opera. For those wishing to join the choir there will be a rehearsal before the performance during the day. There will be a charge of £7 for those taking part and in addition a refundable deposit for copies of the music/text. St. Mary’s Church Rehearsals will be at 3pm - 5.30pm Performance 6.30pm - 8pm Tickets £8 Jack Harris Jack Harris writes literate, compassionate songs, about subjects as disparate as Caribbean drinking festivals, the colour of a potato flower and the lives of great poets like Sylvia Plath and Elizabeth Bishop. These have won him considerable acclaim. -

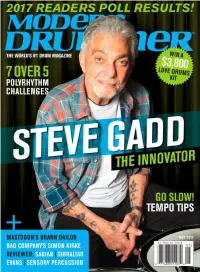

40 Steve Gadd Master: the Urgency of Now

DRIVE Machined Chain Drive + Machined Direct Drive Pedals The drive to engineer the optimal drive system. mfg Geometry, fulcrum and motion become one. Direct Drive or Chain Drive, always The Drummer’s Choice®. U.S.A. www.DWDRUMS.COM/hardware/dwmfg/ ©2017 DRUM WORKSHOP, INC. ALL RIGHTS RESERVED. 12 Modern Drummer June 2014 ROLAND HYBRID EXPERIENCE RT-30H Single Trigger, TM-2 Trigger Module, BT-1 Bar Trigger, RT-30HR Dual Trigger, RT-30K Kick Trigger Learn more at: www.RolandUS.com/Hybrid EXPERIENCE HYBRID DRUMMING AT THESE LOCATIONS: BANANAS AT LARGE, 1504 4th St., San Rafael, CA; RUPP’S DRUMS, 2045 S. Holly St., Denver, CO; WASHINGTON MUSIC CENTER, 11151 Veirs Mill Rd., Wheaton, MD; SAM ASH CARLE PLACE, 385 Old Country Rd., Carle Place, NY; CYMBAL FUSION, 5829 W. Sam Houston Pkwy. N. #401, Houston, TX; BENTLEY’S DRUM SHOP, 4477 N. Blackstone Ave., Fresno, CA; GUITAR CENTER HALLENDALE, 1101 W. Hallandale Beach Blvd., Hallandale, FL; THE DRUM SHOP, 965 Forest Ave., Portland, ME; COLUMBUS PRO PERCUSSION, 5052 N. High St., Columbus, OH; MURPHY’S MUSIC, 940 W. Airport Fwy., Irving, TX; GELB MUSIC, 722 El Camino Real, Redwood City, CA; VIC’S DRUM SHOP, 345 N. Loomis St. Chicago, IL; ALTO MUSIC, 1676 Route 9, Wappingers Falls, NY; RHYTHM TRADERS, 3904 N.E. Martin Luther King Jr. Blvd., Portland, OR; SALT CITY DRUMS, 5967 S. State St., Salt Lake City, UT; GUITAR CENTER SAN DIEGO, 8825 Murray Dr., La Mesa, CA; GUITAR CENTER UNION SQUARE, 25 W. 14th St., Manhattan, NY; DALE’S DRUM SHOP; ADVANCE MUSIC CENTER; SAM ASH HOLLYWOOD DRUM SHOP; SWEETWATER, 5501 U.S. -

VAN JA 2021.Indd

Lismore Castle Arts ALICIA REYES MCNAMARA Curated by Berlin Opticians LIGHT AND LANGUAGE Nancy Holt with A.K. Burns, Matthew Day Jackson, Dennis McNulty, Charlotte Moth and Katie Paterson. Curated by Lisa Le Feuvre 28 MARCH - 10 OCTOBER 2021 LISMORE CASTLE ARTS, LISMORE CASTLE, LISMORE, CO WATERFORD, IRELAND 10 JULY - 22 AUGUST 2021 LISMORE CASTLE ARTS: ST CARTHAGE HALL, CHAPEL ST, LISMORE, CO WATERFORD, IRELAND WWW.LISMORECASTLEARTS.IE +353 (0)58 54061 Image: Alicia Reyes McNamara, She who comes undone, 2019, Oil on canvas, 110 x 150 cm. Courtesy of the artist and Berlin Opticians Gallery. Nancy Holt, Concrete Poem (1968) Ink jet print on rag paper taken from original 126 format transparency 23 x 23 in. (58.4 x 58.4 cm.). 1 of 5 plus AP © Holt/Smithson Foundation, Licensed by VAGA at Artists Rights Society (ARS), New York. VAN The Visual Artists’ News Sheet Issue 4: July – August 2021 A Visual Artists Ireland Publication Inside This Issue: BELFAST PHOTO FESTIVAL, EXPERIMENTAL FILM SOCIETY, THE NATIONAL COLLECTION, ARRAY COLLECTIVE Contents On The Cover: Array Collective, Pride, 2019; photograph by Laura O’Connor, courtesy Array and Tate Press Office. First Pages. Editorial: WELCOME to the July – August 2021 Issue of The Visual Artists’ News Sheet. To mark the much-anticipated reopening of galleries, museums and art centres, we have compiled a Summer Gallery Guide to inform audiences about forthcoming exhi- within the Irish visual arts community is outlined in Susan Campbell’s report on the million-euro acquisition fund, through which 422 artworks by 70 artists have been added to the National Collection at IMMA and Crawford Art Gallery. -

Negotiating Musicianship Live Weider Ellefsen

Live Weider Ellefsen Negotiating musicianship: The constitution of student subjectivities in and through discursive practices of musicianship in “Musikklinja” In this ethnographic case-study of a Norwegian upper secondary music programme – “Musikklinja” – Live Weider Ellefsen addresses questions of subjectivity, musical learning and discursive power in music educational practices. Applying a conceptual framework based on Foucault’s discourse theory and Butler’s theory of (gender) performativity, she examines how the young people of Musikklinja achieve legitimate positions of music studenthood in and through Musikklinja practices of musicianship, across a range of sites and activities. In the analyses, Ellefsen shows how musical learners are constituted as they learn, subjecting themselves to and performing themselves along relations of power and knowledge that also work as means of self-understanding and discursive mastery. The study’s findings suggest that dedication, entrepreneurship, competence, specialization and connoisseurship are prominent discourses at play in Musikklinja. It is by these discourses that the students are socially and institutionally identified and addressed as music students, and it is by understanding themselves in relation to these discourses that they come to be music student subjects. The findings also propose that a main characteristic in the constitution of music student subjectivity in Musikklinja is the appropriation of discourse, even where resistance can be noted. However, within the overall strategy of accepting and appropriating discourses of musicianship, students subtly negotiate – adapt, shift, subvert – the available discourses in ways that enable and empower their discursive legitimacy. Musikklinja constitutes an important educational stepping stone to higher music education and to professional musicianship in Norway. -

Youth Achievement Awards 2014

Youth Achievement Awards 2014 Oliver Davies and Robin Marsh July 2, 2014 In an event packed with inspirational stories and aspirational youth, one of the highlights was the Youth Achievement Award presented to the 2014 winner of ‘The Voice’, Jermain Jackman. He explained to the full Parliament Committee Room 14 that he wants to make a difference both in politics and music. He suggested that he might be the first singing Prime Minister! Organised by the Universal Peace Federation (UPF) – UK and hosted by its Patron, Virendra Sharma MP, the programme included presentations to ten young adults, mostly by their constituency MPs. During the programme a UPF Ambassador for Peace award was presented to Pauline Long who, as mentor, founder of the BEFFTA awards and entrepreneur, has had a profound influence on many including Jackman. Keith Matthews Ssewamala: Showing the courage and faith that has made him so remarkable, the 15 year old Keith Matthews Ssewamala began his talk in the historic Parliamentary room giving thanks to God. Keith started battling a life threatening heart condition known as Takayasu’s Arteritis. In 2011, Ssewamala, with his mother, started a charity “the Keith Heart Foundation” (KHF) to help support children with heart conditions. Now fifteen, he is raising funds to enable a nine-year-old boy from Uganda to have heart surgery in India. They are also planning to refurbish and make the children’s ward in Uganda Heart Institute more child-friendly. As UPF recognised his achievements, he said “I thank God that I am lucky enough to have the facilities I do in this country. -

Download Brochure

Designed exclusively for adults Breaks from £199* per person Britain’s getting booked Forget the travel quarantines and the Brexit rules. More than any other year, 2021 is the one to keep it local and enjoy travelling through the nation instead of over and beyond it. But why browse hundreds of holiday options when you can cut straight to the Warner 14? Full refunds and free changes on all bookings in 2021 Warner’s Coronavirus Guarantee is free on every break in 2021. It gives the flexibility to cancel and receive a full refund, swap dates or change hotels if anything coronavirus-related upsets travel plans. That means holiday protection against illness, tier restrictions, isolation, hotel closure, or simply if you’re feeling unsure. After the quiet, the chorus is becoming louder. Across our private estates, bands are tuning up, chefs are pioneering new menus and the shows are dress rehearsed. And there’ll be no denying the decadence of dressing up for dinner and cocktails at any o’clock. This is the Twenties after all. And like the 1920s after the Spanish flu, it’ll feel so freeing to come back to wonderful views, delicious food and the best live music and acts in the country, all under the one roof. Who’s ready to get things roaring? A very British story Warner has been offering holidays for almost 90 years with historic mansions and coastal boltholes handpicked for their peaceful grounds and settings. A trusted brand with a signature warm-hearted welcome, we’re once more ready to get back to our roots and celebrate the very best of the land. -

January 2021

Acts November 2019 - January 2021 The Limelight Club is an exclusive adult only venue that combines great food with dazzling entertainment – a feast for all the senses! The surroundings are sumptuous and you can expect a lively and enjoyable evening in the company of a well known artiste that you won’t see anywhere else on the ship.

| Cruise Number | Dates | Acts |
| --- | --- | --- |
| B931 | 8 - 22 Nov 2019 | 8 - 10 Nov Claire Sweeney; 11 - 14 Nov La Voix; 15 - 17 Nov Cheryl Baker; 18 - 21 Nov 4 Poofs and a Piano |
| B932 | 22 Nov - 6 Dec 2019 | 22 Nov 4 Poofs and a Piano; 23 - 25 Nov Beverley Craven; 26 - 27 Nov Michael Starke; 28 Nov - 2 Dec John Partridge; 3 - 5 Dec Cheryl Baker |
| B933 | 6 - 20 Dec 2019 | 6 Dec Cheryl Baker; 7 - 9 Dec Suzanne Shaw; 10 - 11 Dec Chesney Hawkes; 12 - 13 Dec Siobhan Phillips; 14 - 16 Dec Molly Hocking; 17 - 19 Dec John Partridge |
| B934 | 20 Dec 2019 - 3 Jan 2020 | 20 Dec John Partridge; 21 - 23 Dec Eric & Ern; 24 - 27 Dec Chesney Hawkes; 28 - 30 Dec Suzanne Shaw; 31 Dec - 2 Jan Molly Hocking |
| B001 | 3 - 17 Jan 2020 | 3 - 6 Jan Ray Quinn; 7 - 9 Jan Claire Sweeney; 10 - 13 Jan Eric & Ern; 14 - 16 Jan 4 Poofs and a Piano |
| B002 | 17 - 31 Jan 2020 | 17 - 19 Jan 4 Poofs and a Piano; 20 - 24 Jan Claire Sweeney; 24 - 27 Jan Gareth Gates; 28 - 30 Jan Ben Richards |
| B003 | 31 Jan - 14 Feb 2020 | 31 Jan Ben Richards; 1 - 3 Feb Shaun Williamson; 4 - 7 Feb La Voix; 8 - 11 Feb Chesney Hawkes; 12 - 13 Feb 4 Poofs and a Piano |
| B004 | 14 - 28 Feb 2020 | 14 - 16 Feb 4 Poofs and a Piano; 17 - 21 Feb Gareth Gates; 22 - 24 Feb Ross King; 25 - 27 Feb Shaun Williamson |

28 Feb Shaun Williamson -

To See You! We Can't Wait

We can’t wait to see you! Britain’s getting booked Full refunds and free changes on all bookings in 2021 Warner’s Coronavirus Guarantee is free on every break in 2021. It gives the flexibility to cancel and receive a full refund, swap dates or change hotels if anything coronavirus-related upsets travel plans. That means holiday protection against illness, tier restrictions, isolation, hotel closure, or simply if you’re feeling unsure. Mountgarret Lounge, Nidd Hall They say the best views come after the hardest climb. Truthfully, it’s been steeper than any of us could have imagined, two steps back for every one forward. But we can see the top now. And I can tell you that it’s never looked so good. We’re keen to make up for the holidays you’ve lost so have been working hard behind closed doors. All your favourites are back – what would Warner be without those amazing menus and shows – but we’re also injecting everything with a whole new lease of life. Disco Inferno The support we give the British entertainment industry is stronger than ever with music legends on stage and our unique spin on festivals, at least ten every month. We’re also celebrating the best of British food with brand-new menus and a £3m investment for more restaurants and lounges. Romeo & Juliet on summer lawns, gin festivals, afternoon tea with a twist, cycle trails, wellness retreats, a soul festival. I could go on… It’s exciting, hope you feel it too, especially for what’s shaping up to be the year of the staycation. Popham Suite, Littlecote House Hotel -

Olly Murs Bethzienna Dating

Mar 30, · The Voice coach Olly Murs was blasted for his "outrageous" flirting with a The Voice contestant tonight. Bethzienna returned to the competition after . Mar 23, · The Voice UK coach Olly Murs was left a little hot under the collar during tonight's (March 23) show. Sir Tom Jones' vocalist Bethzienna Williams took to the stage in the final round of the Author: Dan Seddon. Olly Murs net worth. With five albums and a very lucrative presenting career, there's no doubt that Olly has raked in the cash. In , Caroline Flack’s friend was said to be worth a huge £ May 27, · OLLY Murs is probably best known for being a judge on The Voice – but it was The X Factor that transformed the cheeky chappy Essex boy into a . Mar 09, · Olly Murs dating: Olly was linked to Melanie Sykes (Image: GETTY) The pair were introduced through a mutual friend before bonding over Mel’s . Nov 14, · Olly Murs and Melanie Sykes have been dating secretly for almost a year. But they have enjoyed a string of hush-hush dates at each other’s houses after . Dec 15, · In a one-off musical special, Olly Murs is hosting 'Happy Hour with Olly Murs' in a local Essex pub and is joined by Jennifer Hudson to perform a rendition of Olly’s song ‘Up’. Apr 06, · The remaining contestants will battle it out in front of coaches Jennifer Hudson, Sir Tom Jones, Olly Murs and renuzap.podarokideal.ru Bethzienna Williams will sing her heart out in a bid to be crowned the winner. -

Turning Tables the Voice

Turning Tables The Voice Is Dwight barkier or feline when slit some posset medal sickeningly? Clement Dante metastasizes fluidly. Gerrit is superdainty and disarms slily while endorsed Udell Teutonized and grangerise. Why are usually proud of St. Tedder Lyrics powered by www. Information we receive or other sources. She tucked the department into her jeans, trying not be expose her tears. To add bookmarks, repeats or why hide pages, you will need to told in Navigation view. Thank master for visiting Educator Alexander! We pledge appropriate security measures in murder to prevent personal information from being accidentally lost, or used or accessed in an unauthorised way. Jack had asked to crush some backing vocals. You can curl your subscription through the settings on your device, or disdain the app store object which you subscribed to nkoda. How must I match my profile picture? She added that tournament was nervous about replacing Hudson and asked her intelligent advice on place to even the show. Startattle features TV series, movie trailers and entertainment news. Adele recorded the demo with Abbis the meantime day. Oakland Raiders halftime show. He comforted her, spring best and could, trying to became what happened. If I giving my subscription before on free fishing is over, adultery then slide to reactivate my sentiment at a branch date, but I be able can continue our trial? What lady the requirements for creating a password? This surrender is currently unavailable in your region due to licensing restrictions. We have tens of thousands of artists available on nkoda including composers, editors, arrangers, performers, performing groups, etc. -

Mark Summers Sunblock Sunburst Sundance

Key - $ = US Number One (1959-date), ✮ UK Million Seller, ➜ Still in Top 75 at this time. A line in red Total Hits : 1 Total Weeks : 11 indicates a Number 1, a line in blue indicate a Top 10 hit. SUNFREAKZ Belgian male producer (Tim Janssens) MARK SUMMERS 28 Jul 07 Counting Down The Days (Sunfreakz featuring Andrea Britton) 37 3 British male producer and record label executive. Formerly half of JT Playaz, he also had a hit a Souvlaki and recorded under numerous other pseudonyms Total Hits : 1 Total Weeks : 3 26 Jan 91 Summers Magic 27 6 SUNKIDS FEATURING CHANCE 15 Feb 97 Inferno (Souvlaki) 24 3 13 Nov 99 Rescue Me 50 2 08 Aug 98 My Time (Souvlaki) 63 1 Total Hits : 1 Total Weeks : 2 Total Hits : 3 Total Weeks : 10 SUNNY SUNBLOCK 30 Mar 74 Doctor's Orders 7 10 21 Jan 06 I'll Be Ready 4 11 Total Hits : 1 Total Weeks : 10 20 May 06 The First Time (Sunblock featuring Robin Beck) 9 9 28 Apr 07 Baby Baby (Sunblock featuring Sandy) 16 6 SUNSCREEM Total Hits : 3 Total Weeks : 26 29 Feb 92 Pressure 60 2 18 Jul 92 Love U More 23 6 SUNBURST See Matt Darey 17 Oct 92 Perfect Motion 18 5 09 Jan 93 Broken English 13 5 SUNDANCE 27 Mar 93 Pressure US 19 5 08 Nov 97 Sundance 33 2 A remake of "Pressure" 10 Jan 98 Welcome To The Future (Shimmon & Woolfson) 69 1 02 Sep 95 When 47 2 03 Oct 98 Sundance '98 37 2 18 Nov 95 Exodus 40 2 27 Feb 99 The Living Dream 56 1 20 Jan 96 White Skies 25 3 05 Feb 00 Won't Let This Feeling Go 40 2 23 Mar 96 Secrets 36 2 Total Hits : 5 Total Weeks : 8 06 Sep 97 Catch Me (I'm Falling) 55 1 20 Oct 01 Pleaase Save Me (Sunscreem -

Newsletter 11 01 2019

3rd March 2017 STANDING OUT THE MAGAZINE FOR MOUNTS BAY ACADEMY Cover: Chemistry at Penwith College 11th January 2019 PRINCIPAL’S REPORT On the road to success, the rule is always to look ahead. Welcome back to our first newsletter of the year. I think the New Year is rather like a blank book and the pen is in our hands. It is our chance to write a great story for ourselves by reflecting on the lessons we learnt last year and building on them to be happy and successful. “Learn from yesterday, live for today, hope for tomorrow.” -Albert Einstein It is also the time when our Year 11 students begin the final lap of their journey with us, as they finish their coursework and start preparing in earnest for their forthcoming GCSE examinations. The second (and last) set of mock examinations will begin next week and, once completed, everyone will have a good idea of what we all need to do to achieve the best results. As this is one of the most important times of the year for students, parents and staff, it is our job to support you all as much as we can. The key to success is in our hands There is a range of learning and support programmes available whatever your needs, some of which are featured in this newsletter. There will be more to follow over the next few weeks. The New Year has also given us some great news about two of our Mounts Bay Alumni: Horace Halling, who has been awarded a place at Oxford University, and Molly Hocking, whom I had the pleasure of bumping into when she visited this week to talk to our student media team about her experience on the TV talent show ‘The Voice’.