One Parser to Rule Them All

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Layout-Sensitive Generalized Parsing

Layout-sensitive Generalized Parsing Sebastian Erdweg, Tillmann Rendel, Christian K¨astner,and Klaus Ostermann University of Marburg, Germany Abstract. The theory of context-free languages is well-understood and context-free parsers can be used as off-the-shelf tools in practice. In par- ticular, to use a context-free parser framework, a user does not need to understand its internals but can specify a language declaratively as a grammar. However, many languages in practice are not context-free. One particularly important class of such languages is layout-sensitive languages, in which the structure of code depends on indentation and whitespace. For example, Python, Haskell, F#, and Markdown use in- dentation instead of curly braces to determine the block structure of code. Their parsers (and lexers) are not declaratively specified but hand-tuned to account for layout-sensitivity. To support declarative specifications of layout-sensitive languages, we propose a parsing framework in which a user can annotate layout in a grammar. Annotations take the form of constraints on the relative posi- tioning of tokens in the parsed subtrees. For example, a user can declare that a block consists of statements that all start on the same column. We have integrated layout constraints into SDF and implemented a layout- sensitive generalized parser as an extension of generalized LR parsing. We evaluate the correctness and performance of our parser by parsing 33 290 open-source Haskell files. Layout-sensitive generalized parsing is easy to use, and its performance overhead compared to layout-insensitive parsing is small enough for practical application. 1 Introduction Most computer languages prescribe a textual syntax. -

Modern Compiler Implementation in Java. Second Edition

Team-Fly Modern Compiler Implementation in Java, Second Edition by Andrew W. ISBN:052182060x Appel and Jens Palsberg Cambridge University Press © 2002 (501 pages) This textbook describes all phases of a compiler, and thorough coverage of current techniques in code generation and register allocation, and the compilation of functional and object-oriented languages. Table of Contents Modern Compiler Implementation in Java, Second Edition Preface Part One - Fundamentals of Compilation Ch apt - Introduction er 1 Ch apt - Lexical Analysis er 2 Ch apt - Parsing er 3 Ch apt - Abstract Syntax er 4 Ch apt - Semantic Analysis er 5 Ch apt - Activation Records er 6 Ch apt - Translation to Intermediate Code er 7 Ch apt - Basic Blocks and Traces er 8 Ch apt - Instruction Selection er 9 Ch apt - Liveness Analysis er 10 Ch apt - Register Allocation er 11 Ch apt - Putting It All Together er 12 Part Two - Advanced Topics Ch apt - Garbage Collection er 13 Ch apt - Object-Oriented Languages er 14 Ch apt - Functional Programming Languages er 15 Ch apt - Polymorphic Types er 16 Ch apt - Dataflow Analysis er 17 Ch apt - Loop Optimizations er 18 Ch apt - Static Single-Assignment Form er 19 Ch apt - Pipelining and Scheduling er 20 Ch apt - The Memory Hierarchy er 21 Ap pe ndi - MiniJava Language Reference Manual x A Bibliography Index List of Figures List of Tables List of Examples Team-Fly Team-Fly Back Cover This textbook describes all phases of a compiler: lexical analysis, parsing, abstract syntax, semantic actions, intermediate representations, instruction selection via tree matching, dataflow analysis, graph-coloring register allocation, and runtime systems. -

Parser Tables for Non-LR(1) Grammars with Conflict Resolution Joel E

View metadata, citation and similar papers at core.ac.uk brought to you by CORE provided by Elsevier - Publisher Connector Science of Computer Programming 75 (2010) 943–979 Contents lists available at ScienceDirect Science of Computer Programming journal homepage: www.elsevier.com/locate/scico The IELR(1) algorithm for generating minimal LR(1) parser tables for non-LR(1) grammars with conflict resolution Joel E. Denny ∗, Brian A. Malloy School of Computing, Clemson University, Clemson, SC 29634, USA article info a b s t r a c t Article history: There has been a recent effort in the literature to reconsider grammar-dependent software Received 17 July 2008 development from an engineering point of view. As part of that effort, we examine a Received in revised form 31 March 2009 deficiency in the state of the art of practical LR parser table generation. Specifically, LALR Accepted 12 August 2009 sometimes generates parser tables that do not accept the full language that the grammar Available online 10 September 2009 developer expects, but canonical LR is too inefficient to be practical particularly during grammar development. In response, many researchers have attempted to develop minimal Keywords: LR parser table generation algorithms. In this paper, we demonstrate that a well known Grammarware Canonical LR algorithm described by David Pager and implemented in Menhir, the most robust minimal LALR LR(1) implementation we have discovered, does not always achieve the full power of Minimal LR canonical LR(1) when the given grammar is non-LR(1) coupled with a specification for Yacc resolving conflicts. We also detail an original minimal LR(1) algorithm, IELR(1) (Inadequacy Bison Elimination LR(1)), which we have implemented as an extension of GNU Bison and which does not exhibit this deficiency. -

Validating LR(1) Parsers

Validating LR(1) Parsers Jacques-Henri Jourdan1;2, Fran¸coisPottier2, and Xavier Leroy2 1 Ecole´ Normale Sup´erieure 2 INRIA Paris-Rocquencourt Abstract. An LR(1) parser is a finite-state automaton, equipped with a stack, which uses a combination of its current state and one lookahead symbol in order to determine which action to perform next. We present a validator which, when applied to a context-free grammar G and an automaton A, checks that A and G agree. Validating the parser pro- vides the correctness guarantees required by verified compilers and other high-assurance software that involves parsing. The validation process is independent of which technique was used to construct A. The validator is implemented and proved correct using the Coq proof assistant. As an application, we build a formally-verified parser for the C99 language. 1 Introduction Parsing remains an essential component of compilers and other programs that input textual representations of structured data. Its theoretical foundations are well understood today, and mature technology, ranging from parser combinator libraries to sophisticated parser generators, is readily available to help imple- menting parsers. The issue we focus on in this paper is that of parser correctness: how to obtain formal evidence that a parser is correct with respect to its specification? Here, following established practice, we choose to specify parsers via context-free grammars enriched with semantic actions. One application area where the parser correctness issue naturally arises is formally-verified compilers such as the CompCert verified C compiler [14]. In- deed, in the current state of CompCert, the passes that have been formally ver- ified start at abstract syntax trees (AST) for the CompCert C subset of C and extend to ASTs for three assembly languages. -

Parse Forest Diagnostics with Dr. Ambiguity

View metadata, citation and similar papers at core.ac.uk brought to you by CORE provided by CWI's Institutional Repository Parse Forest Diagnostics with Dr. Ambiguity H. J. S. Basten and J. J. Vinju Centrum Wiskunde & Informatica (CWI) Science Park 123, 1098 XG Amsterdam, The Netherlands {Jurgen.Vinju,Bas.Basten}@cwi.nl Abstract In this paper we propose and evaluate a method for locating causes of ambiguity in context-free grammars by automatic analysis of parse forests. A parse forest is the set of parse trees of an ambiguous sentence. Deducing causes of ambiguity from observing parse forests is hard for grammar engineers because of (a) the size of the parse forests, (b) the complex shape of parse forests, and (c) the diversity of causes of ambiguity. We first analyze the diversity of ambiguities in grammars for programming lan- guages and the diversity of solutions to these ambiguities. Then we introduce DR.AMBIGUITY: a parse forest diagnostics tools that explains the causes of ambiguity by analyzing differences between parse trees and proposes solutions. We demonstrate its effectiveness using a small experiment with a grammar for Java 5. 1 Introduction This work is motivated by the use of parsers generated from general context-free gram- mars (CFGs). General parsing algorithms such as GLR and derivates [35,9,3,6,17], GLL [34,22], and Earley [16,32] support parser generation for highly non-deterministic context-free grammars. The advantages of constructing parsers using such technology are that grammars may be modular and that real programming languages (often requiring parser non-determinism) can be dealt with efficiently1. -

A Declarative Extension of Parsing Expression Grammars for Recognizing Most Programming Languages

Journal of Information Processing Vol.24 No.2 256–264 (Mar. 2016) [DOI: 10.2197/ipsjjip.24.256] Regular Paper A Declarative Extension of Parsing Expression Grammars for Recognizing Most Programming Languages Tetsuro Matsumura1,†1 Kimio Kuramitsu1,a) Received: May 18, 2015, Accepted: November 5, 2015 Abstract: Parsing Expression Grammars are a popular foundation for describing syntax. Unfortunately, several syn- tax of programming languages are still hard to recognize with pure PEGs. Notorious cases appears: typedef-defined names in C/C++, indentation-based code layout in Python, and HERE document in many scripting languages. To recognize such PEG-hard syntax, we have addressed a declarative extension to PEGs. The “declarative” extension means no programmed semantic actions, which are traditionally used to realize the extended parsing behavior. Nez is our extended PEG language, including symbol tables and conditional parsing. This paper demonstrates that the use of Nez Extensions can realize many practical programming languages, such as C, C#, Ruby, and Python, which involve PEG-hard syntax. Keywords: parsing expression grammars, semantic actions, context-sensitive syntax, and case studies on program- ming languages invalidate the declarative property of PEGs, thus resulting in re- 1. Introduction duced reusability of grammars. As a result, many developers need Parsing Expression Grammars [5], or PEGs, are a popu- to redevelop grammars for their software engineering tools. lar foundation for describing programming language syntax [6], In this paper, we propose a declarative extension of PEGs for [15]. Indeed, the formalism of PEGs has many desirable prop- recognizing context-sensitive syntax. The “declarative” exten- erties, including deterministic behaviors, unlimited look-aheads, sion means no arbitrary semantic actions that are written in a gen- and integrated lexical analysis known as scanner-less parsing. -

Adaptive LL(*) Parsing: the Power of Dynamic Analysis

Adaptive LL(*) Parsing: The Power of Dynamic Analysis Terence Parr Sam Harwell Kathleen Fisher University of San Francisco University of Texas at Austin Tufts University [email protected] [email protected] kfi[email protected] Abstract PEGs are unambiguous by definition but have a quirk where Despite the advances made by modern parsing strategies such rule A ! a j ab (meaning “A matches either a or ab”) can never as PEG, LL(*), GLR, and GLL, parsing is not a solved prob- match ab since PEGs choose the first alternative that matches lem. Existing approaches suffer from a number of weaknesses, a prefix of the remaining input. Nested backtracking makes de- including difficulties supporting side-effecting embedded ac- bugging PEGs difficult. tions, slow and/or unpredictable performance, and counter- Second, side-effecting programmer-supplied actions (muta- intuitive matching strategies. This paper introduces the ALL(*) tors) like print statements should be avoided in any strategy that parsing strategy that combines the simplicity, efficiency, and continuously speculates (PEG) or supports multiple interpreta- predictability of conventional top-down LL(k) parsers with the tions of the input (GLL and GLR) because such actions may power of a GLR-like mechanism to make parsing decisions. never really take place [17]. (Though DParser [24] supports The critical innovation is to move grammar analysis to parse- “final” actions when the programmer is certain a reduction is time, which lets ALL(*) handle any non-left-recursive context- part of an unambiguous final parse.) Without side effects, ac- free grammar. ALL(*) is O(n4) in theory but consistently per- tions must buffer data for all interpretations in immutable data forms linearly on grammars used in practice, outperforming structures or provide undo actions. -

Formal Languages - 3

Formal Languages - 3 • Ambiguity in PLs – Problems with if-then-else constructs – Harder problems • Chomsky hierarchy for formal languages – Regular and context-free languages – Type 1: Context-sensitive languages – Type 0 languages and Turing machines Formal Languages-3, CS314 Fall 01© BGRyder 1 Dangling Else Ambiguity (Pascal) Start ::= Stmt Stmt ::= Ifstmt | Astmt Ifstmt ::= IF LogExp THEN Stmt | IF LogExp THEN Stmt ELSE Stmt Astmt ::= Id := Digit Digit ::= 0|1|2|3|4|5|6|7|8|9 LogExp::= Id = 0 Id ::= a|b|c|d|e|f|g|h|i|j|k|l|m|n|o|p|q|r|s|t|u|v|w|x|y|z How are compound if statements parsed using this grammar?? Formal Languages-3, CS314 Fall 01© BGRyder 2 1 IF x = 0 THEN IF y = 0 THEN z := 1 ELSE w := 2; Start Parse Tree 1 Stmt Ifstmt IF LogExp THEN Stmt Ifstmt Id = 0 IF LogExp THEN Stmt ELSE Stmt x Id = 0 Astmt Astmt Id := Digit y Id := Digit z 1 w 2 Formal Languages-3, CS314 Fall 01© BGRyder 3 IF x = 0 THEN IF y = 0 THEN z := 1 ELSE w := 2; Start Stmt Parse Tree 2 Ifstmt Q: which tree is correct? IF LogExp THEN Stmt ELSE Stmt Id = 0 Ifstmt Astmt IF LogExp THEN Stmt x Id := Digit Astmt Id = 0 w 2 Id := Digit y z 1 Formal Languages-3, CS314 Fall 01© BGRyder 4 2 How Solve the Dangling Else? • Algol60: use block structure if x = 0 then begin if y = 0 then z := 1 end else w := 2 • Algol68: use statement begin/end markers if x = 0 then if y = 0 then z := 1 fi else w := 2 fi • Pascal: change the if statement grammar to disallow parse tree 2; that is, always associate an else with the closest if Formal Languages-3, CS314 Fall 01© BGRyder 5 New Pascal Grammar Start ::= Stmt Stmt ::= Stmt1 | Stmt2 Stmt1 ::= IF LogExp THEN Stmt1 ELSE Stmt1 | Astmt Stmt2 ::= IF LogExp THEN Stmt | IF LogExp THEN Stmt1 ELSE Stmt2 Astmt ::= Id := Digit Digit ::= 0|1|2|3|4|5|6|7|8|9 LogExp::= Id = 0 Id ::= a|b|c|d|e|f|g|h|i|j|k|l|m|n|o|p|q|r|s|t|u|v|w|x|y|z Note: only if statements with IF..THEN..ELSE are allowed after the THEN clause of an IF-THEN-ELSE statement. -

![Maximal-Munch” Tokenization in Linear Time Tom Reps [TOPLAS 1998]](https://docslib.b-cdn.net/cover/2594/maximal-munch-tokenization-in-linear-time-tom-reps-toplas-1998-462594.webp)

Maximal-Munch” Tokenization in Linear Time Tom Reps [TOPLAS 1998]

Fall 2016-2017 Compiler Principles Lecture 1: Lexical Analysis Roman Manevich Ben-Gurion University of the Negev Agenda • Understand role of lexical analysis in a compiler • Regular languages reminder • Lexical analysis algorithms • Scanner generation 2 Javascript example • Can you some identify basic units in this code? var currOption = 0; // Choose content to display in lower pane. function choose ( id ) { var menu = ["about-me", "publications", "teaching", "software", "activities"]; for (i = 0; i < menu.length; i++) { currOption = menu[i]; var elt = document.getElementById(currOption); if (currOption == id && elt.style.display == "none") { elt.style.display = "block"; } else { elt.style.display = "none"; } } } 3 Javascript example • Can you some identify basic units in this code? keyword ? ? ? ? ? var currOption = 0; // Choose content to display in lower pane. ? function choose ( id ) { var menu = ["about-me", "publications", "teaching", "software", "activities"]; ? for (i = 0; i < menu.length; i++) { currOption = menu[i]; var elt = document.getElementById(currOption); if (currOption == id && elt.style.display == "none") { elt.style.display = "block"; } else { elt.style.display = "none"; } } } 4 Javascript example • Can you some identify basic units in this code? keyword identifier operator numeric literal punctuation comment var currOption = 0; // Choose content to display in lower pane. string literal function choose ( id ) { var menu = ["about-me", "publications", "teaching", "software", "activities"]; whitespace for (i = 0; i < menu.length; i++) { currOption = menu[i]; var elt = document.getElementById(currOption); if (currOption == id && elt.style.display == "none") { elt.style.display = "block"; } else { elt.style.display = "none"; } } } 5 Role of lexical analysis • First part of compiler front-end Lexical Syntax AST Symbol Inter. Code High-level Analysis Analysis Table Rep. -

The Design & Implementation of an Abstract Semantic Graph For

Clemson University TigerPrints All Dissertations Dissertations 12-2011 The esiD gn & Implementation of an Abstract Semantic Graph for Statement-Level Dynamic Analysis of C++ Applications Edward Duffy Clemson University, [email protected] Follow this and additional works at: https://tigerprints.clemson.edu/all_dissertations Part of the Computer Sciences Commons Recommended Citation Duffy, Edward, "The eD sign & Implementation of an Abstract Semantic Graph for Statement-Level Dynamic Analysis of C++ Applications" (2011). All Dissertations. 832. https://tigerprints.clemson.edu/all_dissertations/832 This Dissertation is brought to you for free and open access by the Dissertations at TigerPrints. It has been accepted for inclusion in All Dissertations by an authorized administrator of TigerPrints. For more information, please contact [email protected]. THE DESIGN &IMPLEMENTATION OF AN ABSTRACT SEMANTIC GRAPH FOR STATEMENT-LEVEL DYNAMIC ANALYSIS OF C++ APPLICATIONS A Dissertation Presented to the Graduate School of Clemson University In Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy Computer Science by Edward B. Duffy December 2011 Accepted by: Dr. Brian A. Malloy, Committee Chair Dr. James B. von Oehsen Dr. Jason P. Hallstrom Dr. Pradip K. Srimani In this thesis, we describe our system, Hylian, for statement-level analysis, both static and dynamic, of a C++ application. We begin by extending the GNU gcc parser to generate parse trees in XML format for each of the compilation units in a C++ application. We then provide verification that the generated parse trees are structurally equivalent to the code in the original C++ application. We use the generated parse trees, together with an augmented version of the gcc test suite, to recover a grammar for the C++ dialect that we parse. -

Ordered Sets in the Calculus of Data Structures

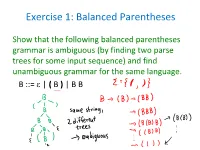

Exercise 1: Balanced Parentheses Show that the following balanced parentheses grammar is ambiguous (by finding two parse trees for some input sequence) and find unambiguous grammar for the same language. B ::= | ( B ) | B B Remark • The same parse tree can be derived using two different derivations, e.g. B -> (B) -> (BB) -> ((B)B) -> ((B)) -> (()) B -> (B) -> (BB) -> ((B)B) -> (()B) -> (()) this correspond to different orders in which nodes in the tree are expanded • Ambiguity refers to the fact that there are actually multiple parse trees, not just multiple derivations. Towards Solution • (Note that we must preserve precisely the set of strings that can be derived) • This grammar: B ::= | A A ::= ( ) | A A | (A) solves the problem with multiple symbols generating different trees, but it is still ambiguous: string ( ) ( ) ( ) has two different parse trees Solution • Proposed solution: B ::= | B (B) • this is very smart! How to come up with it? • Clearly, rule B::= B B generates any sequence of B's. We can also encode it like this: B ::= C* C ::= (B) • Now we express sequence using recursive rule that does not create ambiguity: B ::= | C B C ::= (B) • but now, look, we "inline" C back into the rules for so we get exactly the rule B ::= | B (B) This grammar is not ambiguous and is the solution. We did not prove this fact (we only tried to find ambiguous trees but did not find any). Exercise 2: Dangling Else The dangling-else problem happens when the conditional statements are parsed using the following grammar. S ::= S ; S S ::= id := E S ::= if E then S S ::= if E then S else S Find an unambiguous grammar that accepts the same conditional statements and matches the else statement with the nearest unmatched if. -

Disambiguation Filters for Scannerless Generalized LR Parsers

Disambiguation Filters for Scannerless Generalized LR Parsers Mark G. J. van den Brand1,4, Jeroen Scheerder2, Jurgen J. Vinju1, and Eelco Visser3 1 Centrum voor Wiskunde en Informatica (CWI) Kruislaan 413, 1098 SJ Amsterdam, The Netherlands {Mark.van.den.Brand,Jurgen.Vinju}@cwi.nl 2 Department of Philosophy, Utrecht University Heidelberglaan 8, 3584 CS Utrecht, The Netherlands [email protected] 3 Institute of Information and Computing Sciences, Utrecht University P.O. Box 80089, 3508TB Utrecht, The Netherlands [email protected] 4 LORIA-INRIA 615 rue du Jardin Botanique, BP 101, F-54602 Villers-l`es-Nancy Cedex, France Abstract. In this paper we present the fusion of generalized LR pars- ing and scannerless parsing. This combination supports syntax defini- tions in which all aspects (lexical and context-free) of the syntax of a language are defined explicitly in one formalism. Furthermore, there are no restrictions on the class of grammars, thus allowing a natural syntax tree structure. Ambiguities that arise through the use of unrestricted grammars are handled by explicit disambiguation constructs, instead of implicit defaults that are taken by traditional scanner and parser gener- ators. Hence, a syntax definition becomes a full declarative description of a language. Scannerless generalized LR parsing is a viable technique that has been applied in various industrial and academic projects. 1 Introduction Since the introduction of efficient deterministic parsing techniques, parsing is considered a closed topic for research, both by computer scientists and by practi- cioners in compiler construction. Tools based on deterministic parsing algorithms such as LEX & YACC [15,11] (LALR) and JavaCC (recursive descent), are con- sidered adequate for dealing with almost all modern (programming) languages.