Abowd College of Computing and GVU Center Georgia Institute of Technology

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

Ubiquitous Computing

Lecture Notes in Computer Science 2201 Ubicomp 2001: Ubiquitous Computing International Conference Atlanta, Georgia, USA, September 30 - October 2, 2001 Proceedings Edited by Gregory D Abowd, Barry Brumitt, Steven Shafer 1st edition 2001. Paperback. XIII, 375 pp. ISBN 978 3 540 42614 1 Format (W x L): 15.5 x 23.5 cm Weight: 1210 g Other subject areas > Computing, Informatics > Information Processing > Ambient Intelligence, RFID Table of contents Available quickly and with free shipping from beck-shop.de. The online specialist bookshop beck-shop.de specializes in professional books, particularly law, tax, and business. Its range includes all media (books, journals, CDs, eBooks, etc.) from all publishers. The range is complemented by services such as a new-release notification service and compilations of books at special prices. The shop carries more than 8 million products. Preface Ten years ago, Mark Weiser’s seminal article, “The Computer of the 21st Century,” was published by Scientific American. In that widely cited article, Mark described some of the early results of the Ubiquitous Computing Project that he led at Xerox PARC. This article and the initial work at PARC have inspired a large community of researchers to explore the vision of “ubicomp”. The variety of research backgrounds represented by researchers in ubicomp is both a blessing and a curse. It is a blessing because good solutions to any of the significant problems in our world require a multitude of perspectives. That Mark’s initial vision has inspired scientists with technical, design, and social expertise increases the likelihood that as a community we will be able to build a new future of interaction that goes beyond the desktop and positively impacts our everyday lives. -

Anhong Guo's Curriculum Vitae

Anhong Guo Curriculum Vitæ Bob and Betty Beyster Building 3741, 2260 Hayward Street, Ann Arbor, MI 48109 USA https://guoanhong.com +1 (678) 899-3981 [email protected] Academic Positions 01/2021 – University of Michigan, Ann Arbor Assistant Professor, Computer Science and Engineering (EECS); School of Information (by courtesy) 09/2020 – 12/2020 Carnegie Mellon University Postdoctoral Fellow, Human-Computer Interaction Institute, School of Computer Science Education 08/2014 – 08/2020 Carnegie Mellon University Ph.D. in Human-Computer Interaction M.S. in Human-Computer Interaction Human-Computer Interaction Institute, School of Computer Science Thesis: Human-AI Systems for Visual Information Access Advisor: Jeffrey P. Bigham; Committee: Chris Harrison, Jodi Forlizzi, and Meredith Ringel Morris 08/2012 – 05/2014 Georgia Institute of Technology M.S. in Human-Computer Interaction School of Interactive Computing Thesis: BeyondTouch: Extending the Input Language with Built-in Sensors on Commodity Smartphones Advisor: Gregory Abowd 09/2008 – 06/2012 Beijing University of Posts and Telecommunications (BUPT) B.Eng. in Electronic Information Engineering School of Information and Communication Engineering Awards and Honors 2021 CHI 2021 Best Paper Honorable Mention [C.23] 2021 Forbes’ Top 30 Scientists Under 30 (‘30 Under 30’) 2020 ASSETS 2020 Best Paper Nominee [C.21] 2019 ASSETS 2019 Best Artifact Award [C.17] 2018 CMU Swartz Innovation Fellowship 2018 McGinnis Venture Capital Award 2017 Snap Inc. Research Fellowship 2017 W4A 2017 Paciello Group Accessibility Challenge Delegates Award [A.5] 2016 Qualcomm Innovation Fellowship Finalist 2016 MobileHCI 2016 Best Paper Honorable Mention [C.8] 2014 ISWC 2014 Best Paper Honorable Mention [C.1] Peer-Reviewed Conference and Journal Papers [C.24] Solon Barocas, Anhong Guo, Ece Kamar, Jacquelyn Krones, Meredith Ringel Morris, Jennifer Wortman Vaughan, Duncan Wadsworth, Hanna Wallach. -

Curriculum Vitae

Sunyoung Kim | Curriculum Vitae Assistant Professor Department of Library & Information Science School of Communication & Information Rutgers University, the State University of New Jersey [email protected] http://comminfo.rutgers.edu/~sk1897 RESEARCH INTERESTS Human-Computer Interaction, Ubiquitous computing, Technology for Health care, Everyday wellbeing & Environmental sustainability Application areas: Chronic healthcare, Everyday health and wellbeing, Healthy aging, Citizen science, Technology empowerment, Environmental sustainability, Environmental protection, Community wellbeing EDUCATION AND PROFESSIONAL PREPARATION 2014-2016 Postdoctoral fellow, Center for Research on Computation and Society John A. Paulson School of Engineering and Applied Sciences Harvard University, Cambridge, MA, USA Advisors: Krzysztof Z. Gajos & Barbara J. Grosz 2014 Ph.D. in Human-Computer Interaction Human-Computer Interaction Institute, School of Computing Carnegie Mellon University, Pittsburgh, PA, USA Committee: Eric Paulos (co-chair), Jennifer Mankoff (co-chair), Niki Kittur, Jason Ellis Thesis: “SENSR: a flexible framework for authoring mobile data-collection tools for citizen science” (C.8, C.10) 2008 M.S. in Human-Computer Interaction School of Computing Georgia Institute of Technology, Atlanta, GA, USA Advisor: Gregory Abowd Thesis: “Are you sleeping?: sharing portrayed sleeping status within a social network” (C.1) 2000 B.S. in Architecture & Housing and Interior Design Yonsei University, Seoul, Korea PROFESSIONAL EXPERIENCES 2016 - present -

Sauvik Das, Ph.D. — Curriculum Vitae | @Scyrusk on Twitter | [email protected]

Updated: 7/28/21 Sauvik Das, Ph.D. — Curriculum Vitae https://sauvik.me | @scyrusk on Twitter | [email protected] Professional appointment Georgia Institute of Technology Assistant Professor January 2018—Present School of Interactive Computing On partial leave for 2021 Selected Honors and Awards NSA Best Scientific Cybersecurity Paper Award – Honorable Mention [P8] UbiComp Best Paper [P5] SOUPS Distinguished Paper Award [P21] CHI Best Paper Honorable Mention x3 [P21, P15, P12] Most Innovative Video Nomination, AAAI Video Competition [DV1] NSF EAPSI Fellowship (2016) Qualcomm Innovation Fellowship (2014) National Defense Science and Engineering Graduate Fellowship (2012-15) Stu Card Graduate Fellowship (2011-12) CMU CyLab CUPS Doctoral Training Program Fellowship (2011-13) Grants & Competitive Gifts 2021 NSF PI Collaborative Research: SaTC: CORE: Medium: Privacy Through Design: A Design Methodology to Promote the Creation of Privacy-Conscious Consumer AI (w/ Jodi Forlizzi, CMU) $1,199,651 *($669,163) 2020 NSF PI SaTC: CORE: Small: Corporeal Cybersecurity: Improving End-User Security and Privacy with Physicalized Computing Interfaces (w/ Gregory Abowd, Georgia Tech & Northeastern University) $499,892 2019 Facebook PI Explainable Ads: Improving Ad Targeting Transparency with Explainable AI (sole PI) $50,000 2018 NSF PI CRII: SaTC: Systems That Facilitate Cooperation and Stewardship to Improve End-User Security Behaviors (sole PI) $175,000 * indicates portion specifically allocated to Das where applicable Academic Training & Education Carnegie Mellon University, 2011-2017 M.S. / Ph.D. in Human-Computer Interaction Advisers: Dr. Jason I. Hong and Dr. Laura A. Dabbish Committee: Dr. Jeffrey P. Bigham (CMU) and Dr. J.D. Tygar (UC Berkeley) University of Tokyo, 2016 Visiting Student Researcher (as part of NSF EAPSI Grant) Adviser: Dr. -

Research Statement Gregory D. Abowd

Research Statement Gregory D. Abowd My entire research career has targeted the intersection between Software Engineering and Human-Computer Interaction (HCI). I am interested in pursuing challenging problems that involve the construction and human impact of computing technology. The vast majority of computing technologies are used by humans, so techniques to facilitate the design, implementation and evaluation of these systems are of obvious importance. Before arriving at Georgia Tech, my research style was classical with respect to both Software Engineering and HCI. There was no inherent problem with the method of my research. The problem I perceived was that I was inclined to apply classical techniques to classical problems. I was developing techniques to help people build the kinds of systems that they had been building for 10-15 years already. To heighten the chance that my research would have impact in either the HCI or Software Engineering research or practicing communities, I decided to shift my focus toward applications and computing systems that were not currently commonplace. My research would then serve the role of a leading indicator on computing practice and not a lagging indicator. My research direction was influenced by the writings and work of the late Mark Weiser and his Ubiquitous Computing research project at Xerox PARC. Mark and his colleagues at PARC demonstrated Alan Kay’s spirit of prediction by creating and using an environment enriched with new kinds of interaction devices, from handheld to wall-sized. They showed how a number of significant Computer Science research problems are unveiled when computation is spread throughout an environment and the use of the computation goes beyond traditional desktop computing tasks. -

Conference Program 2007 Conference at a Glance

conference on human factors in computing systems San Jose, California, USA April 28–May 3, 2007 Conference Program 2007 Conference at a Glance 2007 Conference at a Glance Course 1 Course 2 Course 3 Course 4 Intro to HCI — 18:00—21:30 Intro to CSCW — 18:00—21:30 HCI History — 18:00—19:30 Drawing Ideas — 18:00—21:30 San Jose Ballroom IV San Jose Ballroom III Room A3 Room A4 & A5 SUN CIVIC A1 A2 A3 A4 & A5 A8 B1—B4 C2 AUDITORIUM 8:30– Opening Plenary: Bill Moggridge — Reaching for the Intuitive 10:30 CHI MADNESS Interactive SIG Papers Papers Papers Experience Papers: Interactivity Session Beyond Usability: Faces & Bodies in Attention & Capturing Life Reports Large Displays Shake, Rattle, and 11:30– Usability from the Social, Situational, Interaction Interruption Experiences On the Move Roll: New Forms of 13:00 CIO’s Perspective Cultural, & Input and Output Contextual Factors Interactive Papers Papers Papers Papers Papers SIG Papers Session Ubicomp Tools Mobile Interaction Politics & Activism Navigation & Medical Challenges in Task & Attention 14:30– Who Killed Design? Interaction International Usability 16:00 MONDAY Interactive Papers Papers Papers Papers Experience Papers ALT.CHI Session Expert/Novice Mobile Navigation Photo Sharing Reports Empirical Studies Evaluating 16:30– Taking CHI for a Applications Qualitative of Web Interaction Evaluation 18:00 Drive Research Methods 9:00– Social Impact Award: Gary Marsden — Doing HCI Differently — Stories from the Developing World 10:30 CHI Madness Interactive SIG Papers Papers Papers Experience -

Ubiquitous Computing for Capture and Access

Full text available at: http://dx.doi.org/10.1561/1100000014 Ubiquitous Computing for Capture and Access Khai N. Truong University of Toronto Canada [email protected] Gillian R. Hayes University of California, Irvine USA [email protected] Boston – Delft Foundations and Trends® in Human–Computer Interaction Published, sold and distributed by: now Publishers Inc. PO Box 1024 Hanover, MA 02339 USA Tel. +1-781-985-4510 www.nowpublishers.com [email protected] Outside North America: now Publishers Inc. PO Box 179 2600 AD Delft The Netherlands Tel. +31-6-51115274 The preferred citation for this publication is K. N. Truong and G. R. Hayes, Ubiquitous Computing for Capture and Access, Foundations and Trends® in Human–Computer Interaction, vol 2, no 2, pp 95–173, 2008 ISBN: 978-1-60198-208-7 © 2009 K. N. Truong and G. R. Hayes All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, mechanical, photocopying, recording or otherwise, without prior written permission of the publishers. Photocopying. In the USA: This journal is registered at the Copyright Clearance Center, Inc., 222 Rosewood Drive, Danvers, MA 01923. Authorization to photocopy items for internal or personal use, or the internal or personal use of specific clients, is granted by now Publishers Inc for users registered with the Copyright Clearance Center (CCC). -

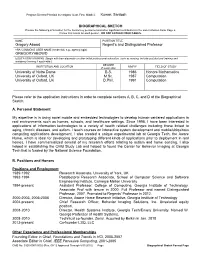

(Rev. 08/12), Biographical Sketch Format Page

Program Director/Principal Investigator (Last, First, Middle): Kumar, Santosh BIOGRAPHICAL SKETCH Provide the following information for the Senior/key personnel and other significant contributors in the order listed on Form Page 2. Follow this format for each person. DO NOT EXCEED FOUR PAGES. NAME POSITION TITLE Gregory Abowd Regent’s and Distinguished Professor eRA COMMONS USER NAME (credential, e.g., agency login) GREGORYABOWD EDUCATION/TRAINING (Begin with baccalaureate or other initial professional education, such as nursing, include postdoctoral training and residency training if applicable.) DEGREE INSTITUTION AND LOCATION MM/YY FIELD OF STUDY (if applicable) University of Notre Dame B.S. 1986 Honors Mathematics University of Oxford, UK M.Sc. 1987 Computation University of Oxford, UK D.Phil. 1991 Computation Please refer to the application instructions in order to complete sections A, B, C, and D of the Biographical Sketch. A. Personal Statement My expertise is in using novel mobile and embedded technologies to develop human-centered applications in real environments such as homes, schools, and healthcare settings. Since 1998, I have been interested in applications of information technologies to a variety of health related challenges including those linked to aging, chronic diseases, and autism. I teach courses on interactive system development and mobile/ubiquitous computing applications development. I also created a unique experimental lab at Georgia Tech, the Aware Home, which is ideal for developing and prototyping different kinds of applications prior to deployment in real homes. I have commercialized several of my research efforts relating to autism and home sensing. I also helped in establishing the Child Study Lab and helped to found the Center for Behavior Imaging at Georgia Tech that is funded by the National Science Foundation. -

Curriculum Vitae for David Garlan May 2021

Curriculum Vitae for David Garlan May 2021 Address School of Computer Science Carnegie Mellon University Pittsburgh, PA 15213-2890 412-268-5056 [email protected] www.cs.cmu.edu/~garlan Education Ph.D., Computer Science 1987 Carnegie Mellon University, Pittsburgh PA. Dissertation: Views for Tools in Integrated Environments Advisor, A. N. Habermann B.A., M.A. (Oxon) Honours in Mathematics 1973 University of Oxford, Oxford, England. B.A. Mathematics magna cum laude, phi beta kappa 1971 Amherst College, Amherst MA. Employment Associate Dean for Master’s Programs in the School of Computer Science 2018-present Carnegie Mellon University, School of Computer Science 2004-present Professor Director of Software Engineering Professional Programs 2002-2016 Carnegie Mellon University, School of Computer Science 1996-2004 Associate Professor Carnegie Mellon University, School of Computer Science 1990-96 Assistant Professor Tektronix, Inc., Computer Research Labs Senior Computer Scientist 1987-90 Carnegie Mellon University, Department of Computer Science 1987 Post-Doctoral Research Fellow Carnegie Mellon University, Department of Computer Science 1980-86 Research Assistant Awards and Honors Nancy Mead Award for Excellence in Software Engineering Education 2017 IEEE TCSE Distinguished Software Education Award 2017 Allen Newell Award for Research Excellence 2016 Master of Software Engineering “Coach” Award 2016 Fellow of the ACM 2013 Fellow of the IEEE 2012 ACM SIGSOFT Outstanding Research Award (joint with Mary Shaw) 2011 IBM Faculty Research Award 2011 -

Context-Aware Computing

Introduction to This Special Issue on Context-Aware Computing Thomas P. Moran IBM Almaden Research Center Paul Dourish University of California, Irvine To be published in Human-Computer Interaction, Volume 16, Numbers 2-3, 2001 Pervasive Computing is a term for the strongly emerging trend toward: • Numerous, casually accessible, often invisible computing devices • Frequently mobile or embedded in the environment • Connected to an increasingly ubiquitous network infrastructure composed of a wired core and wireless edges [from the call for a conference on Pervasive Computing, http://www.nist.gov/pc2001/] For most of us, computing has been done during the last two decades on personal computer workstations and laptops. Interacting with computational artifacts and networked information has been largely a “desk experience.” This is now changing in a big way … Ubiquitous/Pervasive Computing Computation is now packaged in a variety of devices. Smaller and lighter laptop/notebooks, as powerful as conventional personal computers, free us from the confines of the single desk. Specialized devices such as handheld personal organizers are portable enough to be with us all the time. Wireless technology allows devices to be fully interconnected with the electronic world. Cameras and VCRs are being supplanted by digital equivalents, while we increasingly listen to music on devices that are digital and solid-state. Cell phones are really networked computers. The distinction between communication and computation is blurring, not only in the devices, but also in the variety of ways computation allows us to communicate, from email to chat to voice to video. On a different scale, computation is also moving beyond personal devices. -

Curriculum Vitae

Heather Richter Lipford CURRICULUM VITAE (July 2011) Department of Software and Information Systems University of North Carolina at Charlotte 9201 University City Boulevard Charlotte, NC 28223 Phone: +1 (704) 687-8376; Fax: +1 (704) 687-4893 [email protected] http://www.sis.uncc.edu/~richter 1 Education 1997 - 2005 Ph.D. College of Computing, Georgia Institute of Technology “Designing and Evaluating Meeting Capture and Access Services” Advisor: Dr. Gregory Abowd 1992 - 1995 B.S. Computer Science, Michigan State University Advisor: Dr. Betty H.C. Cheng 2 Professional Experience 2011 - present Associate Professor, Department of Software and Information Systems. University of North Carolina at Charlotte, Charlotte, NC. 2005 – 2011 Assistant Professor, Department of Software and Information Systems. University of North Carolina at Charlotte, Charlotte, NC. 1997 – 2005 Research and Teaching Assistant. Georgia Institute of Technology, Atlanta, GA. 2000 - 2001 Technical Intern. IBM T.J. Watson Research Laboratories. Hawthorne, NY. 1997, 1998 Technical Intern. Hewlett-Packard Laboratories, Palo Alto, CA. 1995 Undergraduate research assistant. Michigan State University, East Lansing, MI. 3 Licenses and Certifications 4 Career Highlights • Established HCI as a core area in the department by founding the HCI Lab and developing 2 new HCI courses at UNC Charlotte. • Core contributor to the usable security and privacy community with my work on privacy and social network sites. • Honorable mention award at CHI 2010. • Active leadership roles in usable security and privacy by serving in conference and workshop organizing roles, such as the CHI and CSCW program committees and co-chair of the SOUPS 2011 and 2012 technical program. -

Technology Enables Quick Review of Continuous Recorded Data

Press Release Press Contact: Rosemary W. Stevens, (650) 494-2800 Ace Public Relations, Palo Alto, CA [email protected] Taking a Second Look: Technology Enables Quick Review of Continuous Recorded Data San Jose, CA (24 April, 2007) -- Surveillance camera outputs and other continuous recordings are almost impossible to manage because of the time required to review the footage for key events or interesting snippets. However, Dr. Gregory Abowd of Georgia Institute of Technology has developed some techniques for selecting important portions of continuous recorded data in real-time. Dr. Abowd's motivation for this work was and is improving treatment for autism. However, his work has broad application that reaches beyond the initial impetus. The ability to find and extract key events from continuous recorded data could potentially impact many domains. On May 3rd, Dr. Abowd will present his work at CHI 2007 in San Jose, CA. "In 1999, we learned that our son, Aidan, was diagnosed with autism; a few years later our second son, Blaise, was also diagnosed with autism. The CDC reports incidence of autism in the U.S. at 1 in 166, so many families are coming to grips with the everyday struggles of this perplexing neurological developmental disability. Since Aidan's diagnosis, I have looked for ways to have my research in ubiquitous computing address the challenges of those impacted by autism. My goal is to have technology play a vital role in increasing our understanding of this unique human condition and to have it ease the everyday struggles for those who deal with autism," notes Dr.