UC Davis Books

Recommended publications

Annual Report 2013-14

ANNUAL REPORT 2013-14 Dr. Y.S.R. Horticultural University Venkataramannagudem, West Godavari District – 534 101 www.drysrhu.edu.in Published by : Dr.Y.S.R. Horticultural University Administrative Office, P.O. Box No. 7, Venkataramannagudem-534 101, W.G. Dist., A.P. Phones : 08818-284312, Fax : 08818-284223, e-mail : [email protected] URL: www.drysrhu.edu.in Compiled by : Dr.B.Srinivasulu, Registrar Dr.M.B.Nageswararao, Director of Industrial & International Programmes, Dr.M.Lakshminarayana Reddy, Dean PG Studies Dr.D.Srihari, Controller of Examinations Dr.J.Dilip Babu, Director of Research Dr.M.Pratap, Dean of Horticulture Dr.K.Vanajalatha, Dean of Student Affairs Dr.G.Srihari, Director of Extension Edited by : Dr.R.V.S.K.Reddy, Director of Extension All rights are reserved. No part of this book shall be reproduced or transmitted in any form by print, microfilm or any other means without written permission of the Vice-Chancellor, Dr.Y.S.R. Horticultural University, Venkataramannagudem. Dr. B.M.C. REDDY Vice-Chancellor Dr. Y.S.R. Horticultural University Foreword I am happy to present the Sixth Annual Report of Dr.Y.S.R. Horticultural University. It is a compiled document of the University activities during the year 2013-14. Dr.YSR Horticultural University was established at Venkataramannagudem, West Godavari District, Andhra Pradesh on 26th June, 2007. Dr.YSR Horticultural University is second of its kind in the country, with the mandate for Education, Research and Extension related to horticulture and allied subjects. The university at present has 4 Horticultural Colleges, 6 Horticulture Polytechnics, 27 Research Stations and 3 KVKs located in 9 agro-climatic zones of the state. -

OMICS Group Membership

International Publisher of Science, Technology and Medicine e-Books Clinical & Experts Online Biosafety An Open Access Publisher Protocols Database OMICS Group Membership Your Research- Your Rights Open Access (OA) is the practice of providing unrestricted access via the Internet to peer-reviewed scholarly journal articles. OA is also increasingly being provided to theses, scholarly monographs and book chapters. Society as a whole benefits from an augmented and expedites research cycle in which research can advance more effectively because researchers have immediate access to all the findings they need. Increasingly, university and research libraries are being overwhelmed with information that has been created in digital format and transmitted, accessed via computers. As the number of collections in digital formats exaggerates exponentially, more libraries and information providers are facing a number of unique challenges presented by this relatively new medium. All works published by OMICS Publishing Group are under the terms of the Creative Commons Attribution License. This permits anyone to copy, allocate, transmit and adapt the work, provided the original work and source is appropriately cited. What are the benefits of Membership? Free Membership: OMICS Group offers free membership to the Scientific Society/Corporate/University/Institute with a notion to serve the Scientific Community. Cost-effective: Membership offers a cost-effective solution for organizations and their researchers when publishing in our range of open access journals. The different types of Membership offer various solutions to ensure organizations benefit from the most suitable and cost-effective pricing plan to fit with their requirements and budgets. Increased exposure for your organization: Members receive their own customized webpage with the following features: -

Occupational Medicine & Health Affairs

www.omicsonline.org Occupational Medicine & Health Affairs Open Access ISSN: 2329-6879 Here you can find about Occupational Medicine & Health Affairs, their increasing role in the diagnosis, characterization, therapy of various marked diseases and in other crucial fields of Medical Science. To promote international dialogue and collaboration on health issues; to improve clinical practice; and to expand and deepen the understanding of health and health care. Occupational Medicine & Health Affairs is an Open Access scientific journal which is peer-reviewed. It publishes the most exciting researches with respect to the subjects of Medical Science development and their diagnostic applications. This is freely available online journal which will be soon available as a print. Occupational Medicine & Health Affairs not only helps researchers, clinicians and scientists, but also renders a link between Doctors, clinicians, pharmacologists and also the Medicine-business people who study health effects in populations. OMHA has a wide aspect in the field of health care & medical science education. Occupational Medicine & Health Affairs - Open Access uses online manuscript submission, review and tracking systems for quality and quick review processing. Submit your manuscript at http://www.omicsonline.org/submission/ R Ben-Abraham K Stephen C Bondy Susan R McGurk Karin Provost Nancy L Rothman Surya Kumar Shah Eva L Rodriguez Carolyn L Lindgren Kenji Suzuki Stanford University University of Boston University State University of Temple University New Jersey -

Enzymes and Soil Fertility

OMICS Group : eBooks :: Enzymes and Soil Fertility 12/13/14, 6:58 AM Enzymes and Soil Fertility Anna Piotrowska-Długosz* Department of Soil Science and Soil Protection, Division of Biochemistry, Faculty of Agriculture and Biotechnology, University of Technology and Life Sciences, Bernardyńska, Bydgoszcz, Poland *Corresponding author: Anna Piotrowska-Długosz, Department of Soil Science and Soil Protection, Division of Biochemistry, Faculty of Agriculture and Biotechnology, University of Technology and Life Sciences, Bernardyńska St., 85-089 Bydgoszcz, Poland, Tel: +48 52 374 9555; E-mail: [email protected] 1. Abstract Soil is a fundamental resource in the agricultural production system and monitoring its fertility is an important objective in the sustainable development of agro-ecosystems. In order to evaluate soil fertility, changes in its physical, chemical and biological properties must be taken into account. Among the biological features, soil enzymes are often used as index of soil fertility since they are very sensitive and respond to changes in soil management more quickly than other soil variables. Thus, the objective of this work was to review some of the aspects that are connected with using soil enzymes as indicators of agricultural practices impact (e.g., soil fertilization, crop rotation, tillage) and soil fertility. The results that are discussed in the works listed in the bibliography showed no consistent trends in enzymatic activity as being dependent on farming management practices that have stimulated, decreased or not affected this activity. The influence of inorganic fertilization and organic amendments on the soil enzyme activities depended on the dose of this amendment, the time of its application, the content of harmful substances (e.g., heavy metals), the soil type and climatic conditions. -

Federal Trade Commission v. OMICS Group Inc. (9th Cir.), FTC Brief, 19

Case: 19-15738, 10/11/2019, ID: 11462873, DktEntry: 25, Page 1 of 80 No. 19-15738 IN THE UNITED STATES COURT OF APPEALS FOR THE NINTH CIRCUIT FEDERAL TRADE COMMISSION, Plaintiff-Appellee, v. OMICS GROUP INC., DBA OMICS PUBLISHING GROUP; ET AL., Defendants-Appellants. On Appeal from the United States District Court for the District of Nevada, Las Vegas No. 2:16-cv-02022-GMN-VCF Hon. Gloria M. Navarro BRIEF OF THE FEDERAL TRADE COMMISSION ALDEN F. ABBOTT General Counsel JOEL MARCUS Deputy General Counsel Of Counsel: MARIEL GOETZ GREGORY ASHE Attorney MICHAEL TANKERSLY FEDERAL TRADE COMMISSION FEDERAL TRADE COMMISSION 600 Pennsylvania Avenue, N.W. Washington, D.C. 20580 Washington, D.C. 20580 (202) 326-2763 TABLE OF CONTENTS Table of Authorities; Jurisdiction; Introduction; Questions Presented; Statement of the Case; A. Liability Under The FTC Act -

Download Preprint

Recommendations from the Reducing the Inadvertent Spread of Retracted Science: Shaping a Research and Implementation Agenda Project Jodi Schneider*, Nathan D. Woods, Randi Proescholdt, Yuanxi Fu, and the RISRS Team July 2021 *Corresponding author: [email protected] and [email protected] Table of Contents EXECUTIVE SUMMARY 3 Recommendations 4 INTRODUCTION 5 RISRS Project Goals and Assumptions 6 Framing the Problem 8 Scope of this Document 9 THE RISRS PROCESS 10 Scoping Literature Review 10 Interviews 11 Workshop, Dissemination, and Implementation 11 LITERATURE REVIEW AND CURRENT AWARENESS 11 Reasons for Retraction 11 Formats and Types of Retraction 13 Field Variation 15 Continued Citation of Retracted Papers: What Went Wrong? 20 Visibility of Retraction Status 24 Inconsistent Retraction Metadata 25 Quality of Retraction Notices 26 Literature Review Conclusions 28 DEFINING PROBLEMS AND OPPORTUNITIES 29 PROBLEMS AND OPPORTUNITIES DESCRIBED BY THE EMPIRICAL LITERATURE ON RETRACTION 29 Problem Themes Described by the Empirical Literature on Retraction 30 Opportunity Themes Described by the Empirical Literature on Retraction 32 Problem Definition Described by the Empirical Literature on Retraction 35 THEMES FROM STAKEHOLDER INTERVIEWS 36 Problem Frameworks based on Stakeholder Interviews 36 Contentious Themes based on Stakeholder Interviews 38 The Purpose of Retraction According to Stakeholder Interviews 38 Changing the Scholarly Record 39 The Harms of Retraction According to Stakeholder Interviews 39 The Character of Reform According to Stakeholder Interviews 40 SYNTHESIZING THE PROBLEMS AND OPPORTUNITIES 41 Aligning Opportunity Pathways 41 Defining the Scale and Scope of the Problem 42 RECOMMENDATIONS 43 1. Develop a Systematic Cross-industry Approach to Ensure the Public Availability of Consistent, Standardized, Interoperable, and Timely Information about Retractions. -

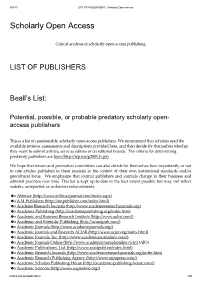

Scholarly Open Access

10/4/13 LIST OF PUBLISHERS | Scholarly Open Access Scholarly Open Access Critical analysis of scholarly open-access publishing LIST OF PUBLISHERS Beall’s List: Potential, possible, or probable predatory scholarly open-access publishers This is a list of questionable, scholarly open-access publishers. We recommend that scholars read the available reviews, assessments and descriptions provided here, and then decide for themselves whether they want to submit articles, serve as editors or on editorial boards. The criteria for determining predatory publishers are here (http://wp.me/p280Ch-g5). We hope that tenure and promotion committees can also decide for themselves how importantly or not to rate articles published in these journals in the context of their own institutional standards and/or geocultural locus. We emphasize that journal publishers and journals change in their business and editorial practices over time. This list is kept up-to-date to the best extent possible but may not reflect sudden, unreported, or unknown enhancements. o Abhinav (http://www.abhinavjournal.com/index.aspx) o A M Publishers (http://ampublisher.com/index.html) o Academe Research Journals (http://www.academeresearchjournals.org) o Academia Publishing (http://academiapublishing.org/index.htm) o Academic and Business Research Institute (http://www.aabri.com/) o Academic and Scientific Publishing (http://acascipub.com/) o Academic Journals (http://www.academicjournals.org/) o Academic Journals and Research ACJAR (http://www.acjar.org/index.html) o Academic Journals, -

OMICS Publishing Group Strongly Holds, Thereby Forming the Proposition for Its Journals and Publications

OMICS Journals In today’s world which signifies a global village, scientific researchers and practitioners need to share and disseminate information regularly, a belief that OMICS Publishing Group strongly holds, thereby forming the proposition for its journals and publications. OMICS Publishing Group operates in tune with the need for open access that is being constantly felt with passage of time by funding bodies and the associated research institutions alike. The Finch Report, developed by a committee chaired by Dame Janet Finch in Great Britain was accepted by the government in mid of 2012. The findings suggest strong need to enable more people to read and use the publications, particularly those featuring publicly funded research. OMICS Publishing Group provides for easy sharing of knowledge and seeks to provide Open Access to scientific research papers and articles. OMICS Publishing Group publishes several scientific journals featuring knowledge-sharing articles that discuss latest advancements in the field of scientific research. OMICS Publishing Group abides by the Bethesda statement on Open Access Publishing and regulated by the Creative Commons Attribution License. The content can be copied and distributed without changes, along with providing right citation. An Editorial Management system is used to track the articles on way to acceptance and publication that also checks for meeting quality standards in terms of presentation and originality of the manuscripts. Publications are edited by authors & reviewers, and the articles are classified for genuine presentation before a vast audience of readers including academicians, tertiary-level researchers and industry leaders. OMICS Publishing Group has a team of 20,000 editorial board members and about 25,000 reviewers across various specializations. -

Guide to Predatory Publishing

Guide to Predatory Publishing Produced by the Open Access Working Group of the Leibniz Association, October 2018. As the publication market has changed, the number of journals has increased significantly. In some fields, there are so many publications that it is difficult to keep track of them all. They include some journals that do not feel obliged to comply with the rules of good scientific practice,1 and instead use the academic publishing market purely as a business model for the publishers. These publications, which are usually referred to as “predatory journals”, charge authors publication fees or article processing charges (APCs), but do not organise peer reviews or other appropriate forms of quality control. The publication of research findings in such journals primarily harms the authors involved, but also weakens public confidence in scientific research.2 In the following, we describe the phenomenon of predatory publishing in greater detail and present the necessary safeguards. What is the difference between predatory journals and serious journals? One of the main differences between predatory journals and serious scientific journals is that predatory journals largely do without editorial or quality control measures. In addition, one or more of the following will typically apply: • Predatory journals do not provide transparent costing – it is often unclear from the journal’s online presence or website what costs will be incurred and what they relate to. • Predatory journals list misleading or false information about indicators, especially impact factors. • Predatory journals offer very fast publication of manuscripts they receive, which is incompatible with the length of time usually required to carry out a serious review process. -

Free Download

HOW TRUSTWORTHY? AN EXHIBITION ON NEGLIGENCE, FRAUD, AND MEASURING INTEGRITY ISBN 978-3-00-061938-0 © 2019 HEADT Centre Publications, Berlin Cover Image Eagle Nebula, M 16, Messier 16 NASA, ESA / Hubble and the Hubble Heritage Team (2015) Original picture in color, greyscale edited © HEADT Centre (2018) Typesetting and Design Kerstin Kühl Print and Binding 15 Grad Printed in Germany www.headt.eu 1 EXHIBITION CATALOGUE HOW TRUSTWORTHY? AN EXHIBITION ON NEGLIGENCE, FRAUD, AND MEASURING INTEGRITY Edited by Dr. Thorsten Stephan Beck, Melanie Rügenhagen and Prof. Dr. Michael Seadle HEADT Centre Publications goal of the exhibition The goal of this exhibition is to increase awareness about research integrity. The exhibition highlights areas where both human errors and intentional manipulation have resulted in the loss of positions and damage to careers. Students, doctoral students, and early career scholars especially need to recognize the risks, but senior scholars can also be caught and sometimes are caught for actions decades earlier. There is no statute of limitations for breaches of good scholarly practice. This exhibition serves as a learning tool. It was designed in part by students in a project seminar offered in the joint master’s programme on Digital Curation between Humboldt-Universität zu Berlin and King’s College London. The exhibition has four parts. One has to do with image manipulation and falsification, ranging from art works to tests used in medical studies. Another focuses on research data, including human errors, bad choices, and complete fabrication. A third is concerned with text-based information, and discusses plagiarism as well as fake journals and censorship. -

Integrating Research Integrity Into the History of Science

History of Science 2020, Vol. 58(4) 369–385 © The Author(s) 2020 Article reuse guidelines: sagepub.com/journals-permissions DOI: 10.1177/0073275320952257 journals.sagepub.com/home/hos Introduction: Integrating research integrity into the history of science Cyrus C. M. Mody, Maastricht University, Netherlands; H. Otto Sibum, Uppsala University, Sweden; Lissa L. Roberts, University of Twente, Netherlands Abstract This introductory essay frames our special issue by discussing how attention to the history of research integrity and fraud can stimulate new historical and methodological insights of broader import to historians of science. Keywords Scientific integrity, fraud, scientific values, scientific controversies, history of science The politics of integrity In the companion introductory essay to this special issue, readers encounter the accusations and counter-accusations surrounding Didier Raoult.1 Controversial figures like Raoult make compelling reading. The lurid details of his appearance, provocative language, past defiance of conventional wisdom, current climate denialism, and complicated relationship with his staff draw attention and generate doubts about the integrity of 1. Lissa L. Roberts, H. Otto Sibum and Cyrus C. M. Mody, “Integrating the History of Science into Broader Discussions of Research Integrity and Fraud,” History of Science 58 (2020): 354–68. Corresponding author: Lissa L. Roberts, University -

Boletín Fármacos: Ética Y Derecho

Boletín Fármacos: Ética y Derecho Electronic bulletin to promote access to and appropriate use of medicines http://www.saludyfarmacos.org/boletin-farmacos/ Published by Salud y Fármacos Volume 23, number 3, August 2020 Boletín Fármacos is an electronic bulletin of the organization Salud y Fármacos, published four times a year, on the last day of each of the following months: February, May, August and November. Editors Translation Team Núria Homedes Beguer, USA Núria Homedes, USA Antonio Ugalde, USA Enrique Muñoz Soler, Spain Antonio Ugalde, USA Ethics Advisers Maria Cristina Latorre, Colombia Claudio Lorenzo, Brazil Andrea Carolina Reyes Rojas, Colombia Jan Helge Solbakk, Norway Jaime Escobar, Colombia Associate Editors Corina Bontempo Duca de Freitas, Brazil Clinical Trials Advisers Albin Chaves, Costa Rica Juan Erviti, Spain Hernán Collado, Costa Rica Gianni Tognoni, Italy Francisco Debesa García, Cuba Emma Verástegui, Mexico Anahí Dresser, Mexico Claude Verges, Panama José Humberto Duque, Colombia Albert Figueras, Spain Advertising and Promotion Adviser Sergio Gonorazky, Argentina Adriane Fugh-Berman Alejandro Goyret, Uruguay Eduardo Hernández, Mexico Correspondents Luis Justo, Argentina Rafaela Sierra, Central America Óscar Lanza, Bolivia Steven Orozco Arcila, Colombia René Leyva, Mexico Raquel Abrantes, Brazil Duilio Fuentes, Peru Benito Marchand, Ecuador Webmaster Gabriela Minaya, Peru People Walking Bruno Schlemper Junior, Brazil Xavier Seuba, Spain Federico Tobar, Panama Francisco Rossi, Colombia Boletín Fármacos invites communications, news, and research articles on any topic related to access to and use of medicines, including pharmacovigilance; medicines policy; clinical trials; ethics and medicines; dispensing and pharmacy; industry behavior; and recommended and questioned practices in the use and promotion of medicines.