Fault-Resilient Lightweight Cryptographic Block Ciphers for Secure Embedded Systems

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Multiple New Formulas for Cipher Performance Computing

International Journal of Network Security, Vol.20, No.4, PP.788-800, July 2018 (DOI: 10.6633/IJNS.201807 20(4).21) 788 Multiple New Formulas for Cipher Performance Computing Youssef Harmouch1, Rachid Elkouch1, Hussain Ben-azza2 (Corresponding author: Youssef Harmouch) Department of Mathematics, Computing and Networks, National Institute of Posts and Telecommunications1 10100, Allal El Fassi Avenue, Rabat, Morocco (Email:[email protected] ) Department of Industrial and Production Engineering, Moulay Ismail University2 National High School of Arts and Trades, Mekns, Morocco (Received Apr. 03, 2017; revised and accepted July 17, 2017) Abstract are not necessarily commensurate properties. For exam- ple, an online newspaper will be primarily interested in Cryptography is a science that focuses on changing the the integrity of their information while a financial stock readable information to unrecognizable and useless data exchange network may define their security as real-time to any unauthorized person. This solution presents the availability and information privacy [14, 23]. This means main core of network security, therefore the risk analysis that, the many facets of the attribute must all be iden- for using a cipher turn out to be an obligation. Until now, tified and adequately addressed. Furthermore, the secu- the only platform for providing each cipher resistance is rity attributes are terms of qualities, thus measuring such the cryptanalysis study. This cryptanalysis can make it quality terms need a unique identification for their inter- hard to compare ciphers because each one is vulnerable to pretations meaning [20, 24]. Besides, the attributes can a different kind of attack that is often very different from be interdependent. -

Performance and Energy Efficiency of Block Ciphers in Personal Digital Assistants

Performance and Energy Efficiency of Block Ciphers in Personal Digital Assistants Creighton T. R. Hager, Scott F. Midkiff, Jung-Min Park, Thomas L. Martin Bradley Department of Electrical and Computer Engineering Virginia Polytechnic Institute and State University Blacksburg, Virginia 24061 USA {chager, midkiff, jungmin, tlmartin} @ vt.edu Abstract algorithms may consume more energy and drain the PDA battery faster than using less secure algorithms. Due to Encryption algorithms can be used to help secure the processing requirements and the limited computing wireless communications, but securing data also power in many PDAs, using strong cryptographic consumes resources. The goal of this research is to algorithms may also significantly increase the delay provide users or system developers of personal digital between data transmissions. Thus, users and, perhaps assistants and applications with the associated time and more importantly, software and system designers need to energy costs of using specific encryption algorithms. be aware of the benefits and costs of using various Four block ciphers (RC2, Blowfish, XTEA, and AES) were encryption algorithms. considered. The experiments included encryption and This research answers questions regarding energy decryption tasks with different cipher and file size consumption and execution time for various encryption combinations. The resource impact of the block ciphers algorithms executing on a PDA platform with the goal of were evaluated using the latency, throughput, energy- helping software and system developers design more latency product, and throughput/energy ratio metrics. effective applications and systems and of allowing end We found that RC2 encrypts faster and uses less users to better utilize the capabilities of PDA devices. -

Optimization of Core Components of Block Ciphers Baptiste Lambin

Optimization of core components of block ciphers Baptiste Lambin To cite this version: Baptiste Lambin. Optimization of core components of block ciphers. Cryptography and Security [cs.CR]. Université Rennes 1, 2019. English. NNT : 2019REN1S036. tel-02380098 HAL Id: tel-02380098 https://tel.archives-ouvertes.fr/tel-02380098 Submitted on 26 Nov 2019 HAL is a multi-disciplinary open access L’archive ouverte pluridisciplinaire HAL, est archive for the deposit and dissemination of sci- destinée au dépôt et à la diffusion de documents entific research documents, whether they are pub- scientifiques de niveau recherche, publiés ou non, lished or not. The documents may come from émanant des établissements d’enseignement et de teaching and research institutions in France or recherche français ou étrangers, des laboratoires abroad, or from public or private research centers. publics ou privés. THÈSE DE DOCTORAT DE L’UNIVERSITE DE RENNES 1 COMUE UNIVERSITE BRETAGNE LOIRE Ecole Doctorale N°601 Mathématique et Sciences et Technologies de l’Information et de la Communication Spécialité : Informatique Par Baptiste LAMBIN Optimization of Core Components of Block Ciphers Thèse présentée et soutenue à RENNES, le 22/10/2019 Unité de recherche : IRISA Rapporteurs avant soutenance : Marine Minier, Professeur, LORIA, Université de Lorraine Jacques Patarin, Professeur, PRiSM, Université de Versailles Composition du jury : Examinateurs : Marine Minier, Professeur, LORIA, Université de Lorraine Jacques Patarin, Professeur, PRiSM, Université de Versailles Jean-Louis Lanet, INRIA Rennes Virginie Lallemand, Chargée de Recherche, LORIA, CNRS Jérémy Jean, ANSSI Dir. de thèse : Pierre-Alain Fouque, IRISA, Université de Rennes 1 Co-dir. de thèse : Patrick Derbez, IRISA, Université de Rennes 1 Remerciements Je tiens à remercier en premier lieu mes directeurs de thèse, Pierre-Alain et Patrick. -

A Lightweight Encryption Algorithm for Secure Internet of Things

Pre-Print Version, Original article is available at (IJACSA) International Journal of Advanced Computer Science and Applications, Vol. 8, No. 1, 2017 SIT: A Lightweight Encryption Algorithm for Secure Internet of Things Muhammad Usman∗, Irfan Ahmedy, M. Imran Aslamy, Shujaat Khan∗ and Usman Ali Shahy ∗Faculty of Engineering Science and Technology (FEST), Iqra University, Defence View, Karachi-75500, Pakistan. Email: fmusman, [email protected] yDepartment of Electronic Engineering, NED University of Engineering and Technology, University Road, Karachi 75270, Pakistan. Email: firfans, [email protected], [email protected] Abstract—The Internet of Things (IoT) being a promising and apply analytics to share the most valuable data with the technology of the future is expected to connect billions of devices. applications. The IoT is taking the conventional internet, sensor The increased number of communication is expected to generate network and mobile network to another level as every thing mountains of data and the security of data can be a threat. The will be connected to the internet. A matter of concern that must devices in the architecture are essentially smaller in size and be kept under consideration is to ensure the issues related to low powered. Conventional encryption algorithms are generally confidentiality, data integrity and authenticity that will emerge computationally expensive due to their complexity and requires many rounds to encrypt, essentially wasting the constrained on account of security and privacy [4]. energy of the gadgets. Less complex algorithm, however, may compromise the desired integrity. In this paper we propose a A. Applications of IoT: lightweight encryption algorithm named as Secure IoT (SIT). -

Meet-In-The-Middle Attacks on Reduced-Round XTEA*

Meet-in-the-Middle Attacks on Reduced-Round XTEA⋆ Gautham Sekar⋆⋆, Nicky Mouha⋆ ⋆ ⋆, Vesselin Velichkov†, and Bart Preneel 1 Department of Electrical Engineering ESAT/SCD-COSIC, Katholieke Universiteit Leuven, Kasteelpark Arenberg 10, B-3001 Heverlee, Belgium. 2 Interdisciplinary Institute for BroadBand Technology (IBBT), Belgium. {Gautham.Sekar,Nicky.Mouha,Vesselin.Velichkov, Bart.Preneel}@esat.kuleuven.be Abstract. The block cipher XTEA, designed by Needham and Wheeler, was published as a technical report in 1997. The cipher was a result of fixing some weaknesses in the cipher TEA (also designed by Wheeler and Needham), which was used in Microsoft’s Xbox gaming console. XTEA is a 64-round Feistel cipher with a block size of 64 bits and a key size of 128 bits. In this paper, we present meet-in-the-middle attacks on twelve vari- ants of the XTEA block cipher, where each variant consists of 23 rounds. Two of these require only 18 known plaintexts and a computational ef- fort equivalent to testing about 2117 keys, with a success probability of 1−2 −1025. Under the standard (single-key) setting, there is no attack re- ported on 23 or more rounds of XTEA, that requires less time and fewer data than the above. This paper also discusses a variant of the classical meet-in-the-middle approach. All attacks in this paper are applicable to XETA as well, a block cipher that has not undergone public analysis yet. TEA, XTEA and XETA are implemented in the Linux kernel. Keywords: Cryptanalysis, block cipher, meet-in-the-middle attack, Feis- tel network, XTEA, XETA. -

Zero Correlation Linear Cryptanalysis with Reduced Data Complexity

Zero Correlation Linear Cryptanalysis with Reduced Data Complexity Andrey Bogdanov1⋆ and Meiqin Wang1,2⋆ 1 KU Leuven, ESAT/COSIC and IBBT, Belgium 2 Shandong University, Key Laboratory of Cryptologic Technology and Information Security, Ministry of Education, Shandong University, Jinan 250100,China Abstract. Zero correlation linear cryptanalysis is a novel key recovery technique for block ciphers proposed in [5]. It is based on linear approx- imations with probability of exactly 1/2 (which corresponds to the zero correlation). Some block ciphers turn out to have multiple linear approx- imations with correlation zero for each key over a considerable number of rounds. Zero correlation linear cryptanalysis is the counterpart of im- possible differential cryptanalysis in the domain of linear cryptanalysis, though having many technical distinctions and sometimes resulting in stronger attacks. In this paper, we propose a statistical technique to significantly reduce the data complexity using the high number of zero correlation linear approximations available. We also identify zero correlation linear ap- proximations for 14 and 15 rounds of TEA and XTEA. Those result in key-recovery attacks for 21-round TEA and 25-round XTEA, while re- quiring less data than the full code book. In the single secret key setting, these are structural attacks breaking the highest number of rounds for both ciphers. The findings of this paper demonstrate that the prohibitive data com- plexity requirements are not inherent in the zero correlation linear crypt- analysis and can be overcome. Moreover, our results suggest that zero correlation linear cryptanalysis can actually break more rounds than the best known impossible differential cryptanalysis does for relevant block ciphers. -

Impossible Differential Cryptanalysis of the Lightweight Block Ciphers TEA, XTEA and HIGHT

Impossible Differential Cryptanalysis of the Lightweight Block Ciphers TEA, XTEA and HIGHT Jiazhe Chen1;2;3?, Meiqin Wang1;2;3??, and Bart Preneel2;3 1 Key Laboratory of Cryptologic Technology and Information Security, Ministry of Education, School of Mathematics, Shandong University, Jinan 250100, China 2 Department of Electrical Engineering ESAT/SCD-COSIC, Katholieke Universiteit Leuven, Kasteelpark Arenberg 10, B-3001 Heverlee, Belgium 3 Interdisciplinary Institute for BroadBand Technology (IBBT), Belgium [email protected] Abstract. TEA, XTEA and HIGHT are lightweight block ciphers with 64-bit block sizes and 128-bit keys. The round functions of the three ci- phers are based on the simple operations XOR, modular addition and shift/rotation. TEA and XTEA are Feistel ciphers with 64 rounds de- signed by Needham and Wheeler, where XTEA is a successor of TEA, which was proposed by the same authors as an enhanced version of TEA. HIGHT, which is designed by Hong et al., is a generalized Feistel cipher with 32 rounds. These block ciphers are simple and easy to implement but their diffusion is slow, which allows us to find some impossible prop- erties. This paper proposes a method to identify the impossible differentials for TEA and XTEA by using the weak diffusion, where the impossible differential comes from a bit contradiction. Our method finds a 14-round impossible differential of XTEA and a 13-round impossible differential of TEA, which result in impossible differential attacks on 23-round XTEA and 17-round TEA, respectively. These attacks significantly improve the previous impossible differential attacks on 14-round XTEA and 11-round TEA given by Moon et al. -

An Ultra-Lightweight Cryptographic Library for Embedded Systems

ULCL An Ultra-lightweight Cryptographic Library for Embedded Systems George Hatzivasilis1, Apostolos Theodoridis2, Elias Gasparis2 and Charalampos Manifavas3 1 Dept. of Electronic & Computer Engineering, Technical University of Crete, Akrotiri Campus, 73100 Chania, Crete, Greece 2 Dept. of Computer Science, University of Crete, Voutes Campus, 70013 Heraklion, Crete, Greece 3 Dept. of Informatics Engineering, Technological Educational Institute of Crete, Estavromenos, 71500 Heraklion, Crete, Greece Keywords: Cryptographic Library, Lightweight Cryptography, API, Embedded Systems, Security. Abstract: The evolution of embedded systems and their applications in every daily activity, derive the development of lightweight cryptography. Widely used crypto-libraries are too large to fit on constrained devices, like sensor nodes. Also, such libraries provide redundant functionality as each lightweight and ultra-lightweight application utilizes a limited and specific set of crypto-primitives and protocols. In this paper we present the ULCL crypto-library for embedded systems. It is a compact software cryptographic library, optimized for space and performance. The library is a collection of open source ciphers (27 overall primitives). We implement a common lightweight API for utilizing all primitives and a user-friendly API for users that aren’t familiar with cryptographic applications. One of the main novelties is the configurable compilation process. A user can compile the exact set of crypto-primitives that are required to implement a lightweight application. The library is implemented in C and measurements were made on PC, BeagleBone and MemSic IRIS devices. ULCL occupies 4 – 516.7KB of code. We compare our library with other similar proposals and their suitability in different types of embedded devices. 1 INTRODUCTION (ECRYPT, 2008) finalists and the SHA-3 (NIST, 2012). -

FIPS 140-2 Security Policy

Samsung FIPS BC for Mobile Phone and Tablet FIPS 140-2 Security Policy Version 1.6 Last Update: 2014-02-11 Samsung FIPS BC for Mobile Phone and Tablet FIPS 140-2 Security Policy Trademarks .......................................................................................................................... 3 1. Introduction ..................................................................................................................... 4 1.1. Purpose of the Security Policy ................................................................................... 4 1.2. Target Audience ........................................................................................................ 4 2. Cryptographic Module Specification ................................................................................ 5 2.1. Description of Module ............................................................................................... 5 2.2. Description of Approved Mode .................................................................................. 5 2.3. Cryptographic Module Boundary ............................................................................... 8 2.3.1. Software Block Diagram .................................................................................. 8 2.3.2. Hardware Block Diagram................................................................................. 9 3. Cryptographic Module Ports and Interfaces ................................................................... 10 4. Roles, Services and Authentication -

A Secure and Efficient Lightweight Symmetric Encryption Scheme For

S S symmetry Article A Secure and Efficient Lightweight Symmetric Encryption Scheme for Transfer of Text Files between Embedded IoT Devices Sreeja Rajesh 1, Varghese Paul 2, Varun G. Menon 3,* and Mohammad R. Khosravi 4 1 Department of Computer Science, Bharathiar University, Coimbatore 641046, Tamil Nadu, India; [email protected] 2 Department of Information Technology, Cochin University of Science and Technology, Ernakulam 682022, Kerala, India; [email protected] 3 Department of Computer Science and Engineering, SCMS School of Engineering and Technology, Ernakulam 683582, Kerala, India 4 Department of Electrical and Electronic Engineering, Shiraz University of Technology, Shiraz 71555-313, Iran; [email protected] * Correspondence: [email protected]; Tel.: +918714504684 Received: 29 January 2019; Accepted: 20 February 2019; Published: 24 February 2019 Abstract: Recent advancements in wireless technology have created an exponential rise in the number of connected devices leading to the internet of things (IoT) revolution. Large amounts of data are captured, processed and transmitted through the network by these embedded devices. Security of the transmitted data is a major area of concern in IoT networks. Numerous encryption algorithms have been proposed in these years to ensure security of transmitted data through the IoT network. Tiny encryption algorithm (TEA) is the most attractive among all, with its lower memory utilization and ease of implementation on both hardware and software scales. But one of the major issues of TEA and its numerous developed versions is the usage of the same key through all rounds of encryption, which yields a reduced security evident from the avalanche effect of the algorithm. -

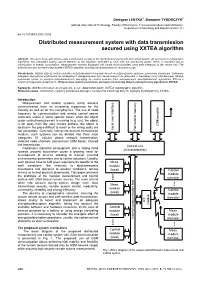

Distributed Measurement System with Data Transmission Secured Using XXTEA Algorithm

Grzegorz LENTKA1, Sławomir TYBORCZYK1 Gdansk University of Technology, Faculty of Electronics, Telecommunications and Informatics, Department of Metrology and Optoelectronics (1) doi:10.15199/48.2016.10.60 Distributed measurement system with data transmission secured using XXTEA algorithm Abstract. The paper deals with wireless data transmission security in the distributed measurement and control system. An overview of cryptographic algorithms was presented paying special attention to the algorithm dedicated to units with low processing power, which is important due to minimization of energy consumption. Measurement modules equipped with simple microcontrollers send data wirelessly to the central unit. The transmission was secured using modified XXTEA algorithm assuring low requirements for resource usage. Streszczenie. Artykuł dotyczy bezpieczeństwa bezprzewodowej transmisji danych w rozproszonym systemie pomiarowo-sterującym. Dokonano przeglądu algorytmów szyfrowania ze szczególnym uwzględnieniem tych dedykowanych do jednostek o niewielkiej mocy obliczeniowej. Moduły pomiarowe oparte na prostych mikrokontrolerach przesyłają do centrali systemu dane zabezpieczone zmodyfikowanym algorytmem XXTEA o niskich wymaganiach sprzętowych. (Rozproszony system pomiarowo-sterujący z transmisją danych zabezpieczoną algorytmem XXTEA). Keywords: distributed measurement systems, secure data transmission, XXTEA cryptographic algorithm. Słowa kluczowe: rozproszone systemy pomiarowo-sterujące, bezpieczna transmisja danych, algorytm kryptograficzny XXTEA. Introduction Measurement and control systems using wireless communication have an increasing importance for the industry as well as for the everyday live. The use of radio frequency for communication and remote control seems especially useful in some specific cases: when the object under control/measurement is moving (e.g. car), the object is far away from the user (meteo probes), the object is GSM module located in the place difficult to reach or the wiring costs are Local user interface not acceptable. -

Xtea Cryptography Implementation in Android Chatting App

JOURNAL OF INFORMATION TECHNOLOGY AND ITS UTILIZATION, VOLUME 3, ISSUE 2, DECEMBER-2020, 36-43 EISSN 2654-802X XTEA CRYPTOGRAPHY IMPLEMENTATION IN ANDROID CHATTING APP Irfan Syamsuddin1, Siska Ihdianty2, Eddy Tungadi3, Kasim4, Irawan5 1,2,3,4,5 Politeknik Negeri Ujung Pandang , Indonesia [email protected] Abstract-- Information security plays a significant role It is a simple but powerful cryptography algorithm which in information society. Cryptography is a key proof of was considered applicable to be implemented in today’s concept to increasing the security of information assets mobile communication era. and has been deployed in various algorithms. Among In this paper, we introduce a work in progress Secure Chat cryptography algorithms is Extended Tiny Encryption application based on Android environment and then Algorithm. This study aims to describe a recent Android demonstrate the way message encrypted and decrypted using Apps to realize XTEA Cryptography in mobile form. In XTEA algorithm. addition, a thorough example is presented to enable The rest of paper is organized in the following structure. readers gain understanding on how it works within our Section 2 presents literature review of XTEA. In section 3, Android Apps. Secure Chat application based on Android is presented. Then, the next section presents the actual encryption and decryption Keywords: information security; cryptography; XTEA; mathematics visualization mechanisms are exemplified in section 4. Finally, concluding remarks are given in section 5. TEA is a symmetrical algorithm. TEA is a block cipher I. INTRODUCTION algorithm created by David J. Wheeler and Roger M. Needham of Cambridge University in 1994. TEA operates in a The role of information security has gained more serious size of 64 bits and a key length of 128 bits[1][2].