Trace-Based Just-In-Time Compilation for Lazy Functional Programming Languages

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

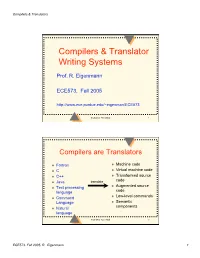

Compilers & Translator Writing Systems

Compilers & Translators Compilers & Translator Writing Systems Prof. R. Eigenmann ECE573, Fall 2005 http://www.ece.purdue.edu/~eigenman/ECE573 ECE573, Fall 2005 1 Compilers are Translators Fortran Machine code C Virtual machine code C++ Transformed source code Java translate Augmented source Text processing language code Low-level commands Command Language Semantic components Natural language ECE573, Fall 2005 2 ECE573, Fall 2005, R. Eigenmann 1 Compilers & Translators Compilers are Increasingly Important Specification languages Increasingly high level user interfaces for ↑ specifying a computer problem/solution High-level languages ↑ Assembly languages The compiler is the translator between these two diverging ends Non-pipelined processors Pipelined processors Increasingly complex machines Speculative processors Worldwide “Grid” ECE573, Fall 2005 3 Assembly code and Assemblers assembly machine Compiler code Assembler code Assemblers are often used at the compiler back-end. Assemblers are low-level translators. They are machine-specific, and perform mostly 1:1 translation between mnemonics and machine code, except: – symbolic names for storage locations • program locations (branch, subroutine calls) • variable names – macros ECE573, Fall 2005 4 ECE573, Fall 2005, R. Eigenmann 2 Compilers & Translators Interpreters “Execute” the source language directly. Interpreters directly produce the result of a computation, whereas compilers produce executable code that can produce this result. Each language construct executes by invoking a subroutine of the interpreter, rather than a machine instruction. Examples of interpreters? ECE573, Fall 2005 5 Properties of Interpreters “execution” is immediate elaborate error checking is possible bookkeeping is possible. E.g. for garbage collection can change program on-the-fly. E.g., switch libraries, dynamic change of data types machine independence. -

Source-To-Source Translation and Software Engineering

Journal of Software Engineering and Applications, 2013, 6, 30-40 http://dx.doi.org/10.4236/jsea.2013.64A005 Published Online April 2013 (http://www.scirp.org/journal/jsea) Source-to-Source Translation and Software Engineering David A. Plaisted Department of Computer Science, University of North Carolina at Chapel Hill, Chapel Hill, USA. Email: [email protected] Received February 5th, 2013; revised March 7th, 2013; accepted March 15th, 2013 Copyright © 2013 David A. Plaisted. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. ABSTRACT Source-to-source translation of programs from one high level language to another has been shown to be an effective aid to programming in many cases. By the use of this approach, it is sometimes possible to produce software more cheaply and reliably. However, the full potential of this technique has not yet been realized. It is proposed to make source- to-source translation more effective by the use of abstract languages, which are imperative languages with a simple syntax and semantics that facilitate their translation into many different languages. By the use of such abstract lan- guages and by translating only often-used fragments of programs rather than whole programs, the need to avoid writing the same program or algorithm over and over again in different languages can be reduced. It is further proposed that programmers be encouraged to write often-used algorithms and program fragments in such abstract languages. Libraries of such abstract programs and program fragments can then be constructed, and programmers can be encouraged to make use of such libraries by translating their abstract programs into application languages and adding code to join things together when coding in various application languages. -

The LLVM Instruction Set and Compilation Strategy

The LLVM Instruction Set and Compilation Strategy Chris Lattner Vikram Adve University of Illinois at Urbana-Champaign lattner,vadve ¡ @cs.uiuc.edu Abstract This document introduces the LLVM compiler infrastructure and instruction set, a simple approach that enables sophisticated code transformations at link time, runtime, and in the field. It is a pragmatic approach to compilation, interfering with programmers and tools as little as possible, while still retaining extensive high-level information from source-level compilers for later stages of an application’s lifetime. We describe the LLVM instruction set, the design of the LLVM system, and some of its key components. 1 Introduction Modern programming languages and software practices aim to support more reliable, flexible, and powerful software applications, increase programmer productivity, and provide higher level semantic information to the compiler. Un- fortunately, traditional approaches to compilation either fail to extract sufficient performance from the program (by not using interprocedural analysis or profile information) or interfere with the build process substantially (by requiring build scripts to be modified for either profiling or interprocedural optimization). Furthermore, they do not support optimization either at runtime or after an application has been installed at an end-user’s site, when the most relevant information about actual usage patterns would be available. The LLVM Compilation Strategy is designed to enable effective multi-stage optimization (at compile-time, link-time, runtime, and offline) and more effective profile-driven optimization, and to do so without changes to the traditional build process or programmer intervention. LLVM (Low Level Virtual Machine) is a compilation strategy that uses a low-level virtual instruction set with rich type information as a common code representation for all phases of compilation. -

Tdb: a Source-Level Debugger for Dynamically Translated Programs

Tdb: A Source-level Debugger for Dynamically Translated Programs Naveen Kumar†, Bruce R. Childers†, and Mary Lou Soffa‡ †Department of Computer Science ‡Department of Computer Science University of Pittsburgh University of Virginia Pittsburgh, Pennsylvania 15260 Charlottesville, Virginia 22904 {naveen, childers}@cs.pitt.edu [email protected] Abstract single stepping through program execution, watching for par- ticular conditions and requests to add and remove breakpoints. Debugging techniques have evolved over the years in response In order to respond, the debugger has to map the values and to changes in programming languages, implementation tech- statements that the user expects using the source program niques, and user needs. A new type of implementation vehicle viewpoint, to the actual values and locations of the statements for software has emerged that, once again, requires new as found in the executable program. debugging techniques. Software dynamic translation (SDT) As programming languages have evolved, new debugging has received much attention due to compelling applications of techniques have been developed. For example, checkpointing the technology, including software security checking, binary and time stamping techniques have been developed for lan- translation, and dynamic optimization. Using SDT, program guages with concurrent constructs [6,19,30]. The pervasive code changes dynamically, and thus, debugging techniques use of code optimizations to improve performance has necessi- developed for statically generated code cannot be used to tated techniques that can respond to queries even though the debug these applications. In this paper, we describe a new optimization may have changed the number of statement debug architecture for applications executing with SDT sys- instances and the order of execution [15,25,29]. -

An Execution Model for Serverless Functions at the Edge

An Execution Model for Serverless Functions at the Edge Adam Hall Umakishore Ramachandran Georgia Institute of Technology Georgia Institute of Technology Atlanta, Georgia Atlanta, Georgia ach@gatech:edu rama@gatech:edu ABSTRACT 1 INTRODUCTION Serverless computing platforms allow developers to host single- Enabling next generation technologies such as self-driving cars or purpose applications that automatically scale with demand. In con- smart cities via edge computing requires us to reconsider the way trast to traditional long-running applications on dedicated, virtu- we characterize and deploy the services supporting those technolo- alized, or container-based platforms, serverless applications are gies. Edge/fog environments consist of many micro data centers intended to be instantiated when called, execute a single function, spread throughout the edge of the network. This is in stark contrast and shut down when finished. State-of-the-art serverless platforms to the cloud, where we assume the notion of unlimited resources achieve these goals by creating a new container instance to host available in a few centralized data centers. These micro data center a function when it is called and destroying the container when it environments must support large numbers of Internet of Things completes. This design allows for cost and resource savings when (IoT) devices on limited hardware resources, processing the mas- hosting simple applications, such as those supporting IoT devices sive amounts of data those devices generate while providing quick at the edge of the network. However, the use of containers intro- decisions to inform their actions [44]. One solution to supporting duces some overhead which may be unsuitable for applications emerging technologies at the edge lies in serverless computing. -

GHC Reading Guide

GHC Reading Guide - Exploring entrances and mental models to the source code - Takenobu T. Rev. 0.01.1 WIP NOTE: - This is not an official document by the ghc development team. - Please refer to the official documents in detail. - Don't forget “semantics”. It's very important. - This is written for ghc 9.0. Contents Introduction 1. Compiler - Compilation pipeline - Each pipeline stages - Intermediate language syntax - Call graph 2. Runtime system 3. Core libraries Appendix References Introduction Introduction Official resources are here GHC source repository : The GHC Commentary (for developers) : https://gitlab.haskell.org/ghc/ghc https://gitlab.haskell.org/ghc/ghc/-/wikis/commentary GHC Documentation (for users) : * master HEAD https://ghc.gitlab.haskell.org/ghc/doc/ * latest major release https://downloads.haskell.org/~ghc/latest/docs/html/ * version specified https://downloads.haskell.org/~ghc/9.0.1/docs/html/ The User's Guide Core Libraries GHC API Introduction The GHC = Compiler + Runtime System (RTS) + Core Libraries Haskell source (.hs) GHC compiler RuntimeSystem Core Libraries object (.o) (libHsRts.o) (GHC.Base, ...) Linker Executable binary including the RTS (* static link case) Introduction Each division is located in the GHC source tree GHC source repository : https://gitlab.haskell.org/ghc/ghc compiler/ ... compiler sources rts/ ... runtime system sources libraries/ ... core library sources ghc/ ... compiler main includes/ ... include files testsuite/ ... test suites nofib/ ... performance tests mk/ ... build system hadrian/ ... hadrian build system docs/ ... documents : : 1. Compiler 1. Compiler Compilation pipeline 1. compiler The GHC compiler Haskell language GHC compiler Assembly language (native or llvm) 1. compiler GHC compiler comprises pipeline stages GHC compiler Haskell language Parser Renamer Type checker Desugarer Core to Core Core to STG STG to Cmm Assembly language Cmm to Asm (native or llvm) 1. -

EEMBC and the Purposes of Embedded Processor Benchmarking Markus Levy, President

EEMBC and the Purposes of Embedded Processor Benchmarking Markus Levy, President ISPASS 2005 Certified Performance Analysis for Embedded Systems Designers EEMBC: A Historical Perspective • Began as an EDN Magazine project in April 1997 • Replace Dhrystone • Have meaningful measure for explaining processor behavior • Developed business model • Invited worldwide processor vendors • A consortium was born 1 EEMBC Membership • Board Member • Membership Dues: $30,000 (1st year); $16,000 (subsequent years) • Access and Participation on ALL Subcommittees • Full Voting Rights • Subcommittee Member • Membership Dues Are Subcommittee Specific • Access to Specific Benchmarks • Technical Involvement Within Subcommittee • Help Determine Next Generation Benchmarks • Special Academic Membership EEMBC Philosophy: Standardized Benchmarks and Certified Scores • Member derived benchmarks • Determine the standard, the process, and the benchmarks • Open to industry feedback • Ensures all processor/compiler vendors are running the same tests • Certification process ensures credibility • All benchmark scores officially validated before publication • The entire benchmark environment must be disclosed 2 Embedded Industry: Represented by Very Diverse Applications • Networking • Storage, low- and high-end routers, switches • Consumer • Games, set top boxes, car navigation, smartcards • Wireless • Cellular, routers • Office Automation • Printers, copiers, imaging • Automotive • Engine control, Telematics Traditional Division of Embedded Applications Low High Power -

A Status Update of BEAMJIT, the Just-In-Time Compiling Abstract Machine

A Status Update of BEAMJIT, the Just-in-Time Compiling Abstract Machine Frej Drejhammar and Lars Rasmusson <ffrej,[email protected]> 140609 Who am I? Senior researcher at the Swedish Institute of Computer Science (SICS) working on programming tools and distributed systems. Acknowledgments Project funded by Ericsson AB. Joint work with Lars Rasmusson <[email protected]>. What this talk is About An introduction to how BEAMJIT works and a detailed look at some subtle details of its implementation. Outline Background BEAMJIT from 10000m BEAMJIT-aware Optimization Compiler-supported Profiling Future Work Questions Just-In-Time (JIT) Compilation Decide at runtime to compile \hot" parts to native code. Fairly common implementation technique. McCarthy's Lisp (1969) Python (Psyco, PyPy) Smalltalk (Cog) Java (HotSpot) JavaScript (SquirrelFish Extreme, SpiderMonkey, J¨agerMonkey, IonMonkey, V8) Motivation A JIT compiler increases flexibility. Tracing does not require switching to full emulation. Cross-module optimization. Compiled BEAM modules are platform independent: No need for cross compilation. Binaries not strongly coupled to a particular build of the emulator. Integrates naturally with code upgrade. Project Goals Do as little manual work as possible. Preserve the semantics of plain BEAM. Automatically stay in sync with the plain BEAM, i.e. if bugs are fixed in the interpreter the JIT should not have to be modified manually. Have a native code generator which is state-of-the-art. Eventually be better than HiPE (steady-state). Plan Use automated tools to transform and extend the BEAM. Use an off-the-shelf optimizer and code generator. Implement a tracing JIT compiler. BEAM: Specification & Implementation BEAM is the name of the Erlang VM. -

Cloud Workbench a Web-Based Framework for Benchmarking Cloud Services

Bachelor August 12, 2014 Cloud WorkBench A Web-Based Framework for Benchmarking Cloud Services Joel Scheuner of Muensterlingen, Switzerland (10-741-494) supervised by Prof. Dr. Harald C. Gall Philipp Leitner, Jürgen Cito software evolution & architecture lab Bachelor Cloud WorkBench A Web-Based Framework for Benchmarking Cloud Services Joel Scheuner software evolution & architecture lab Bachelor Author: Joel Scheuner, [email protected] Project period: 04.03.2014 - 14.08.2014 Software Evolution & Architecture Lab Department of Informatics, University of Zurich Acknowledgements This bachelor thesis constitutes the last milestone on the way to my first academic graduation. I would like to thank all the people who accompanied and supported me in the last four years of study and work in industry paving the way to this thesis. My personal thanks go to my parents supporting me in many ways. The decision to choose a complex topic in an area where I had no personal experience in advance, neither theoretically nor technologically, made the past four and a half months challenging, demanding, work-intensive, but also very educational which is what remains in good memory afterwards. Regarding this thesis, I would like to offer special thanks to Philipp Leitner who assisted me during the whole process and gave valuable advices. Moreover, I want to thank Jürgen Cito for his mainly technologically-related recommendations, Rita Erne for her time spent with me on language review sessions, and Prof. Harald Gall for giving me the opportunity to write this thesis at the Software Evolution & Architecture Lab at the University of Zurich and providing fundings and infrastructure to realize this thesis. -

Automatic Benchmark Profiling Through Advanced Trace Analysis Alexis Martin, Vania Marangozova-Martin

Automatic Benchmark Profiling through Advanced Trace Analysis Alexis Martin, Vania Marangozova-Martin To cite this version: Alexis Martin, Vania Marangozova-Martin. Automatic Benchmark Profiling through Advanced Trace Analysis. [Research Report] RR-8889, Inria - Research Centre Grenoble – Rhône-Alpes; Université Grenoble Alpes; CNRS. 2016. hal-01292618 HAL Id: hal-01292618 https://hal.inria.fr/hal-01292618 Submitted on 24 Mar 2016 HAL is a multi-disciplinary open access L’archive ouverte pluridisciplinaire HAL, est archive for the deposit and dissemination of sci- destinée au dépôt et à la diffusion de documents entific research documents, whether they are pub- scientifiques de niveau recherche, publiés ou non, lished or not. The documents may come from émanant des établissements d’enseignement et de teaching and research institutions in France or recherche français ou étrangers, des laboratoires abroad, or from public or private research centers. publics ou privés. Automatic Benchmark Profiling through Advanced Trace Analysis Alexis Martin , Vania Marangozova-Martin RESEARCH REPORT N° 8889 March 23, 2016 Project-Team Polaris ISSN 0249-6399 ISRN INRIA/RR--8889--FR+ENG Automatic Benchmark Profiling through Advanced Trace Analysis Alexis Martin ∗ † ‡, Vania Marangozova-Martin ∗ † ‡ Project-Team Polaris Research Report n° 8889 — March 23, 2016 — 15 pages Abstract: Benchmarking has proven to be crucial for the investigation of the behavior and performances of a system. However, the choice of relevant benchmarks still remains a challenge. To help the process of comparing and choosing among benchmarks, we propose a solution for automatic benchmark profiling. It computes unified benchmark profiles reflecting benchmarks’ duration, function repartition, stability, CPU efficiency, parallelization and memory usage. -

Toward IFVM Virtual Machine: a Model Driven IFML Interpretation

Toward IFVM Virtual Machine: A Model Driven IFML Interpretation Sara Gotti and Samir Mbarki MISC Laboratory, Faculty of Sciences, Ibn Tofail University, BP 133, Kenitra, Morocco Keywords: Interaction Flow Modelling Language IFML, Model Execution, Unified Modeling Language (UML), IFML Execution, Model Driven Architecture MDA, Bytecode, Virtual Machine, Model Interpretation, Model Compilation, Platform Independent Model PIM, User Interfaces, Front End. Abstract: UML is the first international modeling language standardized since 1997. It aims at providing a standard way to visualize the design of a system, but it can't model the complex design of user interfaces and interactions. However, according to MDA approach, it is necessary to apply the concept of abstract models to user interfaces too. IFML is the OMG adopted (in March 2013) standard Interaction Flow Modeling Language designed for abstractly expressing the content, user interaction and control behaviour of the software applications front-end. IFML is a platform independent language, it has been designed with an executable semantic and it can be mapped easily into executable applications for various platforms and devices. In this article we present an approach to execute the IFML. We introduce a IFVM virtual machine which translate the IFML models into bytecode that will be interpreted by the java virtual machine. 1 INTRODUCTION a fundamental standard fUML (OMG, 2011), which is a subset of UML that contains the most relevant The software development has been affected by the part of class diagrams for modeling the data apparition of the MDA (OMG, 2015) approach. The structure and activity diagrams to specify system trend of the 21st century (BRAMBILLA et al., behavior; it contains all UML elements that are 2014) which has allowed developers to build their helpful for the execution of the models. -

Superoptimization of Webassembly Bytecode

Superoptimization of WebAssembly Bytecode Javier Cabrera Arteaga Shrinish Donde Jian Gu Orestis Floros [email protected] [email protected] [email protected] [email protected] Lucas Satabin Benoit Baudry Martin Monperrus [email protected] [email protected] [email protected] ABSTRACT 2 BACKGROUND Motivated by the fast adoption of WebAssembly, we propose the 2.1 WebAssembly first functional pipeline to support the superoptimization of Web- WebAssembly is a binary instruction format for a stack-based vir- Assembly bytecode. Our pipeline works over LLVM and Souper. tual machine [17]. As described in the WebAssembly Core Specifica- We evaluate our superoptimization pipeline with 12 programs from tion [7], WebAssembly is a portable, low-level code format designed the Rosetta code project. Our pipeline improves the code section for efficient execution and compact representation. WebAssembly size of 8 out of 12 programs. We discuss the challenges faced in has been first announced publicly in 2015. Since 2017, it has been superoptimization of WebAssembly with two case studies. implemented by four major web browsers (Chrome, Edge, Firefox, and Safari). A paper by Haas et al. [11] formalizes the language and 1 INTRODUCTION its type system, and explains the design rationale. The main goal of WebAssembly is to enable high performance After HTML, CSS, and JavaScript, WebAssembly (WASM) has be- applications on the web. WebAssembly can run as a standalone VM come the fourth standard language for web development [7]. This or in other environments such as Arduino [10]. It is independent new language has been designed to be fast, platform-independent, of any specific hardware or languages and can be compiled for and experiments have shown that WebAssembly can have an over- modern architectures or devices, from a wide variety of high-level head as low as 10% compared to native code [11].