Top Challenges from the First Practical Online Controlled Experiments Summit

Recommended publications

-

The Financialisation of Big Tech

Engineering digital monopolies The financialisation of Big Tech Rodrigo Fernandez & Ilke Adriaans & Tobias J. Klinge & Reijer Hendrikse December 2020 Colophon Engineering digital monopolies The financialisation of Big Tech December 2020 Authors: Rodrigo Fernandez (SOMO), Ilke Editor: Marieke Krijnen Adriaans (SOMO), Tobias J. Klinge (KU Layout: Frans Schupp Leuven) and Reijer Hendrikse (VUB) Cover photo: Geralt/Pixabay With contributions from: ISBN: 978-94-6207-155-1 Manuel Aalbers and The Real Estate/ Financial Complex research group at KU Leuven, David Bassens, Roberta Cowan, Vincent Kiezebrink, Adam Leaver, Michiel van Meeteren, Jasper van Teffelen, Callum Ward Stichting Onderzoek Multinationale The Centre for Research on Multinational Ondernemingen Corporations (SOMO) is an independent, Centre for Research on Multinational not-for-profit research and network organi- Corporations sation working on social, ecological and economic issues related to sustainable Sarphatistraat 30 development. Since 1973, the organisation 1018 GL Amsterdam investigates multinational corporations The Netherlands and the consequences of their activities T: +31 (0)20 639 12 91 for people and the environment around F: +31 (0)20 639 13 21 the world. [email protected] www.somo.nl Made possible in collaboration with KU Leuven and Vrije Universiteit Brussel (VUB) with financial assistance from the Research Foundation Flanders (FWO), grant numbers G079718N and G004920N. The content of this publication is the sole responsibility of SOMO and can in no way be taken to reflect the views of any of the funders. Engineering digital monopolies The financialisation of Big Tech SOMO Rodrigo Fernandez, Ilke Adriaans, Tobias J. Klinge and Reijer Hendrikse Amsterdam, December 2020 Contents 1 Introduction .......................................................................................................... -

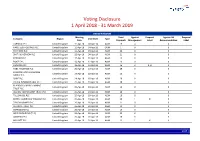

Global Voting Activity Report to March 2019

Voting Disclosure 1 April 2018 - 31 March 2019 UNITED KINGDOM Meeting Total Against Proposal Against ISS Proposal Company Region Vote Date Type Date Proposals Management Label Recommendation Label CARNIVAL PLC United Kingdom 11-Apr-18 04-Apr-18 AGM 19 0 0 HANSTEEN HOLDINGS PLC United Kingdom 11-Apr-18 04-Apr-18 OGM 1 0 0 RIO TINTO PLC United Kingdom 11-Apr-18 04-Apr-18 AGM 22 0 0 SMITH & NEPHEW PLC United Kingdom 12-Apr-18 04-Apr-18 AGM 21 0 0 PORVAIR PLC United Kingdom 17-Apr-18 10-Apr-18 AGM 15 0 0 BUNZL PLC United Kingdom 18-Apr-18 12-Apr-18 AGM 19 0 0 HUNTING PLC United Kingdom 18-Apr-18 12-Apr-18 AGM 16 2 3, 8 0 HSBC HOLDINGS PLC United Kingdom 20-Apr-18 13-Apr-18 AGM 29 0 0 LONDON STOCK EXCHANGE United Kingdom 24-Apr-18 18-Apr-18 AGM 26 0 0 GROUP PLC SHIRE PLC United Kingdom 24-Apr-18 18-Apr-18 AGM 20 0 0 CRODA INTERNATIONAL PLC United Kingdom 25-Apr-18 19-Apr-18 AGM 18 0 0 BLACKROCK WORLD MINING United Kingdom 25-Apr-18 19-Apr-18 AGM 15 0 0 TRUST PLC ALLIANZ TECHNOLOGY TRUST PLC United Kingdom 25-Apr-18 19-Apr-18 AGM 10 0 0 TULLOW OIL PLC United Kingdom 25-Apr-18 19-Apr-18 AGM 16 0 0 BRITISH AMERICAN TOBACCO PLC United Kingdom 25-Apr-18 19-Apr-18 AGM 20 1 8 0 TAYLOR WIMPEY PLC United Kingdom 26-Apr-18 20-Apr-18 AGM 21 0 0 ALLIANCE TRUST PLC United Kingdom 26-Apr-18 20-Apr-18 AGM 13 0 0 SCHRODERS PLC United Kingdom 26-Apr-18 20-Apr-18 AGM 19 0 0 WEIR GROUP PLC (THE) United Kingdom 26-Apr-18 20-Apr-18 AGM 23 0 0 AGGREKO PLC United Kingdom 26-Apr-18 20-Apr-18 AGM 20 0 0 MEGGITT PLC United Kingdom 26-Apr-18 20-Apr-18 AGM 22 1 4 0 1/47 -

Google, Bing, Yahoo!, Yandex & Baidu

Google, bing, Yahoo!, Yandex & Baidu – major differences and ranking factors. NO IMPACT » MAJOR IMPACT 1 2 3 4 TECHNICAL Domains & URLs - Use main pinyin keyword in domain - Main keyword in the domain - Main keyword in the domain - Main keyword in the domain - Easy to remember Keyword Domain 1 - As short as possible 2 - As short as possible 2 - As short as possible 11 - As short as possible - Use main pinyin keyword in URL - Main keyword as early as possible - Main keyword as early as possible - Main keyword as early as possible - Easy to remember Keyword in URL Path 3 - One specific keyword 3 - One specific keyword 3 - One specific keyword 11 - As short as possible - As short as possible - As short as possible - As short as possible - No filling words - No filling words - As short as possible Length of URL 2 2 2 22 - URL directory depth as brief as possible - No repeat of terms - No repeat of terms - Complete website secured with SSL -No ranking boost from HTTPS - No active SSL promotion - Complete website secured with SSL HTTPS 3 - No mix of HTTP and HTTPS 2 -Complete website secured with SSL 1 - Mostly recommended for authentication 22 - HTTPS is easier to be indexed than HTTP Country & Language - ccTLD or gTLD with country and language directories - ccTLD or gTLD with country and language directories - Better ranking with ccTLD (.cn or .com.cn) - Slightly better rankings in Russia with ccTLD .ru Local Top-Level Domain 3 - No regional domains, e.g. domain.eu, domain.asia 3 - No regional domains, e.g. -

Kosher Nosh Guide Summer 2020

k Kosher Nosh Guide Summer 2020 For the latest information check www.isitkosher.uk CONTENTS 5 USING THE PRODUCT LISTINGS 5 EXPLANATION OF KASHRUT SYMBOLS 5 PROBLEMATIC E NUMBERS 6 BISCUITS 6 BREAD 7 CHOCOLATE & SWEET SPREADS 7 CONFECTIONERY 18 CRACKERS, RICE & CORN CAKES 18 CRISPS & SNACKS 20 DESSERTS 21 ENERGY & PROTEIN SNACKS 22 ENERGY DRINKS 23 FRUIT SNACKS 24 HOT CHOCOLATE & MALTED DRINKS 24 ICE CREAM CONES & WAFERS 25 ICE CREAMS, LOLLIES & SORBET 29 MILK SHAKES & MIXES 30 NUTS & SEEDS 31 PEANUT BUTTER & MARMITE 31 POPCORN 31 SNACK BARS 34 SOFT DRINKS 42 SUGAR FREE CONFECTIONERY 43 SYRUPS & TOPPINGS 43 YOGHURT DRINKS 44 YOGHURTS & DAIRY DESSERTS The information in this guide is only applicable to products made for the UK market. All details are correct at the time of going to press but are subject to change. For the latest information check www.isitkosher.uk. Sign up for email alerts and updates on www.kosher.org.uk or join Facebook KLBD Kosher Direct. No assumptions should be made about the kosher status of products not listed, even if others in the range are approved or certified. It is preferable, whenever possible, to buy products made under Rabbinical supervision. WARNING: The designation ‘Parev’ does not guarantee that a product is suitable for those with dairy or lactose intolerance. WARNING: The ‘Nut Free’ symbol is displayed next to a product based on information from manufacturers. The KLBD takes no responsibility for this designation. You are advised to check the allergen information on each product. k GUESS WHAT'S IN YOUR FOOD k USING THE PRODUCT LISTINGS Hi Noshers! PRODUCTS WHICH ARE KLBD CERTIFIED Even in these difficult times, and perhaps now more than ever, Like many kashrut authorities around the world, the KLBD uses the American we need our Nosh! kosher logo system. -

The Effects of Varying Levels of Object Change on Explicit And

THE EFFECTS OF VARYING LEVELS OF OBJECT CHANGE ON EXPLICIT AND IMPLICIT MEMORY FOR BRAND MESSAGES WITHIN ADVERGAMES A Thesis Presented to the Faculty of the Graduate School University of Missouri-Columbia In Partial Fulfillment of the Requirements for the Degree Master of Arts By NICHOLAS D’ANDRADE Dr. Paul Bolls, Thesis Supervisor MAY 2007 © Copyright by Nicholas D’Andrade 2007 All Rights Reserved The undersigned, appointed by the Dean of the Graduate School, have examined the thesis entitled THE EFFECTS OF VARYING LEVELS OF OBJECT CHANGE ON EXPLICIT AND IMPLICIT MEMORY FOR BRAND MESSAGES WITHIN ADVERGAMES Presented by Nicholas D’Andrade, A candidate for the degree of Master of Arts And hereby certify that, in their opinion, it is worthy of acceptance Jennifer Aubrey Professor Jennifer Aubrey Paul Bolls Professor Paul Bolls Glenn Leshner Professor Glenn Leshner Kevin Wise Professor Kevin Wise DEDICATION To Kim, whose support, friendship, patience and love help me through every single day. Thank You. To Taya, you weren’t here when I started this but you were the biggest reason that I wanted to finish. To Mom and Dad, for everything the two of you have given me, during my academic journey and otherwise. A little more of that painting is revealed... To Barb and Jim, for all your help, all your love, and for giving me a place to come home to. To TJ, for being the friend 2000 miles away who was LITERALLY always available for a late chat on the phone. To Paul and Val, who have provided unwavering support and hospitality to Kim, Taya and me. -

Know Your Emeritus Member... STAN KOPECKY

Know your Emeritus Member... STAN KOPECKY Stan founded his consulting practice, which specializes in the design, development, testing and commercialization of consumer products packaging following a distinguished career with several of the largest and most sophisticated consumer products companies in the world. He began his career as a Food Research Scientist with Armour and Company. Shortly thereafter he joined Northfield, IL-based Kraft Inc. where he progressed rapidly through positions as a Packaging Research Scientist, Assistant Packaging Manager and Packaging Manager. While at Kraft, he implemented a series of programs that generated $3-5 million per year in savings in the production of rigid and flexible packaging categories; spearheaded the development of the Company’s first Vendor Certification Program for packaging materials and developed and delivered a packaging awareness program that proved successful in improving product quality and safety. Most recently Stan served as a Senior Packaging Project Manager with Chicago, IL-based Wm. Wrigley Jr. Company, the world’s largest manufacturer and marketer of chewing gum and a leader in the confectionary field. While at Wrigley, he played a major role in the evolution of the company, going from a provider of relatively indistinguishable commodities to a consumer focused, product feature driven business. In that regard, he was instrumental in achieving the number one position in the sugarless gum category by designing the packaging for Orbit tab gum, which proved to be a powerful product differentiator and which was awarded both a U.S. patent and the Company’s Creativity Award. He supported the Company’s successful entry into the breath mint segment by designing the tin and carton packaging utilized for the introduction of the Eclipse and Excel products into the U.S. -

The Calling Card of Russian Digital Antitrust✩ Natalia S

Russian Journal of Economics 6 (2020) 258–276 DOI 10.32609/j.ruje.6.53904 Publication date: 25 September 2020 www.rujec.org The calling card of Russian digital antitrust✩ Natalia S. Pavlova a,b,*, Andrey E. Shastitko a,b, Alexander A. Kurdin b a Russian Presidential Academy of National Economy and Public Administration, Moscow, Russia b Lomonosov Moscow State University, Moscow, Russia Abstract Digital antitrust is at the forefront of all expert discussions and is far from becoming an area of consensus among researchers. Moreover, the prescriptions for developed countries do not fit well the situation in developing countries, and namely in BRICS: where the violator of antitrust laws is based compared to national firms becomes an important factor that links competition and industrial policy. The article uses three recent cases from Russian antitrust policy in the digital sphere to illustrate typical patterns of platform conduct that lead not just to a restriction of competition that needs to be remedied by antitrust measures, but also to noteworthy distribution effects. The cases also illustrate the approach taken by the Russian competition authority to some typical problems that arise in digital markets, e.g. market definition, conduct interpretation, behavioral ef- fects, and remedies. The analysis sheds light on the specifics of Russian antitrust policy in digital markets, as well as their interpretation in the context of competition policy in developing countries and the link between competition and industrial policies. Keywords: digital antitrust, competition policy, industrial policy, platforms, multi-sided markets, essential facilities, consumer bias. JEL classification: K21, L41. 1. Introduction Russian antitrust as a direction of economic policy has a relatively short history, but a rich experience in the application of antitrust laws in areas that are considered digital. -

Press-Release Morgan Lewis Advises Yandex 31.08.2021 ENG

Contacts: Emily Carhart Director of Public Relations & Communications +1.202.739.5392 [email protected] Olga Karavaeva Marketing & Communications Manager in Russia +7.495.212.25.18 [email protected] Morgan Lewis Advising Yandex on $1B Acquisition of Interests in Businesses from Uber LONDON and MOSCOW, September 8, 2021: Morgan Lewis is advising Yandex on the restructuring of the ownership of its MLU and Self-Driving joint ventures with Uber, which will see Yandex own 100% of the Yandex.Eats, Yandex.Lavka, Yandex.Delivery and Self-Driving businesses, for consideration of $1.0 billion in cash. Yandex has also received an option to purchase Uber’s remaining stake in MLU over a two-year period for approximately $1.8 billion in cash. Yandex is one of Europe's largest internet companies and a leading search and ride-hailing provider in Russia. The cross-border Morgan Lewis team advising Yandex includes partners Tim Corbett, Nick Moore, Ksenia Andreeva, Anastasia Dergacheva and Omar Shah, and associates Ben Davies, Abbey Brimson, and Leonidas Theodosiou. For more, see Yandex’s announcement. About Morgan, Lewis & Bockius LLP Morgan Lewis is recognized for exceptional client service, legal innovation, and commitment to its communities. Our global depth reaches across North America, Asia, Europe, and the Middle East with the collaboration of more than 2,200 lawyers and specialists who provide elite legal services across industry sectors for multinational corporations to startups around the world. For more information about us, please visit www.morganlewis.com and connect with us on LinkedIn, Twitter, Facebook, Instagram, and WeChat. -

Why Are Some Websites Researched More Than Others? a Review of Research Into the Global Top Twenty Mike Thelwall

Why are some websites researched more than others? A review of research into the global top twenty Mike Thelwall How to cite this article: Thelwall, Mike (2020). “Why are some websites researched more than others? A review of research into the global top twenty”. El profesional de la información, v. 29, n. 1, e290101. https://doi.org/10.3145/epi.2020.ene.01 Manuscript received on 28th September 2019 Accepted on 15th October 2019 Mike Thelwall * https://orcid.org/0000-0001-6065-205X University of Wolverhampton, Statistical Cybermetrics Research Group Wulfruna Street Wolverhampton WV1 1LY, UK [email protected] Abstract The web is central to the work and social lives of a substantial fraction of the world’s population, but the role of popular websites may not always be subject to academic scrutiny. This is a concern if social scientists are unable to understand an aspect of users’ daily lives because one or more major websites have been ignored. To test whether popular websites may be ignored in academia, this article assesses the volume and citation impact of research mentioning any of twenty major websites. The results are consistent with the user geographic base affecting research interest and citation impact. In addition, site affordances that are useful for research also influence academic interest. Because of the latter factor, however, it is not possible to estimate the extent of academic knowledge about a site from the number of publications that mention it. Nevertheless, the virtual absence of international research about some globally important Chinese and Russian websites is a serious limitation for those seeking to understand reasons for their web success, the markets they serve or the users that spend time on them. -

Experience of European and Moscow Metro Systems

EU AND ITS NEIGHBOURHOOD: ENHANCING EU ACTORNESS IN THE EASTERN BORDERLANDS • EURINT 2020 | 303 DIGITAL TRANSFORMATION OF TRANSPORT INFRASTRUCTURE: EXPERIENCE OF EUROPEAN AND MOSCOW METRO SYSTEMS Anton DENISENKOV*, Natalya DENISENKOVA**, Yuliya POLYAKOVA*** Abstract The article is devoted to the consideration of the digital transformation processes of the world's metro systems based on Industry 4.0 technologies. The aim of the study is to determine the social, economic and technological effects of the use of digital technologies on transport on the example of the metro, as well as to find solutions to digitalization problems in modern conditions of limited resources. The authors examined the impact of the key technologies of Industry 4.0 on the business processes of the world's subways. A comparative analysis of the world's metros digital transformations has been carried out, a tree of world's metros digitalization problems has been built, a reference model for the implementation of world's metros digital transformation is proposed. As the results of the study, the authors were able to determine the social, economic and technological effects of metro digitalization. Keywords: Industry 4.0 technologies, digital transformation, transport, metro Introduction The coming era of the digital economy requires a revision of business models, methods of production in all spheres of human life in order to maintain competitive advantages in the world arena. The transport industry is no exception, as it plays an important role in the development of the country's economy and social sphere. The Metro is a complex engineering and technical transport enterprise, dynamically developing taking into account the prospects for expanding the city's borders, with an ever-increasing passenger traffic and integration into other public transport systems. -

Why Digital Sovereignty Requires a European Hyperscaler!

Why Digital Sovereignty requires a European hyperscaler! https://www.unibw.de/code [email protected] Yandex Google Baidu mail.ru/VK Facebook WeChat Amazon Alibaba JD Amazon Google Microsoft Alibaba Tencent Baidu Company Rank Amazon #1 Google #2 Company Rank Facebook #4 Netflix #8 JD.com #3 PayPal #10 Alibaba #5 Salesforce.com #11 Tencent #6 Booking #13 Sunin.com #7 Uber #14 ByteDance #9 Expedia #16 Adobe #18 Baidu #12 eBay #19 12 7 Meituan- #15 Bloomberg #20 Dianping Individuals Industry Governments Military Individuals Industry Governments Military • Lack of options for self-determination • Potential targets in disinformation campaigns • Loss of sensitive private data • Data generation & collection for machine learning Individuals Industry Governments Military • Industrial espionage • Passive through analysis of search engine results and user behavior • Transfer of enormous amounts of European R&D investments • Industrial sabotage • Indirect influence of market capitalization and valuations Endangering European Economic competitiveness Individuals Industry Governments Military • Political instability through disinformation campaigns • Economic instability through induced volatility & loss of competitiveness • Reduction of taxes • Limitation of political scope Individuals Industry Governments Military • Severe disadvantage in future warfware • “Hyperwarfare“ • split-second decision-making w/o humans through automation/AI • automated control of massive amounts of UAVs • Large-scale attacks in cyber domain, overwhelming decision making, reconnaissance, -

Unit 5 Space Exploration

TOPIC 8 People in Space There are many reasons why all types of technology are developed. In Unit 5, you’ve seen that some technology is developed out of curiosity. Galileo built his telescope because he was curious about the stars and planets. You’ve also learned that some technologies are built to help countries fight an enemy in war. The German V-2 rocket is one example of this. You may have learned in social stud- ies class about the cold war between the United States and the for- mer Soviet Union. There was no fighting with guns or bombs. However, these countries deeply mistrusted each other and became very competitive. They tried to outdo and intimidate each other. This competition thrust these countries into a space race, which was a race to be the first to put satellites and humans into space. Figure 5.57 Space shuttle Atlantis Topic 8 looks at how the desire to go into space drove people to blasts off in 1997 on its way to dock produce technologies that could make space travel a reality. with the Soviet space station Mir. Breaking Free of Earth’s Gravity Although space is only a hundred or so kilometres “up there,” it takes a huge amount of energy to go up and stay up there. The problem is gravity. Imagine throwing a ball as high as you can. Now imagine how hard it would be to throw the ball twice as high or to throw a ball twice as heavy. Gravity always pulls the ball back to Earth.