The Robotic Preschool of the Future: New Technologies for Learning and Play

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Integrating Technology with Student-Centered Learning

Integrating Technology with Student-Centered Learning. A REPORT TO THE NELLIE MAE EDUCATION FOUNDATION. Prepared by Babette Moeller & Tim Reitzes | July 2011 | www.nmefdn.org. Acknowledgements: We thank the Nellie Mae Education Foundation (NMEF) for the grant that supported the preparation of this report. Special thanks to Eve Goldberg for her guidance and support, and to Beth Miller for comments on an earlier draft of this report. We thank Ilene Kantrov for her contributions to shaping and editing this report, and Loulou Bangura for her help with building and managing a wiki site, which contains many of the papers and other resources that we reviewed (the site can be accessed at: http://nmef.wikispaces.com). We are very grateful for the comments and suggestions from Daniel Light, Shelley Pasnik, and Bill Tally on earlier drafts of this report. And we thank our colleagues from EDC’s Learning and Teaching Division who shared their work, experiences, and insights at a meeting on technology and student-centered learning: Harouna Ba, Carissa Baquarian, Kristen Bjork, Amy Brodesky, June Foster, Vivian Gilfroy, Ilene Kantrov, Daniel Light, Brian Lord, Joyce Malyn-Smith, Sarita Pillai, Suzanne Reynolds-Alpert, Deirdra Searcy, Bob Spielvogel, Tony Streit, Bill Tally, and Barbara Treacy. Babette Moeller & Tim Reitzes (2011) Education Development Center, Inc. (EDC). Integrating Technology with Student-Centered Learning. Quincy, MA: Nellie Mae Education Foundation. ©2011 by The Nellie Mae Education Foundation. All rights reserved. The Nellie Mae Education Foundation, 1250 Hancock Street, Suite 205N, Quincy, MA 02169, www.nmefdn.org. Not surprising, 43 percent of students feel unprepared to use technology as they look ahead to higher education or their work life.

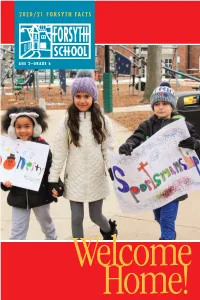

2020/21 Forsyth Facts Brochure

2020/21 FORSYTH FACTS AGE 2–GRADE 6 Welcome Home! WE PROVIDE CHILDREN WITH A SUPPORTIVE ATMOSPHERE AND OPPORTUNITIES FOR SUCCESS IN ORDER THAT THEY MAY DEVELOP SELF-CONFIDENCE AND A LOVE OF LEARNING 2020 WE EMPOWER STUDENTS TO EMBRACE CHALLENGE AS THEY FIND JOY IN LEARNING 2016 2019 ABOUT US Forsyth School is a leading independent, co-educational elementary school for children age 2 through Grade 6. Located across the street from Washington University and Forest Park in the Wydown-Forsyth Historic District, Forsyth provides an unforgettable experience on a one-of-a-kind campus with classrooms in six repurposed, historic homes. The challenging and engaging curriculum fosters independence and prepares students to thrive in secondary school and beyond. An Unforgettable Experience With neighbors including Washington University and Forest Park, many of the city’s best cultural institutions are walkable resources for Forsyth students. Science classes track biodiversity and study birds in Forest Park. Art classes visit the Mildred Lane Kemper Art Museum and the Saint Louis Art Museum. Physical Education holds the annual all-school mile run at Francis Field at Washington University, and sports teams run in Forest Park. Classes often walk to the Missouri History Museum and the Saint Louis Zoo. The core of Forsyth’s unique campus consists of six historic homes, acquired one by one over five decades since 1965. All six houses were built in the 1920s and are listed on the National Register of Historic Places; each has been repurposed and renovated to provide spacious classrooms, a library, and lunchroom spaces. (Infographic label: 40+ household ZIP codes from Missouri.)

Policy and Procedure No: HCIT-CS-SS-2.0 Topic: Student Services Category: Client Services Issue Date: February 2017 Version: 2.0

HEART College of Innovation & Technology (Formerly Caribbean Institute of Technology) Policy and Procedure No: HCIT-CS-SS-2.0 Topic: Student Services Category: Client Services Issue Date: February 2017 Version: 2.0 This policy replaces all versions of the HCIT/CIT Student Welfare Policy. Purpose To clearly identify and communicate the services that cater to the personal development and wellbeing of students by the HEART College of Innovation & Technology (HCIT) while they pursue their studies in a selected vocation towards certification. Policy The organization provides student welfare services to assist clients/students transition from orientation through to graduation by coordinating their College experience and impacting the students socially, mentally, physically and financially while completing their training. Scope To acquaint all new clients/students with the policies, rules, regulations and opportunities at the College that will enhance the possibility of them entering and functioning successfully within the workforce (technical & employability skills). Responsibilities Registrar: Has overarching responsibility for Student Welfare/Affairs. Registrar/Guidance Counsellor/Student Affairs Officer: Collaboratively plans orientation for new students/clients. Student Affairs Officer: Has oversight for the Student Union. Guidance Counsellor: Provides counselling services. Policy Guidelines HCIT Recruitment Process Interview Process At HCIT, prospective students who meet the programme's requirements are selected and contacted via telephone using a formulated schedule to which they are given the option of choosing an interview time and date that is convenient to them. An HCIT interview instrument is administered which allows the interviewer to analyse and evaluate the interviewee's thought processes and his/her suitability for the programme applied for.

Technology in Early Childhood Programs

Draft: Technology in Early Childhood Programs Serving Children from Birth through Age 8. A joint position statement of the National Association for the Education of Young Children and the Fred Rogers Center for Early Learning and Children’s Media at Saint Vincent College. Proposed 2011. It is the position of NAEYC and the Fred Rogers Center that technology and interactive media are learning tools that, when used in intentional and developmentally appropriate ways and in conjunction with other traditional tools and materials, can support the development and learning of young children. In this position statement, the word “technology” is used broadly, referring to interactive digital and electronic devices, software, multi-touch tablets, technology-based toys, apps, video games and interactive (nonlinear) screen-based media. Technology is continuously evolving. As a result, this statement focuses on the principles and practices that address the technologies of today, while acknowledging that in the future new and emerging technologies will require continual revisions and adaptation. The most effective use of technology in an early childhood setting involves the application of tools and materials to enhance children’s learning and development, interactions, communication, and collaboration. As technology increasingly finds its way into mainstream culture, the types and uses of technology in early childhood programs have also expanded dramatically to include computers, tablets, e-books, mobile devices, handheld gaming devices, digital cameras and video camcorders, electronic toys, multimedia players for music and videos, digital audio recorders, interactive whiteboards, software applications, the Internet, streaming media, and more.
These technologies are increasingly expanding the tools and materials to which young children have access both in their homes and in their classrooms, affecting the ways in which young children interact with the world and with others.

A Top-Notch, Cost-Free Education for Children Pre-K Through Grade 12 Expert Teaching

A top-notch, cost-free education for children Pre-K through Grade 12. Expert teaching. Technology-enabled learning. World-class facilities. A Milton Hershey School education is one of the nation’s finest. MHS students are well prepared for college and careers. They pursue life-changing opportunities in this safe and supportive community of mentors and friends. MHS is cost-free, from food and housing to sports equipment, healthcare, and more. Students even have the opportunity to accrue up to $95,000 in scholarship support. Classes: Teaching, resources and results. What we offer: • Technology-enhanced classrooms and instruction in a one-to-one environment • Small classes that foster personalized learning • Hands-on Learning, from STEAM (science, technology, engineering, arts, and math) and problem-based learning to field trips to co-op and internship opportunities • Career Training, beginning in middle school and resulting in two or more industry-recognized certifications by graduation • College Preparatory Education, including honors and AP courses, the opportunity to earn college credits, dual-enrollment and partnerships with higher education • Skills for Life, from social and emotional learning to character and leadership development to health and wellness. How it works: Elementary, Pre-K – Grade 4; Middle, Grades 5 – 8; Senior, Grades 9 – 12. Students make life-changing progress at MHS: achievement consistently outperforms PA state averages; academic growth consistently exceeds PA growth average. Our programming is top-notch, and our students are the proof.* (* Measured by Pennsylvania standardized assessments.)
Home life: Safe and supportive. What we offer: • Guidance and structure from attentive and caring adults • Character development and a focus on respect, integrity and accountability • Everything students need, from games and computers to sports equipment and quiet places to study. How it works: Students live in safe and supportive homes with 8 to 12 other students of the same gender and similar age.

THE HUMBER COLLEGE INSTITUTE of TECHNOLOGY and ADVANCED LEARNING 205 Humber College Boulevard, Toronto, Ontario M9W 5L7

AGREEMENT FOR OUTBOUND ARTICULATION BETWEEN: THE HUMBER COLLEGE INSTITUTE OF TECHNOLOGY AND ADVANCED LEARNING 205 Humber College Boulevard, Toronto, Ontario M9W 5L7 hereinafter referred to as "Humber" of the first part. -and- FERRIS STATE UNIVERSITY 1201 S. State Street, Big Rapids, Michigan, USA 49307 hereinafter referred to as "Ferris", of the second part; THIS AGREEMENT made this June 1, 2019 THIS AGREEMENT (the “Agreement”) dated June 1, 2019 (the “Effective Date”) BETWEEN: THE HUMBER COLLEGE INSTITUTE OF TECHNOLOGY AND ADVANCED LEARNING (hereinafter referred to as the “Humber”) -and- FERRIS STATE UNIVERSITY (hereinafter referred to as the “Ferris”) RECITALS: A. The Humber College Institute of Technology and Advanced Learning (“Humber”) is a Post-Secondary Institution as governed by the Ontario Colleges of Applied Arts and Technology Act, 2002 (Ontario). B. Ferris State University (“Ferris”), a constitutional body corporate of the State of Michigan, located at 1201 S. State, CSS-310, Big Rapids, Michigan, United States. C. Humber and Ferris desire to collaborate on the development of an Outbound Articulation agreement to facilitate educational opportunities in applied higher education. D. Humber and Ferris (together, the “Parties” and each a “Party”) wish to enter into Agreement to meet growing demands for student mobility and shall be arranged from time to time in accordance with this Agreement. NOW THEREFORE, in consideration of the premises and the mutual promises hereinafter contained, it is agreed by and between the Parties: 1.0 Intent of the Agreement a) To facilitate the transfer of students from Humber with appropriate prerequisite qualifications and grades for advanced standing into the HVACR Engineering Technology and Energy Management Bachelor of Science Program at Ferris (the “Program”).

Educators' Insights on Utilizing iPads to Meet Kindergarten Goals

Educators’ insights on utilizing iPads to meet kindergarten goals, by Lisa Tsumura. A Master’s research project submitted in conformity with the requirements for the Degree of Master of Education, Graduate Department of Education in the University of Ontario Institute of Technology. © Copyright by Lisa Tsumura, 2017. Abstract: This study investigates how educators plan and review the integration of iPads to meet Early Learning Kindergarten curriculum goals across the four newly-established domains of the Ontario Kindergarten Curriculum. The literature reviewed for this study indicates that earlier studies on iPads have primarily focused on measuring literacy and mathematics learning outcomes and little research has been done on how technology can be used in a play-based learning environment. This study explores other domains of Kindergarten iPad use which are less documented in the literature. The study captures teacher planning for iPad integration to help students meet outcomes in other domains (such as Problem-Solving and Innovating; and Self-Regulation and Well-being). This study follows an action research model and captures the conversations of two educators implementing iPads who set goals (plan) and document their reflective practice through recorded conversations at regular intervals. Results of this study suggest that iPads can be used to develop skills in all four domains of the curriculum and that iPads can be used as a tool by teachers to document learning in a play-based environment. Our findings indicate that when teachers engage in action research, they are able to reflect and improve upon their own practices. As teachers develop new pedagogical understandings about the relationship between iPads and play, they are then able to support students’ digital play.

Annual Report 2019 of Karlsruhe Institute of Technology

Annual Report 2019 of Karlsruhe Institute of Technology. KIT – The Research University in the Helmholtz Association. www.kit.edu. AT A GLANCE. Mission: We create and impart knowledge for the society and the environment. From fundamental research to applications, we excel in a broad range of disciplines, i.e. in natural sciences, engineering sciences, economics, and the humanities and social sciences. We make significant contributions to the global challenges of humankind in the fields of energy, mobility, and information. Being a big science institution, we take part in international competition and hold a leading position in Europe. We offer research-based study programs to prepare our students for responsible positions in society, industry, and science. Our innovation efforts build a bridge between important scientific findings and their application for the benefit of society, economic prosperity, and the preservation of our natural basis of life. Our working together and our management culture are characterized by respect, cooperation, confidence, and subsidiarity. An inspiring work environment as well as cultural diversity characterize and enrich the life and work at KIT. Employees 2019: Total: 9,398; Teaching and research: 5,183; Professors: 368; Foreign scientists and researchers: 1,178; Infrastructure and services: 4,215; Trainees: 371. Students, winter semester 2019/2020: 24,381. Budget 2019 in million euros: Total: 951.3; Federal funds: 310.2; State funds: 271.4; Third-party funds: 369.7. EDITORIAL: Karlsruhe Institute of Technology – The Research University in the Helmholtz Association – stands for excellent research and outstanding academic education.

Central Oregon Community College to Oregon Institute of Technology Bachelor of Applied Science in Technology and Management

Central Oregon Community College to Oregon Institute of Technology Bachelor of Applied Science in Technology and Management Articulation Agreement, 2020 - 2021 Catalog. It is agreed that students transferring with an Associate of Applied Science (AAS) or Associate of Science (AS) from Central Oregon Community College (COCC) to Oregon Institute of Technology’s (Oregon Tech) Bachelor of Applied Science in Technology and Management (BTM) will be given full credit for up to 60 credits of Career Technical Electives and additional listed courses as completed. This agreement is based on the evaluation of the rigor and content of the general education and technical courses at both COCC and Oregon Tech, and is subject to a reevaluation every year by both schools for continuance. This agreement is dated August 25, 2020. Baccalaureate students must complete a minimum of 60 credits of upper-division work before a degree will be awarded. Upper-division is defined as 300- and 400-level classes at a bachelor’s degree granting institution. Baccalaureate students at Oregon Tech must complete 45 credits from Oregon Tech before a degree will be awarded. Admission to Oregon Tech is not guaranteed. Students must apply for admission to Oregon Tech in accordance with the then-existing rules, policies and procedures of Oregon Tech. Students are responsible for notifying the Oregon Tech Admissions and Registrar’s Office when operating under an articulation agreement to ensure their credits transfer as outlined in this agreement. In order to utilize this agreement students must be attending COCC during the above catalog year. Students must enroll at Oregon Tech within three years of this approval.

I-BEST/Guided Pathways Lake Washington Institute of Technology

Promising Practices: I-BEST/Guided Pathways, Lake Washington Institute of Technology. Since its inception, I-BEST has been among the strongest programs on campus in terms of student outcomes. I-BEST made the move from ESL to college less intimidating and produced tangible positive student outcomes. The program began with the offering of short workforce-oriented certificates, and students completed these programs at an impressive rate. At this time, Lake Washington Institute of Technology offers fourteen I-BEST certificate and degree programs housed in nine of its ten schools, with the first offering in the School of Design slated for development in 2021-22. Additionally, the institution offers a developmental education I-BEST math course contextualized for students in the transportation programs as well as the Academic I-BEST, which leads students to complete transferable courses that meet English, Humanities, Social Science, and Science requirements. Between 2015-20, I-BEST students took about one quarter longer to complete their award than did other college completers. However, they both earned more college credits per quarter and graduated at 2 to 3 times the rate of any student group, regardless of initial placement. The comparison of I-BEST student completion rates compared to those of any other student group has remained constant over each year we have evaluated the program, and they provide the clearest evidence we have of the program's effectiveness. Name of Community College: Lake Washington Institute of Technology (https://www.lwtech.edu) Title of Program: I-BEST/Guided Pathways (https://www.lwtech.edu/academics/i-best) Type of Program: Workforce Training/Career Pathways.

Science at Liberal Arts Colleges: A Better Education?

Thomas R. Cech. Science at Liberal Arts Colleges: A Better Education? It was the summer of 1970. Carol and I had spent four years at Grinnell College, located in the somnolent farming community of Grinnell, Iowa. Now, newly married, we drove westward, where we would enter the graduate program in chemistry at the University of California, Berkeley. How would our liberal arts education serve us in the Ph.D. program of one of the world’s great research universities? As we met our new classmates, one of our preconceptions quickly dissipated: Berkeley graduate students were not only university graduates. They also hailed from a diverse collection of colleges, many of them less known than Grinnell. And as we took our qualifying examinations and struggled with quantum mechanics problem sets, any residual apprehension about the quality of our undergraduate training evaporated. Through some combination of what our professors had taught us and our own hard work, we were well prepared for science at the research university level. I have used this personal anecdote to draw the reader’s interest, but not only to that end; it is also a “truth in advertising” disclaimer. I am a confessed enthusiast and supporter of the small, selective liberal arts colleges. My pulse quickens when I see students from Carleton, Haverford, and Williams who have applied to our Ph.D. program. I serve on the board of trustees of Grinnell College. On the other hand, I teach undergraduates both in the classroom and in my research laboratory at the University of Colorado, so I also have personal experience with science education at a research university.

DigiPen Institute of Technology 2021 DigiPen Technology Academy Scholarship Overview

DigiPen Institute of Technology 2021 DigiPen Technology Academy Scholarship Overview DigiPen Institute of Technology is pleased to offer three half-tuition merit scholarships to students who have participated in one of the DigiPen Technology Academy Programs, with one scholarship for each of the following tracks: • Video Game Programming Track - This scholarship can be applied only toward DigiPen’s Bachelors of Computer Science in Real-time Interactive Simulation (RTIS), Science in Game Design (BSGD), Computer Science (BSCS), Computer Engineering (BSCE), and Arts in Game Design (BAGD). • Art and Animation Track – The scholarship can be applied only toward DigiPen’s Bachelor of Fine Arts in Digital Art and Animation. • Music and Sound Design Track - This scholarship can be applied only toward DigiPen’s Bachelor of Arts in Music and Sound Design. The 2021 DigiPen Technology Academy Scholarship recipient for each track will receive a tuition waiver equal to 50% of their first-year tuition which will be valid between Fall 2021 and Spring 2025 if the recipient maintains Satisfactory Academic Progress (SAP), as defined by the DigiPen Financial Aid Office and full-time enrollment. Failure to meet these requirements will result in the loss of the scholarship. Requirements Students wishing to apply for this scholarship must meet the following conditions: • Student must have received notice of admission to DigiPen Institute of Technology for an approved degree program (see above) before their application will be evaluated. • Student must be currently enrolled or have successfully completed a minimum of one year in a DigiPen Technology Academy program before the summer of 2021. • Student must be graduating from high school in the 2020-2021 school year.