I/O Virtualization Using Mellanox InfiniBand and Channel I/O Virtualization (CIOV) Technology

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

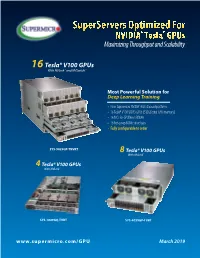

Supermicro GPU Solutions Optimized for NVIDIA NVLink

SuperServers Optimized for NVIDIA® Tesla® GPUs: Maximizing Throughput and Scalability. www.supermicro.com/GPU, March 2019. 16 Tesla® V100 GPUs with NVLink™ and NVSwitch™ (SYS-9029GP-TNVRT), the Most Powerful Solution for Deep Learning Training: • New Supermicro NVIDIA® HGX-2 based platform • 16 Tesla® V100 SXM3 GPUs (512GB total GPU memory) • 16 NICs for GPUDirect RDMA • 16 hot-swap NVMe drive bays • Fully configurable to order. 8 Tesla® V100 GPUs with NVLink™ and 4 Tesla® V100 GPUs with NVLink™: SYS-1029GQ-TVRT and SYS-4029GP-TVRT. Maximum Acceleration for AI/DL Training Workloads. PERFORMANCE: Highest parallel peak performance with NVIDIA Tesla V100 GPUs. THROUGHPUT: Best in class GPU-to-GPU bandwidth with a maximum speed of 300GB/s. SCALABILITY: Designed for direct interconnections between multiple GPU nodes. FLEXIBILITY: PCI-E 3.0 x16 for low latency I/O expansion capacity & GPU Direct RDMA support. DESIGN: Optimized GPU cooling for highest sustained parallel computing performance. EFFICIENCY: Redundant Titanium Level power supplies & intelligent cooling control. Models SYS-1029GQ-TVRT and SYS-4029GP-TVRT. CPU Support (both models): • Dual Intel® Xeon® Scalable processors with 3 UPI up to 10.4GT/s • Supports up to 205W TDP CPU. GPU Support: • 8 NVIDIA® Tesla® V100 GPUs / 4 NVIDIA Tesla V100 GPUs • NVIDIA® NVLink™ GPU Interconnect up to 300GB/s (both models) • Optimized for GPUDirect RDMA (both models) • Independent CPU and GPU thermal zones

Third Consolidated and Amended Class Action Complaint, In re Graphics Processing Units Antitrust Litigation

Case M:07-cv-01826-WHA Document 249 Filed 11/08/2007 Page 1 of 34. BOIES, SCHILLER & FLEXNER LLP, WILLIAM A. ISAACSON (pro hac vice), 5301 Wisconsin Ave. NW, Suite 800, Washington, D.C. 20015, Telephone: (202) 237-2727, Facsimile: (202) 237-6131, Email: [email protected]. BOIES, SCHILLER & FLEXNER LLP, JOHN F. COVE, JR. (CA Bar No. 212213), DAVID W. SHAPIRO (CA Bar No. 219265), KEVIN J. BARRY (CA Bar No. 229748), 1999 Harrison St., Suite 900, Oakland, CA 94612, Telephone: (510) 874-1000, Facsimile: (510) 874-1460, Email: [email protected] [email protected] [email protected]. BOIES, SCHILLER & FLEXNER LLP, PHILIP J. IOVIENO (pro hac vice), ANNE M. NARDACCI (pro hac vice), 10 North Pearl Street, 4th Floor, Albany, NY 12207, Telephone: (518) 434-0600, Facsimile: (518) 434-0665, Email: [email protected] [email protected]. Attorneys for Plaintiff Jordan Walker; Interim Class Counsel for Direct Purchaser Plaintiffs. UNITED STATES DISTRICT COURT, NORTHERN DISTRICT OF CALIFORNIA. IN RE GRAPHICS PROCESSING UNITS ANTITRUST LITIGATION. Case No.: M:07-CV-01826-WHA. MDL No. 1826. This Document Relates to: ALL DIRECT PURCHASER ACTIONS. THIRD CONSOLIDATED AND AMENDED CLASS ACTION COMPLAINT FOR VIOLATION OF SECTION 1 OF THE SHERMAN ACT, 15 U.S.C. § 1. JURY TRIAL DEMANDED. Plaintiffs Jordan Walker, Michael Bensignor, d/b/a Mike’s Computer Services, Fred Williams, and Karol Juskiewicz, on behalf of themselves and all others similarly situated in the United States, bring this action for damages and injunctive relief under the federal antitrust laws against Defendants named herein, demanding trial by jury, and complaining and alleging as follows: NATURE OF THE CASE 1.

An Emerging Architecture in Smart Phones

International Journal of Electronic Engineering and Computer Science, Vol. 3, No. 2, 2018, pp. 29-38, http://www.aiscience.org/journal/ijeecs. ARM Processor Architecture: An Emerging Architecture in Smart Phones. Naseer Ahmad, Muhammad Waqas Boota*, Department of Computer Science, Virtual University of Pakistan, Lahore, Pakistan. Abstract: ARM is a 32-bit RISC processor architecture. It is developed and licensed by the British company ARM Holdings. ARM Holdings does not manufacture or sell CPU devices; it only licenses the processor architecture to interested parties. There are two main types of licenses: implementation licenses and architecture licenses. ARM processors have a unique combination of features; for example, the ARM core is very simple compared to general-purpose processors. An ARM chip has several peripheral controllers, a digital signal processor and an ARM core. ARM processors consume less power but provide high performance. Nowadays, the ARM Cortex series is very popular in smartphone devices. We will also see the important characteristics of the Cortex series. We discuss the ARM processor and systems on a chip (SoC), including the Qualcomm Snapdragon, NVIDIA Tegra, and Apple systems on chips. In this paper, we discuss the features of the ARM processor and the Intel Atom processor and see which processor is best. Finally, we will discuss the future of ARM processors in smartphone devices. Keywords: RISC, ISA, ARM Core, System on a Chip (SoC). Received: May 6, 2018 / Accepted: June 15, 2018 / Published online: July 26, 2018. © 2018 The Authors. Published by American Institute of Science. This Open Access article is under the CC BY license.

It's Meant to Be Played

Issue 10, $3.99 (where sold). THE WAY It’s meant to be played. Ultimate PC Gaming with GeForce. All the best holiday games with the power of NVIDIA. Far Cry’s creators outclass its already jaw-dropping technology. Battlefield 2142 with an epic new sci-fi battle. World of Warcraft: The Burning Crusade. Company of Heroes. Warhammer: Mark of Chaos. THE NEWS: Notebooks are set to transform PC gaming. The latest must-have gaming system is… a notebook PC. Until recently considered mainly a means for working on the move or for portable presentations, laptops complete with dedicated graphic processing units (GPUs) such as the NVIDIA® GeForce® Go 7 series are making a real impact in the gaming world. The advantages are obvious: gamers need no longer be tied to their desktop set-up. The new NVIDIA® GeForce® Go 7900 notebook GPUs are designed for extreme HD gaming, and gaming hardware specialists such as Alienware and Asus have seen the potential of the portable platform. Caption: Latest thing: Laptops complete with dedicated graphic processing units are making an impact in the gaming world. Welcome... Welcome to the 10th issue of The Way It’s Meant To Be Played, the magazine dedicated to the very best in PC gaming. In this issue, we showcase 30 games, all participants in NVIDIA’s The Way It’s Meant To Be Played program. In this program, NVIDIA’s developer technology engineers work with development teams to get the very best graphics and effects into their new titles. The games are then rigorously tested by three different labs at NVIDIA for compatibility, stability, and performance to ensure that any game

Data Sheet FUJITSU Tablet STYLISTIC M702

Data Sheet FUJITSU Tablet STYLISTIC M702. Designed for the toughest businesses. The Fujitsu STYLISTIC M702 is the ideal choice if you are looking for a robust yet lightweight high-performance tablet PC to support you in the field and to increase business continuity. Being water- and dustproof, it protects components most frequently damaged, making it perfect for semi-ruggedized applications. The 25.7 cm (10.1-inch) STYLISTIC M702 provides protection against environmental conditions, ensuring uncompromising productivity whether exposed to rain, humidity or dust. Moreover, it can easily be integrated into your company’s Virtual Desktop Infrastructure (VDI), thus ensuring protected anytime access to your company data while its business software lets you securely manage your contacts and emails. Embedded 4G/LTE delivers latest high-speed connectivity for end-to-end performance in the most challenging situations and the Android operating system’s rich multimedia features also make it perfect for private use. Semi-Ruggedized: Ensure absolute business continuity and protect your tablet from environmental conditions. Work from anywhere while exposing the tablet to rain, humidity or dust without causing any component damage. Waterproof (tested for IPX5/7/8 specifications), dustproof (tested for IP5X specification), durable with toughened glass. Business Match: Ultimate productivity from anywhere. Securely access your business applications, data and company intranet with the business software selection and protect

Technical Brief

Technical Brief: SLI-Ready Memory with Enhanced Performance Profiles. One-Click Hassle-Free Memory Performance Boost. May 2006, TB-0529-001_v01. One-Click Hassle-Free Memory Performance Boost with SLI-Ready Memory: Gamers and PC enthusiasts are endlessly searching for opportunities to improve the performance of their PCs. Optimizing system performance is a function of the major components used: graphics processing unit (GPU) add-in cards, CPU, chipset, and memory. It is also a function of tuning and overclocking the various PC components. Overclocking, however, has disadvantages such as system instability and inconsistent performance measurements from one system to another. SLI-Ready memory with Enhanced Performance Profiles (EPP) is a new approach that simplifies overclocking and ensures memory and platform compatibility. The NVIDIA nForce® 590 SLI® core logic is the first NVIDIA® platform that supports the new EPP functionality. Memory DIMMs which receive the SLI-Ready certification are required to support EPP technology to ensure the memories can be automatically detected and their full potential realized with the NVIDIA nForce® 590 SLI® chipset. The SLI-Ready certification process ensures the memory modules have passed a comprehensive set of tests and meet the minimum requirements for delivering our customers the outstanding experience they expect from SLI systems. SLI-Ready Memory with EPP at a Glance: System memory modules (DIMMs) are built using an electrically-erasable programmable read-only memory (EEPROM) that can hold up to 256 bytes of data. The EEPROM is used to store Serial Presence Detect (SPD) information defined by JEDEC, which includes manufacturer part number, manufacturer name, some timing parameters, serial number, etc.

(12) United States Patent (10) Patent No.: US 7,412,554 B2 Danilak (45) Date of Patent: Aug

US007412554B2. (12) United States Patent, Danilak. (10) Patent No.: US 7,412,554 B2. (45) Date of Patent: Aug. 12, 2008. (54) BUS INTERFACE CONTROLLER FOR COST-EFFECTIVE HIGH PERFORMANCE GRAPHICS SYSTEM WITH TWO OR MORE GRAPHICS PROCESSING UNITS. (75) Inventor: Radoslav Danilak, Cupertino, CA (US). (73) Assignee: NVIDIA Corporation, Santa Clara, CA (US). (*) Notice: Subject to any disclaimer, the term of this patent is extended or adjusted under 35 U.S.C. 154(b) by 0 days. (21) Appl. No.: 11/454,992. (22) Filed: Jun. 15, 2006. (65) Prior Publication Data: US 2007/0294458 A1, Dec. 20, 2007. (51) Int. Cl. G06F 3/4 (2006.01). (52) U.S. Cl.: 710/305; 710/306. (58) Field of Classification Search ........ References cited: 2005/0140682 A1, 6/2005, Sumanaweera et al.; 2006/0005000 A1*, 1/2006, King et al., 713/1; 2006/0059451 A1, 3/2006, Koehler et al.; 2006/0098020 A1*, 5/2006, Shen et al., 345/520; 2006/0294279 A1*, 12/2006, McKee et al., 710/301; 2007/0139423 A1, 6/2007, Kong et al.; 2007/0214323 A1, 9/2007, Moll et al. OTHER PUBLICATIONS: Danilak, Radoslav, entitled “Graphics Processing for Cost Effective High Performance Graphics System With Two or More Graphics Processing Units,” U.S. Appl. No. 11/454,991, filed Jun. 15, 2006. Danilak, Radoslav, entitled “Motherboard For Cost-Effective High Performance Graphics System With Two or More Graphics Processing Units,” U.S. Appl. No. 11/455,072, filed Jun. 15, 2006. * cited by examiner. Primary Examiner: Mark Rinehart. Assistant Examiner: Kim T Huynh. (74) Attorney, Agent, or Firm: Cooley Godward Kronish LLP.

Dell EMC PowerEdge C4140 Technical Guide

Dell EMC PowerEdge C4140 Technical Guide. Regulatory Model: E53S Series. Regulatory Type: E53S001. Notes, cautions, and warnings. NOTE: A NOTE indicates important information that helps you make better use of your product. CAUTION: A CAUTION indicates either potential damage to hardware or loss of data and tells you how to avoid the problem. WARNING: A WARNING indicates a potential for property damage, personal injury, or death. © 2017 - 2019 Dell Inc. or its subsidiaries. All rights reserved. Dell, EMC, and other trademarks are trademarks of Dell Inc. or its subsidiaries. Other trademarks may be trademarks of their respective owners. 2019 - 09, Rev. A00. Contents: 1 System overview (Introduction; New technologies). 2 System features (Specifications; Product comparison).

Arm-Based Computing Platform Solutions Accelerating Your Arm Project Development

Arm-based Computing Platform Solutions: Accelerating Your Arm Project Development. Standard Hardware Solutions; AIM-Linux & AIM-Android Services; Integrated Peripherals; Trusty Ecosystem. Diagram labels: QT, Automation, SUSI API, Transportation, Medical, MP4, BSP, NFS, Video, Driver, LOADER, Acceleration, Kernel, Security, Boot Loader, Networking. www.advantech.com. Key Factors for Arm Business Success: Advantech’s Arm computing solutions provide an open and unified development platform that minimizes effort and increases resource efficiency when deploying Arm-based embedded applications. Advantech Arm computing platforms fulfill the requirements of power-optimized mobile devices and performance-optimized applications with a broad offering of Computer-on-Modules, single board, and box computer solutions based on the latest Arm technologies. This year, Advantech’s Arm computing will roll out three new innovations to lead embedded Arm technologies into a new arena: 1. The i.MX 8 series aims for next generation computing performance and targets new application markets like AI. 2. Developing a new standard: UIO20/40-Express, an expansion interface for extending various I/Os easily and quickly for different embedded applications. 3. We are announcing the Advantech AIM-Linux and AIM-Android, which provide unified BSP, modularized App Add-Ons, and SDKs for customers to accelerate their application development. Standardized Hardware Solutions: • Computer on Module • Single Board Computer • Computer Box. AIM-Linux & AIM-Android: • Unified Embedded Platforms • App Add-Ons

Mellanox Technologies Delivers 10 Gb/sec InfiniBand Server Blade Reference Design

Mellanox Technologies Delivers 10 Gb/sec InfiniBand Server Blade Reference Design. Second Generation InfiniBand Server Blades Deliver the Ultimate Compute Engine for Database and Enterprise Computing Applications. Santa Clara, California and Yokneam, Israel, -- July 22, 2002 -- Mellanox Technologies, Ltd., the leading provider of InfiniBand℠ silicon, announced the availability of its Nitro II 4X (10 Gb/sec) InfiniBand server blade reference platform. The Nitro II platform utilizes Mellanox’s second-generation 10 Gb/sec InfiniHost™ host channel adaptor (HCA) and InfiniScale™ switching silicon. The Nitro II platform consists of 2.2 GHz Intel® Pentium 4 processor based diskless server blades, dual 16-port 4X switches, and a 10 Gb/sec backplane supporting 480 Gb/sec of switching capacity in a compact 14 blade chassis. The combination of high performance processors, superb memory capacity and second-generation InfiniBand silicon offers OEMs and developers an ideal 10 Gb/sec development platform for optimizing the performance of clustered databases and other data center applications. "InfiniBand diskless server blades create a whole new class of data center solutions that provide two key server improvements. First, Nitro II blades provides more than 3 times the existing CPU performance available through the use of a 2.2 GHz Intel processor versus the 700 or 800 MHz speeds offered in current blade technologies," said Yuval Leader, vice president of system solutions, Mellanox Technologies. "Secondly, the InfiniBand architecture enables CPU, I/O, and storage sharing across all data center systems; rather than the duplication and isolation of these essential resources in each and every server or server blade. (Mellanox Technologies Inc., 2900 Stender Way, Santa Clara, CA 95054; Tel: 408-970-3400; Fax: 408-970-3403; www.mellanox.com)

ACACES A Decoupled Access/Execute Architecture for Mobile GPUs

ACACES. A Decoupled Access/Execute Architecture for Mobile GPUs. Jose-Maria Arnau∗,1, Joan-Manuel Parcerisa∗,1, Polychronis Xekalakis†,2. ∗ Computer Architecture Department, Universitat Politecnica de Catalunya. † Intel Barcelona Research Center, Intel Labs Barcelona. ABSTRACT: Smartphones are emerging as one of the fastest growing markets, providing enhanced capabilities every few months. However, supporting these hardware/software improvements comes at the cost of reducing the operating time per battery charge. The GPU is only left with a shrinking fraction of the power budget, but the trend towards better screens will inevitably lead to a higher demand for improved graphics rendering. In this paper, we focus on improving the energy efficiency of the GPU. We show that the main bottleneck for graphical applications is the pixel/texture caches and that traditional techniques for hiding memory latency (prefetching, multithreading) do not work well or come at a high energy cost. We thus propose the migration of GPU designs towards the decoupled access/execute concept. Furthermore, we reduce bandwidth usage by exploiting inter-core data sharing. The end design achieves 93% of the performance of a heavily multithreaded GPU while providing 34% energy savings. 1 Introduction: The fast growth of the smartphone market is fueled by the end-user demand for a continuously improved mobile computing experience. Current smartphones excel in providing a real web browsing experience, allow for video and picture editing and support a plethora of 3D games. However, supporting all these capabilities comes at a high energy cost, which in turn results in fairly short operating times per battery charge.

Precision 5820 Tower Spec Sheet

PRECISION 5820 TOWER. Big power in an innovative compact design. POWERFUL PERFORMANCE: The Dell Precision 5820 Tower delivers high performance for your most demanding applications with the latest Intel® Xeon® and Core® X Series processors and up to 512GB of fast 2666MHz memory. In addition, every Dell Precision comes with the exclusive Dell Precision Optimizer, which automatically tunes your workstation to run some of the most popular (ISV) applications at the fastest speeds possible. DPO Premium is a new AI-based version which optimizes your workstation, based upon how you use it, providing true customization. LATEST TECHNOLOGY: Ready for complex projects, including artificial intelligence and virtual reality workflows, with next generation Radeon™ Pro and NVIDIA Quadro® professional graphics, with support for up to 600W of graphics power. Front access FlexBays provide outstanding storage expandability, up to 60TB with SATA, SAS and PCIe M.2/U.2 NVMe solid state drives, featuring hot-swap access, so you never have to stop creating. OUTSTANDING RELIABILITY: The revolutionary multichannel thermal design delivers advanced cooling and acoustics so you can enjoy longer productivity under heavy workloads. Precision workstations are tested and are Independent Software Vendor certified to ensure the high-performance applications you rely on every day run smoothly. INNOVATIVE DESIGN: The front FlexBay design supports a range of modules from scalable storage to security options, you can build the workstation designed for your creative expertise. Secure your data with the optional Smart Card (CAC/PIV) reader, hot swap NVMe SSDs and lockable hard drive carriers. The best-in-class smart design includes integrated front and rear handles for easy deployment