Mellanox InfiniBand HCA to Feature PCI Express Technology


Recommended publications


Mellanox Technologies Announces the Appointment of Dave Sheffler As Vice President of Worldwide Sales

MELLANOX TECHNOLOGIES ANNOUNCES THE APPOINTMENT OF DAVE SHEFFLER AS VICE PRESIDENT OF WORLDWIDE SALES
Former VP of AMD joins InfiniBand Internet Infrastructure Startup

SANTA CLARA, CA – January 17, 2000 – Mellanox Technologies, a fabless semiconductor startup company developing InfiniBand semiconductors for the Internet infrastructure, today announced the appointment of Dave Sheffler as its Vice President of Worldwide Sales, reporting to Eyal Waldman, Chief Executive Officer and Chairman of the Board.

“Dave Sheffler possesses the leadership, business capabilities, and experience which complement the formidable engineering, marketing, and operations talent that Mellanox has assembled. He combines the highest standards of ethics and achievements, with an outstanding record of growing sales in highly competitive and technical markets. The addition of Dave to the team brings an additional experienced business perspective and will enable Mellanox to develop its sales organization and provide its customers the highest level of technical and logistical support,” said Eyal Waldman.

Prior to joining Mellanox, Dave served as Vice President of Sales and Marketing for the Americas for Advanced Micro Devices (NYSE: AMD). Previously, Mr. Sheffler was the VP of Worldwide Sales for Nexgen Inc., where he was part of the senior management team that guided the company’s growth, leading to a successful IPO and eventual sale to AMD in January 1996. Dave’s track record demonstrates that he will be able to build a sales organization of the highest caliber.

Mellanox Technologies Inc., 2900 Stender Way, Santa Clara, CA 95054. Tel: 408-970-3400, Fax: 408-970-3403, www.mellanox.com

IBM Cloud Web

IBM’s iDataPlex with Mellanox® ConnectX® InfiniBand Adapters
Dual-Port ConnectX 20 and 40Gb/s InfiniBand PCIe Adapters

Data center cost structures are shifting from equipment-focused to space-, power- and personnel-focused. Total cost of ownership and green initiatives are major spending drivers as data centers are being refreshed. Topping off those challenges is the rapid evolution of information technology into a competitive advantage through business process management and the deployment of service-oriented architectures. I/O technology plays a key role in meeting many of these spending drivers: provisioning capacity for future growth, efficiently scaling compute, LAN and SAN capacity, and reducing space and power in the data center to help lower TCO, all while enhancing data center agility.

IBM iDataPlex and ConnectX InfiniBand Adapter Cards
IBM’s iDataPlex with Mellanox ConnectX InfiniBand adapters offers a solution that is eco-friendly, flexible, responsive, and positioned to address the evolving requirements of Internet-Scale and High-Performance…

High-Performance for the Flexible Data Center
Scientists, engineers, and analysts eager to solve challenging problems in engineering, financial markets, and the life and earth sciences rely on high-performance systems. In addition, with the use of multi-core CPUs, virtualized infrastructures and networked storage, users are demanding increased efficiency of the entire IT compute and storage infrastructure.

Unprecedented Performance, Scalability, and Efficiency for Cloud Computing

MLNX OFED Documentation Rev 5.0-2.1.8.0

MLNX_OFED Documentation Rev 5.0-2.1.8.0
Exported on May/21/2020 06:13 AM
https://docs.mellanox.com/x/JLV-AQ

Notice
This document is provided for information purposes only and shall not be regarded as a warranty of a certain functionality, condition, or quality of a product. NVIDIA Corporation (“NVIDIA”) makes no representations or warranties, expressed or implied, as to the accuracy or completeness of the information contained in this document and assumes no responsibility for any errors contained herein. NVIDIA shall have no liability for the consequences or use of such information or for any infringement of patents or other rights of third parties that may result from its use. This document is not a commitment to develop, release, or deliver any Material (defined below), code, or functionality. NVIDIA reserves the right to make corrections, modifications, enhancements, improvements, and any other changes to this document, at any time without notice. Customer should obtain the latest relevant information before placing orders and should verify that such information is current and complete. NVIDIA products are sold subject to the NVIDIA standard terms and conditions of sale supplied at the time of order acknowledgement, unless otherwise agreed in an individual sales agreement signed by authorized representatives of NVIDIA and customer (“Terms of Sale”). NVIDIA hereby expressly objects to applying any customer general terms and conditions with regard to the purchase of the NVIDIA product referenced in this document. No contractual obligations are formed either directly or indirectly by this document. NVIDIA products are not designed, authorized, or warranted to be suitable for use in medical, military, aircraft, space, or life support equipment, nor in applications where failure or malfunction of the NVIDIA product can reasonably be expected to result in personal injury, death, or property or environmental damage.

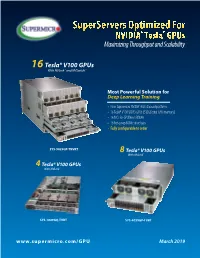

Supermicro GPU Solutions Optimized for NVIDIA NVLink

SuperServers Optimized for NVIDIA® Tesla® GPUs: Maximizing Throughput and Scalability
March 2019, www.supermicro.com/GPU

16 Tesla® V100 GPUs with NVLink™ and NVSwitch™ (SYS-9029GP-TNVRT)
Most Powerful Solution for Deep Learning Training
• New Supermicro NVIDIA® HGX-2 based platform
• 16 Tesla® V100 SXM3 GPUs (512GB total GPU memory)
• 16 NICs for GPUDirect RDMA
• 16 hot-swap NVMe drive bays
• Fully configurable to order

8 Tesla® V100 GPUs with NVLink™ (SYS-4029GP-TVRT) and 4 Tesla® V100 GPUs with NVLink™ (SYS-1029GQ-TVRT)
Maximum Acceleration for AI/DL Training Workloads
• PERFORMANCE: Highest parallel peak performance with NVIDIA Tesla V100 GPUs
• THROUGHPUT: Best-in-class GPU-to-GPU bandwidth with a maximum speed of 300GB/s
• SCALABILITY: Designed for direct interconnections between multiple GPU nodes
• FLEXIBILITY: PCI-E 3.0 x16 for low-latency I/O expansion capacity & GPUDirect RDMA support
• DESIGN: Optimized GPU cooling for highest sustained parallel computing performance
• EFFICIENCY: Redundant Titanium Level power supplies & intelligent cooling control

SYS-1029GQ-TVRT
• CPU support: Dual Intel® Xeon® Scalable processors with 3 UPI up to 10.4GT/s; supports up to 205W TDP CPU
• GPU support: 4 NVIDIA Tesla V100 GPUs; NVIDIA® NVLink™ GPU interconnect up to 300GB/s; optimized for GPUDirect RDMA

SYS-4029GP-TVRT
• CPU support: Dual Intel® Xeon® Scalable processors with 3 UPI up to 10.4GT/s; supports up to 205W TDP CPU
• GPU support: 8 NVIDIA® Tesla® V100 GPUs; NVIDIA® NVLink™ GPU interconnect up to 300GB/s; optimized for GPUDirect RDMA; independent CPU and GPU thermal zones
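As a quick check on the HGX-2 figures, the per-GPU numbers follow from simple division. This sketch assumes the 512GB of GPU memory and the 16 NICs are spread evenly across the 16 GPUs (a homogeneous configuration); the helper name `per_gpu` is illustrative and not part of any Supermicro tooling.

```python
# Per-GPU figures implied by the SYS-9029GP-TNVRT (HGX-2) data sheet.
# Assumption: resources are divided evenly across identical GPUs.
TOTAL_GPU_MEMORY_GB = 512  # "512GB total GPU memory"
NUM_GPUS = 16              # "16 Tesla V100 SXM3 GPUs"
NUM_NICS = 16              # "16 NICs for GPUDirect RDMA"


def per_gpu(total: float, count: int) -> float:
    """Evenly divide a system-wide resource across `count` GPUs."""
    if count <= 0:
        raise ValueError("count must be positive")
    return total / count


mem_per_gpu = per_gpu(TOTAL_GPU_MEMORY_GB, NUM_GPUS)
nics_per_gpu = per_gpu(NUM_NICS, NUM_GPUS)

if __name__ == "__main__":
    print(f"GPU memory per GPU: {mem_per_gpu:.0f} GB")
    print(f"NICs per GPU: {nics_per_gpu:.0f}")
```

Under that assumption each GPU gets 32GB of memory and one dedicated NIC, which is the pairing GPUDirect RDMA is meant to exploit.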

In re Graphics Processing Units Antitrust Litigation (Case No. M:07-CV-01826-WHA)

Case M:07-cv-01826-WHA, Document 249, Filed 11/08/2007, Page 1 of 34

BOIES, SCHILLER & FLEXNER LLP
WILLIAM A. ISAACSON (pro hac vice)
5301 Wisconsin Ave. NW, Suite 800, Washington, D.C. 20015
Telephone: (202) 237-2727; Facsimile: (202) 237-6131

BOIES, SCHILLER & FLEXNER LLP
JOHN F. COVE, JR. (CA Bar No. 212213)
DAVID W. SHAPIRO (CA Bar No. 219265)
KEVIN J. BARRY (CA Bar No. 229748)
1999 Harrison St., Suite 900, Oakland, CA 94612
Telephone: (510) 874-1000; Facsimile: (510) 874-1460

BOIES, SCHILLER & FLEXNER LLP
PHILIP J. IOVIENO (pro hac vice)
ANNE M. NARDACCI (pro hac vice)
10 North Pearl Street, 4th Floor, Albany, NY 12207
Telephone: (518) 434-0600; Facsimile: (518) 434-0665

Attorneys for Plaintiff Jordan Walker; Interim Class Counsel for Direct Purchaser Plaintiffs

UNITED STATES DISTRICT COURT
NORTHERN DISTRICT OF CALIFORNIA

IN RE GRAPHICS PROCESSING UNITS ANTITRUST LITIGATION
This Document Relates to: ALL DIRECT PURCHASER ACTIONS
Case No.: M:07-CV-01826-WHA; MDL No. 1826
THIRD CONSOLIDATED AND AMENDED CLASS ACTION COMPLAINT FOR VIOLATION OF SECTION 1 OF THE SHERMAN ACT, 15 U.S.C. § 1
JURY TRIAL DEMANDED

Plaintiffs Jordan Walker, Michael Bensignor, d/b/a Mike’s Computer Services, Fred Williams, and Karol Juskiewicz, on behalf of themselves and all others similarly situated in the United States, bring this action for damages and injunctive relief under the federal antitrust laws against Defendants named herein, demanding trial by jury, and complaining and alleging as follows:

NATURE OF THE CASE

An Emerging Architecture in Smart Phones

International Journal of Electronic Engineering and Computer Science, Vol. 3, No. 2, 2018, pp. 29-38
http://www.aiscience.org/journal/ijeecs

ARM Processor Architecture: An Emerging Architecture in Smart Phones
Naseer Ahmad, Muhammad Waqas Boota
Department of Computer Science, Virtual University of Pakistan, Lahore, Pakistan

Abstract
ARM is a 32-bit RISC processor architecture developed and licensed by the British company ARM Holdings. ARM Holdings does not manufacture or sell CPU devices; it only licenses the processor architecture to interested parties. There are two main types of licenses: implementation licenses and architecture licenses. ARM processors combine several distinctive features; for example, the ARM core is very simple compared to general-purpose processors. An ARM chip contains several peripheral controllers, a digital signal processor, and an ARM core. ARM processors consume less power while still providing high performance. Nowadays, the ARM Cortex series is very popular in smartphones, and we examine its important characteristics. We discuss ARM processors and systems on a chip (SoCs), including Qualcomm Snapdragon, nVidia Tegra, and Apple SoCs. We also compare the features of ARM processors and the Intel Atom processor to see which is better. Finally, we discuss the future of ARM processors in smartphones.

Keywords: RISC, ISA, ARM Core, System on a Chip (SoC)
Received: May 6, 2018 / Accepted: June 15, 2018 / Published online: July 26, 2018
© 2018 The Authors. Published by American Institute of Science. This Open Access article is under the CC BY license.

It's Meant to Be Played

The Way It’s Meant to Be Played, Issue 10, $3.99 (where sold)
Ultimate PC Gaming with GeForce
All the best holiday games with the power of NVIDIA: Far Cry’s creators outclass its already jaw-dropping technology; Battlefield 2142 with an epic new sci-fi battle; World of Warcraft: The Burning Crusade; Company of Heroes; Warhammer: Mark of Chaos.

Welcome...
Welcome to the 10th issue of The Way It’s Meant To Be Played, the magazine dedicated to the very best in PC gaming. In this issue, we showcase 30 games, all participants in NVIDIA’s The Way It’s Meant To Be Played program. In this program, NVIDIA’s developer technology engineers work with development teams to get the very best graphics and effects into their new titles. The games are then rigorously tested by three different labs at NVIDIA for compatibility, stability, and performance to ensure that any game…

THE NEWS: Notebooks are set to transform PC gaming
The latest must-have gaming system is… a notebook PC. Until recently considered mainly a means for working on the move or for portable presentations, laptops complete with dedicated graphics processing units (GPUs) such as the NVIDIA® GeForce® Go 7 series are making a real impact in the gaming world. The advantages are obvious: gamers need no longer be tied to their desktop set-up. The new NVIDIA® GeForce® Go 7900 notebook GPUs are designed for extreme HD gaming, and gaming hardware specialists such as Alienware and Asus have seen the potential of the portable platform.

Meridian Contrarian Fund Holdings As of 12/31/2016

Meridian Contrarian Fund Holdings as of 12/31/2016

| Ticker | Security Name | % Allocation |
|---|---|---|
| NVDA | NVIDIA CORP | 5.8% |
| MSFT | MICROSOFT CORP | 4.1% |
| CFG | CITIZENS FINANCIAL GROUP INC | 3.8% |
| ALEX | ALEXANDER & BALDWIN INC | 3.5% |
| EOG | EOG RESOURCES INC | 3.3% |
| CACI | CACI INTERNATIONAL INC | 3.3% |
| USB | US BANCORP | 3.1% |
| XYL | XYLEM INC/NY | 2.7% |
| TOT | TOTAL SA | 2.6% |
| VRNT | VERINT SYSTEMS INC | 2.5% |
| CELG | CELGENE CORP | 2.4% |
| BOH | BANK OF HAWAII CORP | 2.2% |
| GIL | GILDAN ACTIVEWEAR INC | 2.1% |
| LVS | LAS VEGAS SANDS CORP | 2.0% |
| MLNX | MELLANOX TECHNOLOGIES LTD | 2.0% |
| AAPL | APPLE INC | 1.9% |
| ENS | ENERSYS | 1.8% |
| ZBRA | ZEBRA TECHNOLOGIES CORP | 1.8% |
| MU | MICRON TECHNOLOGY INC | 1.7% |
| RYN | RAYONIER INC | 1.7% |
| KLXI | KLX INC | 1.7% |
| TRMB | TRIMBLE INC | 1.6% |
| IRDM | IRIDIUM COMMUNICATIONS INC | 1.5% |
| QCOM | QUALCOMM INC | 1.5% |

Investors should consider the investment objective and policies, risk considerations, charges and ongoing expenses of an investment carefully before investing. The prospectus and summary prospectus contain this and other information relevant to an investment in the Fund. Please read the prospectus or summary prospectus carefully before you invest or send money. To obtain a prospectus, please contact your investment representative or access the Meridian Funds’ website at www.meridianfund.com. ALPS Distributors, Inc., a member of FINRA, is the distributor of the Meridian Mutual Funds, advised by Arrowpoint Asset Management, LLC. ALPS, Meridian and Arrowpoint are unaffiliated. Arrowpoint Partners is a trade name for Arrowpoint Asset Management, LLC, a registered investment adviser. The portfolio holdings for the Meridian Funds are published on the Funds' website on a calendar quarter basis, no earlier than 30 days after the end of the quarter.
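The 24 positions listed can be totalled with a short script. This is a sketch: it uses Python's `decimal.Decimal` so that the one-decimal percentages add exactly (summing binary floats may not), and the `allocation` helper is a hypothetical name. It reports only the positions shown in the list, not the fund's whole portfolio.

```python
from decimal import Decimal

# % allocations as printed in the 12/31/2016 holdings list (24 positions).
HOLDINGS = {
    "NVDA": "5.8", "MSFT": "4.1", "CFG": "3.8", "ALEX": "3.5",
    "EOG": "3.3", "CACI": "3.3", "USB": "3.1", "XYL": "2.7",
    "TOT": "2.6", "VRNT": "2.5", "CELG": "2.4", "BOH": "2.2",
    "GIL": "2.1", "LVS": "2.0", "MLNX": "2.0", "AAPL": "1.9",
    "ENS": "1.8", "ZBRA": "1.8", "MU": "1.7", "RYN": "1.7",
    "KLXI": "1.7", "TRMB": "1.6", "IRDM": "1.5", "QCOM": "1.5",
}


def allocation(tickers, holdings=HOLDINGS) -> Decimal:
    """Sum the % allocation of the given tickers using exact decimal arithmetic."""
    return sum((Decimal(holdings[t]) for t in tickers), Decimal("0"))


if __name__ == "__main__":
    print("Listed positions:", len(HOLDINGS))
    print("Total of listed positions (%):", allocation(HOLDINGS))
    print("NVDA + MLNX (%):", allocation(["NVDA", "MLNX"]))
```

Run as-is, it should report 24 positions totalling 60.6%, with NVIDIA and Mellanox together at 7.8%.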

Data Sheet FUJITSU Tablet STYLISTIC M702

Data Sheet FUJITSU Tablet STYLISTIC M702
Designed for the toughest businesses

The Fujitsu STYLISTIC M702 is the ideal choice if you are looking for a robust yet lightweight high-performance tablet PC to support you in the field and to increase business continuity. Being water- and dustproof, it protects the components most frequently damaged, making it perfect for semi-ruggedized applications. The 25.7 cm (10.1-inch) STYLISTIC M702 provides protection against environmental conditions, ensuring uncompromising productivity whether exposed to rain, humidity or dust. Moreover, it can easily be integrated into your company’s Virtual Desktop Infrastructure (VDI), ensuring protected anytime access to your company data, while its business software lets you securely manage your contacts and emails. Embedded 4G/LTE delivers the latest high-speed connectivity for end-to-end performance in the most challenging situations, and the Android operating system’s rich multimedia features also make it perfect for private use.

Semi-Ruggedized
Ensure absolute business continuity and protect your tablet from environmental conditions. Work from anywhere while exposing the tablet to rain, humidity or dust without causing any component damage. Waterproof (tested for IPX5/7/8 specifications), dustproof (tested for IP5X specification), durable with toughened glass.

Business Match
Ultimate productivity from anywhere. Securely access your business applications, data and company intranet with the business software selection and protect…

Storage for HPC and AI

Storage for HPC and AI
Make breakthroughs faster with artificial intelligence powered by high performance computing systems and storage

BETTER PERFORMANCE
Unlock the value of data with high performance storage built for HPC.
The flood of information generated by sensors, satellites, simulations, high-throughput devices and medical imaging is pushing data repositories to sizes that were once inconceivable. Data analytics, high performance computing (HPC) and artificial intelligence (AI) are technologies designed to unlock the value of all that data, driving the demand for powerful HPC systems and the storage to support them. Reliable, efficient and easy-to-adopt HPC storage is the key to enabling today’s powerful HPC systems to deliver transformative decision making, business growth and operational efficiencies in the data-driven age.

Dell Technologies | Ready Solutions for HPC

THE INTELLIGENCE BEHIND DATA INSIGHTS
Figure: Artificial intelligence, machine learning, deep learning
AI is a complex set of technologies underpinned by machine learning (ML) and deep learning (DL) algorithms, typically run on powerful HPC systems and storage. Together, they enable organizations to gain deeper insights from data. AI is an umbrella term that describes a machine’s ability to act autonomously and/or interact in a human-like way. ML refers to the ability of a machine to perform a programmed function with the data given to it, getting progressively better at the task over time as it analyzes more data and receives feedback from users or engineers.
The capabilities of AI, ML and DL can unleash predictive and prescriptive analytics on a massive scale. Like lenses, AI, ML and DL can be used in combination or alone, depending…

Technical Brief

Technical Brief: SLI-Ready Memory with Enhanced Performance Profiles
One-Click Hassle-Free Memory Performance Boost
May 2006, TB-0529-001_v01

One-Click Hassle-Free Memory Performance Boost with SLI-Ready Memory
Gamers and PC enthusiasts are endlessly searching for opportunities to improve the performance of their PCs. Optimizing system performance is a function of the major components used: graphics processing unit (GPU) add-in cards, CPU, chipset, and memory. It is also a function of tuning and overclocking the various PC components. Overclocking, however, has disadvantages such as system instability and inconsistent performance measurements from one system to another. SLI-Ready memory with Enhanced Performance Profiles (EPP) is a new approach that simplifies overclocking and ensures memory and platform compatibility.

The NVIDIA nForce 590 SLI core logic is the first NVIDIA® platform that supports the new EPP functionality. Memory DIMMs that receive the SLI-Ready certification are required to support EPP technology, so that the memory can be automatically detected and its full potential realized with the NVIDIA nForce® 590 SLI® chipset. The SLI-Ready certification process ensures the memory modules have passed a comprehensive set of tests and meet the minimum requirements for delivering to our customers the outstanding experience they expect from SLI systems.

SLI-Ready Memory with EPP at a Glance
System memory modules (DIMMs) are built using an electrically erasable programmable read-only memory (EEPROM) that can hold up to 256 bytes of data. The EEPROM is used to store Serial Presence Detect (SPD) information defined by JEDEC, which includes the manufacturer part number, manufacturer name, some timing parameters, serial number, etc.
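To make the SPD description concrete, the sketch below sanity-checks a 256-byte EEPROM image. It assumes the DDR2-era JEDEC SPD layout, where byte 1 holds log2 of the EEPROM size, byte 2 is the memory-type code (0x08 for DDR2 SDRAM), and byte 63 holds the low 8 bits of the sum of bytes 0 to 62. These offsets come from the JEDEC SPD convention, not from this brief. EPP fields are not decoded, and the example image is hypothetical, not data read from a real module.

```python
# Minimal sketch: validate a 256-byte SPD EEPROM image.
# ASSUMPTION (DDR2-era JEDEC SPD layout, not taken from the NVIDIA brief):
#   byte 1  = log2(total EEPROM size in bytes), e.g. 8 -> 256 bytes
#   byte 2  = memory type code (0x08 = DDR2 SDRAM)
#   byte 63 = checksum: low 8 bits of the sum of bytes 0..62
SPD_SIZE = 256


def spd_checksum(image: bytes) -> int:
    """Return the base-section checksum computed over bytes 0..62."""
    return sum(image[0:63]) & 0xFF


def validate_spd(image: bytes) -> bool:
    """True if the declared size is 256 bytes and the stored checksum matches."""
    if len(image) != SPD_SIZE:
        raise ValueError(f"expected {SPD_SIZE} bytes, got {len(image)}")
    declared_size = 1 << image[1]
    return declared_size == SPD_SIZE and image[63] == spd_checksum(image)


def make_example_image() -> bytes:
    """Build a hypothetical SPD image (illustrative values only)."""
    image = bytearray(SPD_SIZE)
    image[0] = 128   # bytes used by the module manufacturer
    image[1] = 8     # 2**8 = 256-byte EEPROM
    image[2] = 0x08  # memory type: DDR2 SDRAM under the assumed layout
    image[63] = spd_checksum(image)
    return bytes(image)


if __name__ == "__main__":
    good = make_example_image()
    print("valid image:", validate_spd(good))
    corrupted = bytearray(good)
    corrupted[2] ^= 0xFF  # flip one byte in the checksummed range
    print("corrupted image:", validate_spd(bytes(corrupted)))
```

The checksum only guards the base JEDEC section; how EPP profiles are laid out and protected is not described in this excerpt, so the sketch stops there.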

Mellanox for Big Data

Mellanox for Big Data, March 2013
© 2013 Mellanox Technologies

Company Overview (Ticker: MLNX)
• Leading provider of high-throughput, low-latency server and storage interconnect
  – FDR 56Gb/s InfiniBand and 10/40/56GbE
  – Reduces application wait-time for data
  – Dramatically increases ROI on data center infrastructure
• Company headquarters: Yokneam, Israel; Sunnyvale, California
  – ~1,160 employees worldwide (as of December 2012)
• Solid financial position
  – Record revenue in FY12: $500.8M
  – Q1’13 guidance: ~$78M to $83M
  – Cash + investments at 12/31/12: $426.3M

Leading Supplier of End-to-End Interconnect Solutions
• Virtual Protocol Interconnect across storage (front/back-end), server/compute, and switch/gateway: 56G InfiniBand & FCoIB, 10/40/56GbE & FCoE, Fibre Channel
• Comprehensive end-to-end InfiniBand and Ethernet portfolio: ICs, adapter cards, switches/gateways, host/fabric software, cables

RDMA: Efficiency, Latency, & Application Performance
• Without RDMA: ~53% CPU utilization in user space; ~47% CPU overhead/idle in system space
• With RDMA and offload: ~88% CPU utilization in user space; ~12% CPU overhead/idle in system space

Big Data Solutions

Big Data Needs Big Pipes
• Capabilities are determined by the weakest component in the system
• Different approaches in the Big Data marketplace, same needs:
  – Better throughput and latency
  – Scalable, faster data movement
• Big Data applications require high-bandwidth and low-latency interconnect (data source: Intersect360 Research, 2012, IT and data scientists survey)

Unstructured Data Accelerator (UDA)
• Plug-in architecture
  – Open source: https://code.google.com/p/uda-plugin/
Figure: Hive, Pig, Map, Reduce, HBase
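The RDMA slide's percentages can be turned into two simple comparisons: how much more CPU time reaches user-space applications, and how many percentage points of system-space overhead disappear. This sketch uses the approximate (~) values as printed and assumes each pair covers 100% of CPU time, as the slide's figures do; the function names are illustrative.

```python
# Approximate CPU-time split from the Mellanox RDMA slide (values marked "~").
SPLITS = {
    "without_rdma": {"user": 53, "system": 47},
    "with_rdma_offload": {"user": 88, "system": 12},
}


def user_share_gain(before: dict, after: dict) -> float:
    """Relative increase in the CPU share available to user-space work."""
    return after["user"] / before["user"] - 1


def overhead_reduction_points(before: dict, after: dict) -> int:
    """Drop in system-space overhead/idle, in percentage points."""
    return before["system"] - after["system"]


if __name__ == "__main__":
    for name, split in SPLITS.items():
        # Each configuration on the slide accounts for all CPU time.
        assert split["user"] + split["system"] == 100, name
    before, after = SPLITS["without_rdma"], SPLITS["with_rdma_offload"]
    print(f"User-space share gain: {user_share_gain(before, after):.0%}")
    print(f"Overhead reduction: {overhead_reduction_points(before, after)} points")
```

With the slide's numbers, user-space CPU share grows by roughly 66% (53% to 88%) and system overhead falls by 35 percentage points; because the inputs are rounded approximations, the results are indicative only.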