Classification and Interpretation in Quantitative Structure-Activity Relationships

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

An Indexing and Classification System for Earth-Science Data Bases

AN INDEXING AND CLASSIFICATION SYSTEM FOR EARTH-SCIENCE DATA BASES By James C. Schornick, Janet B. Pruitt, Helen E. Stranathan, and Albert C. Duncan U.S. GEOLOGICAL SURVEY Open-File Report 89 47 Reston, Virginia 1989 DEPARTMENT OF THE INTERIOR MANUEL LUJAN, JR., Secretary U.S. GEOLOGICAL SURVEY Dallas L. Peck, Director For additional information Copies of this report can write to: be purchased from: Assistant Chief Hydrologist for U.S. Geological Survey Scientific Information Management Books and Open-File Reports Section U.S. Geological Survey Federal Center, Building 810 440 National Center Box 25425 Reston, Virginia 22092 Denver, Colorado 80225 CONTENTS Page Abstract ............................................................ 1 Introduction ........................................................ 1 Objective of the indexing and classification system .............. 2 Scope of the indexing and classification system .................. 2 The indexing and classification system .............................. 2 The sample medium component ...................................... 3 The general physical/chemical component .......................... 6 The specific physical/chemical component ......................... 6 Organic substances ............................................ 7 Biological taxa ............................................... 8 The indexing and classification code ............................. 9 Data base availability .............................................. 11 Summary ............................................................ -

Genx Chemicals”

EPA-823-P-18-001 Public Comment Draft Human Health Toxicity Values for Hexafluoropropylene Oxide (HFPO) Dimer Acid and Its Ammonium Salt (CASRN 13252-13-6 and CASRN 62037-80-3) Also Known as “GenX Chemicals” This document is a Public Comment draft. It has not been formally released by the U.S. Environmental Protection Agency and should not at this stage be construed to represent Agency policy. This information is distributed solely for the purpose of public review. This document is a draft for review purposes only and does not constitute Agency policy. DRAFT FOR PUBLIC COMMENT – DO NOT CITE OR QUOTE NOVEMBER 2018 Human Health Toxicity Values for Hexafluoropropylene Oxide (HFPO) Dimer Acid and Its Ammonium Salt (CASRN 13252-13-6 and CASRN 62037- 80-3) Also Known as “GenX Chemicals” Prepared by: U.S. Environmental Protection Agency Office of Water (4304T) Health and Ecological Criteria Division Washington, DC 20460 EPA Document Number: 823-P-18-001 NOVEMBER 2018 This document is a draft for review purposes only and does not constitute Agency policy. DRAFT FOR PUBLIC COMMENT – DO NOT CITE OR QUOTE NOVEMBER 2018 Disclaimer This document is a public comment draft for review purposes only. This information is distributed solely for the purpose of public comment. It has not been formally disseminated by EPA. It does not represent and should not be construed to represent any Agency determination or policy. Mention of trade names or commercial products does not constitute endorsement or recommendation for use. i This document is a draft for review purposes only and does not constitute Agency policy. -

Quantitative Structure-Activity Relationships (QSAR) and Pesticides

Quantitative Structure-Activity Relationships (QSAR) and Pesticides Ole Christian Hansen Teknologisk Institut Pesticides Research No. 94 2004 Bekæmpelsesmiddelforskning fra Miljøstyrelsen The Danish Environmental Protection Agency will, when opportunity offers, publish reports and contributions relating to environmental research and development projects financed via the Danish EPA. Please note that publication does not signify that the contents of the reports necessarily reflect the views of the Danish EPA. The reports are, however, published because the Danish EPA finds that the studies represent a valuable contribution to the debate on environmental policy in Denmark. Contents FOREWORD 5 PREFACE 7 SUMMARY 9 DANSK SAMMENDRAG 11 1 INTRODUCTION 13 2QSAR 15 2.1 QSAR METHOD 15 2.2 QSAR MODELLING 17 3 PESTICIDES 21 3.1 MODES OF ACTION 21 3.2 QSAR AND PESTICIDES 22 3.2.1 SMILES notation 23 3.3 PHYSICO-CHEMICAL PROPERTIES 24 3.3.1 Boiling point 24 3.3.2 Melting point 25 3.3.3 Solubility in water 27 3.3.4 Vapour pressure 30 3.3.5 Henry’s Law constant 32 3.3.6 Octanol/water partition coefficient (Kow) 34 3.3.7 Sorption 39 3.4 BIOACCUMULATION 47 3.4.1 Bioaccumulation factor for aquatic organisms 47 3.4.2 Bioaccumulation factor for terrestrial organisms 49 3.5 AQUATIC TOXICITY 50 3.5.1 QSAR models on aquatic ecotoxicity 50 3.5.2 Correlations between experimental and estimated ecotoxicity 53 3.5.3 QSARs developed for specific pesticides 57 3.5.4 QSARs derived from pesticides in the report 60 3.5.5 Discussion on estimated ecotoxicity 86 4 SUMMARY OF CONCLUSIONS 89 REFERENCES 93 APPENDIX A 99 3 4 Foreword The concept of similar structures having similar properties is not new. -

5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28

Appendix B Classification of common chemicals by chemical band 1 1 EXHIBIT 1 2 CHEMICAL CLASSIFICATION LIST 3 4 1. Pyrophoric Chemicals 5 1.1. Aluminum alkyls: R3A1, R2A1C1, RA1C12 6 Examples: Et3A1, Et2A1C1, EtA.1111C12, Me3A1, Diethylethoxyaluminium 7 1.2. Grignard Reagents: RMgX (R=alkyl, aryl, vinyl X=halogen) 8 1.3. Lithium Reagents: RLi (R 7 alkyls, aryls, vinyls) 9 Examples: Butyllithium, Isobutylthhium, sec-Butyllithium, tert-Butyllithium, 10 Ethyllithium, Isopropyllithium, Methyllithium, (Trimethylsilyl)methyllithium, 11 Phenyllithiurn, 2-Thienyllithium, Vinyllithium, Lithium acetylide ethylenediamine 12 complex, Lithium (trimethylsilyl)acetylide, Lithium phenylacetylide 13 1.4. Zinc Alkyl Reagents: RZnX, R2Zn 14 Examples: Et2Zn 15 1.5. Metal carbonyls: Lithium carbonyl, Nickel tetracarbonyl, Dicobalt octacarbonyl 16 1.6. Metal powders (finely divided): Bismuth, Calcium, Cobalt, Hafnium, Iron, 17 Magnesium, Titanium, Uranium, Zinc, Zirconium 18 1.7. Low Valent Metals: Titanium dichloride 19 1.8. Metal hydrides: Potassium Hydride, Sodium hydride, Lithium Aluminum Hydride, 20 Diethylaluminium hydride, Diisobutylaluminum hydride 21 1.9. Nonmetal hydrides: Arsine, Boranes, Diethylarsine, diethylphosphine, Germane, 22 Phosphine, phenylphosphine, Silane, Methanetellurol (CH3TeH) 23 1.10. Non-metal alkyls: R3B, R3P, R3As; Tributylphosphine, Dichloro(methyl)silane 24 1.11. Used hydrogenation catalysts: Raney nickel, Palladium, Platinum 25 1.12. Activated Copper fuel cell catalysts, e.g. Cu/ZnO/A1203 26 1.13. Finely Divided Sulfides: -

Guidelines for the Classification of Hazardous Chemicals

GUIDELINES FOR THE CLASSIFICATION OF HAZARDOUS CHEMICALS DEPARTMENT OF OCCUPATIONAL SAFETY AND HEALTH MINISTRY OF HUMAN RESOURCES MALAYSIA 1997 JKKP: GP (I) 4/97 ISBN 983-99156-6-5 Guidelines for the Classification of Hazardous Chemicals Table of Contents Preface 1 Glossary 2 1. Introduction 4 2. Classification Based on Physicochemical Properties 4 3. Classification Based on Health Effects 10 4. Listed Hazardous Chemicals Based on Health Effects 22 5. Non-Listed Hazardous Chemicals Based on Health Effects 23 6. Procedure for Classifying Chemicals Based on Health Effects 23 References 26 Appendices Appendix I : Formula for Classification of mixtures with 27 ingredient considerations below cut-off levels and having additives effects. Appendix II : Recommended Risk Phrases for Classifications 30 Based on Health Effects Appendix III : Procedure for Classifying a Hazardous Chemical 31 Appendix IV : Health-Effects Based Classification of Non-Listed 32 Hazardous Chemicals and Recommended Risk Phrase Appendix v : Classifying a Chemicals Mixture Using the 37 Concentration Cut-Off Level Concept Appendix VI : Choice of Risk Phrases 41 Appendix VII : List of Hazardous Chemicals 47 Department of Occupational Safety and Health (DOSH) ♣ MALAYSIA iii Guidelines for the Classification of Hazardous Chemicals PREFACE These guidelines may be cited as the Guidelines for the Classification of Hazardous Chemicals (hereinafter referred to as “the Guidelines”. The purpose of the Guidelines is to elaborate on and explain the requirements of Regulation 4 of the Occupational Safety and Health (Classification, Packaging and Labelling of Hazardous Chemicals) Regulations 1997 [P.U. (A) 143] (hereinafter referred to as “the Regulations”) which stipulates the duty of a supplier of hazardous chemicals to classify each hazardous chemicals according to the specific nature of the risk involved in the use and handling of the chemicals at work. -

Development of a Hazardous Material Selection Procedure for the Chemical Accident Response Manual

Korean J. Chem. Eng., 36(3), 333-344 (2019) pISSN: 0256-1115 DOI: 10.1007/s11814-018-0202-x eISSN: 1975-7220 INVITED REVIEW PAPER INVITED REVIEW PAPER Development of a hazardous material selection procedure for the chemical accident response manual Kwanghee Lee*,‡, Byeonggil Lyu*,‡, Hyungtae Cho*, Chanho Park*, Sunghyun Cho*, Sambae Park**, and Il Moon*,† *Department of Chemical and Biomolecular Engineering, Yonsei University, 50 Yonsei-ro, Seodaemun-gu, Seoul 03722, Korea **Korea Environment Corporation, 42 Hwangyeong-ro, Seo-gu, Incheon 22689, Korea (Received 20 July 2018 • accepted 2 December 2018) AbstractAfter the accidental hydrogen-fluoride leak in Gu-mi city, Korea, the Korean government revised its laws on chemical management. The hazardous chemical management laws were strengthened to meet the legal standards, and now the selection of Accident Precaution Chemicals (APCs) is required. This study introduces a novel hazardous material selection procedure for industrial chemical management. The proposed method consists of screening, scor- ing, rating, and selection procedures. Among the 4,994 hazardous chemicals in the database, 1,362 chemicals were selected through the screening process. The selected chemicals were classified as flammable, explosive/reactive, and toxic materials, and in the final step, flash point, heat of combustion, and toxicity of these chemicals were considered in chemical ratings. According to the ratings, 100 toxic materials were selected and used to modify the safety manage- ment manual development software, currently used in Korean companies that deal with hazardous chemicals. The developed algorithm and software are expected to greatly aid plants that deal with hazardous materials. Keywords: Chemical Management Manual, Chemical Classification, Hazardous Material Management, Accident Pre- caution, Accident Response INTRODUCTION government, enact relevant legislation, and to change the corporate culture to better respond to chemical accidents [5-7]. -

Identification of an Unknown – Alcohols, Aldehydes, and Ketones

Identification of an Unknown: Alcohols, Aldehydes, and Ketones How does one determine the identity and structure of an unknown compound? This is not a trivial task. Modern x-ray and spectroscopic techniques have made the job much easier, but for some very complex molecules, identification and structure determination remains a challenge. In addition to spectroscopic information and information obtained from other instrumental methods, chemical reactions can provide useful structural information, and physical properties can contribute significantly to confirming the identity of a compound. In this experiment, you will be asked to identify an unknown liquid, which will be either an alcohol, aldehyde, or ketone. Identification will be accomplished by carrying out chemical tests, called classification tests, preparing a solid derivative of the unknown and determining its melting point (MP), making careful observations, and analyzing the NMR spectrum of the unknown. the carbonyl group O O R OH R H R R alcohol aldehyde ketone A list of alcohols, aldehydes, and ketones, along with the MP of a solid derivative of each compound, is posted on the website. The unknown will be one of these listed compounds. If one can determine to which functional group class (alcohol, aldehyde, or ketone) the unknown belongs, two of the three lists need not be considered and the task will be greatly simplified. To accomplish this, classification tests will be carried out. First, consider some general ways in which alcohols, aldehydes, and ketones react. 1 CLASSIFICATION TESTS, which are simple chemical reactions that produce color changes or form precipitates, can be used to differentiate alcohols, aldehydes, and ketones, and also to provide further structural information. -

Phytochemistry and Biological Activities of Selected Lebanese Plant Species (Crataegus Azarolus L. and Ephedra Campylopoda) Hany Kallassy

Phytochemistry and biological activities of selected Lebanese plant species (Crataegus azarolus L. and Ephedra campylopoda) Hany Kallassy To cite this version: Hany Kallassy. Phytochemistry and biological activities of selected Lebanese plant species (Crataegus azarolus L. and Ephedra campylopoda). Human health and pathology. Université de Limoges, 2017. English. NNT : 2017LIMO0036. tel-01626001 HAL Id: tel-01626001 https://tel.archives-ouvertes.fr/tel-01626001 Submitted on 30 Oct 2017 HAL is a multi-disciplinary open access L’archive ouverte pluridisciplinaire HAL, est archive for the deposit and dissemination of sci- destinée au dépôt et à la diffusion de documents entific research documents, whether they are pub- scientifiques de niveau recherche, publiés ou non, lished or not. The documents may come from émanant des établissements d’enseignement et de teaching and research institutions in France or recherche français ou étrangers, des laboratoires abroad, or from public or private research centers. publics ou privés. Doctorat Université Libanaise THESE de doctorat Pour obtenir le grade de Docteur délivré par L’Ecole Doctorale des Sciences et Technologie (Université Libanaise) Spécialité : Présentée et soutenue publiquement par Kallassy Hany 22-09-2017 Phytochemistry and biological activities of selected Lebanese plant species (Crataegus azarolus L. and Ephedra campylopoda) Directeur de thèse : Pr. Badran Bassam Co-encadrement de la thèse : Dr. Hasan Rammal Membre du Jury M. Bassam BADRAN, Pr, Lebanese university Directeur M. Bertrand LIAGRE, Pr, Limoges university Directeur M. David LEGER, Dr, Limoges university Co-Directeur M. Hasan RAMMAL, Dr, Lebanese university Co-Directeur M. Philippe BILLIALD, Pr, Universite Paris-Sud Rapporteur M. Mohammad MROUEH, Pr, Lebanese American university Rapporteur M. -

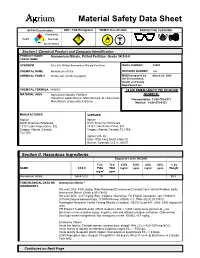

Material Safety Data Sheet

Material Safety Data Sheet NFPA Classification DOT / TDG Pictograms WHMIS Classification PROTECTIVE CLOTHING Flammability 0 Health 1 3 Reactivity OXIDIZER Specific Hazard 5.1 Section I. Chemical Product and Company Identification PRODUCT NAME/ Ammonium Nitrate, Prilled Fertilizer Grade 34.5-0-0 TRADE NAME SYNONYM 34.5-0-0 Prilled Ammonium Nitrate Fertilizer MSDS NUMBER: 14082 CHEMICAL NAME Ammonium nitrate. REVISION NUMBER 4.6 CHEMICAL FAMILY Nitrate salt. (Oxidizing agent) MSDS prepared by March 25, 2003 the Environment, Health and Safety Department on: CHEMICAL FORMULA NH4NO3 24 HR EMERGENCY TELEPHONE MATERIAL USES Agricultural industry: Fertilizer. NUMBER: Industrial applications: Manufacture of chemicals. Transportation: 1-800-792-8311 Manufacture of specialty fertilizers. Medical: 1-888-670-8123 MANUFACTURER SUPPLIER Agrium Agrium North American Wholesale North American Wholesale 13131 Lake Fraser Drive, S.E. 13131 Lake Fraser Drive, S.E. Calgary, Alberta, Canada Calgary, Alberta, Canada, T2J 7E8 T2J 7E8 Agrium U.S. Inc. Suite 1700, 4582 South Ulster St. Denver, Colorado, U.S.A., 80237 Section II. Hazardous Ingredients Exposure Limits (ACGIH) TLV- TLV- STEL STEL CEIL CEIL % by NAME CAS # TWA TWA mg/m3 ppm mg/m3 ppm Weight mg/m3 ppm Ammonium nitrate 6484-52-2 10 99.8 TOXICOLOGICAL DATA ON Ammonium Nitrate:^ INGREDIENTS Rat oral LD50: 4500 mg/kg. [Peer Reviewed] [Environment Canada;Tech Info for Problem Spills: Ammonium Nitrate (Draft) p.59 (1981)] Rat oral LD50: 2217 mg/kg (Rat) [Gigiena i Sanitariya. For English translation, see HYSAAV. (V/O Mezhdunarodnaya Kniga, 113095 Moscow, USSR) V.1- 1936- (52(8),25,1987)] Huntingdon Research Center Testing Results (3 studies), OECD Guide 401: 2462- 2900 mg/kg (rat oral) TFI Product Testing Results, OECD Guideline 402: > 5,000 mg/kg acute dermal LD50, rat, Bacterial reverse mutation assay: negative, with and without metabolic activation, (Salmonella) Developmental terotogenicity: Not teratogenic to rats. -

Caffeic Acid: a Review of Its Potential Use in Medications and Cosmetics

Analytical Methods Accepted Manuscript This is an Accepted Manuscript, which has been through the Royal Society of Chemistry peer review process and has been accepted for publication. Accepted Manuscripts are published online shortly after acceptance, before technical editing, formatting and proof reading. Using this free service, authors can make their results available to the community, in citable form, before we publish the edited article. We will replace this Accepted Manuscript with the edited and formatted Advance Article as soon as it is available. You can find more information about Accepted Manuscripts in the Information for Authors. Please note that technical editing may introduce minor changes to the text and/or graphics, which may alter content. The journal’s standard Terms & Conditions and the Ethical guidelines still apply. In no event shall the Royal Society of Chemistry be held responsible for any errors or omissions in this Accepted Manuscript or any consequences arising from the use of any information it contains. www.rsc.org/methods Page 1 of 9 Analytical Methods 1 2 Analytical Methods RSC Publishing 3 4 5 MINIREVIEW 6 7 8 Cite this: DOI: 9 Caffeic Acid: a review of its potential use for 10 11 medications and cosmetics 12 Received a a a a 13 Accepted C. Magnani, V.L.B. Isaac, M.A. Correa and H.R.N. Salgado 14 DOI: 15 Besides powerful antioxidant activity, increasing collagen production and prevent premature 16 www.rsc.org/ aging, caffeic acid has demonstrated antimicrobial activity and may be promising in the 17 treatment of dermal diseases. The relevance of this study is based on the use of caffeic acid 18 increasingly common in humans. -

Globally Harmonized System of Classification and Labelling of Chemicals (Ghs)

Copyright@United Nations, 2019. All rights reserved ST/SG/AC.10/30/Rev.8 GLOBALLY HARMONIZED SYSTEM OF CLASSIFICATION AND LABELLING OF CHEMICALS (GHS) Eighth revised edition UNITED NATIONS New York and Geneva, 2019 Copyright@United Nations, 2019. All rights reserved NOTE The designations employed and the presentation of the material in this publication do not imply the expression of any opinion whatsoever on the part of the Secretariat of the United Nations concerning the legal status of any country, territory, city or area, or of its authorities, or concerning the delimitation of its frontiers or boundaries. ST/SG/AC.10/30/Rev.8 Copyright © United Nations, 2019 All rights reserved. No part of this publication may, for sales purposes, be reproduced, stored in a retrieval system or transmitted in any form or by any means, electronic, electrostatic, magnetic tape, mechanical, photocopying or otherwise, without prior permission in writing from the United Nations. UNITED NATIONS Sales No. E.19.II.E.21 Print ISBN 978-92-1-117199-0 eISBN 978-92-1-004083-9 Print ISSN 2412-155X eISSN 2412-1576 - ii - Copyright@United Nations, 2019. All rights reserved FOREWORD 1. The Globally Harmonized System of Classification and Labelling of Chemicals (GHS) is the culmination of more than a decade of work. There were many individuals involved, from a multitude of countries, international organizations, and stakeholder organizations. Their work spanned a wide range of expertise, from toxicology to fire protection, and ultimately required extensive goodwill and the willingness to compromise, in order to achieve this system. 2. The work began with the premise that existing systems should be harmonized in order to develop a single, globally harmonized system to address classification of chemicals, labels, and safety data sheets. -

NAFTA Technical Working Group on Pesticides Quantitative Structure Activity Relationship Guidance Document

TECHNICAL WORKING GROUP ON PESTICIDES (TWG) (Q)UANTITATIVE STRUCTURE ACTIVITY RELATIONSHIP NAFTA [(Q)SAR] GUIDANCE DOCUMENT Page 1 of 186 North American Free Trade Agreement (NAFTA) Technical Working Group on Pesticides (TWG) (Quantitative) Structure Activity Relationship [(Q)SAR] Guidance Document November, 2012 Contributors Mary Manibusan, US EPA Joel Paterson, PMRA Dr. Ray Kent, US EPA Dr. Jonathan Chen, US EPA Dr. Jenny Tao, US EPA Dr. Edward Scollon, US EPA Christine Olinger, US EPA Dr. Patricia Schmieder, US EPA Dr. Chris Russom, US EPA Dr. Kelly Mayo, US EPA Dr. Yin-tak Woo, US EPA Dr. Thomas Steeger, US EPA Dr. Edwin Matthews, US FDA Dr. Sunil Kulkarni, Health Canada External Peer Reviewers Kirk Arvidson, US FDA Mark Bonnell, Environment Canada Bob Diderich, OECD Terry Schultz, OECD Andrew Worth, European Commission – Joint Research Centre Page 2 of 186 PREFACE Integrated Approaches to Testing and Assessment (IATA) and (Q)SAR Pesticide regulatory agencies have traditionally relied on extensive in vivo and in vitro testing to support regulatory decisions on human health and environmental risks. While this approach has provided strong support for risk management decisions, there is a clear recognition that it can often require a large number of laboratory animal studies which can consume significant amounts of resources in terms of time for testing and evaluation. Even with the significant amounts of information from standard in vivo and in vitro testing, pesticide regulators are often faced with questions and issues relating to modes of action for toxicity, novel toxicities, susceptible populations, and other factors that can be challenging to address using traditional approaches.