The Rise and Fall of the Brilliant Start-Up That ...: "Some Day We Will Build a Thinking Machine"

Recommended publications

Evaluation of Architectural Support for Global Address-Based Communication in Large-Scale Parallel Machines

Evaluation of Architectural Support for Global Address-Based Communication in Large-Scale Parallel Machines. Arvind Krishnamurthy, Klaus E. Schauser, Chris J. Scheiman, Randolph Y. Wang, David E. Culler, and Katherine Yelick. Abstract: Large-scale parallel machines are incorporating increasingly sophisticated architectural support for user-level messaging and global memory access. We provide a systematic evaluation of a broad spectrum of current design alternatives based on our implementations of a global address language on the Thinking Machines CM-5, Intel Paragon, Meiko CS-2, Cray T3D, and Berkeley NOW. This evaluation includes a range of compilation strategies that make varying use of the network processor; each is optimized for the target architecture and the particular strategy. We analyze a family of interacting issues that determine the performance trade-offs ... the specific target architecture. We have developed multiple highly optimized versions of this compiler, employing a range of code-generation strategies for machines with dedicated network processors. In this study we use this spectrum of runtime techniques to evaluate the performance trade-offs in architectural support for communication found in several of the current large-scale parallel machines. We consider five important large-scale parallel platforms that have varying degrees of architectural support for communication: the Thinking Machines CM-5, Intel Paragon, Meiko CS-2, Cray T3D, and Berkeley NOW. The CM-5 provides direct user-level access to the network, the Paragon provides a network processor ...

Simulating Physics with Computers

International Journal of Theoretical Physics, Vol. 21, Nos. 6/7, 1982. Simulating Physics with Computers. Richard P. Feynman, Department of Physics, California Institute of Technology, Pasadena, California 91107. Received May 7, 1981.
1. INTRODUCTION
On the program it says this is a keynote speech--and I don't know what a keynote speech is. I do not intend in any way to suggest what should be in this meeting as a keynote of the subjects or anything like that. I have my own things to say and to talk about and there's no implication that anybody needs to talk about the same thing or anything like it. So what I want to talk about is what Mike Dertouzos suggested that nobody would talk about. I want to talk about the problem of simulating physics with computers and I mean that in a specific way which I am going to explain. The reason for doing this is something that I learned about from Ed Fredkin, and my entire interest in the subject has been inspired by him. It has to do with learning something about the possibilities of computers, and also something about possibilities in physics. If we suppose that we know all the physical laws perfectly, of course we don't have to pay any attention to computers. It's interesting anyway to entertain oneself with the idea that we've got something to learn about physical laws; and if I take a relaxed view here (after all I'm here and not at home) I'll admit that we don't understand everything.

Thinking Machines Corporation Connection Machine Technical Summary

Thinking Machines Corporation, Connection Machine Technical Summary. The Connection Machine System: Connection Machine Model CM-2 Technical Summary, Version 6.0, November 1990. Thinking Machines Corporation, Cambridge, Massachusetts. First printing, November 1990. The information in this document is subject to change without notice and should not be construed as a commitment by Thinking Machines Corporation. Thinking Machines Corporation reserves the right to make changes to any products described herein to improve functioning or design. Although the information in this document has been reviewed and is believed to be reliable, Thinking Machines Corporation does not assume responsibility or liability for any errors that may appear in this document. Thinking Machines Corporation does not assume any liability arising from the application or use of any information or product described herein. Connection Machine® is a registered trademark of Thinking Machines Corporation. CM-1, CM-2, CM-2a, CM, and DataVault are trademarks of Thinking Machines Corporation. C*® is a registered trademark of Thinking Machines Corporation. Paris, *Lisp, and CM Fortran are trademarks of Thinking Machines Corporation. C/Paris, Lisp/Paris, and Fortran/Paris are trademarks of Thinking Machines Corporation. VAX, ULTRIX, and VAXBI are trademarks of Digital Equipment Corporation. Symbolics, Symbolics 3600, and Genera are trademarks of Symbolics, Inc. Sun, Sun-4, SunOS, and Sun Workstation are registered trademarks of Sun Microsystems, Inc. UNIX is a registered trademark of AT&T Bell Laboratories. The X Window System is a trademark of the Massachusetts Institute of Technology. StorageTek is a registered trademark of Storage Technology Corporation. Trinitron is a registered trademark of Sony Corporation. ...

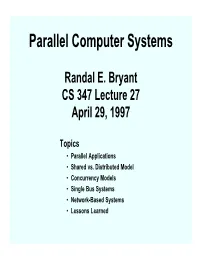

Parallel Computer Systems

Parallel Computer Systems. Randal E. Bryant, CS 347 Lecture 27, April 29, 1997.
Topics
• Parallel Applications
• Shared vs. Distributed Model
• Concurrency Models
• Single Bus Systems
• Network-Based Systems
• Lessons Learned
Motivation
Limits to Sequential Processing
• Cannot push clock rates beyond technological limits
• Instruction-level parallelism gets diminishing returns
  – 4-way superscalar machines only get an average of 1.5 instructions/cycle
  – Branch prediction, speculative execution, etc. yield diminishing returns
Applications have Insatiable Appetite for Computing
• Modeling of physical systems
• Virtual reality, real-time graphics, video
• Database search, data mining
Many Applications can Exploit Parallelism
• Work on multiple parts of problem simultaneously
• Synchronize to coordinate efforts
• Communicate to share information
Historical Perspective: The Graveyard
• Lots of venture capital and DoD research dollars
• Too big to enumerate, but some examples...
ILLIAC IV
• Early research machine with overambitious technology
Thinking Machines
• CM-2: 64K single-bit processors with single controller (SIMD)
• CM-5: Tightly coupled network of SPARC processors
Encore Computer
• Shared memory machine using National microprocessors
Kendall Square Research KSR-1
• Shared memory machine using proprietary processor
NCUBE / Intel Hypercube / Intel Paragon
• Connected network of small processors
• Survive only in niche markets
Historical Perspective: Successes
Shared Memory Multiprocessors (SMP's)
• E.g., SGI ...

A Parallel Implementation of Backpropagation Neural Network on MasPar MP-1, Faramarz Valafar, Purdue University School of Electrical Engineering

Purdue University, Purdue e-Pubs, ECE Technical Reports, Electrical and Computer Engineering, 3-1-1993. Valafar, Faramarz and Ersoy, Okan K., "A Parallel Implementation of Backpropagation Neural Network on MasPar MP-1" (1993). ECE Technical Reports. Paper 223. http://docs.lib.purdue.edu/ecetr/223. TR-EE 93-14, March 1993. Faramarz Valafar and Okan K. Ersoy, School of Electrical Engineering, Purdue University, W. Lafayette, IN 47906. The Purdue University MasPar MP-1 research is supported in part by NSF Parallel Infrastructure Grant #CDA-9015696. ABSTRACT: One of the major issues in using artificial neural networks is reducing the training and testing times. Parallel processing is the most efficient approach for this purpose. In this paper, we explore the parallel implementation of the backpropagation algorithm with and without hidden layers [4][5] on the MasPar MP-1. This implementation is based on the SIMD architecture and uses a backpropagation model which is theoretically more exact than the serial backpropagation model. This results in a smoother convergence to the solution. Most importantly, the processing time is reduced, both theoretically and experimentally, by a factor on the order of 3000, due to the architectural and data parallelism of the backpropagation algorithm. ...
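The abstract does not spell out what makes its backpropagation model "more exact" than the serial one. One plausible reading, which is our assumption and not the authors' stated method, is batch (true-gradient) updating: it maps naturally onto SIMD by giving each processing element (PE) one training pattern, and it follows the exact gradient of the total error rather than a per-pattern approximation. The sketch below simulates that mapping in plain Python for a single sigmoid unit (the no-hidden-layer case); `batch_step`, `sigmoid`, and the learning rate are hypothetical choices for illustration.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def batch_step(weights, patterns, targets, lr=0.5):
    """One batch gradient-descent step for a single sigmoid unit.

    Hypothetical SIMD mapping (an assumption, not the paper's code): each
    training pattern lives on its own simulated PE. All PEs run the forward
    and backward pass in lockstep, then a global sum-reduction forms the
    exact gradient of the total error before one synchronized update.
    """
    local_grads = []
    for x, t in zip(patterns, targets):  # conceptually simultaneous across PEs
        y = sigmoid(sum(w * xi for w, xi in zip(weights, x)))
        delta = (y - t) * y * (1.0 - y)  # dE/dnet for E = (y - t)**2 / 2
        local_grads.append([delta * xi for xi in x])
    # Global reduction across PEs, then a single weight update for everyone.
    grad = [sum(g[j] for g in local_grads) for j in range(len(weights))]
    return [w - lr * g for w, g in zip(weights, grad)]
```

For example, learning logical OR with a constant bias input of 1 in each pattern: starting from zero weights and calling `batch_step` a few thousand times drives the output below 0.5 for `[1, 0, 0]` and above 0.5 for the other three patterns. On a real SIMD array the Python loop over patterns would be a single lockstep instruction stream, and the reduction would use the machine's global-sum facility.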

Scalability Study of the KSR-1

Scalability Study of the KSR-1. Appeared in Parallel Computing, Vol. 22, 1996, 739-759. Umakishore Ramachandran, Gautam Shah, S. Ravikumar, and Jeyakumar Muthukumarasamy, College of Computing, Georgia Institute of Technology, Atlanta, GA 30332. Phone: (404) 894-5136.
Abstract: Scalability of parallel architectures is an interesting area of current research. Shared-memory parallel programming is attractive because of the relative ease of transitioning to it from sequential programming. However, there has been concern in the architectural community regarding the scalability of shared-memory parallel architectures, owing to the potential for large latencies on remote memory accesses. KSR-1 is a commercial shared-memory parallel architecture, and its scalability is the focus of this research. The study is conducted using a range of experiments spanning latency measurements, synchronization, and analysis of parallel algorithms for three computational kernels and an application. The key conclusions from this study are as follows:
• The communication network of KSR-1, a pipelined unidirectional ring, is fairly resilient in supporting simultaneous remote memory accesses from several processors.
• The multiple communication paths realized through this pipelining help in the efficient implementation of tournament-style barrier synchronization algorithms.
• Parallel algorithms that have fairly regular and contiguous data access patterns scale well on this architecture.
• The architectural features of KSR-1, such as poststore and prefetch, are useful for boosting the performance of parallel applications.
• The sizes of the caches available at each node may be too small for efficiently implementing large data structures.
• The network does saturate when there are simultaneous remote memory accesses from a fully populated (32-node) ring.
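The abstract credits the KSR-1's pipelined ring with making tournament-style barriers efficient. For readers unfamiliar with the algorithm, here is a minimal Python sketch of a static tournament barrier with sense reversal. It assumes a power-of-two thread count and CPython threads spinning on shared lists (relying on the GIL for flag visibility); it shows only the pairing structure and says nothing about KSR-1 performance. `TournamentBarrier`, `wait`, and `run_demo` are our own hypothetical names.

```python
import threading
import time


class TournamentBarrier:
    """Static tournament barrier for n threads (sketch: n must be a power of two).

    In round r, thread i is the preselected winner of its match when
    i % 2**(r + 1) == 0; its opponent is thread i + 2**r. Losers signal their
    winner and then wait for a global release; thread 0 wins every round and,
    as champion, releases everyone by flipping a shared sense flag.
    Sense reversal lets the same object be reused across episodes.
    Assumes CPython, where list-element reads and writes are atomic under the GIL.
    """

    def __init__(self, n: int) -> None:
        if n < 1 or n & (n - 1):
            raise ValueError("this sketch assumes a power-of-two thread count")
        self.rounds = n.bit_length() - 1  # log2(n) rounds of matches
        # arrived[r][j]: sense value written by loser j when it reaches round r
        self.arrived = [[False] * n for _ in range(self.rounds)]
        self.global_sense = False
        self.local_sense = [False] * n  # slot i is touched only by thread i

    def wait(self, i: int) -> None:
        sense = not self.local_sense[i]
        self.local_sense[i] = sense
        for r in range(self.rounds):
            step = 1 << r
            if i % (2 * step) == 0:
                # Winner: spin until opponent i + step reports arrival.
                while self.arrived[r][i + step] != sense:
                    time.sleep(0)  # yield to other Python threads
            else:
                # Loser: report to the winner, then spin on the global release.
                self.arrived[r][i] = sense
                while self.global_sense != sense:
                    time.sleep(0)
                return
        # Only thread 0 gets here, after every match has been decided.
        self.global_sense = sense


def run_demo(n: int = 4, episodes: int = 3) -> bool:
    """Return True if no thread ever leaves episode k before all n entered it."""
    barrier = TournamentBarrier(n)
    lock = threading.Lock()
    entered = [0] * episodes
    checks = []

    def worker(i: int) -> None:
        for ep in range(episodes):
            with lock:
                entered[ep] += 1
            barrier.wait(i)
            with lock:
                checks.append(entered[ep] == n)

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(n)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return len(checks) == n * episodes and all(checks)
```

Each episode takes log2(n) rounds of pairwise matches, and the matches within a round are independent of one another, which is plausibly where a network offering several concurrent communication paths pays off.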

Thinking Machines

High Performance Computing and Communications Week, King Communications Group, Inc., 627 National Press Building, Washington, D.C. 20045. (202) 638-4260, Fax: (202) 662-9744. Thursday, July 8, 1993, Volume 2, Number 27.
Gordon Bell Makes His Case: Get The Feds Out Of Computer Architecture, by Richard McCormack
Bell: "You've got huge forces telling you who's mainline and how you build computers."
It's an issue that has been simmering, then smoldering and occasionally flaring up: will the big massively parallel machines costing tens of millions of dollars prove themselves worthy of their promise? Or will these machines, developed with millions of dollars from the taxpayer, be an embarrassing bust? It's a debate that occurs daily (even with spouses in bed at night) but not much outside of the high-performance computing industry's small borders. Literally thousands of people are engaged in trying to make massive parallelism a viable technology. But there are still few objective observers, very little data, and not enough experience with the big machines to prove (or disprove) their true worth.
Interestingly, though, one of the biggest names in computing has made up his mind. The MPPs are awful, and the companies selling them, notably Intel and Thinking Machines, but others as well, are bound to fail, says Gordon Bell, whose name is attached to the ...
... afraid to talk about [the situation] because they know they've conned the world and they have to keep lying to support" their assertions that the technology needs government support, says the ever-quotable Bell. "It's really bad when it turns the scientists into a bunch of liars."

The KSR1: Experimentation and Modeling of Poststore, Amy Apon, Clemson University

Clemson University TigerPrints, Publications, School of Computing, 2-1993. The KSR1: Experimentation and Modeling of Poststore. Authors: Amy Apon (Clemson University), E. Rosti (Università degli Studi di Milano), E. Smirni (Vanderbilt University), T. D. Wagner (Vanderbilt University), M. Madhukar (Vanderbilt University), and L. W. Dowdy. This article is available at TigerPrints: https://tigerprints.clemson.edu/computing_pubs/9. ORNL/TM-12287, Engineering Physics and Mathematics Division, Mathematical Sciences Section. THE KSR1: EXPERIMENTATION AND MODELING OF POSTSTORE. E. Rosti, E. Smirni, T. D. Wagner, A. W. Apon, L. W. Dowdy. Dipartimento di Scienze dell'Informazione, Università degli Studi di Milano, Via Comelico 39, 20135 Milano, Italy; Computer Science Department, Vanderbilt University, Box 1679, Station B, Nashville, TN 37235. Date Published: February 1993. This work was partially supported by sub-contract 19X-SL131V from the Oak Ridge National Laboratory, and by grant N. 92.01615.PF69 from the Italian CNR "Progetto Finalizzato Sistemi Informatici e Calcolo Parallelo - Sottoprogetto 3." Prepared by the Oak Ridge National Laboratory, Oak Ridge, Tennessee 37831, managed by Martin Marietta Energy Systems, Inc. ...

A Cross Comparison of Data Load Strategies, with Anita Richards, Teradata

Teradata Education Network Presenter Biographies (last update: 12/19/2017). Abadi, Daniel: Prof. Abadi's research interests are in scalable systems, with a particular focus on the architecture of scalable database systems. Before joining the Yale computer science faculty, he spent four years at the Massachusetts Institute of Technology, where he received his Ph.D. Abadi has been a recipient of a Churchill Scholarship, an NSF CAREER Award, a Sloan Research Fellowship, the 2007 VLDB Best Paper Award, the 2008 SIGMOD Jim Gray Doctoral Dissertation Award, the 2013-2014 Yale Provost's Teaching Prize, and the 2013 VLDB Early Career Researcher Award. His research on HadoopDB is currently being commercialized by Hadapt, where Abadi also serves as chief scientist. He blogs at DBMS Musings and tweets at @daniel_abadi. Source: http://www.cs.yale.edu/homes/dna/ Bruce Aldridge, Senior Data Scientist, Advanced Analytics: Starting with Teradata Corporation in 2002, Bruce Aldridge has been deeply involved in advanced algorithm development, focusing on large data analyses associated with manufacturing, telematics, supply chain, warranty analytics, and techniques for wide data sets (extreme numbers of independent variables). As Chief Data Scientist for Manufacturing Analytics, he has been the primary interface with customers for data mining methods and analytic implementation. He has over 14 years of experience in manufacturing quality, reliability, failure analysis, test design, and statistical process control. He holds eight patents and has presented papers at multiple technical conferences.

Long Biography of Stephen Brobst

AlphaZetta (alphazetta.ai). Stephen Brobst is the Chief Technology Officer for Teradata Corporation. His expertise is in the identification and development of opportunities for the strategic use of technology in competitive business environments. Over the past thirty years, Stephen has been involved in numerous engagements in which he has been called upon to apply his combined expertise in business strategy and high-end parallel systems to develop frameworks for analytics and machine learning that leverage information for strategic advantage. Clients with whom he has worked include leaders such as eBay, Staples, Fidelity Investments, General Motors Corporation, Kroger Company, Wells Fargo Bank, Wal*Mart, AT&T Communications, Aetna Health Plans, Metropolitan Life Insurance, VISA International, Vodafone, Blue Cross Blue Shield, Nationwide Insurance, American Airlines, Mayo Clinic, Walgreen Corporation, and many more. Stephen has particular expertise in the deployment of solutions for maximizing the value of customer relationships through the use of advanced customer relationship management techniques with omni-channel deployment. Prior to joining Teradata, Stephen successfully launched four start-up companies related to high-end database products and services in the analytics and e-business marketplaces: (1) Tanning Technology Corporation (IPO on NASDAQ), (2) NexTek Solutions (acquired by IBM), (3) eHealthDirect (acquired by HealthEdge), and (4) Strategic Technologies & Systems (acquired by NCR). Previously, Stephen taught graduate courses at Boston University and the Massachusetts Institute of Technology, both in the MBA program at the Sloan School of Management and in the Computer Science departments of both universities.
He received the Instructor of the Year award in two of his last five years in the MET Computer Science department at Boston University and continues to guest lecture at the Massachusetts Institute of Technology, Stanford University, and the Kellogg Graduate School of Management.

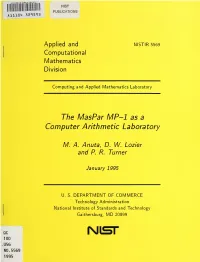

The MasPar MP-1 as a Computer Arithmetic Laboratory

Applied and Computational Mathematics Division, Computing and Applied Mathematics Laboratory. The MasPar MP-1 as a Computer Arithmetic Laboratory. Michael A. Anuta, Daniel W. Lozier, and Peter R. Turner. NISTIR 5569, January 1995. U.S. Department of Commerce (Ronald H. Brown, Secretary), Technology Administration (Mary L. Good, Under Secretary for Technology), National Institute of Standards and Technology (Arati Prabhakar, Director), Gaithersburg, MD 20899. Abstract: This paper describes the use of a massively parallel SIMD computer architecture for the simulation of various forms of computer arithmetic. The particular system used is a DEC/MasPar MP-1 with 4096 processors in a square array. This architecture has many advantages for such simulations, due largely to the simplicity of the individual processors. Arithmetic operations can be spread across the processor array to simulate a hardware chip. Alternatively, they may be performed on individual processors to allow simulation of a massively parallel implementation of the arithmetic. Compromises between these extremes permit speed-area trade-offs to be examined. The paper includes a description of the architecture and its features. It then summarizes some of the arithmetic systems which have been, or are to be, implemented.
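The abstract contrasts spreading one operation across many processors with giving each processor its own operation. The sketch below illustrates the second mode under an assumed bit-serial model: every simulated processing element (PE) adds its own operand pair, and all PEs step through the bit positions in lockstep, one full-adder step per "instruction". The function name and the one-bit-per-step model are our assumptions for illustration, not a description of the MP-1's actual PE datapath or of the authors' code.

```python
def simd_bit_serial_add(a, b, width):
    """Add a[k] + b[k] on every simulated PE k, one bit position per SIMD step.

    Hypothetical model: every PE executes the same full-adder step in
    lockstep and keeps its own carry bit. Results wrap modulo 2**width,
    as a fixed-width hardware adder would (the final carry-out is dropped).
    """
    n = len(a)
    carry = [0] * n
    result = [0] * n
    for bit in range(width):  # one broadcast "instruction" per bit position
        for k in range(n):    # conceptually simultaneous across all PEs
            x = (a[k] >> bit) & 1
            y = (b[k] >> bit) & 1
            s = x ^ y ^ carry[k]                         # full-adder sum bit
            carry[k] = (x & y) | (carry[k] & (x ^ y))    # full-adder carry out
            result[k] |= s << bit
    return result
```

For example, `simd_bit_serial_add([3, 200, 255], [5, 100, 1], 8)` returns `[8, 44, 0]`: the second and third sums overflow 8 bits and wrap. Swapping the full-adder body for a different rule (say, a saturating or redundant-digit adder) is how one would experiment with alternative arithmetic in this style; the cost is `width` lockstep steps regardless of how many PEs participate.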

The Race Continues

SLALOM Update: The Race Continues. John Gustafson, Diane Rover, Stephen Elbert, and Michael Carter, Ames Laboratory, DOE, Ames, Iowa.
Last November, we introduced in these pages a new kind of computer benchmark: a complete scientific problem that scales to the amount of computing power available, and always runs in the same amount of time… one minute. SLALOM assigns no penalty for novelty in language or architecture, and runs on computers as different as an Alliant, a MasPar, an nCUBE, and a Toshiba notebook PC. Since that time, there have been several developments:
• The number of computer systems in the list has more than doubled.
• The algorithms have improved.
• An annual award for SLALOM performance has been announced.
• SLALOM is the judge for at least one competitive supercomputer procurement.
• The massively parallel contenders are starting to unseat the low-end Cray computers.
• All but a few major scientific computer manufacturers are represented in our report.
• Many of the original numbers have improved significantly.
Most Wanted List
We're still waiting to hear results for a few major players in supercomputing: Thinking Machines, Convex, MEIKO, and Stardent haven't sent anything to us, nor have any of their customers. We'd also very much like numbers for the WaveTracer and Active Memory Technology computers. Our Single-Instruction, Multiple-Data (SIMD) version has been improved since the last Supercomputing Review article, so the groups working on those machines might want to check it out as a better starting point (see inset). The only IBM mainframe measurements are nonparallel and nonvector, so we expect big improvements to its performance.
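SLALOM fixes the time (one minute) and reports how large a problem a machine can solve, rather than fixing the problem and timing it. A minimal sketch of that fixed-time idea, assuming run time grows monotonically with problem size n and eventually exceeds the budget: grow n geometrically until the budget is exceeded, then binary-search the boundary. `fixed_time_size` and `measured` are hypothetical helpers, and any cost model passed in stands in for the real SLALOM problem.

```python
import time
from typing import Callable


def fixed_time_size(run_time: Callable[[int], float], budget: float = 60.0) -> int:
    """Largest problem size n whose run time fits within `budget` seconds.

    Assumes run_time(n) is nondecreasing in n and eventually exceeds the
    budget (otherwise the doubling phase would never stop).
    Returns 0 if even n = 1 does not fit.
    """
    if run_time(1) > budget:
        return 0
    lo, hi = 1, 2
    # Doubling phase: find an upper bound that overshoots the budget.
    while run_time(hi) <= budget:
        lo, hi = hi, hi * 2
    # Invariant from here on: run_time(lo) <= budget < run_time(hi).
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if run_time(mid) <= budget:
            lo = mid
        else:
            hi = mid
    return lo


def measured(work: Callable[[int], None]) -> Callable[[int], float]:
    """Wrap a workload so that calling the result returns its wall-clock time."""
    def run_time(n: int) -> float:
        start = time.perf_counter()
        work(n)
        return time.perf_counter() - start
    return run_time
```

With a synthetic cost model in which a size-n run takes n³/10⁶ seconds, `fixed_time_size(lambda n: n**3 / 1e6)` returns 391, since 391³ ≈ 5.98 × 10⁷ fits in 60 seconds while 392³ ≈ 6.02 × 10⁷ does not. With `measured`, timing noise near the boundary can break the monotonicity assumption, so a real harness would likely repeat or median-filter measurements.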