Neuroimaging Studies on Familiarity of Music in Children with Autism Spectrum Disorder

Total pages: 16

File type: PDF, Size: 1020 KB


Recommended publications

-

Madonna: Kabbalah with the Kids! the Cast of the Bold Type Are Super Talented, So It’S Not Really Surprising to Madonna Makes a Quick Entrance Into Know That

JJ Jr. RSS Twitter Facebook Instagram MAIN ABOUT US EXCLUSIVE CONTACT SEND TIPS Search Chris Pine & Annabelle Wallis Miranda Lambert Mentions Will Smith & Jada Pinkett Smith You Won't Believe What One Hold Hands, Look So Cute Blake Shelton's Girlfriend Don't Say They're Married Woman Did to Protest Donald Together in London! Gwen Stefani in New Interview Anymore Find Out Why Trump (Hint: It's Terrifying) Older Newer SUN, 10 MARCH 2013 AT 7:30 PM Tweet 'The Bold Type' Cast Really Wants To Do A Musical Episode Madonna: Kabbalah with the Kids! The cast of The Bold Type are super talented, so it’s not really surprising to Madonna makes a quick entrance into know that... the Kabbalah Center while all bundled up for the chilly day on Saturday (March 9) in New York City. 'Free Rein' Returns To Netflix For The 54yearold entertainer was Season 2 on July 6th! Free Rein is almost here again and JJJ is accompanied by three of her children, so excited! The new season will center Lourdes, 16, Mercy, 8, and David, 7. on Zoe Phillips as... Last week, Madonna and Lourdes met up with some dancers from her MDNA tour at Antica Pesa in the Williamsburg Justin Bieber & GF Hailey Baldwin section of Brooklyn, where they dined on Head Out to Brooklyn... Justin Bieber and GF Hailey Baldwin are yummy Italian cuisine. continuing to have so much fun together! The 24yearold... An onlooker commented that Madonna seemed like the “coolest mom in the world,” according to People. -

A Case Study of Russia and Ukraine's Use of the Eurovision Song

Lund University FKVK02 Department of Political Science Spring 2020 Peace and Conflict Studies Supervisor: Fredrika Larsson The Battle of Eurovision A case study of Russia and Ukraine’s use of the Eurovision Song Contest as a cultural battlefield Character count: 59 832 Julia Orinius Welander Abstract This study aims to explore and analyze how Eurovision Song Contest functioned as an alternative – cultural – battlefield in the Russian-Ukrainian conflict over Crimea. With the use of soft power politics in warfare as the root of interest, this study uses the theories of cultural diplomacy and visual international relations to explore how images may be central to modern-day warfare and conflicts as the perception of. The study has a theory-building approach and aims to build on the concept of cultural diplomacy in order to explain how the images sent out by states can be politized and used to conduct cultural warfare. To explore how Russia and Ukraine used Eurovision Song Contest as a cultural battlefield this study uses the methodological framework of a qualitative case study with the empirical data being Ukraine’s and Russia’s Eurovision Song Contest performances in 2016 and 2017, respectively, which was analyzed using Roland Barthes’ method of image analysis. The main finding of the study was that both Russia and Ukraine used ESC as a cultural battlefield on which they used their performances to alter the perception of themselves and the other by instrumentalizing culture for political gain. Keywords: cultural diplomacy, visual IR, Eurovision Song Contest, Crimea Word count: 9 732 Table of Contents 1 Introduction ............................................................................................................ -

Touchstones of Popular Culture Among Contemporary College Students in the United States

Minnesota State University Moorhead RED: a Repository of Digital Collections Dissertations, Theses, and Projects Graduate Studies Spring 5-17-2019 Touchstones of Popular Culture Among Contemporary College Students in the United States Margaret Thoemke [email protected] Follow this and additional works at: https://red.mnstate.edu/thesis Part of the Higher Education and Teaching Commons Recommended Citation Thoemke, Margaret, "Touchstones of Popular Culture Among Contemporary College Students in the United States" (2019). Dissertations, Theses, and Projects. 167. https://red.mnstate.edu/thesis/167 This Thesis (699 registration) is brought to you for free and open access by the Graduate Studies at RED: a Repository of Digital Collections. It has been accepted for inclusion in Dissertations, Theses, and Projects by an authorized administrator of RED: a Repository of Digital Collections. For more information, please contact [email protected]. Touchstones of Popular Culture Among Contemporary College Students in the United States A Thesis Presented to The Graduate Faculty of Minnesota State University Moorhead By Margaret Elizabeth Thoemke In Partial Fulfillment of the Requirements for the Degree of Master of Arts in Teaching English as a Second Language May 2019 Moorhead, Minnesota iii Copyright 2019 Margaret Elizabeth Thoemke iv Dedication I would like to dedicate this thesis to my three most favorite people in the world. To my mother, Heather Flaherty, for always supporting me and guiding me to where I am today. To my husband, Jake Thoemke, for pushing me to be the best I can be and reminding me that I’m okay. Lastly, to my son, Liam, who is my biggest fan and my reason to be the best person I can be. -

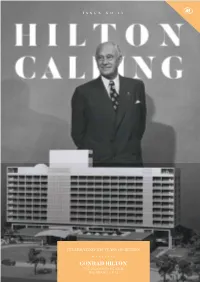

CONRAD HILTON the VISIONARY BEHIND the BRAND – P.12 Visit → Businessventures.Com.Mt

ISSUE NO.13 CELEBRATING 100 YEARS OF HILTON CONRAD HILTON THE VISIONARY BEHIND THE BRAND – P.12 Visit → businessventures.com.mt Surfacing the most beautiful spaces Marble | Quartz | Engineered Stone | Granite | Patterned Tiles | Quartzite | Ceramic | Engineered Wood Halmann Vella Ltd, The Factory, Mosta Road, Lija. LJA 9016. Malta T: (+356) 21 433 636 E: [email protected] www.halmannvella.com Surfacing the most beautiful spaces Marble | Quartz | Engineered Stone | Granite | Patterned Tiles | Quartzite | Ceramic | Engineered Wood Halmann Vella Ltd, The Factory, Mosta Road, Lija. LJA 9016. Malta T: (+356) 21 433 636 E: [email protected] www.halmannvella.com FOREWORD Hilton Calling Dear guests and friends of the Hilton Malta, Welcome to this very special edition of our magazine. The 31st of May 2019 marks the 100th anniversary of the opening of the first Hilton hotel in the world, in Cisco, Texas, in the United States of America. Since that milestone date in 1919, the brand has gone from strength to strength. Globally, there are currently just under 5700 hotels, in more than 100 countries, under a total of 17 brands, with new properties opening every day. Hilton has been EDITOR bringing memorable experiences to countless guests, clients and team members Diane Brincat for a century, returned value to the owners who supported it, and made a strongly positive impact on the various communities in which it operates. We at the Hilton MAGAZINE COORDINATOR Malta are proud to belong to this worldwide family, and to join in fulfilling its Rebecca Millo mission – in Conrad Hilton’s words, to ‘fill the world with the light and warmth of hospitality’ – for at least another 100 years. -

Dschinghis Khan HISTORY MIT RS V04 MIT BILDERN

DIE GESCHICHTE DER POPGRUPPE DSCHINGHIS KHAN Mit Ihren Hits wie Dschinghis Khan – Moskau – Hadschi Halef Omar – Rocking Son of Dschinghis Khan – Pistolero - Mexiko und vielen, vielen mehr eroberte die deutschsprachige Popgruppe Dschinghis Khan als THE CONQUEROR IN MUSIC bis heute die Welt. Alles begann 1979. Der Komponist und Produzent, Ralph Siegel, und der Textdichter, Dr. Bernd Meinunger , schrieben das Lied DSCHINGHIS KHAN, mit welchem die gleichnamige Gruppe DSCHINGHIS KHAN zum Vorentscheid des Deutschen Beitrags für den EUROVISION SONG CONTES T – EIN LIED FÜR ISRAEL – am 17. März 1979 in der Rudi – Sedlma yer in München antratt. Dschinghis Khan, das waren die Künstler Wolfgang Heichel, Steve Bender, Edina Pop, Henriette Strobel, Leslie Mandoki und der talentierte Tänzer Louis Potgieter. Innerhalb von nur 6 Wochen, also unter immensem Zeitdruck und mit hohem finanziellem Aufwand, wurden im Siegel Studio die Plattenproduktion der ersten Single – Dschinghis Khan und Sahara – produziert. Vom angesagten Kostümdesigner , Marc Manó, die exot ischen Kostüme geschneidert; von der international arbeitenden Visagisten, Heidi Moser , die markanten Make ups gestaltet; vom Choreographie-Profi für Fernsehshows , Hannes Winkler, wurde die tänzerische Bühnenshow entwickelt und die Künstler von Dschinghis Khan waren je den Tag im Studio und probten, probten und probten, solange, bis die Bühnenshow perfekt war. Dann kam der große Tag. Am 17. März 1979 war in der Rudi-Sedlmayr-Halle in München die Premiere. Die Popgruppe Dschinghis Khan nahm mit ihrem gleichnamigen Titel „ Dschinghis Khan“ an der Deutschen Vorentscheidung zum EUROVISION SONG CONTEST – EIN LIED FÜR ISRAEL - teil. Drei Minuten hatte Dschinghis Khan Zeit , dem deutschen Fernsehpublikum und den Juroren zu zeigen, das s sie den besten Beitrag präsentieren würden. -

Songs by Title

Songs by Title Title Artist Title Artist #1 Goldfrapp (Medley) Can't Help Falling Elvis Presley John Legend In Love Nelly (Medley) It's Now Or Never Elvis Presley Pharrell Ft Kanye West (Medley) One Night Elvis Presley Skye Sweetnam (Medley) Rock & Roll Mike Denver Skye Sweetnam Christmas Tinchy Stryder Ft N Dubz (Medley) Such A Night Elvis Presley #1 Crush Garbage (Medley) Surrender Elvis Presley #1 Enemy Chipmunks Ft Daisy Dares (Medley) Suspicion Elvis Presley You (Medley) Teddy Bear Elvis Presley Daisy Dares You & (Olivia) Lost And Turned Whispers Chipmunk Out #1 Spot (TH) Ludacris (You Gotta) Fight For Your Richard Cheese #9 Dream John Lennon Right (To Party) & All That Jazz Catherine Zeta Jones +1 (Workout Mix) Martin Solveig & Sam White & Get Away Esquires 007 (Shanty Town) Desmond Dekker & I Ciara 03 Bonnie & Clyde Jay Z Ft Beyonce & I Am Telling You Im Not Jennifer Hudson Going 1 3 Dog Night & I Love Her Beatles Backstreet Boys & I Love You So Elvis Presley Chorus Line Hirley Bassey Creed Perry Como Faith Hill & If I Had Teddy Pendergrass HearSay & It Stoned Me Van Morrison Mary J Blige Ft U2 & Our Feelings Babyface Metallica & She Said Lucas Prata Tammy Wynette Ft George Jones & She Was Talking Heads Tyrese & So It Goes Billy Joel U2 & Still Reba McEntire U2 Ft Mary J Blige & The Angels Sing Barry Manilow 1 & 1 Robert Miles & The Beat Goes On Whispers 1 000 Times A Day Patty Loveless & The Cradle Will Rock Van Halen 1 2 I Love You Clay Walker & The Crowd Goes Wild Mark Wills 1 2 Step Ciara Ft Missy Elliott & The Grass Wont Pay -

DEUTSCHE KARAOKE LIEDER 2Raumwohnung 36 Grad DUI-7001

DEUTSCHE KARAOKE LIEDER 2Raumwohnung 36 Grad DUI-7001 Adel Tawil Ist Da Jemand DUI-7137 Amigos 110 Karat DUI-7166 Andrea Berg Das Gefuhl DUI-7111 Andrea Berg Davon Geht Mein Herz Nicht Unter DUI-7196 Andrea Berg Du Bist Das Feuer DUI-7116 Andrea Berg Du Hast Mich 1000 Mal Belogen DUI-7004 Andrea Berg Feuervogel DUI-7138 Andrea Berg Himmel Auf Erden DUI-7112 Andrea Berg In Dieser Nacht DUI-7003 Andrea Berg Lass Mich In Flammen Stehen DUI-7117 Andrea Berg Lust Auf Pures Leben DUI-7118 Andrea Berg Mosaik DUI-7197 Andrea Berg Wenn Du Jetzt Gehst, Nimm Auch Deine Liebe Mit DUI-7002 Andreas Gabalier I Sing A Liad Für Di DUI-7054 Andreas MarWn Du Bist Alles (Maria Maria) DUI-7139 Anna-Maria Zimmermann Du Hast Mir So Den Kopf Verdreht DUI-7119 Anna-Maria Zimmermann Scheiß Egal DUI-7167 Anstandslos & Durchgeknallt Egal DUI-7168 Beatrice Egli Fliegen DUI-7120 Beatrice Egli Keiner Küsst Mich (So Wie Du) DUI-7175 Beatrice Egli Mein Ein Und Alles DUI-7176 Beatrice Egli Was Geht Ab DUI-7177 Bill Ramsey Ohne Krimi Geht Die Mimi Nie Ins Bea DUI-7063 Cassandra Steen & Adel Tawil Stadt DUI-7055 Charly Brunner Wahre Liebe DUI-7178 Chris Roberts Du Kannst Nicht Immer 17 Sein DUI-7005 Chris Roberts Ich Bin Verliebt In Die Liebe DUI-7134 ChrisWan Anders Einsamkeit Had Viele Namen DUI-7006 ChrisWna Sturmer Engel Fliegen Einsam DUI-7007 ChrisWna Stürmer Mama Ana Ahabak DUI-7008 Cindy & Bert Immer Wieder Sonntags DUI-7136 Claudia Jung Mein Herz Lässt Dich Nie Allein DUI-7009 Conny & Peter Alexander Verliebt Verlobt Verheiratet DUI-7010 Conny Francis Schöner Fremder -

Verbotsliste Zum Members´ Favourite 2012 (MF 2012)

Verbotsliste zum Members´ Favourite 2012 (MF 2012) Interpret Titel Wettbewerb MF = Members´ Favourite (ab 01) OGAEVC = OGAE Video Contest OGAESC = OGAE Song Contest SCC = Second Chance Contest MSC = Member Song Contest (bis 00) auch nicht erlaubt: alle ESC-Final-Lieder von 1956 - 2012 auch nicht erlaubt: alle ESC-Semifinal-Lieder von 2004 - 2012 auch nicht erlaubt: alle ESC- Vorentscheidungs-Titel 2012 2 Eivissa Viva la fiesta MF 02 2Raumwohnung 2 von Millionen von Sternen MF 05 2raumwohnung Besser geht's nicht OGAEVC 07 3Js De stroom SCC 11 4 Elements Kludu labojums MF 04 4´33" Ave Maria Laudata SCC 02 7 Up Daj, spusti se SCC 99 A1 Don´t wanna lose you again SCC 10 Aalto, Saara Blessed with love SCC 11 Aardvarks Disguised by the night MSC 97 ABBA oder Abba As good as new MF 05 ABBA oder Abba Dream world MSC 95 ABBA oder Abba No hay a quien culpar MSC 99 ABBA oder Abba One of us MSC 99 ABBA oder Abba Put on your white sombrero MSC 00 ABBA oder Abba S.O.S. MSC 00 ABBA oder Abba Slipping through my fingers MF 11 Abel Ademloos MF 05 Abel Onderweg MF 02 Abel, Morten The Birmingham Ho OGAEVC 04 Abi 93 Lauf, Siggi, lauf MSC 95 Abrahamsen, Jannicke Rocket ride MF 08 Abreu, Anna Perdone-me MF 09 Academia Operación Triunfo Mi música es tu voz MF 02 Acapella Army of the Lord MSC 00 Ace of Base Black Sea MF 11 Adam Yachad na'amod SCC 91 Adams, Oleta Get here MSC 98 Adele Set fire to the rain MF 11 Adele Someone like you OGAESC 11 Adini, Dana & Salomon, Daniel Rabot hadrachim MF 08 Adler, Ines Ich war so lange nicht verliebt MF 11 Adomaitis, Linas Floating to you SCC 11 Adoro Horizont MF 11 Adrian, Benedicte & Ingrid oder Björnov Kanskje i morgen OGAESC 00 After Dark (Åh) När ni tar saken i egna händer MF 07 Agnes Release me MF 10 Aguilar, Feddy Anak MSC 98 Aharoni, Chen Or SCC 11 Aika Stay SCC 02 Aikakone Alla vaahterapuun OGAESC 96 Ainbrusk Singers Lassie OGAESC 91 Ainhoa Mi razón de vivir SCC 03 Air & Hardy, Françoise oder Francoise Jeanne MSC 99 Airport Impressions Freedom 
OGAESC 11 Åkerström, C.-S. -

Sorted by ARTIST * * * * Please Note That The

* * * * Sorted by ARTIST * * * * Please note that the artist could be listed by FIRST NAME, or preceded by 'THE' or 'A' Title Artist YEAR ----------------------------------------- ------------------------ ----- 7764 BURN BREAK CRASH 'ANYSA X SNAKEHIPS 2017 2410 VOICES CARRY 'TIL TUESDAY 1985 2802 GET READY 4 THIS 2 UNLIMITED 1995 9144 MOOD 24KGOLDN 2021 8180 WORRY ABOUT YOU 2AM CLUB 2010 2219 BE LIKE THAT 3 DOORS DOWN 2001 6620 HERE WITHOUT YOU 3 DOORS DOWN 2003 1517 KRYPTONITE 3 DOORS DOWN 2000 5216 LET ME GO 3 DOORS DOWN 2005 0914 HOLD ON LOOSELY 38 SPECIAL 1981 8115 DON'T TRUST ME 3OH!3 2009 8214 MY FIRST KISS 3OH!3/ KE$HA 2010 7336 AMNESIA 5 SECONDS OF SUMMER 2014 8710 EASIER 5 SECONDS OF SUMMER 2019 7312 SHE LOOKS SO PERFECT 5 SECONDS OF SUMMER 2014 8581 WANT YOU BACK 5 SECONDS OF SUMMER 2018 8611 YOUNGBLOOD 5 SECONDS OF SUMMER 2018 7413 IN DA CLUB 50 CENT 2004 8758 ALL MY FRIENDS ARE FAKE 58TE McRAE 2020 2805 TOOTSIE ROLL 69 BOYZ 1995 8776 SAY SO 71JA CAT 2020 7318 ALREADY HOME A GREAT BIG WORLD 2014 7117 THIS IS THE NEW YEAR A GREAT BIG WORLD 2013 3109 BACK AND FORTH AALIYAH 1994 4809 MORE THAN A WOMAN AALIYAH 2002 1410 TRY AGAIN AALIYAH 2000 7744 FOOL'S GOLD AARON CARTER 2016 2112 I MISS YOU AARON HALL 1994 2903 DANCING QUEEN ABBA 1977 6157 THE LOOK OF LOVE (PART ONE) ABC 1982 8542 ODYSSEY ACCIDENTALS 2017 8154 WHATAYA WANT FROM ME ADAM LAMBERT 2010 8274 ROLLING IN THE DEEP ADELE 2011 8369 SOMEONE LIKE YOU ADELE 2012 5964 BACK IN THE SADDLE AEROSMITH 1977 5961 DREAM ON AEROSMITH 1973 5417 JADED AEROSMITH 2001 5962 SWEET EMOTION AEROSMITH 1975 5963 WALK THIS WAY AEROSMITH 1976 8162 RELEASE ME AGNES 2010 9132 BANG! AJR 2020 6906 I WANNA LOVE YOU AKON FEAT SNOOP DOGG 2007 7810 LOCKED UP AKON feat STYLES P. -

Západočeská Univerzita V Plzni FAKULTA PEDAGOGICKÁ KATEDRA HUDEBNÍ KULTURY

Západočeská univerzita v Plzni FAKULTA PEDAGOGICKÁ KATEDRA HUDEBNÍ KULTURY KRAJSKÁ HUDEBNÍ SCÉNA PO ROCE 2000 BAKALÁŘSKÁ PRÁCE František Marek Popularizace hudební kultury léta studia (2010 - 2013) Vedoucí práce: Mgr. Vít Aschenbrenner, Ph.D. Plzeň, březen 2013 Prohlašuji, že jsem bakalářskou práci vypracoval samostatně s použitím uvedené literatury a zdrojů informací. Plzeň, …………………………………………… vlastnoruční podpis Poděkování Chtěl bych vyjádřit své poděkování vedoucímu mé bakalářské práce panu Mgr. Vítu Aschenbrennerovi, Ph.D. za jeho cenné rady a připomínky. …………………………………………………… OBSAH ÚVOD ..............................................................................................................................................6 TEORETICKÁ ČÁST ...............................................................................................................................8 1 HUDEBNÍ LOKALITY ........................................................................................................................8 1.1 PLZEŇ .................................................................................................................................9 1.2 DALŠÍ MĚSTA ...................................................................................................................... 16 1.3 VENKOV ............................................................................................................................ 23 2 VÝZNAMNÉ HUDEBNÍ AKCE ........................................................................................................... -

Songs by Artist 08/29/21

Songs by Artist 09/24/21 As Sung By Song Title Track # Alexander’s Ragtime Band DK−M02−244 All Of Me PM−XK−10−08 Aloha ’Oe SC−2419−04 Alphabet Song KV−354−96 Amazing Grace DK−M02−722 KV−354−80 America (My Country, ’Tis Of Thee) ASK−PAT−01 America The Beautiful ASK−PAT−02 Anchors Aweigh ASK−PAT−03 Angelitos Negros {Spanish} MM−6166−13 Au Clair De La Lune {French} KV−355−68 Auld Lang Syne SC−2430−07 LP−203−A−01 DK−M02−260 THMX−01−03 Auprès De Ma Blonde {French} KV−355−79 Autumn Leaves SBI−G208−41 Baby Face LP−203−B−07 Beer Barrel Polka (Roll Out The Barrel) DK−3070−13 MM−6189−07 Beyond The Sunset DK−77−16 Bill Bailey, Won’t You Please Come Home? DK−M02−240 CB−5039−3−13 B−I−N−G−O CB−DEMO−12 Caisson Song ASK−PAT−05 Clementine DK−M02−234 Come Rain Or Come Shine SAVP−37−06 Cotton Fields DK−2034−04 Cry Like A Baby LAS−06−B−06 Crying In The Rain LAS−06−B−09 Danny Boy DK−M02−704 DK−70−16 CB−5039−2−15 Day By Day DK−77−13 Deep In The Heart Of Texas DK−M02−245 Dixie DK−2034−05 ASK−PAT−06 Do Your Ears Hang Low PM−XK−04−07 Down By The Riverside DK−3070−11 Down In My Heart CB−5039−2−06 Down In The Valley CB−5039−2−01 For He’s A Jolly Good Fellow CB−5039−2−07 Frère Jacques {English−French} CB−E9−30−01 Girl From Ipanema PM−XK−10−04 God Save The Queen KV−355−72 Green Grass Grows PM−XK−04−06 − 1 − Songs by Artist 09/24/21 As Sung By Song Title Track # Greensleeves DK−M02−235 KV−355−67 Happy Birthday To You DK−M02−706 CB−5039−2−03 SAVP−01−19 Happy Days Are Here Again CB−5039−1−01 Hava Nagilah {Hebrew−English} MM−6110−06 He’s Got The Whole World In His Hands -

Darude Sandstorm Download Mp3

1 / 2 Darude Sandstorm Download Mp3 Darude - Sandstorm download mp3 · Artist: Darude · Song: Sandstorm · Genre: Pop · Length: 07:03.. Download and print in PDF or MIDI free sheet music for Sandstorm by Darude ... The track was uploaded to MP3.com where it gained global recognition. Trance.. Darude - Sandstorm.mp3.meta · ambient sound, 3 months ago. Duck Toy Squeak Dog Toy Sound Effect (download)-[AudioTrimmer.com].mp3 · sounds .... I'm looking for some way to convert mp3 files into MML. Darude - Sandstorm MIDI Artist Darude: Title Sandstorm Free Download Play MIDI Stop MIDI: Convert .... Get all songs from Darude Sandstorm di Gold Mp3. Download a collection list of songs from Darude Sandstorm easily, free as much as you like, and enjoy!. Darude - Music mp3. Darude - Music Written & arranged by V. · Darude - Music (Original Version) (2003) mp3 · Darude - Sandstorm mp3 · Darude - Music mp3 ... Free Download Darude Sandstorm Marimba Remix Ringtone in high quality. You can download this ringtone in 2 formats:m4r for iPhone, mp3 for android.. Darude - Feel The Beat mp3 Duration 4:12 Size 9.61 MB / Darude 4. Download Darude Sandstorm MP3 Free. All Genres Balearic/Downtempo Bass Breakbeat .... coryxkenshin outro darude sandstorm (4.7 MB) mp3 download really free http://disq.us/t/29kfr1j. 10:54 PM - 22 Jun 2016. 0 replies 0 retweets 0 likes. Reply.. Darude Sandstorm (mp3 Download), 7SuperNova, 04:05, PT4M5S, 5.61 MB, 124902, 660, 43, 2008-10-11 08:44:04, 2021-03-26 00:34:27, Mp3 Lists, .... Addeddate: 2018-11-01 00:53:19. Identifier: whatisthemusictitle_201811. Scanner: Internet Archive HTML5 Uploader 1.6.3.