Measuring the Research Productivity of Political Science Departments Using Google Scholar

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Analyzing Change in International Politics: the New Institutionalism and the Interpretative Approach

Analyzing Change in International Politics: The New Institutionalism and the Interpretative Approach - Guest Lecture - Peter J. Katzenstein* 90/10 This discussion paper was presented as a guest lecture at the MPI für Gesellschaftsforschung, Köln, on April 5, 1990 Max-Planck-Institut für Gesellschaftsforschung Lothringer Str. 78 D-5000 Köln 1 Federal Republic of Germany MPIFG Discussion Paper 90/10 Telephone 0221/ 336050 ISSN 0933-5668 Fax 0221/ 3360555 November 1990 * Prof. Peter J. Katzenstein, Cornell University, Department of Government, McGraw Hall, Ithaca, N.Y. 14853, USA 2 MPIFG Discussion Paper 90/10 Abstract This paper argues that realism misinterprets change in the international system. Realism conceives of states as actors and international regimes as variables that affect national strategies. Alternatively, we can think of states as structures and regimes as part of the overall context in which interests are defined. States conceived as structures offer rich insights into the causes and consequences of international politics. And regimes conceived as a context in which interests are defined offer a broad perspective of the interaction between norms and interests in international politics. The paper concludes by suggesting that it may be time to forego an exclusive reliance on the Euro-centric, Western state system for the derivation of analytical categories. Instead we may benefit also from studying the historical experience of Asian empires while developing analytical categories which may be useful for the analysis of current international developments. ***** This essay argues that the "realist" approach to foreign policy theory misinterprets change in the international system. It conceives of states as actors and international regimes as variables that influence national strategies. -

PSC 760R Proseminar in Comparative Politics Fall 2016

PSC 760R Proseminar in Comparative Politics Fall 2016 Professor: Office: Office Phone: Office Hours: Email: Course Description and Learning Outcomes Comparative politics is perhaps the broadest field of political science. This course will introduce students to the major theoretical approaches employed in comparative politics. The major debates and controversies in the field will be examined. Although some associate comparative politics with “the comparative method,” those conducting research in the area of comparative politics use a multitude of methodologies and pursue diverse topics. In this course, students will analyze and discuss the theoretical approaches and methods used in comparative politics. Course Requirements Class participation and attendance. Students must come to class prepared, having completed all of the required reading, and ready to actively discuss the material at hand. Each student must submit comments/questions on the assigned readings for six classes (not including the class you help facilitate). Please e-mail them to me by noon on the day of class. Students are expected to attend each class. Missing classes will have a deleterious effect on this portion of the grade. Arriving late, leaving early, or interrupting class with a phone or other electronic device will also result in a drop in the student’s grade. Students are not allowed to sleep, read newspapers or anything else, listen to headphones, TEXT, or talk to others during class. You must turn off all electronic devices during class. Any exceptions must be cleared with me in advance. Laptop computers and iPads are allowed for taking notes ONLY. I reserve the right to ban laptops, iPads, and so forth. -

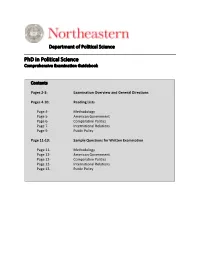

PhD in Political Science Comprehensive Examination Guidebook

Department of Political Science PhD in Political Science Comprehensive Examination Guidebook Contents Pages 2-3: Examination Overview and General Directions Pages 4-10: Reading Lists Page 4- Methodology Page 5- American Government Page 6- Comparative Politics Page 7- International Relations Page 9- Public Policy Pages 11-13: Sample Questions for Written Examination Page 11- Methodology Page 12- American Government Page 12- Comparative Politics Page 12- International Relations Page 13- Public Policy EXAMINATION OVERVIEW AND GENERAL DIRECTIONS Doctoral students sit for the comprehensive examination at the conclusion of all required coursework, or during their last semester of coursework. Students will ideally take their exams during the fifth semester in the program, but no later than their sixth semester. Advanced Entry students are strongly encouraged to take their exams during their fourth semester, but no later than their fifth semester. The comprehensive examination is a written exam based on the literature and research in the relevant field of study and on the student’s completed coursework in that field. Petitioning to Sit for the Examination Your first step is to petition to participate in the examination. Use the Department’s graduate petition form and include the following information: 1) general statement of intent to sit for a comprehensive examination, 2) proposed primary and secondary field areas (see below), and 3) a list or table listing all graduate courses completed along with the faculty instructor for the course and the grade earned. This petition should be completed early in the registration period for when the student plans to sit for the exam. -

Toward a More Democratic Congress?

TOWARD A MORE DEMOCRATIC CONGRESS? OUR IMPERFECT DEMOCRATIC CONSTITUTION: THE CRITICS EXAMINED STEPHEN MACEDO* INTRODUCTION; I. SENATE MALAPPORTIONMENT AND POLITICAL EQUALITY; II. IN DEFENSE OF THE SENATE; III. CONSENT AS A DEMOCRATIC VIRTUE; IV. REDISTRICTING AND THE ELECTORAL COLLEGE REFORM?; V. THE PROBLEM OF GRIDLOCK, MINORITY VETOES, AND STATUS-QUO BIAS: UNCLOGGING THE CHANNELS OF POLITICAL CHANGE?; CONCLUSION. INTRODUCTION There is much to admire in the work of those recent scholars of constitutional reform – including Sanford Levinson, Larry Sabato, and prior to them, Robert Dahl – who propose to reinvigorate our democracy by “correcting” and “revitalizing” our Constitution. They are right to warn that “Constitution worship” should not supplant critical thinking and sober assessment. There is no doubt that our 220-year-old founding charter – itself the product of compromise and consensus, and not only scholarly musing – could be improved upon. Dahl points out that in 1787, “[h]istory had produced no truly relevant models of representative government on the scale the United States had already attained, not to mention the scale it would reach in years to come.”1 Political science has since progressed; as Dahl also observes, none of us “would hire an electrician equipped only with Franklin’s knowledge to do our wiring.”2 But our political plumbing is just as archaic. I, too, have participated in efforts to assess the state of our democracy, and co-authored a work that offers recommendations, some of which overlap with * Laurance S. Rockefeller Professor of Politics and the University Center for Human Values; Director of the University Center for Human Values, Princeton University. -

Theda Skocpol

NAMING THE PROBLEM What It Will Take to Counter Extremism and Engage Americans in the Fight against Global Warming Theda Skocpol Harvard University January 2013 Prepared for the Symposium on THE POLITICS OF AMERICA’S FIGHT AGAINST GLOBAL WARMING Co-sponsored by the Columbia School of Journalism and the Scholars Strategy Network February 14, 2013, 4-6 pm Tsai Auditorium, Harvard University CONTENTS Making Sense of the Cap and Trade Failure Beyond Easy Answers Did the Economic Downturn Do It? Did Obama Fail to Lead? An Anatomy of Two Reform Campaigns A Regulated Market Approach to Health Reform Harnessing Market Forces to Mitigate Global Warming New Investments in Coalition-Building and Political Capabilities HCAN on the Left Edge of the Possible Climate Reformers Invest in Insider Bargains and Media Ads Outflanked by Extremists The Roots of GOP Opposition Climate Change Denial The Pivotal Battle for Public Opinion in 2006 and 2007 The Tea Party Seals the Deal ii What Can Be Learned? Environmentalists Diagnose the Causes of Death Where Should Philanthropic Money Go? The Politics Next Time Yearning for an Easy Way New Kinds of Insider Deals? Are Market Forces Enough? What Kind of Politics? Using Policy Goals to Build a Broader Coalition The Challenge Named iii “I can’t work on a problem if I cannot name it.” The complaint was registered gently, almost as a musing after-thought at the end of a June 2012 interview I conducted by telephone with one of the nation’s prominent environmental leaders. My interlocutor had played a major role in efforts to get Congress to pass “cap and trade” legislation during 2009 and 2010. -

Theda Skocpol | Mershon Center for International Security Studies | the Ohio State Univ

Theda Skocpol | Mershon Center for International Security Studies | The Ohio State University. Citizenship Lecture Series: Theda Skocpol, "Voice and Inequality: The Transformation of American Civic Democracy". Theda Skocpol, Professor of Government and Sociology, Dean of the Graduate School of Arts and Sciences, Harvard University. Monday, Sept. 25, 2006, Noon, Mershon Center for International Security Studies, 1501 Neil Ave., Columbus, OH 43201. Theda Skocpol is the Victor S. Thomas Professor of Government and Sociology and Dean of the Graduate School of Arts and Sciences at Harvard University. She has also served as Director of the Center for American Political Studies at Harvard from 1999 to 2006. The author of nine books, nine edited collections, and more than seven dozen articles, Skocpol is one of the most cited and widely influential scholars in the modern social sciences. Her work has contributed to the study of comparative politics, American politics, comparative and historical sociology, U.S. history, and the study of public policy. Her first book, States and Social Revolutions: A Comparative Analysis of France, Russia, and China (Cambridge, 1979), won the 1979 C. Wright Mills Award and the 1980 American Sociological Association Award for a Distinguished Contribution to Scholarship. Her Protecting Soldiers and Mothers: The Political Origins of Social Policy in the United States (Harvard, 1992), won five scholarly awards. -

“Achieving Cooperation Under Anarchy,” by Robert Axelrod and Robert Keohane, 1985

Devarati Ghosh “Achieving Cooperation Under Anarchy,” by Robert Axelrod and Robert Keohane, 1985 · Definitions: remember that for cooperation to occur, there must be some combination of conflicting and shared interests. Cooperation is distinct from harmony, where there is a total identity of interests between states. Anarchy is the absence of common world government, but it does not imply that the world is devoid of organizational forms. · Three dimensions of structure affect the incentives for states to cooperate: the mutuality of interests, the shadow of the future, and the number of players. The mutuality of interests depends on an objective structure of material payoffs, as well as on actors’ perceptions of that structure. Changes in beliefs about other players, as well as about the optimal policy, may cause state leaders to prefer unilateral action. This is true of both political-economic and security issues. · The longer the shadow of the future, the more the costs of defection offset the short-term incentive to defect. This is more true of political-economic issues, where there is a greater likelihood of extended interaction, than of security issues, where a successful preemptive war would seriously advantage one of the players. Also important is the ability of states to identify defection, which Axelrod and Keohane believe does not distinguish political-economic issues from security ones. · Once defection has been identified, the next problem is sanctioning the cheater. This becomes significantly more difficult when there is a large number of actors. Punishing defection can be costly, so there must be enough incentive for states to punish defectors. -

SIS 802 Comparative Politics

Ph.D. Seminar in Comparative Politics SIS 802, Fall 2016 School of International Service American University COURSE INFORMATION Professor: Matthew M. Taylor Email: [email protected] Classes will be held on Tuesdays, 2:35-5:15pm Office hours: Wednesdays (11:30pm-3:30pm) and by appointment. In the case of appointments, please email me at least two days in advance to schedule. Office: SIS 350 COURSE DESCRIPTION Comparative political science is one of the four traditional subfields of political science. It differs from international relations in its focus on individual countries and regions, and its comparison across units – national, subnational, actors, and substantive themes. Yet it is vital to scholars of international relations, not least because of its ability to explain differences in the basic postures of national and subnational actors, as well as in its focus on key variables of interest to international relations, such as democratization, the organization of state decision-making, and state capacity. Both subfields have benefited historically from considerable methodological and theoretical cross-fertilization which has shaped the study of international affairs significantly. The first section of the course focuses on the epistemology of comparative political science, seeking to understand how we know what we know, the accumulation of knowledge, and the objectivity of the social sciences. The remainder of the course addresses substantive debates in the field, although students are encouraged to critically address the theoretical and methodological approaches that are used to explore these substantive issues. COURSE OBJECTIVES This course will introduce students to the field, analyzing many of the essential components of comparative political science: themes, debates, and concepts, as well as different theoretical and methodological approaches. -

Rewriting the Epic of America

One Rewriting the Epic of America IRA KATZNELSON “Is the traditional distinction between international relations and domestic politics dead?” Peter Gourevitch inquired at the start of his seminal 1978 article, “The Second Image Reversed.” His diagnosis—“perhaps”—was motivated by the observation that while “we all understand that international politics and domestic structures affect each other,” the terms of trade across the domestic and international relations divide had been uneven: “reasoning from international system to domestic structure” had been downplayed. Gourevitch’s review of the literature demonstrated that long-standing efforts by international relations scholars to trace the domestic roots of foreign policy to the interplay of group interests, class dynamics, or national goals1 had not been matched by scholarship analyzing how domestic “structure itself derives from the exigencies of the international system.”2 Gourevitch counseled scholars to turn their attention to the international system as a cause as well as a consequence of domestic politics. He also cautioned that this reversal of the causal arrow must recognize that international forces exert pressures rather than determine outcomes. “The international system, be it in an economic or politico-military form, is underdetermining. The environment may exert strong pulls but short of actual occupation, some leeway in the response to that environment remains.”3 A decade later, Robert Putnam turned to two-level games to transcend the question as to “whether -

Government 2010. Strategies of Political Inquiry, G2010

Government 2010. Strategies of Political Inquiry, G2010 Gary King, Robert Putnam, and Sidney Verba Thursdays 12-2pm, Littauer M-17 Gary King [email protected], http://GKing.Harvard.edu Phone: 617-495-2027 Office: 34 Kirkland Street Robert Putnam [email protected] Phone: 617-495-0539 Office: 79 JFK Street, T376 Sidney Verba [email protected] Phone: 617-495-4421 Office: Littauer Center, M18 Prerequisite or corequisite: Gov1000 Overview If you could learn only one thing in graduate school, it should be how to do scholarly research. You should be able to assess the state of a scholarly literature, identify interesting questions, formulate strategies for answering them, have the methodological tools with which to conduct the research, and understand how to write up the results so they can be published. Although most graduate level courses address these issues indirectly, we provide an explicit analysis of each. We do this in the context of a variety of strategies of empirical political inquiry. Our examples cover American politics, international relations, comparative politics, and other subfields of political science that rely on empirical evidence. We do not address certain research in political theory for which empirical evidence is not central, but our methodological emphases will be as varied as our substantive examples. We take empirical evidence to be historical, quantitative, or anthropological. Specific methodologies include survey research, experiments, non-experiments, intensive interviews, statistical analyses, case studies, and participant observation. Assignments Weekly reading assignments are listed below. Since our classes are largely participatory, be sure to complete the readings prior to the class for which they are assigned. -

Robert O. Keohane: the Study of International Relations*

Robert O. Keohane: The Study of International Relations* Peter A. Gourevitch, University of California, San Diego In electing Robert Keohane APSA president, members have made an interesting intellectual statement about the study of international relations. Keohane has been the major theoretical challenger in the past quarter century of a previous Association president, Kenneth Waltz, whose work dominated the debates in the field for many years. Keohane's writings, his debate with Waltz, his ideas about institutions, cooperation under anarchy, transnational relations, complex interdependence, ideas, and domestic politics now stand as fundamental reference points for current discussions in this field.1 Theorizing, methodology, extension, and integration: these nouns make a start of characterizing the contributions Robert Keohane has made to the study of international relations. Theorizing: Keohane challenges the realist analysis that anarchy and the security dilemma inevitably lead states into conflict, first with the concept of transnational relations, which undermines the centrality [...] Methodology: Keohane places the study of the strategic interaction among states onto the foundations of economics, game theory, and methodological individualism and encourages the application of positivist techniques [...] concepts, techniques, and approaches as the broader discipline. The field of international relations has been quite transformed between the early 1960s, when Keohane entered it, and the late 1990s, when his -

Government 601: Methods of Political Analysis I Fall 2003, Tuesday 7:00–10:00P (WE 104)

Government 601: Methods of Political Analysis I Fall 2003, Tuesday 7:00–10:00p (WE 104) Professors: Walter R. Mebane, Jr. (217 White Hall, 255-3868, [email protected], office hours: T 3–5, W 2–3) and Jonas Pontusson (205 White Hall, 255-6764, [email protected], office hours: MW 10–11). Assignment Due Dates: TBA, one weekly discussion paper as assigned (weeks 3–10, 12); October 28, “explanations” paper; November 11, research pre-proposal; November 18, Boolean exercise; December 15, research proposal. Reading Availability We will be reading large proportions of most of the following books, and most are worth having on the shelf, so you may want to buy them. On the other hand, several are expensive. Most of the books should also soon appear on reserve in the Government Reading Room in Olin (room 405). Photocopies of other required reading should also be available in the Reading Room. Browning, Christopher R. 2000. Nazi Policy, Jewish Workers, German Killers. New York: Cambridge UP. Browning, Christopher R. 1998. Ordinary Men: Reserve Police Battalion 101 and the Final Solution in Poland. New York: HarperCollins. Campbell, Donald T., and Julian C. Stanley. 1966. Experimental and Quasi-experimental Designs for Research. Chicago: Rand McNally. Goldhagen, Daniel Jonah. 1996. Hitler’s Willing Executioners: Ordinary Germans and the Holocaust. New York: Knopf. Golden, Miriam. 1997. Heroic Defeats. New York: Cambridge UP. Hedström, Peter, and Richard Swedberg, eds. 1998. Social Mechanisms: An Analytical Approach to Social Theory. New York: Cambridge UP. King, Gary, Robert O. Keohane and Sidney Verba.