Experience with Hadoop

Recommended publications

Open Source Software Packages

Hitachi Ops Center V. 10.3.1 Open Source Software Packages Contact Information: Hitachi Ops Center Project Manager Hitachi Vantara LLC 2535 Augustine Drive Santa Clara, California 95054 Name of Product/Product Version License Component aesh 2.4 Apache License, Version 2.0 aesh Extensions 1.8 Apache License, Version 2.0 aesh Readline 2.0 Apache License, Version 2.0 aesh Terminal API 2.0 Apache License, Version 2.0 @angular-builders/custom- 8.0.0-RC.0 The MIT License webpack @angular-devkit/build-angular 0.800.0-rc.2 The MIT License @angular-devkit/build-angular 0.803.25 The MIT License @angular-devkit/core 7.3.8 The MIT License @angular-devkit/schematics 7.3.8 The MIT License @angular/animations 7.2.15 The MIT License @angular/animations 8.2.14 The MIT License @angular/cdk 7.3.7 The MIT License Name of Product/Product Version License Component @angular/cli 8.0.0 The MIT License @angular/cli 8.3.25 The MIT License @angular/common 7.2.15 The MIT License @angular/common 8.2.14 The MIT License @angular/compiler 7.2.15 The MIT License @angular/compiler 8.2.14 The MIT License @angular/compiler-cli 8.2.14 The MIT License @angular/core 7.2.15 The MIT License @angular/forms 7.2.13 The MIT License @angular/forms 7.2.15 The MIT License @angular/forms 8.2.14 The MIT License @angular/forms 8.2.7 The MIT License @angular/language-service 8.2.14 The MIT License @angular/platform-browser 7.2.15 The MIT License @angular/platform-browser 8.2.14 The MIT License Name of Product/Product Version License Component @angular/platform-browser- 7.2.15 The MIT License -

A Review on Big Data Analytics in the Field of Agriculture

International Journal of Latest Transactions in Engineering and Science. A Review on Big Data Analytics in the Field of Agriculture. Harish Kumar M, Department of Computer Science and Engineering, Adhiyamaan College of Engineering, Hosur, Tamilnadu, India; Dr. T Menakadevi, Department of Electronics and Communication Engineering, Adhiyamaan College of Engineering, Hosur, Tamilnadu, India. Abstract: Big Data Analytics is a data-driven technology that can generate significant productivity improvements in various industries by collecting, storing, managing, processing and analyzing structured and unstructured data. In agriculture, big data offers an opportunity to increase farmers' economic gain through a digital revolution, which we examine through the precision agriculture schemes deployed in many countries. This paper reviews the applications of big data to support agriculture. In addition, it attempts to identify the tools that support the implementation of big data applications for agriculture services. The review reveals that several opportunities are available for utilizing big data in agriculture; however, there are still many issues and challenges to be addressed to achieve better utilization of this technology. Keywords: Agriculture, Big Data Analytics, Hadoop, HDFS, Farmers. I. INTRODUCTION The technologies employed are exciting, involve analysis of mind-numbing amounts of data, and require a fundamental rethinking of what constitutes data. Big data involves collecting raw data, which then passes through phases such as classification, processing and organization into meaningful information. Raw information cannot be consumed directly for any form of analysis. Big data analytics is the process of examining data to uncover patterns, find unknown correlations and extract useful information that supports decision making.

Apache HBase ™ Reference Guide

Apache HBase ™ Reference Guide. Apache HBase Team. Version 2.1.9. Contents: Preface; Getting Started; 1. Introduction; 2. Quick Start - Standalone HBase; Apache HBase Configuration; 3. Configuration Files; 4. Basic Prerequisites; 5. HBase run modes: Standalone and Distributed; 6. Running and Confirming Your Installation; 7. Default Configuration; 8. Example Configurations; 9. The Important Configurations; 10. Dynamic Configuration; Upgrading; 11. HBase version number and compatibility; 12. Rollback; 13. Upgrade Paths; The Apache HBase Shell; 14. Scripting with Ruby; 15. Running the Shell in Non-Interactive Mode; 16. HBase Shell in OS Scripts; 17. Read HBase Shell Commands from a Command File; 18. Passing VM Options to the Shell; 19. Overriding configuration starting the HBase Shell; 20. Shell Tricks; Data Model; 21. Conceptual View; 22. Physical View; 23. Namespace; 24. Table; 25. Row; 26. Column Family; 27. Cells; 28. Data Model Operations; 29. Versions; 30. Sort Order; 31. Column Metadata; 32. Joins; 33. ACID; HBase and Schema Design; 34. Schema Creation; 35. Table Schema Rules Of Thumb; RegionServer Sizing Rules of Thumb; 36. On the number of column families; 37. Rowkey Design; 38. Number of Versions; 39. Supported Datatypes; 40. Joins; 41. Time To Live (TTL); 42. Keeping Deleted Cells; 43. Secondary Indexes and Alternate Query Paths; 44. Constraints ...

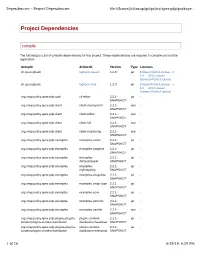

Project Dependencies file:///home/jhh/onap/git/policy/apex-pdp/package...

Dependencies – Project Dependencies file:///home/jhh/onap/git/policy/apex-pdp/package... Project Dependencies compile The following is a list of compile dependencies for this project. These dependencies are required to compile and run the application: GroupId ArtifactId Version Type Licenses ch.qos.logback logback-classic 1.2.3 jar Eclipse Public License - v 1.0 GNU Lesser General Public License ch.qos.logback logback-core 1.2.3 jar Eclipse Public License - v 1.0 GNU Lesser General Public License org.onap.policy.apex-pdp.auth cli-editor 2.2.1- jar - SNAPSHOT org.onap.policy.apex-pdp.client client-deployment 2.2.1- war - SNAPSHOT org.onap.policy.apex-pdp.client client-editor 2.2.1- war - SNAPSHOT org.onap.policy.apex-pdp.client client-full 2.2.1- war - SNAPSHOT org.onap.policy.apex-pdp.client client-monitoring 2.2.1- war - SNAPSHOT org.onap.policy.apex-pdp.examples examples-aadm 2.2.1- jar - SNAPSHOT org.onap.policy.apex-pdp.examples examples-adaptive 2.2.1- jar - SNAPSHOT org.onap.policy.apex-pdp.examples examples- 2.2.1- jar - decisionmaker SNAPSHOT org.onap.policy.apex-pdp.examples examples- 2.2.1- jar - myfirstpolicy SNAPSHOT org.onap.policy.apex-pdp.examples examples-onap-bbs 2.2.1- jar - SNAPSHOT org.onap.policy.apex-pdp.examples examples-onap-vcpe 2.2.1- jar - SNAPSHOT org.onap.policy.apex-pdp.examples examples-pcvs 2.2.1- jar - SNAPSHOT org.onap.policy.apex-pdp.examples examples-periodic 2.2.1- jar - SNAPSHOT org.onap.policy.apex-pdp.examples examples-servlet 2.2.1- war - SNAPSHOT org.onap.policy.apex-pdp.plugins.plugins- plugins-context- 2.2.1- jar - context.plugins-context-distribution distribution-hazelcast SNAPSHOT org.onap.policy.apex-pdp.plugins.plugins- plugins-context- 2.2.1- jar - context.plugins-context-distribution distribution-infinispan SNAPSHOT 1 of 26 8/19/19, 6:29 PM Dependencies – Project Dependencies file:///home/jhh/onap/git/policy/apex-pdp/package.. -

Open Source Software Packages

Hitachi Ops Center 10.5.0 Open Source Software Packages Contact Information: Hitachi Ops Center Project Manager Hitachi Vantara LLC 2535 Augustine Drive Santa Clara, California 95054 Name of Product/Product Version License Component aesh 2.4 Apache License, Version 2.0 aesh Extensions 1.8 Apache License, Version 2.0 aesh Readline 2.0 Apache License, Version 2.0 aesh Terminal API 2.0 Apache License, Version 2.0 @angular-builders/custom- 8.0.0-RC.0 The MIT License webpack @angular-devkit/build-angular 0.800.0-rc.2 The MIT License @angular-devkit/build-angular 0.901.11 The MIT License @angular-devkit/core 7.3.8 The MIT License @angular-devkit/schematics 7.3.8 The MIT License @angular/animations 9.1.11 The MIT License @angular/animations 9.1.12 The MIT License @angular/cdk 9.2.4 The MIT License Name of Product/Product Version License Component @angular/cdk-experimental 9.2.4 The MIT License @angular/cli 8.0.0 The MIT License @angular/cli 9.1.11 The MIT License @angular/common 9.1.11 The MIT License @angular/common 9.1.12 The MIT License @angular/compiler 9.1.11 The MIT License @angular/compiler 9.1.12 The MIT License @angular/compiler-cli 9.1.12 The MIT License @angular/core 7.2.15 The MIT License @angular/core 9.1.11 The MIT License @angular/core 9.1.12 The MIT License @angular/forms 7.2.15 The MIT License @angular/forms 9.1.0-next.3 The MIT License @angular/forms 9.1.11 The MIT License @angular/forms 9.1.12 The MIT License Name of Product/Product Version License Component @angular/language-service 9.1.12 The MIT License @angular/platform-browser -

Open Source Software Packages

Hitachi Ops Center V. 10.3.0 Open Source Software Packages Contact Information: Hitachi Ops Center Project Manager Hitachi Vantara LLC 2535 Augustine Drive Santa Clara, California 95054 Name of Product/Product Version License Component aesh 2.4 Apache License, Version 2.0 aesh Extensions 1.8 Apache License, Version 2.0 aesh Readline 2.0 Apache License, Version 2.0 aesh Terminal API 2.0 Apache License, Version 2.0 "Java Concurrency in Practice" 1.0-redhat- Creative Commons Attribution 2.5 Generic book annotations 4 @angular-builders/custom- 8.0.0-RC.0 The MIT License webpack @angular-devkit/build-angular 0.800.0-rc.2 The MIT License @angular-devkit/build-angular 0.803.25 The MIT License @angular-devkit/core 7.3.8 The MIT License @angular-devkit/schematics 7.3.8 The MIT License @angular/animations 7.2.15 The MIT License @angular/animations 8.2.14 The MIT License Name of Product/Product Version License Component @angular/cdk 7.3.7 The MIT License @angular/cli 8.0.0 The MIT License @angular/cli 8.3.25 The MIT License @angular/common 7.2.15 The MIT License @angular/common 8.2.14 The MIT License @angular/compiler 7.2.15 The MIT License @angular/compiler 8.2.14 The MIT License @angular/compiler-cli 8.2.14 The MIT License @angular/core 7.2.15 The MIT License @angular/forms 7.2.13 The MIT License @angular/forms 7.2.15 The MIT License @angular/forms 8.2.14 The MIT License @angular/forms 8.2.7 The MIT License @angular/language-service 8.2.14 The MIT License @angular/platform-browser 7.2.15 The MIT License Name of Product/Product Version License -

HCP - CS Product Manager HCP-CS V 2

HITACHI Inspire the Next 2535 Augustine Drive Santa Clara, CA 95054 USA Contact Information : HCP - CS Product Manager HCP-CS v 2 . 1 . 0 Hitachi Vantara LLC 2535 Augustine Dr. Santa Clara CA 95054 Component Version License Modified 18F/domain-scan 20181130-snapshot-988de72b Public Domain activesupport 5.2.1 MIT License Activiti - BPMN Converter 6.0.0 Apache License 2.0 Activiti - BPMN Model 6.0.0 Apache License 2.0 Activiti - DMN API 6.0.0 Apache License 2.0 Activiti - DMN Model 6.0.0 Apache License 2.0 Activiti - Engine 6.0.0 Apache License 2.0 Activiti - Form API 6.0.0 Apache License 2.0 Activiti - Form Model 6.0.0 Apache License 2.0 Activiti - Image Generator 6.0.0 Apache License 2.0 Activiti - Process Validation 6.0.0 Apache License 2.0 Addressable URI parser 2.5.2 Apache License 2.0 Advanced Linux Sound Architecture GNU Lesser General Public License 1.1.8 (ALSA) v2.1 only adzap/timeliness 0.3.8 MIT License aggs-matrix-stats 5.5.1 Apache License 2.0 aggs-matrix-stats 7.6.2 Apache License 2.0 agronholm/pythonfutures 3.3.0 3Delight License ahoward's lockfile 2.1.3 Ruby License ahoward's systemu 2.6.5 Ruby License GNU Lesser General Public License ai's r18n 3.1.2 v3.0 only airbnb/streamalert v3.3.0 Apache License 2.0 BSD 3-clause "New" or "Revised" ANTLR 2.7.7 License BSD 3-clause "New" or "Revised" ANTLR 4.5.1-1 License BSD 3-clause "New" or "Revised" antlr-python-runtime 4.7.2 License antw's iniparse 1.4.4 MIT License AOP Alliance (Java/J2EE AOP 1 Public Domain standard) HITACHI Inspire the Next 2535 Augustine Drive Santa Clara, CA -

Security Target

Enveil ZeroReveal® Compute Fabric Server v.2.5.4 Security Target. Acumen Security, LLC. Document Version: 1.4. Table of Contents: 1 Security Target Introduction; 1.1 Security Target and TOE Reference; 1.2 TOE Overview; 1.3 TOE Description; 1.3.1 Evaluated Configuration; 1.3.2 Physical Boundaries; 1.3.3 Logical Boundaries; 1.3.4 TOE Documentation; 1.3.5 Excluded Functionality; 2 Conformance Claims; 2.1 CC Conformance

Project Dependencies Project Transitive Dependencies

9/8/2020 Dependencies – Project Dependencies Project Dependencies compile The following is a list of compile dependencies for this project. These dependencies are required to compile and run the application: GroupId ArtifactId Version Type Licenses ch.qos.logback logback- 1.2.3 jar Eclipse Public License - v 1.0 GNU Lesser General classic Public License ch.qos.logback logback-core 1.2.3 jar Eclipse Public License - v 1.0 GNU Lesser General Public License io.netty netty-all 4.1.48.Final jar Apache License, Version 2.0 org.apache.curator curator- 5.0.0 jar The Apache Software License, Version 2.0 framework org.apache.curator curator- 5.0.0 jar The Apache Software License, Version 2.0 recipes org.apache.zookeeper zookeeper 3.6.1 jar Apache License, Version 2.0 org.onap.policy.apex- context- 2.4.1- jar - pdp.context management SNAPSHOT org.projectlombok lombok 1.18.12 jar The MIT License org.slf4j slf4j-api 1.7.30 jar MIT License org.slf4j slf4j-ext 1.7.30 jar MIT License test The following is a list of test dependencies for this project. These dependencies are only required to compile and run unit tests for the application: GroupId ArtifactId Version Type Licenses junit junit 4.13 jar Eclipse Public License 1.0 org.assertj assertj-core 3.16.1 jar Apache License, Version 2.0 org.awaitility awaitility 4.0.3 jar Apache 2.0 Project Transitive Dependencies The following is a list of transitive dependencies for this project. Transitive dependencies are the dependencies of the project dependencies. -

Hitachi Ops Center V.10.0.0

Hitachi Ops Center v. 10.0.0 Open Source Software Packages Contact Information: Hitachi Ops Center Project Manager Hitachi Vantara Corporation 2535 Augustine Drive Santa Clara, California 95054 Name of Product/Product Version License Component @angular-builders/custom-webpack 8.0.0-RC.0 The MIT License @angular-devkit/architect 0.13.5 The MIT License @angular-devkit/build-angular 0.800.0-rc.2 The MIT License @angular-devkit/core 7.3.8 The MIT License @angular-devkit/schematics 7.3.8 The MIT License @angular/cli 8.0.0 The MIT License @babel/helper-module-imports 7.0.0 The MIT License @babel/polyfill 7.2.5 The MIT License @babel/types 7.4.0 The MIT License @babel/types 7.5.0 The MIT License @brickblock/eslint-config-react 1.0.1 ISC License @contentful/rich-text-types 10.2.0 The MIT License Name of Product/Product Version License Component @deepvision/creator.js 1.2.0 The MIT License @emotion/babel-utils 0.6.10 The MIT License @emotion/hash 0.6.6 The MIT License @emotion/memoize 0.6.6 The MIT License @emotion/serialize 0.9.1 The MIT License @emotion/stylis 0.7.1 The MIT License @emotion/unitless 0.6.7 The MIT License @emotion/utils 0.8.2 The MIT License @gitbook/slate-prism 0.6.0-golery.1 Apache License, Version 2.0 @hakatashi/rc-test 2.0.0 The MIT License @icons/material 0.2.4 The MIT License @inst/vscode-bin-darwin 0.0.14 ISC License 4.2.1- @ionic/core dev.20190423 The MIT License 1454.26ca72c @karen2105/test-component 0.1.0 The MIT License @marswang714/redux-logger 3.0.6 The MIT License Name of Product/Product Version License Component -

Open Source Used In CDH 6.x 1

Open Source Used In CDH 6.x 1. Cisco Systems, Inc. www.cisco.com. Cisco has more than 200 offices worldwide. Addresses, phone numbers, and fax numbers are listed on the Cisco website at www.cisco.com/go/offices. Text Part Number: 78EE117C99-208239967. This document contains licenses and notices for open source software used in this product. With respect to the free/open source software listed in this document, if you have any questions or wish to receive a copy of any source code to which you may be entitled under the applicable free/open source license(s) (such as the GNU Lesser/General Public License), please contact us at [email protected]. In your requests please include the following reference number: 78EE117C99-208239967. Contents: 1.1 Apache ZooKeeper 3.4.5 :2012-09-30; 1.1.1 Available under license; 1.2 flume-ng 1.9.0; 1.2.1 Available under license; 1.3 hadoop-common 3.0.0; 1.3.1 Available under license; 1.4 hbase 2.1.4; 1.4.1 Available under license; 1.5 hive 2.1.1; 1.5.1 Available under license; 1.6 hue 4.3.0; 1.6.1 Available under license; 1.7 impala 3.2.0; 1.7.1 Available under license; 1.8 kafka 2.2.1; 1.8.1 Available under license; 1.9 oozie 5.1.0; 1.9.1 Available under license; 1.10 Python 2.6.6 :6; 1.10.1 Available under license; 1.11 sentry 2.1.0; 1.11.1 Available under license; 1.12 spark 2.4.0; 1.12.1 Available under license; 1.13 sqoop 1.4.7; 1.13.1 Available under license. 1.1 Apache ZooKeeper 3.4.5 :2012-09-30. 1.1.1 Available under license: Apache License Version 2.0, January 2004 http://www.apache.org/licenses/ TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION 1.

Lumada Data Catalog Product Manager Lumada Data Catalog V 6

HITACHI Inspire the Next 2535 Augustine Drive Santa Clara, CA 95054 USA Contact Information : Lumada Data Catalog Product Manager Lumada Data Catalog v 6 . 0 . 0 Hitachi Vantara LLC 2535 Augustine Dr. Santa Clara CA 95054 Component Version License Modified "Java Concurrency in Practice" book 1 Creative Commons Attribution 2.5 annotations BSD 3-clause "New" or "Revised" abego TreeLayout Core 1.0.1 License ActiveMQ Artemis JMS Client All 2.9.0 Apache License 2.0 Aliyun Message and Notification 1.1.8.8 Apache License 2.0 Service SDK for Java An open source Java toolkit for 0.9.0 Apache License 2.0 Amazon S3 Annotations for Metrics 3.1.0 Apache License 2.0 ANTLR 2.7.2 ANTLR Software Rights Notice ANTLR 2.7.7 ANTLR Software Rights Notice BSD 3-clause "New" or "Revised" ANTLR 4.5.3 License BSD 3-clause "New" or "Revised" ANTLR 4.7.1 License ANTLR 4.7.1 MIT License BSD 3-clause "New" or "Revised" ANTLR 4 Tool 4.5.3 License AOP Alliance (Java/J2EE AOP 1 Public Domain standard) Aopalliance Version 1.0 Repackaged 2.5.0 Eclipse Public License 2.0 As A Module Aopalliance Version 1.0 Repackaged Common Development and 2.5.0-b05 As A Module Distribution License 1.1 Aopalliance Version 1.0 Repackaged 2.6.0 Eclipse Public License 2.0 As A Module Apache Atlas Common 1.1.0 Apache License 2.0 Apache Atlas Integration 1.1.0 Apache License 2.0 Apache Atlas Typesystem 0.8.4 Apache License 2.0 Apache Avro 1.7.4 Apache License 2.0 Apache Avro 1.7.6 Apache License 2.0 Apache Avro 1.7.6-cdh5.3.3 Apache License 2.0 Apache Avro 1.7.7 Apache License 2.0 HITACHI Inspire