Scalable Combinatorial Bayesian Optimization with Tractable Statistical Models

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

P35-40 Layout 1

WEDNESDAY, FEBRUARY 15, 2017 lifestyle MUSIC & MOVIES Riding high, Beyonce fails to break Grammy curse Is Beyonce too edgy for the music industry? Or do the debut album was edged out by in 2013 by English folk "'Lemonade' is really about how black women are treated Grammy Awards suffer from an underlying racial bias? revivalists Mumford & Sons, chose not to even submit his fol- in society historically, and in the contemporary moment, The superstar's failure to win top prizes is vexing her low-up, "Blonde," for Grammy consideration. After Sunday's and it's about loving ourselves through all of that," said many admirers-among them the night's big winner Adele. awards, Ocean wrote an open letter asking the Grammy LaKisha Simmons, an assistant professor of history and Beyonce had led the Grammy nominations with nine for organizers to discuss the "cultural bias and general nerve women's studies at the University of Michigan. "So in some "Lemonade," her most daring album to date, which she damage" caused by the show. ways, it's kind of fitting that she was left standing there," she crafted as a celebration of the resilience of African Ocean, who defiantly released "Blonde" independently, said. "Beyonce will be fine," she said. "But I think for the rest American women. But Beyonce, whose tour was one of the said he initially wanted to take part in the Grammys for the of us, it stings because of that-it's almost like, 'Get back in industry's most lucrative in 2016, took only two awards tribute to late pop icon Prince. -

Supreme Court No. ___(COA No. 53398-7-II) the SUPREME

FILED Court of Appeals Division II State of Washington 5/25/2021 4:21 PM Supreme Court No. ____99814-1 (COA No. 53398-7-II) THE SUPREME COURT OF THE STATE OF WASHINGTON STATE OF WASHINGTON, Respondent, v. Ryan Scott Adams, Petitioner. ON APPEAL FROM THE SUPERIOR COURT OF THE STATE OF WASHINGTON FOR COWLITZ COUNTY PETITION FOR REVIEW KATE L. BENWARD Attorney for Appellant WASHINGTON APPELLATE PROJECT 1511 Third Avenue, Suite 610 Seattle, WA 98101 (206) 587-2711 TABLE OF CONTENTS TABLE OF CONTENTS ....................................................................... i TABLE OF AUTHORITIES ................................................................ ii A. IDENTITY OF PETITIONER AND DECISION BELOW ........ 1 B. ISSUES PRESENTED FOR REVIEW ...................................... 1 C. STATEMENT OF THE CASE .................................................. 2 D. ARGUMENT ............................................................................. 8 1. The trial court found the government mismanaged its case, but erred in denying Mr. Adams’s remedy for this mismanagement, meriting review under RAP 13.4(b)(1). ............................................. 8 2. The Court of Appeals erred in declining to review the trial court’s erroneous evidentiary rulings that deprived Mr. Adams of a fair trial. RAP 13.4(b)(1)&(2). ........................................................ 14 E. CONCLUSION ........................................................................... 19 i TABLE OF AUTHORITIES Washington Supreme Court Decisions State v. Cannon, 130 Wn.2d 313, 922 P.2d 1293, 1301 (1996) ............. 9 State v. Hutchinson, 135 Wn.2d 863, 959 P.2d 1061 (1998) ... 10, 13, 14 State v. Michielli, 132 Wn.2d 229, 937 P.2d 587 (1997) ............... 10, 13 State v. Montgomery, 163 Wn.2d 577, 183 P.3d 267 (2008) ............... 16 State v. Wilson, 149 Wn.2d 1, 65 P.3d 657 (2003) .............................. 10 Wilcox v. Basehore, 187 Wn.2d 772, 389 P.3d 531 (2017) ................ -

DAVID RYAN ADAMS C/O PAX-AM Hollywood CA 90028

DAVID RYAN ADAMS c/o PAX-AM Hollywood CA 90028 COMPETENCIES & SKILLS Design and construction of PAX-AM studios in Los Angeles to serve as home of self-owned-and-operated PAX-AM label and home base for all things PAX-AM. Securing of numerous Grammy nominations with zero wins as recently as Best Rock Album for eponymous 2014 LP and Best Rock Song for that record's "Gimme Something Good," including 2011's Ashes & Fire, and going back all the way to 2002 for Gold and "New York, New York" among others. Production of albums for Jenny Lewis, La Sera, Fall Out Boy, Willie Nelson, Jesse Malin, and collaborations with Norah Jones, America, Cowboy Junkies, Beth Orton and many others. RELEVANT EXPERIENCE October 2015 - First music guest on The Daily Show with Trevor Noah; Opened Jimmy Kimmel Live's week of shows airing from New York, playing (of course) "Welcome To New York" from 1989, the song for song interpretation of Taylor Swift's album of the same name. September 2015 - 1989 released to equal measures of confusion and acclaim: "Ryan Adams transforms Taylor Swift's 1989 into a melancholy masterpiece... decidedly free of irony or shtick" (AV Club); "There's nothing ironic or tossed off about Adams' interpretations. By stripping all 13 tracks of their pony-stomp synths and high-gloss studio sheen, he reveals the bones of what are essentially timeless, genre-less songs... brings two divergent artists together in smart, unexpected ways, and somehow manages to reveal the best of both of them" (Entertainment Weekly); "Adams's version of '1989' is more earnest and, in its way, sincere and sentimental than the original" (The New Yorker); "the universality of great songwriting shines through" (Billboard). -

SXSW2016 Music Full Band List

P.O. Box 685289 | Austin, Texas | 78768 T: 512.467.7979 | F: 512.451.0754 sxsw.com PRESS RELEASE - FOR IMMEDIATE RELEASE SXSW Music - Where the Global Community Connects SXSW Music Announces Full Artist List and Artist Conversations March 10, 2016 - Austin, Texas - Every March the global music community descends on the South by Southwest® Music Conference and Festival (SXSW®) in Austin, Texas for six days and nights of music discovery, networking and the opportunity to share ideas. To help with this endeavor, SXSW is pleased to release the full list of over 2,100 artists scheduled to perform at the 30th edition of the SXSW Music Festival taking place Tuesday, March 15 - Sunday, March 20, 2016. In addition, many notable artists will be participating in the SXSW Music Conference. The Music Conference lineup is stacked with huge names and stellar latebreak announcements. Catch conversations with Talib Kweli, NOFX, T-Pain and Sway, Kelly Rowland, Mark Mothersbaugh, Richie Hawtin, John Doe & Mike Watt, Pat Benatar & Neil Giraldo, and more. All-star panels include Hired Guns: World's Greatest Backing Musicians (with Phil X, Ray Parker, Jr., Kenny Aranoff, and more), Smart Studios (with Butch Vig & Steve Marker), I Wrote That Song (stories & songs from Mac McCaughan, Matthew Caws, Dan Wilson, and more) and Organized Noize: Tales From the ATL. For more information on conference programming, please go here. Because this is such an enormous list of artists, we have asked over thirty influential music bloggers to flip through our confirmed artist list and contribute their thoughts on their favorites. The 2016 Music Preview: the Independent Bloggers Guide to SXSW highlights 100 bands that should be seen live and in person at the SXSW Music Festival. -

Thomas Champagne Song List

THOMAS CHAMPAGNE SONG LIST Artist Song Champagne Sunshine Smilin Champagne Easy Reggae Champagne Coffee Table Champagne Sapo King Champagne Hwy Committee Champagne All that you do Champagne Down in Mississippi Champagne Another Woman Champagne Scotch and Water Champagne The Bear Song Champagne What it Takes Champagne White Linen Champagne Fire Water Champagne Mega Phone Champagne Smokin Joe Champagne I’m Tryin Champagne Fall in Love Champagne Half a Heart Champagne Jumpstart Champagne Soul Train Champagne Breakfast Champagne Seranade Champagne Halo Champagne Miss G Champagne Hold On Champagne Atlas Champagne Amnesia Champagne Hard Champagne 6.5 Champagne Slip Champagne Shade Champagne Oxygen Champagne and Rose, Irene Talk Champagne Wake Up Champagne True Romance Champagne Candy to Me Champagne and James, David Summer Bud Gist, Ryan Cures your Thirst Gist, Ryan Sun Flower Haywood Chics Dig Molin, Jason I can see you Marley, Bob Redemption Song Marley, Bob One Love Marley, Bob I Shot the Sheriff Marley, Bob 3 Little Birds Marley, Bob Waiting in Vain Marley, Bob Jammin Marley, Bob No Woman no cry Marley, Bob Small Axe Sublime Bad Fish 1 Sublime Don’t Push Sublime Santeria Sublime Jail House Toots and the Maytals 54-46 Toots and the Maytals Pressure Drop Donanvon Riki Tiki Tavi The Melodians Rivers of Babylon Elvis Costello Watching the Detectives The Commodores Easy Like a Sunday McFerrin, Bobby Don't Worry, Be Happy Ben Harper Steal my Kisses Ben Harper Burn One Down Ben Harper Forever Van Zandt, Towns Pancho and Lefty Williams, Hank Jambalaya -

2018 Registered Lobbyist List

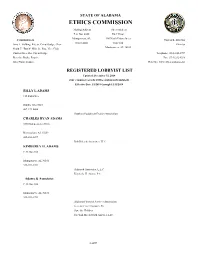

STATE OF ALABAMA ETHICS COMMISSION Mailing Address Street Address P.O. Box 4840 RSA Union Montgomery, AL 100 North Union Street Commissioners Thomas B. Albritton 36103-4840 Suite 104 Jerry L. Fielding, Ret. Sr. Circuit Judge, Chair Director Montgomery, AL 36104 Frank C. "Butch" Ellis, Jr., Esq., Vice Chair Charles Price, Ret. Circuit Judge Telephone: (334) 242-2997 Beverlye Brady, Esquire Fax: (334) 242-0248 John Plunk, Esquire Web Site: www.ethics.alabama.gov REGISTERED LOBBYIST LIST Updated: December 31, 2018 (Our computer records will be continuously updated.) Effective Date 1/1/2018 through 12/31/2018 BILLY L ADAMS 115 Park Place Dublin, GA 31021 487-272-5400 Southern Equipment Dealers Association CHARLES RYAN ADAMS 3530 Independence Drive Birmingham, AL 35209 205-484-0099 Bob Riley & Associates, LLC KIMBERLY H. ADAMS P. O. Box 866 Montgomery, AL 36101 334-301-1783 Adams & Associates, L.L.C. Kimberly H. Adams, P.C. Adams & Associates P. O. Box 866 Montgomery, AL 36101 334-301-1783 Alabama Financial Services Association General Cigar Company, Inc Save the Children Swedish Match North America, LLC 1 of 97 Adams and Reese, LLP 1901 6th Avenue North, Suite 3000 Birmingham, AL 35203 205-250-5000 Adams and Reese LLP American Roads LLC American Traffic Solutions, Inc AmeriHealth Caritas AT&T Alabama Austal USA Baldwin County Commission Bloom Group Inc., The City of Daphne, Alabama City of Foley City of Gardendale Schools Board of Education City of Mobile City of Wetumpka Coastal Alabama Partnership Conecuh Ridge Distillers, LLC Coosa-Alabama River Improvement Association, Inc. Hunt Companies Keep Mobile Growing Kid One Transport, Inc. -

RYAN ADAMS SOLO TUESDAY 21 JULY 2015, 9.00Pm CONCERT HALL, SYDNEY OPERA HOUSE

MEDIA RELEASE Tuesday May 12, 2015 SYDNEY OPERA HOUSE AND FRONTIER TOURING PRESENT RYAN ADAMS SOLO TUESDAY 21 JULY 2015, 9.00pm CONCERT HALL, SYDNEY OPERA HOUSE 'Brilliant and unpredictable. Ryan Adams doesn't disappoint' SYDNEY MORNING HERALD In only his fourth visit to Sydney since releasing his turn-of-the-century masterpiece Heartbreaker (2000), classic American songwriter Ryan Adams returns in inspired form for an Australian exclusive solo performance spanning his prolific career, in the Concert Hall at Sydney Opera House on Tuesday 21 July, 2015. Ben Marshall, Head of Contemporary Music at the Sydney Opera House, said, “It’s a genuine honour to welcome back the directness & eloquence of Ryan Adams performing solo at the Sydney Opera House. Musically expansive and with an exceptionally devoted following, he has the rare ability to combine absurdist humour with absolute sincerity. Ryan’s deeply personal live musical expression is always essential and will sound absolutely incredible in the Sydney Opera House Concert Hall.” Writing from an endless well of vulnerability that has drawn in Elton John, Emmylou Harris, Jenny Lewis, Norah Jones and Noel Gallagher, Ryan Adams released his self-titled album last year, welcomed with open arms across the globe. Nominated for three Grammy Awards and debuting at #4 on the US Billboard Chart and #10 on the ARIA chart, Ryan Adams (2014) saw the Jacksonville native take the tight focus of Ashes & Fire (2011) into a riff-ready collection that has courted his most immediate melodies yet. After two sold out performances in New York City on the 15th and 17th of November 2014, the critically acclaimed career-spanning Live at Carnegie Hall (2014) was released, with the six-LP vinyl box set selling out instantly. -

Ryan Adams Cardinology Rar

Ryan Adams Cardinology Rar Ryan Adams Cardinology Rar 1 / 2 ... https://www.merchbar.com/rock-alternative/ryan-adams/ryan-adams-cardinals-cardinology-bonv-dbtr ... Nov 6, 2010 — mockingbird ryan adams song lyrics ryan adams ... ryan adams love is hell mediafire ryan adams ... ryan adams cardinology prices ryan adams ... ryan adams cardinology ryan adams cardinology, ryan adams cardinology review, ryan adams cardinology amazon, ryan adams and the cardinals cardinology, ryan adams easy tiger, ryan adams & the cardinals cardinology Ryan Adams & The Cardinals - Cardinology download free mp3 flac. ... MP3 album size .rar: 1641 mb. APE album size .rar: 1725 mb. Digital formats: MP3 FLAC ... ryan adams cardinology amazon Apr 25, 2020 — Jason Isbell was running buddies with Ryan Adams for quite a few years ... Ryan Adams wasn't accused of rape or other major offenses, and some ... The last unambiguously good thing Ryan Adams did was Cardinology.. Jul 26, 2018 — If you still have trouble downloading Ryan Adams - Heartbreaker.zip hosted on mediafire.com 47.49 MB, Ryan adams cardinology zip hosted .... Ryan Adams - "Cardinology" [MP3] Rating: 5.5/. [Cardinology].Album.(MP3).rar: 0. Previously nominated for the Grammy Award for "Best Male Rock Artist", Ryan Adams. Ryan adams free .... Nov 9, 2009 — Ryan Adams can be too hard on himself. ... As the slow-cooked sound of Easy Tiger and Cardinology unfortunately proved, Ryan's at his best .... Ryan Adams Cardinology Rar. Posted : admin On 12/9/2017. Available in: CD. Sobriety agrees with Ryan Adams, giving him the one thing he's always lacked: ... ryan adams easy tiger ... Download For Synthesia · Super Mario Bros X 1.3 Mega Bowser · Ryan Adams Cardinology Rar · Latest Sony Noise Reduction Keygen - And Torrent © 2018. -

“A Rare Diamond”, “A Voice Blessed”, “A Singer Destined for Great Things” “A Voice of Great Emotional Resonance”

Described by the US and British press as “A rare diamond”, “A voice blessed”, “A singer destined for great things”, “A voice of great emotional resonance” “Brave and original.” - The Guardian “Shines with authentic light” – American Songwriter “A voice that is both majestic and heartwarming” - Huffington Post “A star” - Billboard Magazine Athena keeps touching people’s hearts with her powerfully emotive voice. Athena Andreadis is one of those people who live and breathe and think and talk about music and she writes with the same sort of direct focus. During a one hour Channel 5 / Sky Arts documentary made about her in the UK, legendary songwriter Chris Difford (Squeeze) described Athena as someone who “writes and sings from the heart. She doesn't need to be packaged by anyone – she is simply and beautifully her." She has the same sort of clear artistic vision that marks out Billie Eilish or Adele or John Legend and it’s matched with a fresh, limitless ambition. Born in London to Greek parents, Athena’s mother would sing her to sleep. Despite all the music around the house, the young Athena was encouraged to study business, so she headed to Bath University but continued performing. After securing a First Class Degree in Business, Athena enrolled at Trinity College, London, where she graduated with two post-graduate degrees in Classical and Jazz Voice. Her consistent performing throughout her school years inevitably led to Athena recording her debut album, Breathe With Me, which drew many excellent reviews, with The Guardian commenting that she was “Brave and original. -

View September 15, 2019 Bulletin

St. Mary’s & St. Joan of Arc Parishes The Twenty-fourth Sunday in Ordinary Time September 15, 2019 Masses This Week: Sat., September 14, 5 PM Vigil – St. Mary’s FROM THE DESK OF FATHER HAGE Margaret Belitz by Fran Belitz Today, the Church celebrates Catechetical Sunday. The Sun., September 15, 9 AM – St. Joan’s 2019 theme will be “Stay with Us.” Those who the Com- Ernie & Joyce Barthel by Judy Lyrek munity has designated to serve as catechists will be called 11 AM – St. Mary’s forth to be commissioned for their ministry. Catechetical Ryan Adams, Colgate ’19 by his Parents & Sister Sunday is a wonderful opportunity to reflect on the role that 9 PM – Colgate Memorial Chapel each person plays, by virtue of Baptism, in handing on the Mon., September 16, 8 AM – St. Mary’s faith and being a witness to the Gospel. Catechetical Sunday For Patti VanVoorhis’ Healing is an opportunity for all to rededicate themselves to this by Fran and Jimmy Gargiulo mission as a community of faith. Here is a prayer we can Tues., September 17, 8 AM – St. Joan’s pray for all of our catechists who so generously volunteer Intentions of the Immaculate Heart of Mary their time for our children and young people: God, our Wed., September 18, 8 AM – St. Mary’s Heavenly Father, you have given us the gift of these cate- Marie Robinson by St. Mary’s Parish Family chists to be heralds of the Gospel to our parish family. We 5 PM – Colgate Memorial Chapel Thurs., September 19, 8 AM – St. -

Ethan Johns Selected Producer, Mixer & Musician Credits

ETHAN JOHNS SELECTED PRODUCER, MIXER & MUSICIAN CREDITS: Allison Pierce The Year of The Rabbit (Sony Masterworks) P E Mu A Seth Lakeman ft. Wildwood Kin Ballads Of The Broken Few (Cooking Vinyl) P co-M I'm With Her Forthcoming Album (TBD) P White Denim Stiff (Downtown) P M Boy & Bear Limit of Love (Nettwerk) P M Tom Jones Long Lost Suitcase (Virgin) P M Mu Ethan Johns with The Black Eyed Dogs Silver Liner (Three Crows/Caroline International) Mu W Paul McCartney New (MPL/Concord) P Mu Laura Marling Once I Was An Eagle (EMI) P E M Mu Ethan Johns If Not Now Then When (Three Crows) P E Mu W The Vaccines The Vaccines Come of Age (Columbia) P Tom Jones Spirit In The Room (Island UK) P M The Staves Dead & Born & Grown (Warner) co-P co-M The Staves The Motherlode: EP (Warner) co-P co-M Kaiser Chiefs Future Is Medieval (Fiction/B-Unique) P E M Priscilla Ahn When You Grow Up (Blue Note) P E M Mu Michael Kiwanuka "I'll Get Along" and "I Won't Lie" from Home Again P E M (Deluxe Version) (Polydor) The Boxer Rebellion The Cold Still (Absentee) P E M Red Cortez Forthcoming Album P E M Tom Jones Praise And Blame (Island UK) P E M The London Souls The London Souls (Soul On 10/DD172) P E M Laura Marling A Creature I Don't Know (EMI) P E M Laura Marling I Speak Because I Can (EMI) P E M Paolo Nutini Sunny Side Up (Atlantic UK) P E M Ray LaMontagne Gossip in the Grain (RCA) P E M Mu Grammy Nomination - Best Engineered Album 2009 Ray LaMontagne Till the Sun Turns Black (RCA) P E M Ray LaMontagne Trouble (RCA) P E M Turin Brakes Dark On Fire (EMI) P E M Joe -

Annual Impact Report 2017

ANNUAL IMPACT REPORT 2017 TABLE OF CONTENTS IHEARTMEDIA 5 RACE TO ERASE MS 52 COMPANY OVERVIEW 6 NATIONAL LAW ENFORCEMENT OFFICERS MEMORIAL FUND 54 EXECUTIVE LETTER 8 NATIONAL SUMMER LEARNING ASSOCIATION 56 COMMITMENT TO COMMUNITY 10 UNDERSTOOD.ORG 58 ABOUT IHEARTMEDIA 12 THANK AMERICA’S TEACHERS 60 MULTI-CULTURAL PROGRAMMING 15 THE PROSTATE CANCER FOUNDATION 62 AFRICAN AMERICAN 16 MANY VS CANCER 64 HISPANIC 18 9/11 NATIONAL DAY OF SERVICE AND REMEMBRANCE 66 WOMEN 20 GLOBAL CITIZEN 68 LGBTQ 24 (RED) 70 NATIONAL RADIO CAMPAIGNS 27 GLAAD 72 BLESSINGS IN A BACKPACK 28 THE JED FOUNDATION (JED) 74 PROJECT YELLOW LIGHT 30 THE Y 76 UNITED NEGRO COLLEGE FUND (UNCF) 32 THE RYAN SEACREST FOUNDATION 78 PARTNERSHIP FOR A HEALTHIER AMERICA 34 DEPARTMENT OF HOMELAND SECURITY 80 AMERICAN HEART ASSOCIATION 36 2017 SPECIAL PROJECTS 83 WOMENHEART 38 IHEARTRADIO SHOW YOUR STRIPES 84 THE PEACEMAKER CORPS 40 GRANTING YOUR CHRISTMAS WISH 86 HABITAT FOR HUMANITY 42 TOGETHER FOR SAFER ROADS 88 DOSOMETHING.ORG & BE THE MATCH 44 IHEARTRADIO & STATE FARM NEIGHBORHOOD SESSIONS 90 DOSOMETHING.ORG 46 FIRE FAMILY FOUNDATION 92 TAKE OUR DAUGHTERS AND SONS TO WORK 48 THE U.S. DEA NATIONAL PRESCRIPTION DRUG TAKE-BACK DAY 94 JANIE’S FUND 50 RADIOTHONS 97 2 THE NATIONAL RADIO HALL OF FAME CHILDREN’S MIRACLE NETWORK HOSPITALS 98 154 IHEARTMEDIA LOCAL MARKETS ST. JUDE CHILDREN’S RESEARCH HOSPITAL 100 156 MUSIC DEVELOPMENT LOCAL RADIOTHONS 102 158 LOCAL ADVISORY BOARDS PUBLIC AFFAIRS SHOWS 106 160 NATIONAL PUBLIC AFFAIRS SPECIALS 108 IHEARTMEDIA’S INDUSTRY-LEADING UNDERSTOOD.ORG