Extracting and Composing Robust Features with Denoising Autoencoders

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

NVIDIA CEO Jensen Huang to Host AI Pioneers Yoshua Bengio, Geoffrey Hinton and Yann LeCun, and Others, at GTC21

NVIDIA CEO Jensen Huang to Host AI Pioneers Yoshua Bengio, Geoffrey Hinton and Yann LeCun, and Others, at GTC21. Online Conference to Feature Jensen Huang Keynote and 1,300 Talks from Leaders in Data Center, Networking, Graphics and Autonomous Vehicles. NVIDIA today announced that its CEO and founder Jensen Huang will host renowned AI pioneers Yoshua Bengio, Geoffrey Hinton and Yann LeCun at the company’s upcoming technology conference, GTC21, running April 12-16. The event will kick off with a news-filled livestreamed keynote by Huang on April 12 at 8:30 am Pacific. Bengio, Hinton and LeCun won the 2018 ACM Turing Award, known as the Nobel Prize of computing, for breakthroughs that enabled the deep learning revolution. Their work underpins the proliferation of AI technologies now being adopted around the world, from natural language processing to autonomous machines. Bengio is a professor at the University of Montreal and head of Mila - Quebec Artificial Intelligence Institute; Hinton is a professor at the University of Toronto and a researcher at Google; and LeCun is a professor at New York University and chief AI scientist at Facebook. More than 100,000 developers, business leaders, creatives and others are expected to register for GTC, including CxOs and IT professionals focused on data center infrastructure. Registration is free and is not required to view the keynote. In addition to the three Turing winners, major speakers include: Girish Bablani, Corporate Vice President, Microsoft Azure; John Bowman, Director of Data Science, Walmart

On Recurrent and Deep Neural Networks

On Recurrent and Deep Neural Networks. Razvan Pascanu. Advisor: Yoshua Bengio. PhD Defence, Université de Montréal, LISA lab, September 2014 (slides 1-5 of 38). Motivation: "A computer once beat me at chess, but it was no match for me at kick boxing" (Emo Phillips). Studying the mechanism behind learning provides a meta-solution for solving tasks. Supervised Learning: $f_{\mathcal{F}} : \Theta \times D \to T$; $f_\theta(x) = f_{\mathcal{F}}(\theta, x)$; $f^\star = \arg\min_{\theta \in \Theta} \mathbb{E}_{x,t \sim \pi}\left[ d(f_\theta(x), t) \right]$. Optimization for learning [figure labels: θ[k], θ[k+1]]. Neural networks [figure labels: output neurons, last hidden layer, second hidden layer, first hidden layer, input layer, bias = 1]. Recurrent neural networks [figure: two network diagrams with labels output neurons, last hidden layer, second hidden layer, first hidden layer, recurrent layer, input layer, bias = 1; panel (b): Recurrent]

Yoshua Bengio and Gary Marcus on the Best Way Forward for AI

Yoshua Bengio and Gary Marcus on the Best Way Forward for AI. Transcript of the 23 December 2019 AI Debate, hosted at Mila. Moderated and transcribed by Vincent Boucher, Montreal AI. AI DEBATE: Yoshua Bengio | Gary Marcus. Organized by MONTREAL.AI and hosted at Mila, on Monday, December 23, 2019, from 6:30 PM to 8:30 PM (EST). Slides, video, readings and more can be found on the MONTREAL.AI debate webpage. Transcript of the AI Debate. Opening Address | Vincent Boucher. Good evening from Mila in Montreal. Ladies & Gentlemen, welcome to the “AI Debate”. I am Vincent Boucher, Founding Chairman of Montreal.AI. Our participants tonight are Professor GARY MARCUS and Professor YOSHUA BENGIO. Professor GARY MARCUS is a Scientist, Best-Selling Author, and Entrepreneur. Professor MARCUS has published extensively in neuroscience, genetics, linguistics, evolutionary psychology and artificial intelligence and is perhaps the youngest Professor Emeritus at NYU. He is Founder and CEO of Robust.AI and the author of five books, including The Algebraic Mind. His newest book, Rebooting AI: Building Machines We Can Trust, aims to shake up the field of artificial intelligence and has been praised by Noam Chomsky, Steven Pinker and Garry Kasparov. Professor YOSHUA BENGIO is a Deep Learning Pioneer. In 2018, Professor BENGIO was the computer scientist who collected the largest number of new citations worldwide. In 2019, he received, jointly with Geoffrey Hinton and Yann LeCun, the ACM A.M. Turing Award, “the Nobel Prize of Computing”. He is the Founder and Scientific Director of Mila, the largest university-based research group in deep learning in the world.

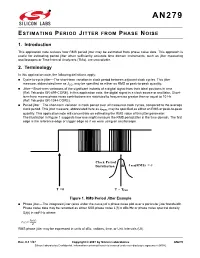

AN279: Estimating Period Jitter from Phase Noise

AN279: ESTIMATING PERIOD JITTER FROM PHASE NOISE. 1. Introduction. This application note reviews how RMS period jitter may be estimated from phase noise data. This approach is useful for estimating period jitter when sufficiently accurate time domain instruments, such as jitter measuring oscilloscopes or Time Interval Analyzers (TIAs), are unavailable. 2. Terminology. In this application note, the following definitions apply. Cycle-to-cycle jitter: The short-term variation in clock period between adjacent clock cycles. This jitter measure, abbreviated here as JCC, may be specified as either an RMS or peak-to-peak quantity. Jitter: Short-term variations of the significant instants of a digital signal from their ideal positions in time (Ref: Telcordia GR-499-CORE). In this application note, the digital signal is a clock source or oscillator. Short-term here means phase noise contributions are restricted to frequencies greater than or equal to 10 Hz (Ref: Telcordia GR-1244-CORE). Period jitter: The short-term variation in clock period over all measured clock cycles, compared to the average clock period. This jitter measure, abbreviated here as JPER, may be specified as either an RMS or peak-to-peak quantity. This application note will concentrate on estimating the RMS value of this jitter parameter. The illustration in Figure 1 suggests how one might measure the RMS period jitter in the time domain. The first edge is the reference edge or trigger edge as if we were using an oscilloscope. [Figure 1. RMS Period Jitter Example: clock period distribution, with labels JPER(RMS), T = 0, and T = TPER.] Phase jitter: The integrated jitter (area under the curve) of a phase noise plot over a particular jitter bandwidth.

Hello, It's GPT-2

Hello, It’s GPT-2 - How Can I Help You? Towards the Use of Pretrained Language Models for Task-Oriented Dialogue Systems. Paweł Budzianowski (1,2,3) and Ivan Vulić (2,3). (1) Engineering Department, Cambridge University, UK; (2) Language Technology Lab, Cambridge University, UK; (3) PolyAI Limited, London, UK. Abstract: Data scarcity is a long-standing and crucial challenge that hinders quick development of task-oriented dialogue systems across multiple domains: task-oriented dialogue models are expected to learn grammar, syntax, dialogue reasoning, decision making, and language generation from absurdly small amounts of task-specific data. In this paper, we demonstrate that recent progress in language modeling pre-training and transfer learning shows promise to overcome this problem. We propose a task-oriented dialogue model that operates solely on text input: it effectively bypasses explicit policy and language generation modules. Building on top of the TransferTransfo framework ... [Body text, second column:] ... (Young et al., 2013). On the other hand, open-domain conversational bots (Li et al., 2017; Serban et al., 2017) can leverage large amounts of freely available unannotated data (Ritter et al., 2010; Henderson et al., 2019a). Large corpora allow for training end-to-end neural models, which typically rely on sequence-to-sequence architectures (Sutskever et al., 2014). Although highly data-driven, such systems are prone to producing unreliable and meaningless responses, which impedes their deployment in the actual conversational applications (Li et al., 2017). Due to the unresolved issues with the end-to-end architectures, the focus has been extended to retrieval-based models.

Signal-To-Noise Ratio and Dynamic Range Definitions

Signal-to-noise ratio and dynamic range definitions. The Signal-to-Noise Ratio (SNR) and Dynamic Range (DR) are two common parameters used to specify the electrical performance of a spectrometer. This technical note describes how they are defined and how to measure and calculate them. Figure 1: Definitions of SNR and DR. The signal out of the spectrometer is a digital signal between 0 and $2^N - 1$, where N is the number of bits in the Analogue-to-Digital (A/D) converter on the electronics. Typical values of N range from 10 to 16, leading to a maximum signal level between 1,023 and 65,535 counts. The noise is the stochastic variation of the signal around a mean value. Figure 1 shows a spectrum with a single peak in wavelength and time. As indicated in the figure, the peak signal level fluctuates by a small amount around the mean value due to the noise of the electronics. Noise is measured by the Root-Mean-Squared (RMS) value of the fluctuations over time. The SNR is defined as the average over time of the peak signal divided by the RMS noise of the peak signal over the same time. To get an accurate result for the SNR, it is generally required to measure over 25 to 50 time samples of the spectrum. It is very important that the input to the spectrometer is constant during SNR measurements. Otherwise, you will be measuring other things, such as drift of your lamp power or time-dependent signal levels from your sample.

Hierarchical Multiscale Recurrent Neural Networks

Published as a conference paper at ICLR 2017. HIERARCHICAL MULTISCALE RECURRENT NEURAL NETWORKS. Junyoung Chung, Sungjin Ahn & Yoshua Bengio, Département d’informatique et de recherche opérationnelle, Université de Montréal, {junyoung.chung,sungjin.ahn,yoshua.bengio}@umontreal.ca. ABSTRACT: Learning both hierarchical and temporal representation has been among the long-standing challenges of recurrent neural networks. Multiscale recurrent neural networks have been considered as a promising approach to resolve this issue, yet there has been a lack of empirical evidence showing that this type of model can actually capture the temporal dependencies by discovering the latent hierarchical structure of the sequence. In this paper, we propose a novel multiscale approach, called the hierarchical multiscale recurrent neural network, that can capture the latent hierarchical structure in the sequence by encoding the temporal dependencies with different timescales using a novel update mechanism. We show some evidence that the proposed model can discover underlying hierarchical structure in the sequences without using explicit boundary information. We evaluate our proposed model on character-level language modelling and handwriting sequence generation. 1 INTRODUCTION: One of the key principles of learning in deep neural networks as well as in the human brain is to obtain a hierarchical representation with increasing levels of abstraction (Bengio, 2009; LeCun et al., 2015; Schmidhuber, 2015). A stack of representation layers, learned from the data in a way to optimize the target task, gives deep neural networks advantages such as generalization to unseen examples (Hoffman et al., 2013), sharing learned knowledge among multiple tasks, and discovering disentangling factors of variation (Kingma & Welling, 2013).

Image Denoising by Autoencoder: Learning Core Representations

Image Denoising by AutoEncoder: Learning Core Representations. Zhenyu Zhao, College of Engineering and Computer Science, The Australian National University, Australia. Abstract. In this paper, we implement an image denoising method that can be applied to all kinds of noisy images. We perform denoising by adding Gaussian noise to raw images and then feeding them into an AutoEncoder to learn their core representations (the raw images themselves or high-level representations). We use a pre-trained classifier to test the quality of the representations via classification accuracy. Our results show that, in task-specific classification neural networks, performance with noisy input images is far below that with images preprocessed by a denoising AutoEncoder. Meanwhile, our experiments also show that the preprocessed images achieve results comparable to those of the noiseless input images. Keywords: Image Denoising, Image Representations, Neural Networks, Deep Learning, AutoEncoder. 1 Introduction. 1.1 Image Denoising. An image is an object that stores and reflects visual perception. Images are also important information carriers today. Acquisition channels and artificial editing are the two main sources of corruption in observed images. The goal of image restoration techniques [1] is to restore the original image from a noisy observation of it. Image denoising is a common image restoration problem that is relevant to many industrial and scientific applications. Image denoising problems arise when an image is corrupted by additive white Gaussian noise, which is a common result of many acquisition channels. White Gaussian noise can be harmful to many image applications. Hence, it is of great importance to remove Gaussian noise from images.

Active Noise Control Over Space: a Subspace Method for Performance Analysis

Applied Sciences, Article. Active Noise Control over Space: A Subspace Method for Performance Analysis. Jihui Zhang (1,*), Thushara D. Abhayapala (1), Wen Zhang (1,2) and Prasanga N. Samarasinghe (1). (1) Audio & Acoustic Signal Processing Group, College of Engineering and Computer Science, Australian National University, Canberra 2601, Australia. (2) Center of Intelligent Acoustics and Immersive Communications, School of Marine Science and Technology, Northwestern Polytechnical University, Xi'an 710072, China. (*) Corresponding author. Received: 28 February 2019; Accepted: 20 March 2019; Published: 25 March 2019. Abstract: In this paper, we investigate the maximum active noise control performance over a three-dimensional (3-D) spatial space, for a given set of secondary sources in a particular environment. We first formulate the spatial active noise control (ANC) problem in a 3-D room. Then we discuss a wave-domain least squares method by matching the secondary noise field to the primary noise field in the wave domain. Furthermore, we extract the subspace from wave-domain coefficients of the secondary paths and propose a subspace method by matching the secondary noise field to the projection of primary noise field in the subspace. Simulation results demonstrate the effectiveness of the proposed algorithms by comparison between the wave-domain least squares method and the subspace method, more specifically the energy of the loudspeaker driving signals, noise reduction inside the region, and residual noise field outside the region. We also investigate the ANC performance under different loudspeaker configurations and noise source positions.

I2T2I: Learning Text to Image Synthesis with Textual Data Augmentation

I2T2I: LEARNING TEXT TO IMAGE SYNTHESIS WITH TEXTUAL DATA AUGMENTATION. Hao Dong, Jingqing Zhang, Douglas McIlwraith, Yike Guo. Data Science Institute, Imperial College London. ABSTRACT: Translating information between text and image is a fundamental problem in artificial intelligence that connects natural language processing and computer vision. In the past few years, performance in image caption generation has seen significant improvement through the adoption of recurrent neural networks (RNN). Meanwhile, text-to-image generation has begun to generate plausible images using datasets of specific categories like birds and flowers. We’ve even seen image generation from multi-category datasets such as the Microsoft Common Objects in Context (MSCOCO) through the use of generative adversarial networks (GANs). Synthesizing objects with a complex shape, however, is still challenging. For example, animals and humans have many degrees of freedom, which means that they can take on many complex shapes. We propose a new training method called Image-Text-Image (I2T2I) which integrates text-to-image and image-to-text (image captioning) synthesis to improve the performance of text-to-image synthesis. We demonstrate that I2T2I can generate better multi-category images using MSCOCO than the state-of-the-art. [Fig. 1: Comparing text-to-image synthesis with and without textual data augmentation. I2T2I synthesizes the human pose and bus color better. Columns: GAN-CLS, I2T2I. Input captions: "A yellow school bus parked in a parking lot."; "A man swinging a baseball bat over home plate."] [Body text, second column:] ... of text-to-image synthesis is still far from being solved. Recently, both fractionally-strided convolutional and con-

Noise Reduction Through Active Noise Control Using Stereophonic Sound for Increasing Quite Zone

Noise reduction through active noise control using stereophonic sound for increasing quite zone. Dongki Min (1); Junjong Kim (2); Sangwon Nam (3); Junhong Park (4). (1,2,3,4) Hanyang University, Korea. ABSTRACT: The low-frequency impact noise generated during machine operation is difficult to control by conventional active noise control. Active Noise Control (ANC) is a technique that cancels a noise source by destructive interference, generating an anti-phase control sound. Its efficiency is limited to a small space near the error microphones. At other locations, the noise level may increase due to the control sounds. In this study, an ANC method using stereophonic sound was investigated to reduce interior low-frequency noise and enlarge the quiet zone. The Distance Based Amplitude Panning (DBAP) algorithm, which is based on the distance between the virtual sound source and each speaker, was used to create a virtual sound source by adjusting the volume proportions of multiple speakers. The 3-dimensional sound ANC system was able to change the position of the virtual control source using the DBAP algorithm. The quiet zone was formed using a fixed multi-speaker system for various locations of noise sources. Keywords: Active noise control, stereophonic sound. I-INCE Classification of Subjects Number(s): 38.2. 1. INTRODUCTION: Noise reduction has become a major concern as living conditions improve. There are passive and active control methods for noise reduction. Passive noise control uses sound absorption materials, which are effective at high frequencies. Active Noise Control (ANC), which generates an anti-phase control sound, is able to reduce low-frequency noise.

Yoshua Bengio

Yoshua Bengio. Département d’informatique et recherche opérationnelle, Université de Montréal. Canada Research Chair on Statistical Learning Algorithms. Phone: 514-343-6804. Fax: 514-343-5834. www.iro.umontreal.ca/~bengioy
Titles and Distinctions
• Full Professor, Université de Montréal, since 2002. Previously associate professor (1997-2002) and assistant professor (1993-1997).
• Canada Research Chair on Statistical Learning Algorithms since 2000 (tier 2: 2000-2005, tier 1 since 2006).
• NSERC-Ubisoft Industrial Research Chair, since 2011. Previously NSERC-CGI Industrial Chair, 2005-2010.
• Recipient of the ACFAS Urgel-Archambault 2009 prize (covering physics, mathematics, computer science, and engineering).
• Fellow, CIFAR (Canadian Institute For Advanced Research), since 2004. Co-director of the CIFAR NCAP program since 2014.
• Member of the board of the Neural Information Processing Systems (NIPS) Foundation, since 2010.
• Action Editor, Journal of Machine Learning Research (JMLR), Neural Computation, Foundations and Trends in Machine Learning, and Computational Intelligence. Member of the 2012 editor-in-chief nominating committee for JMLR.
• Fellow, CIRANO (Centre Inter-universitaire de Recherche en Analyse des Organisations), since 1997.
• Previously Associate Editor, Machine Learning, IEEE Trans. on Neural Networks.
• Founder (1993) and head of the Laboratoire d’Informatique des Systèmes Adaptatifs (LISA), and the Montreal Institute for Learning Algorithms (MILA), currently including 5 faculty, 40 students, 5 post-docs, 5 researchers on staff, and numerous visitors. This is probably the largest concentration of deep learning researchers in the world (around 60 researchers).
• Member of the board of the Centre de Recherches Mathématiques, UdeM, 1999-2009. Member of the Awards Committee of the Canadian Association for Computer Science (2012-2013).