Analysing and Improving the Knowledge-Based Fast Evolutionary MCTS Algorithm

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications

-

Retro Gaming

ATARI - RETRO CONSOLE FLASHBACK 8 GOLD ACTIVISION – 130 GAMES Bring back your best memories! With 130 video game classics, including 39 Activision games, this Atari Flashback 8 Gold Activision edition will take you back to the good times of retro gaming. With the wireless controllers or your old classic Atari controllers, you only need to plug the console into your television and you are ready for action! FEATURES: • 130 classic games, including the best hits of the Atari 2600 console and 39 Activision titles • Plug & Play • Includes two 2.4G wireless controllers • Save, Resume, and Rewind functions • 720p HD output • HDMI port • FULL HD screen Includes the cult games: • Space Invaders • Centipede • Millipede • Pitfall! • River Raid • Kaboom! • Spider Fighter LIST OF INCLUDED GAMES LIST OF ACTIVISION GAMES REF Adventure Black Jack Football Radar Lock Stellar Track™ Video Chess Beamrider Laser Blast Asteroids® Bowling Frog Pond Realsports® Baseball Street Racer Video Pinball Boxing Megamania JVCRETR0124 Centipede ® Breakout® Frogs and Flies Realsports® Basketball Submarine Commander Warlords® Bridge Oink! Kaboom! Canyon Bomber™ Fun with Numbers Realsports® Soccer Super Baseball Yars’ Return Checkers Pitfall! Missile Command® Championship Golf Realsports® Volleyball Super Breakout® Chopper Command Plaque Attack Pitfall! Soccer™ Gravitar® Return to Haunted Save Mary Cosmic Commuter Pressure Cooker EAN River Raid Circus Atari™ Hangman House Super Challenge™ Football Crackpots Private Eye Yars’ Revenge® Combat® -

Downloaded April 22, 2006

SIX DECADES OF GUIDED MUNITIONS AND BATTLE NETWORKS: PROGRESS AND PROSPECTS Barry D. Watts Center for Strategic and Budgetary Assessments, Thinking Smarter About Defense www.csbaonline.org Six Decades of Guided Munitions and Battle Networks: Progress and Prospects by Barry D. Watts Center for Strategic and Budgetary Assessments March 2007 ABOUT THE CENTER FOR STRATEGIC AND BUDGETARY ASSESSMENTS The Center for Strategic and Budgetary Assessments (CSBA) is an independent, nonprofit, public policy research institute established to make clear the inextricable link between near-term and long-range military planning and defense investment strategies. CSBA is directed by Dr. Andrew F. Krepinevich and funded by foundations, corporations, government, and individual grants and contributions. This report is one in a series of CSBA analyses on the emerging military revolution. Previous reports in this series include The Military-Technical Revolution: A Preliminary Assessment (2002), Meeting the Anti-Access and Area-Denial Challenge (2003), and The Revolution in War (2004). The first of these, on the military-technical revolution, reproduces the 1992 Pentagon assessment that precipitated the 1990s debate in the United States and abroad over revolutions in military affairs. Many friends and professional colleagues, both within CSBA and outside the Center, have contributed to this report. Those who made the most substantial improvements to the final manuscript are acknowledged below. However, the analysis and findings are solely the responsibility of the author and CSBA. 1667 K Street, NW, Suite 900 Washington, DC 20036 (202) 331-7990 CONTENTS ACKNOWLEDGEMENTS, SUMMARY, GLOSSARY, I. INTRODUCTION, Guided Munitions: Origins in the 1940s, Cold War Developments and Prospects -

Atari Flashback Classics Game List

Title: Atari Flashback Classics Volume 1 & Volume 2 Genre: Arcade (Action, Adventure, Casual) Players: 1-4 (Local and Online) Platforms: Xbox One, PlayStation 4 Publisher: Atari Developer: Code Mystics Release Date: October 2016 Distribution: AT Games Price: $19.99 Target Rating: Everyone (PEGI 7) Website: www.AtariFlashBackClassics.com OVERVIEW: Relive the golden age of video games on the latest generation consoles. Each volume of Atari® Flashback Classics brings 50 iconic Atari games, complete with multiplayer, global leaderboards, and much more. From arcade legends to 2600 classics, this massive library includes some of the most popular Atari titles ever released, including Asteroids®, Centipede®, Missile Command®, and many more. Intuitive interface design delivers the responsive feel of the originals on modern controllers. All new achievements, leaderboards and social features, combined with an amazing archive of classic artwork make Atari Flashback Classics the ultimate Atari collection! FEATURES: 100 Classic Atari 2600 and Arcade Games: Each volume contains 50 seminal Atari titles including Asteroids®, Centipede®, Missile Command®, Tempest®, Warlords®, and many more (full list on following page). Online and Local Multiplayer: Battle your friends for supremacy online or at home. Online Leaderboards: Compare your high scores with players from around the world. Brand New User-Interface: A highly customizable user-interface brings the classic arcade experience to home console controllers. Original Cabinet and Box Art: Relive the glory days with a massive library of period- accurate cabinet, promotional, and box art. Volume One Includes the following Games: 1. 3-D Tic-Tac-Toe (2600) 18. Home Run (2600) 36. Sprint Master (2600) 2. Air-Sea Battle (2600) 19. -

Classic Gaming Expo 2005!!! Wow

San Francisco, California, August 20-21, 2005 $5.00 Welcome to Classic Gaming Expo 2005!!! Wow... eight years! It's truly amazing to think that we've been doing this show, and trying to come up with a fresh introduction for this program, for eight years now. Many things have changed over the years - not the least of which has been ourselves. Eight years ago John was a cable splicer for the New York phone company, which was then called NYNEX, and was happily and peacefully married to his wife Beverly, who had no idea what she was in for over the next eight years. Today, John's still married to Beverly, though not quite as peacefully with the addition of two sons to his family. He's also in a supervisory position with Verizon - the new New York phone company. At the time of our first show, Sean was seven years into a thirteen-year stint with a convenience store he owned in Chicago. He was married to Melissa and they had two daughters. Eight years later, Sean has sold the convenience store and opened a videogame store - something of a life-long dream (or was that a nightmare?). Sean's family has doubled in size and now consists of four daughters. Joe and Liz have probably had the fewest changes in their lives over the years, but that's about to change. Joe has been working for a firm that manages and maintains database software for pharmaceutical companies for the past twenty-some years. While there haven't been any additions to their family, Joe is about to leave his job and pursue his dream of owning his own business - and what would be more appropriate than a videogame store for someone whose life has been devoted to collecting both the games themselves and information about them for at least as many years? Despite these changes in our lives, we once again find ourselves gathering to pay tribute to an industry for which our admiration will never change. -

Owner's Manual Model Cx-2600

OWNER'S MANUAL MODEL CX-2600 1 Contents Getting Started ……………………….. 3 Main Menu Explanation ……………………….. 5 Game Listing ……………………….. 6 Credits ……………………….. 106 Technical Support ……………………….. 107 Terms And Conditions ……………………….. 108 2 Getting Started Health Warnings and Precautions • Video games may cause a small percentage of individuals to experience seizures or have momentary loss of consciousness when viewing certain kinds of flashing lights or patterns on a television screen. Certain conditions may induce epileptic symptoms even in persons with no prior history of seizures or epilepsy. • If you or anyone in your family has an epileptic condition, consult your physician prior to game play. • It is recommended that parents observe their children when their children play video games. • If you or your child experiences any of the following symptoms: dizziness, altered vision, eye or muscle twitching, involuntary movements, loss of awareness, disorientation, or convulsions, discontinue use immediately. • Some people may experience fatigue or discomfort after playing for a long time. If your hands or arms become tired or uncomfortable during game play, stop playing immediately. • If you continue to experience soreness or discomfort during or after play, stop playing and consult your physician. • If your hands, wrists or arms have been injured or strained in other activities, use of your system could aggravate the condition. As necessary, consult your physician before playing video games. • Do not sit or stand too close to the television. • Do not play if you are tired or need sleep. • Always play in a well-lit room. • Be sure to take a 10- to 15-minute break, at least every hour, while playing. -

Game Title FEATURED Games Platform

FEATURED Games Game Title platform Adventure Console Aladdin Console Asteroids® Arcade Asteroids® Deluxe Arcade Bad Dudes vs. Dragon Ninja Arcade Black Widow Arcade Bloody Wolf / Battle Rangers Arcade Break Thru Arcade BurgerTime™ Arcade Burnin’ Rubber™ Arcade Centipede® Arcade Choplifter!™ Console Crystal Castles® Arcade Donald in Maui Mallard Console Express Raider / Western Express Arcade Fighter’s History Arcade Fighter’s History Dynamite / Karnov’s Revenge Arcade Fix-It Felix, Jr. Arcade Gravitar® Arcade Heavy Barrel Arcade Joe & Mac 2: Lost in the tropics Arcade Joe & Mac Returns Arcade Joe & Mac: Caveman Ninja Arcade Jumpman Junior™ Console Karate Champ Arcade Lock ‘n’ Chase Arcade Lunar Lander Arcade Magical Drop / Chain Reaction Arcade Magical Drop III Arcade Major Havoc Arcade Millipede® Arcade Missile Command® Arcade Peter Pepper’s Ice Cream Factory Arcade Pong® Arcade Side Pocket Arcade Super Breakout® Arcade Super Burger Time Arcade Super Real Darwin Arcade Super Star Wars Console Super Star Wars: Return of the Jedi Console Super Star Wars: The Empire Strikes Back Console Tempest® Arcade Tetris® Console Tetris® Plus 2 Arcade The Astyanax Arcade The Jungle Book Console The Lion King Console Tron Arcade Warlords® Arcade Yars’ Revenge Console FULL Games LIST No. 
Game Title platform 1 3-D Tic-Tac-Toe Console 2 64th Street - A Detective Story Arcade 3 8 Eyes Console 4 Act-Fancer Cybernetick Hyper Weapon Arcade 5 Adventure Console 6 Adventure II Console 7 Air Cavalry Console 8 Air-Sea Battle Console 9 Aladdin Console 10 Alphabet Zoo™ Console 11 Antarctic Adventure™ Console 12 Apocalypse II Console 13 Aquattack™ Console 14 Aquaventure Console 15 Argus Arcade 16 Artillery Duel™ Console 17 Asteroids® Arcade 18 Asteroids® Console 19 Asteroids® Deluxe Arcade 20 Astro Fantasia Arcade 21 Atari Baseball Arcade 22 Atari Basketball Arcade 23 Atari Football Arcade 24 Atari Soccer Arcade 25 Atari Video Cube Console 26 Avalanche Arcade 27 Avenging Spirit Arcade 28 Backgammon Console 29 Bad Dudes vs. -

2005 Minigame Multicart 32 in 1 Game Cartridge 3-D Tic-Tac-Toe Acid Drop Actionauts Activision Decathlon, the Adventure A

2005 Minigame Multicart 32 in 1 Game Cartridge 3-D Tic-Tac-Toe Acid Drop Actionauts Activision Decathlon, The Adventure Adventures of TRON Air Raid Air Raiders Airlock Air-Sea Battle Alfred Challenge (France) (Unl) Alien Alien Greed Alien Greed 2 Alien Greed 3 Allia Quest (France) (Unl) Alligator People, The Alpha Beam with Ernie Amidar Aquaventure Armor Ambush Artillery Duel AStar Asterix Asteroid Fire Asteroids Astroblast Astrowar Atari Video Cube A-Team, The Atlantis Atlantis II Atom Smasher A-VCS-tec Challenge AVGN K.O. Boxing Bachelor Party Bachelorette Party Backfire Backgammon Bank Heist Barnstorming Base Attack Basic Math BASIC Programming Basketball Battlezone Beamrider Beany Bopper Beat 'Em & Eat 'Em Bee-Ball Berenstain Bears Bermuda Triangle Berzerk Big Bird's Egg Catch Bionic Breakthrough Blackjack BLiP Football Bloody Human Freeway Blueprint BMX Air Master Bobby Is Going Home Boggle Boing! Boulder Dash Bowling Boxing Brain Games Breakout Bridge Buck Rogers - Planet of Zoom Bugs Bugs Bunny Bump 'n' Jump Bumper Bash BurgerTime Burning Desire (Australia) Cabbage Patch Kids - Adventures in the Park Cakewalk California Games Canyon Bomber Carnival Casino Cat Trax Cathouse Blues Cave In Centipede Challenge Challenge of.... Nexar, The Championship Soccer Chase the Chuckwagon Checkers Cheese China Syndrome Chopper Command Chuck Norris Superkicks Circus Atari Climber 5 Coco Nuts Codebreaker Colony 7 Combat Combat Two Commando Commando Raid Communist Mutants from Space CompuMate Computer Chess Condor Attack Confrontation Congo Bongo -

Dp Guide Lite Us

Atari 2600 USA Digital Press GB I GB I GB I 3-D Tic-Tac-Toe/Atari R2 Beat 'Em & Eat 'Em/Lady in Wadin R7 Chuck Norris Superkicks/Spike's Pe R7 3-D Tic-Tac-Toe/Sears R3 Berenstain Bears/Coleco R6 Circus/Sears R3 A Game of Concentration/Atari R3 Bermuda Triangle/Data Age R2 Circus Atari/Atari R1 Action Pak/Atari R6 Berzerk/Atari R1 Coconuts/Telesys R3 Adventure/Atari R1 Berzerk/Sears R3 Codebreaker/Atari R2 Adventure/Sears R3 Big Bird's Egg Catch/Atari R2 Codebreaker/Sears R3 Adventures of Tron/M Network R2 Blackjack/Atari R1 Color Bar Generator/Videosoft R9 Air Raid/Men-a-Vision R10 Blackjack/Sears R2 Combat/Atari R1 Air Raiders/M Network R2 Blue Print/CBS Games R3 Commando/Activision R3 Airlock/Data Age R2 BMX Airmaster/Atari R10 Commando Raid/US Games R3 Air-Sea Battle/Atari R1 BMX Airmaster/TNT Games R4 Communist Mutants From Space/St R3 Alien/20th Cent Fox R3 Bogey Blaster/Telegames R3 Condor Attack/Ultravision R8 Alpha Beam with Ernie/Atari R3 Boing!/First Star R7 Congo Bongo/Sega R2 Amidar/Parker Bros R2 Bowling/Atari R1 Cookie Monster Munch/Atari R2 Arcade Golf/Sears R3 Bowling/Sears R2 Copy Cart/Vidco R8 Arcade Pinball/Sears R3 Boxing/Activision R1 Cosmic Ark/Imagic R2 Armor Ambush/M Network R2 Brain Games/Atari R2 Cosmic Commuter/Activision R4 Artillery Duel/Xonox R6 Brain Games/Sears R3 Cosmic Corridor/Zimag R6 Artillery Duel/Chuck Norris SuperKi R4 Breakaway IV/Sears R3 Cosmic Creeps/Telesys R3 Artillery Duel/Ghost Manor/Xonox R7 Breakout/Atari R1 Cosmic Swarm/CommaVid -

Atari IP Catalog 2016 IP List (Highlighted Links Are Included in Deck)

Atari IP Catalog 2016 IP List (Highlighted Links are Included in Deck) 3D Asteroids Atari Video Cube Dodge ’Em Meebzork Realsports Soccer Stock Car * 3D Tic-Tac-Toe Avalanche * Dominos * Meltdown Realsports Tennis Street Racer A Game of Concentration Backgammon Double Dunk Micro-gammon Realsports Volleyball Stunt Cycle * Act of War: Direct Action Barroom Baseball Drag Race * Millipede Rebound * Submarine Commander Act of War: High Treason Basic Programming Fast Freddie * Mind Maze Red Baron * Subs * Adventure Basketball Fatal Run Miniature Golf Retro Atari Classics Super Asteroids & Missile Adventure II Basketbrawl Final Legacy Minimum Return to Haunted House Command Agent X * Bionic Breakthrough Fire Truck * Missile Command Roadrunner Super Baseball Airborne Ranger Black Belt Firefox * Missile Command 2 * RollerCoaster Tycoon Super Breakout Air-Sea Battle Black Jack Flag Capture Missile Command 3D Runaway * Super Bunny Breakout Akka Arrh * Black Widow * Flyball * Monstercise Saboteur Super Football Alien Brigade Boogie Demo Food Fight (Charley Chuck's) Monte Carlo * Save Mary Superbug * Alone In the Dark Booty Football Motor Psycho Scrapyard Dog Surround Alone in the Dark: Illumination Bowling Frisky Tom MotoRodeo Secret Quest Swordquest: Earthworld Alpha 1 * Boxing * Frog Pond Night Driver Sentinel Swordquest: Fireworld Anti-Aircraft * Brain Games Fun With Numbers Ninja Golf Shark Jaws * Swordquest: Waterworld Aquaventure Breakout Gerry the Germ Goes Body Off the Wall Shooting Arcade Tank * Asteroids Breakout * Poppin Orbit * Sky Diver -

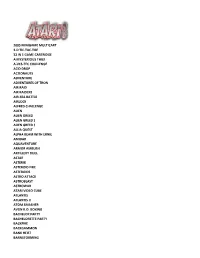

2005 Minigame Multicart 3-D Tic-Tac-Toe 32 in 1 Game Cartridge a Mysterious Thief A-Vcs-Tec Challenge Acid Drop Actionauts Adven

2005 MINIGAME MULTICART 3-D TIC-TAC-TOE 32 IN 1 GAME CARTRIDGE A MYSTERIOUS THIEF A-VCS-TEC CHALLENGE ACID DROP ACTIONAUTS ADVENTURE ADVENTURES OF TRON AIR RAID AIR RAIDERS AIR-SEA BATTLE AIRLOCK ALFRED CHALLENGE ALIEN ALIEN GREED ALIEN GREED 2 ALIEN GREED 3 ALLIA QUEST ALPHA BEAM WITH ERNIE AMIDAR AQUAVENTURE ARMOR AMBUSH ARTILLERY DUEL ASTAR ASTERIX ASTEROID FIRE ASTEROIDS ASTRO ATTACK ASTROBLAST ASTROWAR ATARI VIDEO CUBE ATLANTIS ATLANTIS II ATOM SMASHER AVGN K.O. BOXING BACHELOR PARTY BACHELORETTE PARTY BACKFIRE BACKGAMMON BANK HEIST BARNSTORMING BASE ATTACK BASIC MATH BASIC PROGRAMMING BASKETBALL BATTLEZONE BEAMRIDER BEANY BOPPER BEAT 'EM & EAT 'EM BEE-BALL BERENSTAIN BEARS BERMUDA TRIANGLE BIG BIRD'S EGG CATCH BIONIC BREAKTHROUGH BLACKJACK BLOODY HUMAN FREEWAY BLUEPRINT BMX AIR MASTER BOBBY IS GOING HOME BOGGLE BOING! BOULDER DASH BOWLING BOXING BRAIN GAMES BREAKOUT BRIDGE BUCK ROGERS - PLANET OF ZOOM BUGS BUGS BUNNY BUMP 'N' JUMP BUMPER BASH BURGERTIME BURNING DESIRE CABBAGE PATCH KIDS - ADVENTURES IN THE PARK CAKEWALK CALIFORNIA GAMES CANYON BOMBER CARNIVAL CASINO CAT TRAX CATHOUSE BLUES CAVE IN CENTIPEDE CHALLENGE CHAMPIONSHIP SOCCER CHASE THE CHUCK WAGON CHECKERS CHEESE CHINA SYNDROME CHOPPER COMMAND CHUCK NORRIS SUPERKICKS CIRCUS CLIMBER 5 COCO NUTS CODE BREAKER COLONY 7 COMBAT COMBAT TWO COMMANDO COMMANDO RAID COMMUNIST MUTANTS FROM SPACE COMPUMATE COMPUTER CHESS CONDOR ATTACK CONFRONTATION CONGO BONGO CONQUEST OF MARS COOKIE MONSTER MUNCH COSMIC ARK COSMIC COMMUTER COSMIC CREEPS COSMIC INVADERS COSMIC SWARM CRACK'ED CRACKPOTS -

You've Seen the Movie, Now Play The

“YOU’VE SEEN THE MOVIE, NOW PLAY THE VIDEO GAME”: RECODING THE CINEMATIC IN DIGITAL MEDIA AND VIRTUAL CULTURE Stefan Hall A Dissertation Submitted to the Graduate College of Bowling Green State University in partial fulfillment of the requirements for the degree of DOCTOR OF PHILOSOPHY May 2011 Committee: Ronald Shields, Advisor Margaret M. Yacobucci Graduate Faculty Representative Donald Callen Lisa Alexander © 2011 Stefan Hall All Rights Reserved iii ABSTRACT Ronald Shields, Advisor Although seen as an emergent area of study, the history of video games shows that the medium has had a longevity that speaks to its status as a major cultural force, not only within American society but also globally. Much of video game production has been influenced by cinema, and perhaps nowhere is this seen more directly than in the topic of games based on movies. Functioning as franchise expansion, spaces for play, and story development, film-to-game translations have been a significant component of video game titles since the early days of the medium. As the technological possibilities of hardware development continued in both the film and video game industries, issues of media convergence and divergence between film and video games have grown in importance. This dissertation looks at the ways that this connection was established and has changed by looking at the relationship between film and video games in terms of economics, aesthetics, and narrative. Beginning in the 1970s, or roughly at the time of the second generation of home gaming consoles, and continuing to the release of the most recent consoles in 2005, it traces major areas of intersection between films and video games by identifying key titles and companies to consider both how and why the prevalence of video games has happened and continues to grow in power. -

Instruction Manual Model No: Ar3220

INSTRUCTION MANUAL MODEL NO: AR3220 AtGames Digital Media, Inc. www.atgames.net IMPORTANT: READ BEFORE USE In very rare circumstances, some people may experience epileptic seizures when viewing flashing lights or patterns in our everyday life. Flashing lights and patterns are also common to almost any video game. Please consult your physician before playing ANY video game if you have had an epileptic condition or seizure OR if you experience any of the following while playing: altered vision, eye or muscle twitching, mental confusion or disorientation, loss of awareness of the surroundings, or involuntary movements. It is advised to take a 20-minute rest after 1 hour of continuous play. Classic Game Console Appearance and Key List The image below shows the location of the connectors and buttons. Each function is outlined below (the illustration is for reference only).
1 Power: Turn the game console’s power ON/OFF.
2 START (Original RESET Button): Press this button to begin or reset most games.
3 Difficulty Button - Left Player: Press this button to switch between one of two difficulty levels in most games.
4 Difficulty Button - Right Player: Press this button to switch between one of two difficulty
6 Left Player Game Controller Jack: Game controller connected to this jack controls games in 1-player games and controls the first player in 2-player games. Note that some 2-player games alternate use of the left player.
7 Right Player Game Controller Jack: Game controller connected to this jack controls the second player in 2-player games.
8 AC Adaptor Jack (DC 5V): The power adaptor plugs into this port, then into your AC outlet.