Randomness and Computation

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

“To Be a Good Mathematician, You Need Inner Voice”: “Огонёкъ” Met with Yakov Sinai, One of the World’s Most Renowned Mathematicians

“To be a good mathematician, you need inner voice.” “Огонёкъ” met with Yakov Sinai, one of the world’s most renowned mathematicians. As the new year begins, Russia intensifies the preparations for the International Congress of Mathematicians (ICM 2022), the main mathematical event of the near future. 1966 was the last time we welcomed the crème de la crème of mathematics in Moscow. “Огонёк” met with Yakov Sinai, one of the world’s top mathematicians, who has spoken at the ICM more than once, and found out what he thinks about order and chaos in the modern world. Committed to science. Yakov Sinai's close-up. One of the most eminent mathematicians of our time, Yakov Sinai has spent most of his life studying order and chaos, a research endeavor at the junction of probability theory, dynamical systems theory, and mathematical physics. Born into a family of Moscow scientists on September 21, 1935, Yakov Sinai graduated from the Department of Mechanics and Mathematics (‘Mekhmat’) of Moscow State University in 1957. Andrey Kolmogorov’s most famous student, Sinai contributed to a wealth of mathematical discoveries that bear his and his teacher’s names, such as Kolmogorov-Sinai entropy, Sinai’s billiards, Sinai’s random walk, and more. From 1998 to 2002, he chaired the Fields Committee, which awards one of the world’s most prestigious medals every four years. Yakov Sinai is a winner of nearly all coveted mathematical distinctions: the Abel Prize (an equivalent of the Nobel Prize for mathematicians), the Kolmogorov Medal, the Moscow Mathematical Society Award, the Boltzmann Medal, the Dirac Medal, the Dannie Heineman Prize, the Wolf Prize, the Jürgen Moser Prize, the Henri Poincaré Prize, and more.

Excerpts from Kolmogorov's Diary

Asia Pacific Mathematics Newsletter. Excerpts from Kolmogorov’s Diary. Translated by Fedor Duzhin. Introduction. At the age of 40, Andrey Nikolaevich Kolmogorov (1903–1987) began a diary. He wrote on the title page: “Dedicated to myself when I turn 80 with the wish of retaining enough sense by then, at least to be able to understand the notes of this 40-year-old self and to judge them sympathetically but strictly”. The notes from 1943–1945 were published in Russian in 2003, on the 100th anniversary of Kolmogorov's birth, as part of the opus Kolmogorov, a three-volume collection of articles about him. The following are translations of a few selected records from Kolmogorov’s diary (Volume 3, pages 27, 28, 36, 95). Sunday, 1 August 1943. New Moon 6:30 am. It is a little misty and yet a sunny morning. Pusya and Oleg have gone swimming while I stay home, not feeling very well (though my condition is improving). Anya has to work today, so she will not come. I’m feeling annoyed and ill at ease because of that (for the second time our Sunday “readings” will be conducted without Anya). Why begin this notebook now? There are two reasonable explanations: 1) I have long been attracted to the idea of a diary as a disciplining force. To write down what has been done and what changes are needed in one’s life and to control their implementation is by no means a new idea, but it’s equally relevant whether one is 16 or 40 years old.

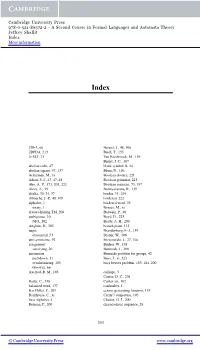

A Second Course in Formal Languages and Automata Theory Jeffrey Shallit Index More Information

Cambridge University Press 978-0-521-86572-2 - A Second Course in Formal Languages and Automata Theory, Jeffrey Shallit. Index.
2DFA, 66; 2DPDA, 213; 3-SAT, 21; abelian cube, 47; abelian square, 47, 137; Ackerman, M., xi; Adian, S. I., 43, 47, 48; Aho, A. V., 173, 201, 223; Alces, A., 29; alfalfa, 30, 34, 37; Allouche, J.-P., 48, 105; alphabet, 1 (unary, 1); always-halting TM, 209; ambiguous, 10 (NFA, 102); Angluin, D., 105; angst (existential, 54); antisymmetric, 92; assignment (satisfying, 20); automaton (pushdown, 11; synchronizing, 105; two-way, 66); Axelrod, R. M., 105; Bader, C., 138; balanced word, 137; Bar-Hillel, Y., 201; Barkhouse, C., xi; base alphabet, 4; Berman, P., 200; Berstel, J., 48, 106; Biedl, T., 135; Van Biesbrouck, M., 139; Birget, J.-C., 107; blank symbol, B, 14; Blum, N., 106; Boolean closure, 221; Boolean grammar, 223; Boolean matrices, 73, 197; Boonyavatana, R., 139; border, 35, 104; bordered, 222; bordered word, 35; Borges, M., xi; Borwein, P., 48; Boyd, D., 223; Brady, A. H., 200; branch point, 113; Brandenburg, F.-J., 139; Brauer, W., 106; Brzozowski, J., 27, 106; Bucher, W., 138; Buntrock, J., 200; Burnside problem for groups, 42; Buss, J., xi, 223; busy beaver problem, 183, 184, 200; calliope, 3; Cantor, D. C., 201; Cantor set, 102; cardinality, 1; census generating function, 133; Cerny’s conjecture, 105; Chaitin, G. J., 200; characteristic sequence, 28; chess,

Kolmogorov Complexity of Graphs John Hearn Harvey Mudd College

Claremont Colleges, Scholarship @ Claremont. HMC Senior Theses, HMC Student Scholarship, 2006. Kolmogorov Complexity of Graphs. John Hearn, Harvey Mudd College. Recommended Citation: Hearn, John, "Kolmogorov Complexity of Graphs" (2006). HMC Senior Theses. 182. https://scholarship.claremont.edu/hmc_theses/182 This Open Access Senior Thesis is brought to you for free and open access by the HMC Student Scholarship at Scholarship @ Claremont. It has been accepted for inclusion in HMC Senior Theses by an authorized administrator of Scholarship @ Claremont. For more information, please contact [email protected]. Applications of Kolmogorov Complexity to Graphs. John Hearn. Ran Libeskind-Hadas, Advisor. Michael Orrison, Reader. May, 2006. Department of Mathematics. Copyright © 2006 John Hearn. The author grants Harvey Mudd College the nonexclusive right to make this work available for noncommercial, educational purposes, provided that this copyright statement appears on the reproduced materials and notice is given that the copying is by permission of the author. To disseminate otherwise or to republish requires written permission from the author. Abstract: Kolmogorov complexity is a theory based on the premise that the complexity of a binary string can be measured by its compressibility; that is, a string’s complexity is the length of the shortest program that produces that string. We explore applications of this measure to graph theory. Contents: Abstract; Acknowledgments; 1 Introductory Material; 1.1 Definitions and Notation; 1.2 The Invariance Theorem; 1.3 The Incompressibility Theorem; 2 Graph Complexity and the Incompressibility Method; 2.1 Complexity of Labeled Graphs; 2.2 The Incompressibility Method

Compressing Information Kolmogorov Complexity Optimal Decompression

Compressing information. Almost everybody now is familiar with compressing/decompressing programs such as […]. A compressing program can be applied to any file and produces the “compressed version” of that file. If we are lucky, the compressed version is much shorter than the original one. However, no information is lost: the decompression program can be applied to the compressed version to get the original file. [Question: A software company advertises a compressing program and claims that this program can compress any sufficiently long file to at most 90% of its original size. Would you buy this program?] How does compression work? A compression program tries to find some regularities in a file which allow one to give a description of the file that is shorter than the file itself; the decompression program reconstructs the file using this description.

Optimal decompression algorithm. The definition of $K_U$ depends on $U$. For the trivial decompression algorithm $U(y) = y$ we have $K_U(x) = |x|$. One can try to find better decompression algorithms, where “better” means “giving smaller complexities”. However, the number of short descriptions is limited: there are fewer than $2^n$ strings of length less than $n$. Therefore, for any fixed decompression algorithm the number of words whose complexity is less than $n$ does not exceed $2^n - 1$. One may conclude that there is no “optimal” decompression algorithm, because we can assign short descriptions to some strings only by taking them away from other strings. However, Kolmogorov made a simple but crucial observation: there is an asymptotically optimal decompression algorithm. Definition 1. An algorithm $U$ is asymptotically not …
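The counting argument in this excerpt can be checked numerically. The sketch below is illustrative only: the helper names `count_strings_shorter_than` and `compressed_length` are hypothetical, and Python's standard `zlib` compressor is used as a computable stand-in for a decompression algorithm, not as the excerpt's $K_U$.

```python
import os
import zlib


def count_strings_shorter_than(n: int) -> int:
    """Count binary strings of length 0, 1, ..., n - 1."""
    return sum(2 ** k for k in range(n))


def compressed_length(data: bytes) -> int:
    """Bytes used by zlib at its highest level: a computable stand-in, not K_U itself."""
    return len(zlib.compress(data, 9))


n = 10
short_descriptions = count_strings_shorter_than(n)  # 2**n - 1 candidates
strings_of_length_n = 2 ** n                        # strings that need a description

regular = b"ab" * 5000            # 10,000 bytes with an obvious regularity
random_like = os.urandom(10_000)  # 10,000 bytes from the OS entropy source

print(short_descriptions, strings_of_length_n)
print(compressed_length(regular), compressed_length(random_like))
```

Because there are fewer descriptions shorter than $n$ than there are strings of length $n$, at least one string of each length cannot be shortened at all. The same count answers the advertiser's question: a lossless program cannot shrink every sufficiently long file.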

Fundamental Theorems in Mathematics

SOME FUNDAMENTAL THEOREMS IN MATHEMATICS. OLIVER KNILL. Abstract. An expository hitchhikers guide to some theorems in mathematics. Criteria for the current list of 243 theorems are whether the result can be formulated elegantly, whether it is beautiful or useful and whether it could serve as a guide [6] without leading to panic. The order is not a ranking but follows a time-line of when things were written down. Since [556] stated “a mathematical theorem only becomes beautiful if presented as a crown jewel within a context”, we try sometimes to give some context. Of course, any such list of theorems is a matter of personal preferences, taste and limitations. The number of theorems is arbitrary; the initial obvious goal was 42, but that number was eventually surpassed, as it is hard to stop once started. As a compensation, there are 42 “tweetable” theorems with included proofs. More comments on the choice of the theorems are included in an epilogue. For literature on general mathematics, see [193, 189, 29, 235, 254, 619, 412, 138], for history [217, 625, 376, 73, 46, 208, 379, 365, 690, 113, 618, 79, 259, 341], for popular, beautiful or elegant things [12, 529, 201, 182, 17, 672, 673, 44, 204, 190, 245, 446, 616, 303, 201, 2, 127, 146, 128, 502, 261, 172]. For comprehensive overviews in large parts of mathematics, [74, 165, 166, 51, 593] or predictions on developments [47]. For reflections about mathematics in general [145, 455, 45, 306, 439, 99, 561]. Encyclopedic source examples are [188, 705, 670, 102, 192, 152, 221, 191, 111, 635].

A Modern History of Probability Theory

A Modern History of Probability Theory. Kevin H. Knuth, Depts. of Physics and Informatics, University at Albany (SUNY), Albany NY USA. 4/29/2016, Knuth, Bayes Forum. A Long History: The History of Probability Theory, Anthony J.M. Garrett, MaxEnt 1997, pp. 223-238. Hájek, Alan, "Interpretations of Probability", The Stanford Encyclopedia of Philosophy (Winter 2012 Edition), Edward N. Zalta (ed.), URL = <http://plato.stanford.edu/archives/win2012/entries/probability-interpret/>. “… la théorie des probabilités n'est, au fond, que le bon sens réduit au calcul …” (“… the theory of probabilities is basically just common sense reduced to calculation …”) Pierre Simon de Laplace, Théorie Analytique des Probabilités. Taken from Harold Jeffreys, “Theory of Probability”. “The terms certain and probable describe the various degrees of rational belief about a proposition which different amounts of knowledge authorise us to entertain. All propositions are true or false, but the knowledge we have of them depends on our circumstances; and while it is often convenient to speak of propositions as certain or probable, this expresses strictly a relationship in which they stand to a corpus of knowledge, actual or hypothetical, and not a characteristic of the propositions in themselves. A proposition is capable at the same time of varying degrees of this relationship, depending upon the knowledge to which it is related, so that it is without significance to call a proposition probable unless we specify the knowledge to which we are relating it.” John Maynard Keynes

The Folklore of Sorting Algorithms

IJCSI International Journal of Computer Science Issues, Vol. 4, No. 2, 2009, p. 25. ISSN (Online): 1694-0784, ISSN (Print): 1694-0814. The Folklore of Sorting Algorithms. Dr. Santosh Khamitkar (1), Parag Bhalchandra (2), Sakharam Lokhande (2), Nilesh Deshmukh (2). School of Computational Sciences, Swami Ramanand Teerth Marathwada University, Nanded (MS) 431605, India. Email: (1) [email protected], (2) [email protected]

Abstract. The objective of this paper is to review the folklore knowledge seen in research work devoted on synthesis, optimization, and effectiveness of various sorting algorithms. We will examine sorting algorithms in the folklore lines and try to discover the tradeoffs between folklore and theorems. Finally, the folklore knowledge on complexity values of the sorting algorithms will be considered, verified and subsequently converged in to theorems. Key words: Folklore, Algorithm analysis, Sorting algorithm, Computational Complexity notations.

1. Introduction. Folklore is the traditional beliefs, legend and customs, current among people. … progress. Every researcher attempted optimization in past has found stuck to his / her observations regarding sorting experiments and produced his / her own results. Since these results were specific to software and hardware environment therein, we found abundance in the complexity analysis which possibly could be thought as the folklore knowledge. We tried to review this folklore knowledge in past research work and came to a conclusion that it was mainly devoted on synthesis, optimization, effectiveness in working styles of various sorting algorithms. Our work is not mere commenting earlier work rather we are putting the knowledge embedded in sorting folklore with some different and interesting view so that students, teachers and researchers can easily understand them. Synthesis, in terms of folklore and theorems, hereupon

Biography of N. N. Luzin

BIOGRAPHY OF N. N. LUZIN (1883-1950). http://theor.jinr.ru/~kuzemsky/Luzinbio.html Born December 09, 1883, Irkutsk, Russia. Died January 28, 1950, Moscow, Russia. Biographic Data of N. N. Luzin: Nikolai Nikolaevich Luzin (also spelled Lusin; Russian: Николай Николаевич Лузин) was a Soviet/Russian mathematician known for his work in descriptive set theory and aspects of mathematical analysis with strong connections to point-set topology. He was the co-founder of "Luzitania" (together with professor Dimitrii Egorov), a close group of young Moscow mathematicians of the first half of the 1920s. This group consisted of highly talented and enthusiastic members who later formed the core of the famous Moscow school of mathematics. They adopted his set-theoretic orientation, and went on to apply it in other areas of mathematics. Luzin started studying mathematics in 1901 at Moscow University, where his advisor was professor Dimitrii Egorov (1869-1931). Professor Dimitrii Fedorovich Egorov was a great scientist and talented teacher; in addition he was a person of very high moral principles. He was a Russian and Soviet mathematician known for significant contributions to the areas of differential geometry and mathematical analysis. Egorov was a devout and openly practicing member of the Russian Orthodox Church and an active parish worker. This activity was the reason for his conflicts with the Soviet authorities after 1917 and finally led to his arrest and exile to Kazan (1930), where he died of severe cancer. From 1910 to 1914 Luzin studied at Göttingen, where he was influenced by Edmund Landau. He then returned to Moscow and received his Ph.D.

Inner Simplicity Vs. Outer Simplicity

Inner simplicity vs. outer simplicity. Étienne Ghys. Editors' note: The text is an edited transcript of the author's conference talk. For me, mathematics is just about understanding. And understanding is a personal and private feeling. However, to appreciate and express this feeling, you need to communicate with others: you need to use language. So there are necessarily two aspects in mathematics: one is very personal, emotional and internal, and the other is more public and external. Today I want to express this very naïve idea for mathematicians that we should distinguish between two kinds of simplicities. Something could be very simple for me, in my mind, and in my way of knowing mathematics, and yet be very difficult to articulate or write down in a mathematical paper. And conversely, something can be very easy to write down or say in just one sentence of English or French or whatever, and nevertheless be all but completely inaccessible to my mind. This basic distinction is something which I believe to be classical but, nevertheless, we mathematicians conflate the two. We keep forgetting that writing mathematics is not the same as understanding mathematics. Let me begin with a memory that I have from when I was a student a long time ago. I was reading a book by a very famous French mathematician, Jean-Pierre Serre. Here is the cover of the book (Figure 1). For many years I was convinced that the title of the book was a joke. How else, I wondered, can these algebras be complex and simple at the same time? For mathematicians, of course, the words ‘complex’ and ‘semisimple’ have totally different meanings than their everyday ones.

Randomized Algorithm

Randomized Algorithm, Tutorial I, Sep. 30 2008. Your TA: Abner C.Y. Huang [email protected]
Think about Randomness: Determinism vs. Randomness
Randomness in Real World
Joseph Louis Lagrange's deterministic universe
● offered the most comprehensive treatment of classical mechanics since Newton and formed a basis for the development of mathematical physics in the nineteenth century.
● Werner Heisenberg: Heisenberg's Uncertainty Principle
● Albert Einstein: God doesn't play dice with the universe.
Randomness in Mathematics
● Pascal was a mathematician of the first order. He helped create a major new area of research, probability theory, with Pierre de Fermat.
Pascal's triangle
Source of Probability theory: Gambling
John Maynard Keynes: The long run is a misleading guide to current affairs. In the long run we are all dead.
● He received his Ph.D. in mathematics at King’s College, Cambridge.
● His thesis is about logic from a probabilistic viewpoint.
● Keynes published his Treatise on Probability in 1921, a notable contribution to the philosophical and mathematical underpinnings of probability theory, championing the important view that probabilities were no more or less than truth values intermediate between simple truth and falsity.
Epistemology
● Bayesians and frequentists interpret the idea of probability differently.
– Frequentist: an event's probability is the limit of its relative frequency in a large number of trials.
● i.e., parameters are constants.
– Bayesian: 'probability' should be interpreted as degree of believability
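The frequentist reading in these slides, probability as the limit of relative frequency over many trials, can be illustrated with a small simulation. This is a minimal sketch under stated assumptions: `relative_frequency` is a hypothetical helper, the event is a simulated biased coin, and the fixed seed is there only to make runs repeatable.

```python
import random


def relative_frequency(trials: int, p: float = 0.5, seed: int = 0) -> float:
    """Fraction of `trials` simulated coin flips that come up heads with bias p."""
    rng = random.Random(seed)  # private generator so the global state is untouched
    heads = sum(1 for _ in range(trials) if rng.random() < p)
    return heads / trials


# The estimate should settle near p as the number of trials grows.
for trials in (10, 1_000, 100_000):
    print(trials, relative_frequency(trials))
```

In this picture the bias p is a fixed constant and only the sample varies, which matches the slide's remark that parameters are constants for the frequentist.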

Issue 93 ISSN 1027-488X

NEWSLETTER OF THE EUROPEAN MATHEMATICAL SOCIETY. September 2014, Issue 93, ISSN 1027-488X. Interview: Yakov Sinai. Features: Mathematical Billiards and Chaos; About ABC. Societies: The Catalan Mathematical Society. Photograph taken by Håkon Mosvold Larsen/NTB scanpix. American Mathematical Society. HILBERT’S FIFTH PROBLEM AND RELATED TOPICS, Terence Tao, University of California. In the fifth of his famous list of 23 problems, Hilbert asked if every topological group which was locally Euclidean was in fact a Lie group. Through the work of Gleason, Montgomery-Zippin, Yamabe, and others, this question was solved affirmatively. Subsequently, this structure theory was used to prove Gromov’s theorem on groups of polynomial growth, and more recently in the work of Hrushovski, Breuillard, Green, and the author on the structure of approximate groups. In this graduate text, all of this material is presented in a unified manner. Graduate Studies in Mathematics, Vol. 153, Aug 2014, 338pp, 9781470415648, Hardback, €63.00. MATHEMATICAL METHODS IN QUANTUM MECHANICS: With Applications to Schrödinger Operators, Second Edition, Gerald Teschl, University of Vienna. Quantum mechanics and the theory of operators on Hilbert space have been deeply linked since their beginnings in the early twentieth century. States of a quantum system correspond to certain elements of the configuration space and observables correspond to certain operators on the space. This book is a brief, but self-contained, introduction to the mathematical methods of quantum mechanics, with a view towards applications to Schrödinger operators. Graduate Studies in Mathematics, Vol. 157, Nov 2014, 356pp, 9781470417048, Hardback, €61.00. MATHEMATICAL UNDERSTANDING OF NATURE: Essays on Amazing Physical Phenomena and their Understanding by Mathematicians, V.