Inner Simplicity Vs. Outer Simplicity



Recommended publications


“To Be a Good Mathematician, You Need Inner Voice”: “Огонёкъ” Met with Yakov Sinai, One of the World’s Most Renowned Mathematicians

“To be a good mathematician, you need inner voice.” “ОгонёкЪ” met with Yakov Sinai, one of the world’s most renowned mathematicians. As the new year begins, Russia intensifies the preparations for the International Congress of Mathematicians (ICM 2022), the main mathematical event of the near future. 1966 was the last time we welcomed the crème de la crème of mathematics in Moscow. “Огонёк” met with Yakov Sinai, one of the world’s top mathematicians who spoke at ICM more than once, and found out what he thinks about order and chaos in the modern world. Committed to science: Yakov Sinai's close-up. One of the most eminent mathematicians of our time, Yakov Sinai has spent most of his life studying order and chaos, a research endeavor at the junction of probability theory, dynamical systems theory, and mathematical physics. Born into a family of Moscow scientists on September 21, 1935, Yakov Sinai graduated from the department of Mechanics and Mathematics (‘Mekhmat’) of Moscow State University in 1957. Andrey Kolmogorov’s most famous student, Sinai contributed to a wealth of mathematical discoveries that bear his and his teacher’s names, such as Kolmogorov-Sinai entropy, Sinai’s billiards, Sinai’s random walk, and more. From 1998 to 2002, he chaired the Fields Committee which awards one of the world’s most prestigious medals every four years. Yakov Sinai is a winner of nearly all coveted mathematical distinctions: the Abel Prize (an equivalent of the Nobel Prize for mathematicians), the Kolmogorov Medal, the Moscow Mathematical Society Award, the Boltzmann Medal, the Dirac Medal, the Dannie Heineman Prize, the Wolf Prize, the Jürgen Moser Prize, the Henri Poincaré Prize, and more.

Excerpts from Kolmogorov's Diary

Asia Pacific Mathematics Newsletter. Excerpts from Kolmogorov’s Diary, translated by Fedor Duzhin. Introduction: At the age of 40, Andrey Nikolaevich Kolmogorov (1903–1987) began a diary. He wrote on the title page: “Dedicated to myself when I turn 80 with the wish of retaining enough sense by then, at least to be able to understand the notes of this 40-year-old self and to judge them sympathetically but strictly”. The notes from 1943–1945 were published in Russian in 2003, on the 100th anniversary of Kolmogorov’s birth, as part of the opus Kolmogorov, a three-volume collection of articles about him. The following are translations of a few selected records from Kolmogorov’s diary (Volume 3, pages 27, 28, 36, 95). Sunday, 1 August 1943. New Moon 6:30 am. It is a little misty and yet a sunny morning. Pusya and Oleg have gone swimming while I stay home, not being very well (though my condition is improving). Anya has to work today, so she will not come. I’m feeling annoyed and ill at ease because of that (for the second time our Sunday “readings” will be conducted without Anya). Why begin this notebook now? There are two reasonable explanations: 1) I have long been attracted to the idea of a diary as a disciplining force. To write down what has been done and what changes are needed in one’s life and to control their implementation is by no means a new idea, but it’s equally relevant whether one is 16 or 40 years old.

Randomness and Computation

Randomness and Computation. Rod Downey, School of Mathematics and Statistics, Victoria University, PO Box 600, Wellington, New Zealand. Abstract: This article examines work seeking to understand randomness using computational tools. The focus here will be how these studies interact with classical mathematics, and progress in the recent decade. A few representative and easier proofs are given, but mainly we will refer to the literature. The article could be seen as a companion to, as well as focusing on developments since, the paper “Calibrating Randomness” from 2006, which focused more on how randomness calibrations correlated to computational ones. 1 Introduction: The great Russian mathematician Andrey Kolmogorov appears several times in this paper. First, around 1930, Kolmogorov and others founded the theory of probability, basing it on measure theory. Kolmogorov’s foundation does not seek to give any meaning to the notion of an individual object, such as a single real number or binary string, being random, but rather studies the expected values of random variables. As we learn at school, all strings of length n have the same probability of 2^{-n} for a fair coin. A set consisting of a single real has probability zero. Thus there is no meaning we can ascribe to randomness of a single object. Yet we have a persistent intuition that certain strings of coin tosses are less random than others. The goal of the theory of algorithmic randomness is to give meaning to randomness content for individual objects. Quite aside from the intrinsic mathematical interest, the utility of this theory is that using such objects instead of distributions might be significantly simpler and might give alternative insight into what randomness might mean in mathematics, and perhaps in nature.
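The point made in the excerpt above, that a fair coin assigns every binary string of length n the same probability 2^{-n}, can be checked with a few lines of Python. This is only an illustrative sketch: the helper name `fair_coin_probability` and the two sample strings are assumptions introduced here, not taken from the paper.

```python
from fractions import Fraction


def fair_coin_probability(tosses: str) -> Fraction:
    """Exact probability of one specific sequence of fair coin tosses.

    `tosses` is a string over the alphabet {"0", "1"}; each symbol has
    probability 1/2, so a string of length n has probability 2**-n.
    """
    if any(symbol not in "01" for symbol in tosses):
        raise ValueError("expected a string of 0s and 1s")
    return Fraction(1, 2 ** len(tosses))


# A very regular string and an irregular-looking one (both hypothetical samples).
regular = "0000000000"
irregular = "0110100110"

# Classical probability cannot tell them apart: both print 1/1024.
print(fair_coin_probability(regular))
print(fair_coin_probability(irregular))
```

Both calls return the same value, which is why the classical framework alone cannot express the intuition that the first string looks less random than the second.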

Fundamental Theorems in Mathematics

SOME FUNDAMENTAL THEOREMS IN MATHEMATICS. OLIVER KNILL. Abstract. An expository hitchhiker's guide to some theorems in mathematics. Criteria for the current list of 243 theorems are whether the result can be formulated elegantly, whether it is beautiful or useful and whether it could serve as a guide [6] without leading to panic. The order is not a ranking but ordered along a time-line when things were written down. Since [556] stated “a mathematical theorem only becomes beautiful if presented as a crown jewel within a context” we try sometimes to give some context. Of course, any such list of theorems is a matter of personal preferences, taste and limitations. The number of theorems is arbitrary; the initial obvious goal was 42, but that number was eventually surpassed, as it is hard to stop once started. As a compensation, there are 42 “tweetable” theorems with included proofs. More comments on the choice of the theorems are included in an epilogue. For literature on general mathematics, see [193, 189, 29, 235, 254, 619, 412, 138], for history [217, 625, 376, 73, 46, 208, 379, 365, 690, 113, 618, 79, 259, 341], for popular, beautiful or elegant things [12, 529, 201, 182, 17, 672, 673, 44, 204, 190, 245, 446, 616, 303, 201, 2, 127, 146, 128, 502, 261, 172]. For comprehensive overviews in large parts of mathematics, [74, 165, 166, 51, 593] or predictions on developments [47]. For reflections about mathematics in general [145, 455, 45, 306, 439, 99, 561]. Encyclopedic source examples are [188, 705, 670, 102, 192, 152, 221, 191, 111, 635].

A Modern History of Probability Theory

A Modern History of Probability Theory. Kevin H. Knuth, Depts. of Physics and Informatics, University at Albany (SUNY), Albany NY USA. 4/29/2016 Knuth - Bayes Forum. A Long History: The History of Probability Theory, Anthony J.M. Garrett, MaxEnt 1997, pp. 223-238. Hájek, Alan, "Interpretations of Probability", The Stanford Encyclopedia of Philosophy (Winter 2012 Edition), Edward N. Zalta (ed.), URL = <http://plato.stanford.edu/archives/win2012/entries/probability-interpret/>. “… la théorie des probabilités n'est, au fond, que le bon sens réduit au calcul …” (“… the theory of probabilities is basically just common sense reduced to calculation …”), Pierre Simon de Laplace, Théorie Analytique des Probabilités. Taken from Harold Jeffreys, “Theory of Probability”: The terms certain and probable describe the various degrees of rational belief about a proposition which different amounts of knowledge authorise us to entertain. All propositions are true or false, but the knowledge we have of them depends on our circumstances; and while it is often convenient to speak of propositions as certain or probable, this expresses strictly a relationship in which they stand to a corpus of knowledge, actual or hypothetical, and not a characteristic of the propositions in themselves. A proposition is capable at the same time of varying degrees of this relationship, depending upon the knowledge to which it is related, so that it is without significance to call a proposition probable unless we specify the knowledge to which we are relating it. (John Maynard Keynes)

Biography of N. N. Luzin

BIOGRAPHY OF N. N. LUZIN (1883-1950). http://theor.jinr.ru/~kuzemsky/Luzinbio.html Born December 09, 1883, Irkutsk, Russia. Died January 28, 1950, Moscow, Russia. Biographic Data of N. N. Luzin: Nikolai Nikolaevich Luzin (also spelled Lusin; Russian: Николай Николаевич Лузин) was a Soviet/Russian mathematician known for his work in descriptive set theory and aspects of mathematical analysis with strong connections to point-set topology. He was the co-founder of "Luzitania" (together with professor Dimitrii Egorov), a close group of young Moscow mathematicians of the first half of the 1920s. This group consisted of highly talented and enthusiastic members who later formed the core of the famous Moscow school of mathematics. They adopted his set-theoretic orientation, and went on to apply it in other areas of mathematics. Luzin started studying mathematics in 1901 at Moscow University, where his advisor was professor Dimitrii Egorov (1869-1931). Professor Dimitrii Fedorovich Egorov was a great scientist and talented teacher; in addition he was a person of very high moral principles. He was a Russian and Soviet mathematician known for significant contributions to the areas of differential geometry and mathematical analysis. Egorov was a devoted and openly practicing member of the Russian Orthodox Church and an active parish worker. This activity was the reason for his conflicts with Soviet authorities after 1917 and finally led to his arrest and exile to Kazan (1930), where he died of cancer. From 1910 to 1914 Luzin studied at Göttingen, where he was influenced by Edmund Landau. He then returned to Moscow and received his Ph.D.

Randomized Algorithm

Randomized Algorithm, Tutorial I, Sep. 30 2008. Your TA: Abner C.Y. Huang. Think about Randomness: Determinism vs. Randomness. Randomness in Real World. Joseph Louis Lagrange's deterministic universe: he offered the most comprehensive treatment of classical mechanics since Newton and formed a basis for the development of mathematical physics in the nineteenth century. Werner Heisenberg: Heisenberg's Uncertainty Principle. Albert Einstein: God doesn't play dice with the universe. Randomness in Mathematics: Pascal was a mathematician of the first order. He helped create a major new area of research, probability theory, with Pierre de Fermat. Pascal's triangle. Source of probability theory: gambling. John Maynard Keynes: The long run is a misleading guide to current affairs. In the long run we are all dead. He received a Ph.D. degree in mathematics at King’s College, Cambridge. His thesis was about logic from a probabilistic viewpoint. Keynes published his Treatise on Probability in 1921, a notable contribution to the philosophical and mathematical underpinnings of probability theory, championing the important view that probabilities were no more or less than truth values intermediate between simple truth and falsity. Epistemology: Bayesians and frequentists interpret the idea of probability differently. Frequentist: an event's probability is the limit of its relative frequency in a large number of trials, i.e., parameters are constants. Bayesian: 'probability' should be interpreted as degree of believability

Issue 93 ISSN 1027-488X

NEWSLETTER OF THE EUROPEAN MATHEMATICAL SOCIETY. Interview: Yakov Sinai. Features: Mathematical Billiards and Chaos; About ABC. Societies: The Catalan Mathematical Society. Photograph taken by Håkon Mosvold Larsen/NTB scanpix. September 2014, Issue 93, ISSN 1027-488X. European Mathematical Society. American Mathematical Society. HILBERT’S FIFTH PROBLEM AND RELATED TOPICS, Terence Tao, University of California. In the fifth of his famous list of 23 problems, Hilbert asked if every topological group which was locally Euclidean was in fact a Lie group. Through the work of Gleason, Montgomery-Zippin, Yamabe, and others, this question was solved affirmatively. Subsequently, this structure theory was used to prove Gromov’s theorem on groups of polynomial growth, and more recently in the work of Hrushovski, Breuillard, Green, and the author on the structure of approximate groups. In this graduate text, all of this material is presented in a unified manner. Graduate Studies in Mathematics, Vol. 153, Aug 2014, 338pp, 9781470415648, Hardback, €63.00. MATHEMATICAL METHODS IN QUANTUM MECHANICS: With Applications to Schrödinger Operators, Second Edition, Gerald Teschl, University of Vienna. Quantum mechanics and the theory of operators on Hilbert space have been deeply linked since their beginnings in the early twentieth century. States of a quantum system correspond to certain elements of the configuration space and observables correspond to certain operators on the space. This book is a brief, but self-contained, introduction to the mathematical methods of quantum mechanics, with a view towards applications to Schrödinger operators. Graduate Studies in Mathematics, Vol. 157, Nov 2014, 356pp, 9781470417048, Hardback, €61.00. MATHEMATICAL UNDERSTANDING OF NATURE: Essays on Amazing Physical Phenomena and their Understanding by Mathematicians, V.

Prefix-Free Kolmogorov Complexity

Information Theory and Statistics, Lecture 7: Prefix-free Kolmogorov complexity. Łukasz Dębowski, Ph. D. Programme 2013/2014. Sections: Prefix-free complexity; Properties of Kolmogorov complexity. Project co-financed by the European Union within the framework of the European Social Fund. Towards algorithmic information theory: In Shannon’s information theory, the amount of information carried by a random variable depends on the ascribed probability distribution. Andrey Kolmogorov (1903–1987), the founding father of modern probability theory, remarked that it is extremely hard to imagine a reasonable probability distribution for utterances in natural language but we can still estimate their information content. For that reason Kolmogorov proposed to define the information content of a particular string in a purely algorithmic way. A similar approach had been proposed a year earlier by Ray Solomonoff (1926–2009), who sought optimal inductive inference. Kolmogorov complexity as an analogue of Shannon entropy: The fundamental object of algorithmic information theory is the Kolmogorov complexity of a string, an analogue of Shannon entropy. It is defined as the length of the shortest program for a Turing machine such that the machine prints out the string and halts. The Turing machine itself is defined as a deterministic finite state automaton which moves along one or more infinite tapes filled with symbols from a fixed finite alphabet and which may read and write individual symbols. The concrete value of Kolmogorov complexity depends on the Turing machine used, but many properties of Kolmogorov complexity are universal.
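The definition in the excerpt above (length of the shortest program that prints the string) is not computable in general, but a rough feel for it can be had from a general-purpose compressor: the compressed length of a string is a computable upper bound on its description length, up to a constant for the decompressor. The Python sketch below uses `zlib` purely as a stand-in; the function name `compressed_length` and the sample inputs are assumptions made for illustration and do not come from the lecture.

```python
import random
import zlib


def compressed_length(data: bytes) -> int:
    """Length in bytes of the zlib-compressed form of `data`.

    This is only a crude, computable upper-bound proxy for Kolmogorov
    complexity, which itself cannot be computed.
    """
    return len(zlib.compress(data, 9))


# A highly regular string: "01" repeated 5000 times (10000 bytes).
regular = b"01" * 5000

# A pseudo-random string of the same length, seeded for reproducibility.
rng = random.Random(0)
random_like = bytes(rng.getrandbits(8) for _ in range(10000))

print(len(regular), compressed_length(regular))
print(len(random_like), compressed_length(random_like))
```

The regular string shrinks to a small fraction of its length, while the pseudo-random one barely compresses at all. Note that the pseudo-random bytes are produced by a short program with a fixed seed, so their true Kolmogorov complexity is small; the compressor simply fails to find that description, which is one reason compressed length is only an upper-bound heuristic.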

Jürgen K. Moser 1928–1999

Jürgen K. Moser 1928–1999. A Biographical Memoir by Paul H. Rabinowitz. ©2015 National Academy of Sciences. Any opinions expressed in this memoir are those of the author and do not necessarily reflect the views of the National Academy of Sciences. JÜRGEN KURT MOSER, July 4, 1928–December 17, 1999. Elected to the NAS, 1971. By Paul H. Rabinowitz. After the death of Jürgen Moser, one of the world’s great mathematicians, the American Mathematical Society published a memorial article about his research. It is well worth beginning here with a lightly edited version of the brief introductory remarks I wrote then: One of those rare people with a gift for seeing mathematics as a whole, Moser was very much aware of its connections to other branches of science. His research had a profound effect on mathematics as well as on astronomy and physics. He made deep and important contributions to an extremely broad range of questions in dynamical systems and celestial mechanics, partial differential equations, nonlinear functional analysis, differential and complex geometry, and the calculus of variations. To those who knew him, Moser exemplified both a creative scientist and a human being. His standards were high and his taste impeccable. His papers were elegantly written. Not merely focused on his own pathbreaking research, he worked successfully for the well-being of mathematics in many ways. He stimulated several generations of younger people by his penetrating insights into their problems, scientific and otherwise, and his warm and wise counsel, concern, and encouragement. My own experience as his student was typical: then and afterwards I was made to feel like a member of his family.

A Brief Overview of Probability Theory in Data Science by Geert

A brief overview of probability theory in data science. Geert Verdoolaege, Department of Applied Physics, Ghent University, Ghent, Belgium, and Laboratory for Plasma Physics, Royal Military Academy (LPP–ERM/KMS), Brussels, Belgium. Tutorial, 3rd IAEA Technical Meeting on Fusion Data Processing, Validation and Analysis, 27-05-2019. Overview: 1 Origins of probability; 2 Frequentist methods and statistics; 3 Principles of Bayesian probability theory; 4 Monte Carlo computational methods; 5 Applications (classification, regression analysis); 6 Conclusions and references. Early history of probability: earliest traces in Western civilization (Jewish writings, Aristotle); notion of probability in law, based on evidence; usage in finance; usage and demonstration in gambling. Middle Ages: the world is knowable, but there is uncertainty due to human ignorance; William of Ockham: Ockham’s razor; probabilis: a supposedly ‘provable’ opinion; counting of authorities; later: degree of truth, a scale. Quantification: law, faith → Bayesian notion; gaming → frequentist notion. Quantification: 17th century: Pascal, Fermat, Huygens; comparative testing of hypotheses; population statistics; 1713: Ars Conjectandi by Jacob Bernoulli (weak law of large numbers, principle of indifference); De Moivre (1718): The Doctrine of Chances. Bayes and Laplace: paper by Thomas Bayes (1763): inversion

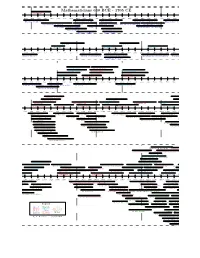

Mathematicians Timeline

Timeline chart of mathematicians (horizontal axis: 1820 to 2000, in 20-year steps). Names shown: Rikitarō Fujisawa, Otto Hesse, Kunihiko Kodaira, Friedrich Schottky, Viktor Bunyakovsky, Pavel Aleksandrov, Hermann Schwarz, Mikhail Ostrogradsky, Alexey Krylov, Heinrich Martin Weber, Nikolai Lobachevsky, David Hilbert, Paul Bachmann, Felix Klein, Rudolf Lipschitz, Gottlob Frege, G Perelman, Elwin Bruno Christoffel, Max Noether, Sergei Novikov, Heinrich Eduard Heine, Paul Bernays, Richard Dedekind, Yuri Manin, Carl Borchardt, Ivan Lappo-Danilevskii, Georg F B Riemann, Emmy Noether, Vladimir Arnold, Sergey Bernstein, Gotthold Eisenstein, Edmund Landau, Issai Schur, Leopold Kronecker, Paul Halmos, Hermann Minkowski, Hermann von Helmholtz, Paul Erdős, Vladimir Steklov, Karl Weierstrass, Kurt Gödel, Andrei Markov, Ernst Eduard Kummer, Alexander Grothendieck, Sofia Kovalevskaya, Andrey Kolmogorov, Moritz Stern, Friedrich Hirzebruch, Georg Cantor, Carl Goldschmidt, Ferdinand von Lindemann, Pafnuti Chebyshev, Oscar Zariski, Carl Gustav Jacobi, F Georg Frobenius, Peter Lax, Solomon Lefschetz, Julius Plücker, Hermann Weyl, Karl von Staudt, Eugene Wigner, Martin Ohm, Emil Artin, August Ferdinand