Intel® Technology Journal | Volume 18, Issue 4, 2014

Total pages: 16

File type: PDF, Size: 1020 KB


Recommended publications


2016 8th International Conference on Cyber Conflict: Cyber Power

2016 8th International Conference on Cyber Conflict: Cyber Power N.Pissanidis, H.Rõigas, M.Veenendaal (Eds.) 31 MAY - 03 JUNE 2016, TALLINN, ESTONIA 2016 8TH INTERNATIONAL CONFERENCE ON CYBER CONFLICT: CYBER POWER Copyright © 2016 by NATO CCD COE Publications. All rights reserved. IEEE Catalog Number: CFP1626N-PRT ISBN (print): 978-9949-9544-8-3 ISBN (pdf): 978-9949-9544-9-0 COPYRIGHT AND REPRINT PERMISSIONS No part of this publication may be reprinted, reproduced, stored in a retrieval system or transmitted in any form or by any means, electronic, mechanical, photocopying, recording or otherwise, without the prior written permission of the NATO Cooperative Cyber Defence Centre of Excellence ([email protected]). This restriction does not apply to making digital or hard copies of this publication for internal use within NATO, and for personal or educational use when for non-profit or non-commercial purposes, providing that copies bear this notice and a full citation on the first page as follows: [Article author(s)], [full article title] 2016 8th International Conference on Cyber Conflict: Cyber Power N.Pissanidis, H.Rõigas, M.Veenendaal (Eds.) 2016 © NATO CCD COE Publications PRINTED COPIES OF THIS PUBLICATION ARE AVAILABLE FROM: NATO CCD COE Publications Filtri tee 12, 10132 Tallinn, Estonia Phone: +372 717 6800 Fax: +372 717 6308 E-mail: [email protected] Web: www.ccdcoe.org Head of publishing: Jaanika Rannu Layout: Jaakko Matsalu LEGAL NOTICE: This publication contains opinions of the respective authors only. They do not necessarily reflect the policy or the opinion of NATO CCD COE, NATO, or any agency or any government. -

Introducing Linux on IBM Z Systems IT Simplicity with an Enterprise Grade Linux Platform

Introducing Linux on IBM z Systems IT simplicity with an enterprise grade Linux platform Wilhelm Mild IBM Executive IT Architect for Mobile, z Systems and Linux © 2016 IBM Corporation IBM Germany What is Linux? . Linux is an operating system – Operating systems are tools which enable computers to function as multi-user, multitasking, and multiprocessing servers. – Linux is typically delivered in a Distribution with many useful tools and Open Source components. Linux is hardware agnostic by design – Linux runs on multiple hardware architectures which means Linux skills are platform independent. Linux is modular and built to coexist with other operating systems – Businesses are using Linux today. More and more businesses proceed with an evolutionary solution strategy based on Linux. 2 © 2016 IBM Corporation What is IBM z Systems ? . IBM z Systems is the family name used by IBM for its mainframe computers – The z Systems families were named for their availability – z stands for zero downtime. The systems are built with spare components capable of hot failovers to ensure continuous operations. IBM z Systems paradigm – The IBM z Systems family maintains full backward compatibility. In effect, current systems are the direct, lineal descendants of System/360, built in 1964, and the System/370 from the 1970s. Many applications written for these systems can still run unmodified on the newest z Systems over five decades later. IBM z Systems variety of Operating Systems – There are different traditional Operating Systems that run on z Systems like z/OS, z/VSE or TPF. With z/VM IBM delivers a mature Hypervisor to virtualize the operating systems. -

Online Banking Customer Awareness and Education Program

Online Banking Customer Awareness and Education Program Online Banking Customer Awareness and Education Program Rev. 6/21 Table of Contents Online Banking Customer Awareness and Education Program Overview ............................................................... 1 Unsolicited Contact .................................................................................................................................................................... 1 Luther Burbank Savings Contact Information ................................................................................................................... 1 Online Banking Security Certification .................................................................................................................................. 1 Email Security............................................................................................................................................................................... 2 Malicious Emails ........................................................................................................................................................................ 2 Email Attachments .................................................................................................................................................................... 2 Verify Emails .............................................................................................................................................................................. 2 Strong and Complex -

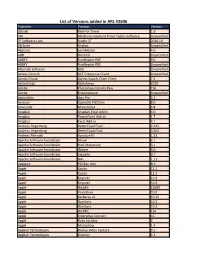

List of Versions Added in ARL #2606

List of Versions added in ARL #2606 Publisher Product Version 3bitlab Red Hot Timer 1.9 3M Medicare Inpatient Pricer Tables Software Unspecified 3T Software Labs Studio 3T 2020.10 AB Sciex Analyst Unspecified Abacom LochMaster 4.0 ABB WinCCU Unspecified ABBYY FineReader PDF 9.0 ABBYY FineReader PDF Unspecified Absolute Software DDS Unspecified Access Control ACT Enterprise Client Unspecified Access Group Access Supply Chain Client 7.2 ActiveCrypt DbDefence 2020 Adobe Photoshop Camera Raw CS6 Adobe Dreamweaver Unspecified algoriddim djay Pro 2.2 Amazon OpenJDK Platform 8.0 Anaconda Miniconda3 3.8 Anaplan Anaplan Excel Addin 4.0 Anaplan PowerPoint Add-in 1.7 Anaplan Excel Add-in 4.1 Andreas Hegenberg BetterTouchTool 2.425 Andreas Hegenberg BetterTouchTool 3.505 Andreas Pehnack Synalyze It! 1.23 Apache Software Foundation Hbase 2.1 Apache Software Foundation Hive Metastore 3.1 Apache Software Foundation JMeter 3.0 Apache Software Foundation zeppelin 0.7 Apache Software Foundation NiFi 1.11 Applanix POSPac UAV 8.5 Apple Xcode 12.1 Apple Xcode 12.2 Apple Keynote 10.2 Apple Keynote 10.3 Apple WebKit 15609 Apple VoiceOver 10.0 Apple Kerberos v5 10.15 Apple Numbers 10.2 Apple Numbers 10.3 Apple WebKit 156 Apple Enterprise Connect 16 Apple Ruby for Mac 2 Apple Monodraw 1.4 Applian Technologies Replay Video Capture 9.1 Applian Technologies Director 4.3 Applied Systems WealthTrack 10.0 Aranda Software Asset Management Unspecified ArcSoft WD Backup Unspecified ASG Technologies ASG-Zena Platform Agent 4.1 Aspose Aspose.Words for Reporting Services -

Introduction to Managing Mobile Devices Using Linux on System Z

Introduction to Managing Mobile Devices using Linux on System z SHARE Pittsburgh – Session 15692 Romney White ([email protected]) System z Architecture and Technology © 2014 IBM Corporation Mobile devices are 80% of devices sold to access the Internet Worldwide Shipment of Internet Access Devices 2013 2017 PC (Desktop & Notebook) PC (Ultrabook) Tablet Phone Worldwide Devices Shipments by Segment (Thousands of Units) Device Type 2012 2013 2014 2017 PC (Desk-Based and Notebook) 341,263 315,229 302,315 271,612 PC (Ultrabooks) 9,822 23,592 38,687 96,350 Tablet 116,113 197,202 265,731 467,951 Mobile Phone 1,746,176 1,875,774 1,949,722 2,128,871 Total 2,213,373 2,411,796 2,556,455 2,964,783 2 Source: Gartner (April 2013) © 2014 IBM Corporation Mobile Internet users will surpass PC internet users by 2015 The number of people accessing the Internet from smartphones, tablets and other mobile devices will surpass the number of users connecting from a home or office computer by 2015, according to a September 2013 study by market analyst firm IDC. PC is the new Legacy! 3 © 2014 IBM Corporation Five mobile trends with significant implications for the enterprise Mobile enables the Mobile is primary Internet of Things Mobile is primary 91% of mobile users keep Global Machine-to-machine 91% of mobile users keep their device within arm’s connections will increase their device within arm’s reach 100% of the time from 2 billion in 2011 to 18 reach 100% of the time billion at the end of 2022 Mobile must create a continuous brand Insights from mobile experience -

Software Withdrawal and Service Discontinuance: IBM Middleware, IBM Security, IBM Analytics, IBM Storage Software, and IBM Z

IBM United States Withdrawal Announcement 916-117, dated September 13, 2016 Software withdrawal and service discontinuance: IBM Middleware, IBM Security, IBM Analytics, IBM Storage Software, and IBM z Systems select products - Some replacements available Table of contents 1 Overview 107 Replacement program information 10 Withdrawn programs 210 Corrections Overview Effective on the dates shown, IBM(R) will withdraw support for the following program's VRM licensed under the IBM International Program License Agreement: VRM (V3.2.1) Note: V= All means all versions Note: V#.x means all releases of the version # listed Note: V#.#.x means all mods of the version release # listed For Advanced Administration System (AAS) Systems products Program number Program release VRM Withdrawal from name support date 5608-W07 IBM Tivoli(R) 3.2.x September 30, Storage 2017 (See Note FlashCopy(R) SUPT below) Manager For Passport Advantage(R) (PPA) On Premises products IBM Analytics products Program number Program release VRM Withdrawal from name support date 5639-I80 IBM Content 2.3.x September 30, Manager 2018 ImagePlus(R) Workstation program 5722-VI1 IBM DB2(R) 5.3.x September 30, Content Manager 2018 for iSeries 5724-B35 IBM InfoSphere(R) 5.5.x September 30, Optim(TM) 2016 Application Repository Analyzer 5724-B35 IBM InfoSphere 6.x September 30, Optim Application 2016 Repository Analyzer IBM United States Withdrawal Announcement 916-117 IBM is a registered trademark of International Business Machines Corporation 1 Program number Program release VRM Withdrawal -

Boa Motion to Dismiss

Case 21-10036-CSS Doc 211-4 Filed 02/15/21 Page 425 of 891 Disposition of the Stapled Securities Gain on sale of Stapled Securities by a Non-U.S. Stapled Securityholder will not be subject to U.S. federal income taxation unless (i) the Non-U.S. Stapled Securityholder’s investment in the Stapled Securities is effectively connected with its conduct of a trade or business in the United States (and, if provided by an applicable income tax treaty, is attributable to a permanent establishment or fixed base the Non-U.S. Stapled Securityholder maintains in the United States) and a properly completed Form W-8ECI has not been provided, (ii) the Non-U.S. Stapled Securityholder is present in the United States for 183 days or more in the taxable year of the sale and other specified conditions are met, or (iii) the Non-U.S. Stapled Securityholder is subject to U.S. federal income tax pursuant to the provisions of the U.S. tax law applicable to U.S. expatriates. If gain on the sale of Stapled Securities would be subject to U.S. federal income taxation, the Stapled Securityholder would generally recognise any gain or loss equal to the difference between the amount realised and the Stapled Securityholder’s adjusted basis in its Stapled Securities that are sold or exchanged. This gain or loss would be capital gain or loss, and would be long-term capital gain or loss if the Stapled Securityholder’s holding period in its Stapled Securities exceeds one year. In addition, a corporate Non-U.S. -

IBM® Security Trusteer Rapport™- Extension Installation

Treasury & Payment Solutions Quick Reference Guide IBM® Security Trusteer Rapport™- Extension Installation For users accessing Web-Link with Google Chrome or Mozilla Firefox, Rapport is also available to provide the same browser protection as is provided for devices using Internet Explorer. This requires that a browser extension be enabled. A browser extension is a plug-in software tool that extends the functionality of your web browser without directly affecting viewable content of a web page (for example, by adding a browser toolbar). Google Chrome Extension - How to Install: To install the extension from the Rapport console: 1. Open the IBM Security Trusteer Rapport Console by clicking Start>All Programs>Trusteer Endpoint Protection>Trusteer Endpoint Protection Console. 2. In the Product Settings area, next to ‘Chrome extension’, click install. Note: If Google Chrome is not set as your default browser, the link will open in whichever browser is set as default (e.g., Internet Explorer, as in the image below). 3. Copy the URL from the address bar below and paste it into a Chrome browser to continue: https://chrome.google.com/ webstore/detail/rapport/bbjllphbppobebmjpjcijfbakobcheof 4. Click +ADD TO CHROME to add the extension to your browser: Tip: If the Trusteer Rapport Console does not lead you directly to the Chrome home page, you can: 1. Click the menu icon “ ” at the top right of the Chrome browser window, choose “Tools” and choose “Extensions” to open a new “Options” tab. 1 Quick Reference Guide IBM Security Trusteer Rapport™ -Extension Installation 2. Select the Get more extensions link at the bottom of the page. -

Service Description IBM Trusteer Fraud Protection This Service Description Describes the Cloud Service IBM Provides to Client

Service Description IBM Trusteer Fraud Protection This Service Description describes the Cloud Service IBM provides to Client. Client means the contracting party and its authorized users and recipients of the Cloud Service. The applicable Quotation and Proof of Entitlement (PoE) are provided as separate Transaction Documents. 1. Cloud Service The following Cloud Services are covered by this Service Description: Pinpoint Assure Cloud Services: ● IBM Trusteer Pinpoint Assure ● IBM Trusteer Pinpoint Assure Application ● IBM Trusteer Mobile Carrier Intelligence Rapport Cloud Services: ● IBM Trusteer Rapport for Business Premium Support ● IBM Trusteer Rapport for Retail Premium Support ● IBM Trusteer Rapport II for Business ● IBM Trusteer Rapport II for Retail ● IBM Trusteer Rapport Fraud Feeds for Business ● IBM Trusteer Rapport Fraud Feeds for Business Premium Support ● IBM Trusteer Rapport Fraud Feeds for Retail ● IBM Trusteer Rapport Fraud Feeds for Retail Premium Support ● IBM Trusteer Rapport Phishing Protection for Business ● IBM Trusteer Rapport Phishing Protection for Business Premium Support ● IBM Trusteer Rapport Phishing Protection for Retail ● IBM Trusteer Rapport Phishing Protection for Retail Premium Support ● IBM Trusteer Rapport Mandatory Service for Business ● IBM Trusteer Rapport Mandatory Service for Retail ● IBM Trusteer Rapport Additional Applications for Retail ● IBM Trusteer Rapport Additional Applications for Business ● IBM Trusteer Rapport Large Redeployment ● IBM Trusteer Rapport Small Redeployment Pinpoint Cloud -

Meeting FFIEC Guidance and Cutting Costs with Automated Fraud Prevention White Paper Table of Contents

Meeting FFIEC Guidance and Cutting Costs with Automated Fraud Prevention White Paper Table of Contents Executive Summary 3 Key Requirements for Effective and Sustainable Online Banking Fraud Prevention Solution to Meet FFIEC requirements 3 Overview 4 #1 Layered security provides online banking fraud defense in depth 4 #2 Real-time, intelligence-based risk assessment 6 #3 Rapid adaptation to evolving threats 7 #4 Transaction Anomaly Prevention first 8 #5 Minimize end user impact: balance security, usability and interoperability 9 #6 Meet FFIEC requirements on time and on budget by minimizing deployment, management and operational cost 9 #7 Proven online banking fraud prevention partner 11 Summary 11 About Trusteer 12 Executive Summary The 2011 FFIEC supplement states that “controls implemented in conformance with the guidance several years ago [the 2005 original Guidance] have become less effective” and clarifies that “malware can compromise some of the most robust online” security controls. Unmistakably, what led to the release of the FFIEC supplement was the introduction of advanced malware that has created an increasingly hostile online banking environment. Sophisticated malware has become the primary attack tool used by online banking fraudsters to execute account takeover, steal credentials and personal information, and initiate fraudulent transactions. To address emerging threats the FFIEC requires organizations to continuously perform “risk assessments as new information becomes available”, “adjust control mechanisms as appropriate in response to changing threats” and “implement a layered approach to security”. Consequently, financial organizations need to select solutions that are able to identify emerging threats, address their impact and apply layered security that can quickly adapt to the ever changing threat landscape. -

Intel Edge Computing Ecosystem Portfolio E-Booklet

Intel Edge Computing Ecosystem Portfolio e-Booklet Introducing a portfolio of commercial grade products, and ready to scale offerings that are optimized by Intel® Distribution of OpenVINO™ toolkit from Intel® Edge Computing ecosystem partners 2021 February Release Legal notices and disclaimers Intel technologies’ features and benefits depend on system configuration and may require enabled hardware, software or service activation. Performance varies depending on system configuration. No computer system can be absolutely secure. Check with your system manufacturer to learn more. Cost reduction scenarios described are intended as examples of how a given Intel-based product, in the specified circumstances and configurations, may affect future costs and provide cost savings. Circumstances will vary. Intel does not guarantee any costs or cost reduction. Other names and brands may be claimed as the property of others. Any third-party information referenced on this document is provided for information only. Intel does not endorse any specific third-party product or entity mentioned on this document. Intel, the Intel Logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries in the U.S. and/or other countries. Intel Statement on Product Usage Intel is committed to respecting human rights and avoiding complicity in human rights abuses. See Intel’s Global Human Rights Principles. Intel’s products and software are intended only to be used in applications that do not cause or contribute to a violation of an internationally recognized -

Intel® Technology Journal | Volume 18, Issue 2, 2014

Intel® Technology Journal | Volume 18, Issue 2, 2014 Publisher Managing Editor Content Architect Cory Cox Stuart Douglas Paul Cohen Program Manager Technical Editor Technical Illustrators Paul Cohen David Clark MPS Limited Technical and Strategic Reviewers Bruce J Beare Tracey Erway Michael Richmond Judi A Goldstein Kira C Boyko Miao Wei Paul De Carvalho Ryan Tabrah Prakash Iyer Premanand Sakarda Ticky Thakkar Shelby E Hedrick Intel Technology Journal Copyright © 2014 Intel Corporation. All rights reserved. ISBN 978-1-934053-63-8, ISSN 1535-864X Intel Technology Journal Volume 18, Issue 2 No part of this publication may be reproduced, stored in a retrieval system or transmitted in any form or by any means, electronic, mechanical, photocopying, recording, scanning or otherwise, except as permitted under Sections 107 or 108 of the 1976 United States Copyright Act, without either the prior written permission of the Publisher, or authorization through payment of the appropriate per-copy fee to the Copyright Clearance Center, 222 Rosewood Drive, Danvers, MA 01923, (978) 750-8400, fax (978) 750-4744. Requests to the Publisher for permission should be addressed to the Publisher, Intel Press, Intel Corporation, 2111 NE 25th Avenue, JF3-330, Hillsboro, OR 97124-5961. E-Mail: [email protected]. This publication is designed to provide accurate and authoritative information in regard to the subject matter covered. It is sold with the understanding that the publisher is not engaged in professional services. If professional advice or other expert assistance is required, the services of a competent professional person should be sought.