New Technologies and Warfare

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Pressdebatten Om Vietnam Sommaren 1965

The Press Debate on Vietnam in the Summer of 1965. Foreword. In the summer of 1965 a debate about the Vietnam War flared up in the Swedish press. Many writers, editorial writers, and politicians took part, most of them critical of American policy in Vietnam. The debate played an important role in shaping opinion: the uncritically pro-American stance that had dominated Swedish opinion until then took a real blow. Criticism of the USA would grow stronger and deeper in the years that followed, not least because the USA committed ever greater resources to escalating its presence and its warfare in Indochina. The press debate of summer 1965 served as a wake-up call. It opened many people's eyes and helped them free themselves from the almost naive pro-Americanism that had dominated Swedish opinion since the Second World War. Below we have collected the most important contributions to the debate from the summer of 1965. Martin Fahlgren (August 2007)
Contents:
Lars Forssell: Brev till en svensk tidning
Amerika och vi (editorial in Dagens Nyheter, 6/6 1965)
Sven Lindqvist: ”Förklara, förstå och kanske hoppas”
Folke Isaksson: ”Vi prisar det svenska i människan”
Olof Lagercrantz: Världen som en enhet -

Officials Say Flynn Discussed Sanctions

Officials say Flynn discussed sanctions The Washington Post February 10, 2017 Friday, Met 2 Edition Copyright 2017 The Washington Post All Rights Reserved Distribution: Every Zone Section: A-SECTION; Pg. A08 Length: 1971 words Byline: Greg Miller;Adam Entous;Ellen Nakashima Body Talks with Russia envoy said to have occurred before Trump took office National security adviser Michael Flynn privately discussed U.S. sanctions against Russia with that country's ambassador to the United States during the month before President Trump took office, contrary to public assertions by Trump officials, current and former U.S. officials said. Flynn's communications with Russian Ambassador Sergey Kislyak were interpreted by some senior U.S. officials as an inappropriate and potentially illegal signal to the Kremlin that it could expect a reprieve from sanctions that were being imposed by the Obama administration in late December to punish Russia for its alleged interference in the 2016 election. Flynn on Wednesday denied that he had discussed sanctions with Kislyak. Asked in an interview whether he had ever done so, he twice said, "No." On Thursday, Flynn, through his spokesman, backed away from the denial. The spokesman said Flynn "indicated that while he had no recollection of discussing sanctions, he couldn't be certain that the topic never came up." Officials said this week that the FBI is continuing to examine Flynn's communications with Kislyak. Several officials emphasized that while sanctions were discussed, they did not see evidence that Flynn had an intent to convey an explicit promise to take action after the inauguration. Flynn's contacts with the ambassador attracted attention within the Obama administration because of the timing. -

Managing the Human Weapon System: a Vision for an Air Force Human-Performance Doctrine

Calhoun: The NPS Institutional Archive, DSpace Repository. Faculty and Researchers Collection, 2009. Tvaryanas, A.P., Brown, L., and Miller, N.L. "Managing the Human Weapon System: A Vision for an Air Force Human-Performance Doctrine", Air & Space Power Journal, pp. 34-41, Summer 2009. http://hdl.handle.net/10945/36508 Downloaded from NPS Archive: Calhoun. In air combat, “the merge” occurs when opposing aircraft meet and pass each other. Then they usually “mix it up.” In a similar spirit, Air and Space Power Journal’s “Merge” articles present contending ideas. Readers are free to join the intellectual battlespace. Please send comments to [email protected] or [email protected]. Managing the Human Weapon System: A Vision for an Air Force Human-Performance Doctrine. LT COL ANTHONY P. TVARYANAS, USAF, MC, SFS; COL LEX BROWN, USAF, MC, SFS; NITA L. MILLER, PHD* The basic planning, development, organization and training of the Air Force must be well rounded, covering every modern means of waging air war. The Air Force doctrines likewise must be flexible at all times and entirely uninhibited by tradition. (Gen Henry H. “Hap” Arnold) IN A RECENT paper on America’s Air Force, Gen T. Michael Moseley asserted that we are at a strategic crossroads as a consequence of global dynamics and shifts in the character of future warfare; he also noted that “today’s confluence of global … special operations forces’ declaration that “humans are more important than hardware” in asymmetric warfare.2 Consistent with this view, in January 2004, the deputy secretary of defense directed the Joint Staff to “develop the next generation of . -

First Amended Complaint Exhibit 1 Donald J

Case 2:17-cv-00141-JLR Document 18-1 Filed 02/01/17 Page 1 of 3 First Amended Complaint Exhibit 1 Donald J. Trump Statement on Preventing Muslim Immigration | Donald J Trump for Pre... Page 1 of 2 DECEMBER 07, 2015 DONALD J. TRUMP STATEMENT ON PREVENTING MUSLIM IMMIGRATION (New York, NY) December 7th, 2015, -- Donald J. Trump is calling for a total and complete shutdown of Muslims entering the United States until our country's representatives can figure out what is going on. According to Pew Research, among others, there is great hatred towards Americans by large segments of the Muslim population. Most recently, a poll from the Center for Security Policy released data showing "25% of those polled agreed that violence against Americans here in the United States is justified as a part of the global jihad" and 51% of those polled, "agreed that Muslims in America should have the choice of being governed according to Shariah." Shariah authorizes such atrocities as murder against non-believers who won't convert, beheadings and more unthinkable acts that pose great harm to Americans, especially women. Mr. Trump stated, "Without looking at the various polling data, it is obvious to anybody the hatred is beyond comprehension. Where this hatred comes from and why we will have to determine. Until we are able to determine and understand this problem and the dangerous threat it poses, our country cannot be the victims of horrendous attacks by people that believe only in Jihad, and have no sense of reason or respect for human life. -

Boklista 1949–2019

Book list 1949–2019. Here is what our member who compiled the list says: ”This is a list of books that were published during my lifetime and that are on my and my husband's bookshelves at home. The publication years may not be entirely correct, but I have really tried to distinguish between year of publication, reissue, year of printing, and year of translation.”
Year: Author, Title
1949: Vilhelm Moberg, Utvandrarna; Per Wästberg, Pojke med såpbubblor
1950: Pär Lagerkvist, Barabbas
1951: Ivar Lo-Johansson, Analfabeten; Åke Löfgren, Historien om någon
1952: Ulla Isaksson, Kvinnohuset
1953: Nevil Shute, Livets väv; Astrid Lindgren, Kalle Blomkvist och Rasmus
1954: William Golding, Flugornas herre; Simone de Beauvoir, Mandarinerna
1955: Astrid Lindgren, Lillebror och Karlsson på taket
1956: Agnar Mykle, Sången om den röda rubinen; Siri Ahl, Två små skolkamrater
1957: Enid Blyton, Fem följer ett spår
1958: Yaşar Kemal, Låt tistlarna brinna; Åke Wassing, Dödgrävarens pojke; Leon Uris, Exodus
1959: Jan Fridegård, Svensk soldat
1960: Per Wästberg, På svarta listan; Gösta Gustaf-Jansson, Pärlemor
1961: John Åberg, Över förbjuden gräns
1962: Lars Görling, 491
1963: Dag Hammarskjöld, Vägmärken; Ann Smith, Två i stjärnan
1964: P.O. Enquist, Magnetisörens femte vinter
1965: Göran Sonnevi, ingrepp-modeller; Ernesto Cardenal, Förlora inte tålamodet
1966: Truman Capote, Med kallt blod
1967: Sven Lindqvist, Myten om Wu Tao-tzu; Gabriel García Márquez, Hundra år av ensamhet
1968: P.O. Enquist, Legionärerna; Vassilis Vassilikos, Z; Jannis Ritsos, Greklands folk: dikter
1969: Göran Sonnevi, Det gäller oss; Björn Håkansson, Bevisa vår demokrati
1970: Per Wästberg, Vattenslottet; Göran Sonnevi, Det måste gå
1971: Ylva Eggehorn, Ska vi dela
1972: Maja Ekelöf & Tony Rosendahl, Brev
1973: Lars Gustafsson, Yllet
1974: Kerstin Ekman, Häxringarna; Elsa Morante, Historien
1975: Alice Lyttkens, Fader okänd; Marie Cardinal, Orden som befriar
1976: Ulf Lundell, Jack
1977: Sara Lidman, Din tjänare hör; P.G . -

Planning For, Selecting, and Implementing Technology Solutions

A GUIDEBOOK for Planning for, Selecting, and Implementing Technology Solutions FIRST EDITION March 2010 This guide was prepared for the National Institute of Justice, U.S. Department of Justice, by the Weapons and Protective Systems Technologies Center at The Pennsylvania State University under Cooperative Agreement 2007-DE-BX-K009. It is intended to provide information useful to law enforcement and corrections agencies regarding technology planning, selection, and implementation. It is not proscriptive in nature, rather it serves as a resource for program development within the framework of existing departmental policies and procedures. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors/editors and do not necessarily reflect the views of the National Institute of Justice. They should not be construed as an official Department of Justice position, policy, or decision. This is an informational guidebook designed to provide an overview of less-lethal devices. Readers are cautioned that no particular technology is appropriate for all circumstances and that the information in this guidebook is provided solely to assist readers in independently evaluating their specific circumstances. The Pennsylvania State University does not advocate or warrant any particular technology or approach. The Pennsylvania State University extends no warranties or guarantees of any kind, either express or implied, regarding the guidebook, including but not limited to warranties of non-infringement, merchantability and fitness for a particular purpose. © 2010 The Pennsylvania State University GUIDEBOOK for LESS-LETHAL DEVICES Planning for, Selecting, and Implementing Technology Solutions FOREWORD The National Tactical Officers Association (NTOA) is the leading authority on contemporary tactical law enforcement information and training. -

Babushka Egg Concept Book #15 (Amended 1-1-2021)

Babushka Egg Concept Book #15 (amended 1-1-2021) www.apocalypse2008-2015.com Page 1 of 92 Basel Dome Window After September 2022 AD - Please read and print before the Web is gone -
Pearl # / Name / Pages
889 / Amended Conclusion of the Corona Virus Infection, Part 1,2,3,4 / 9
300 / The Resurrection Plan of Mankind, Part 1,2,3 / 23
270 / Why, How & When is the End of the 21st Century? / 49
242 / The Seven Mystery Thunders / 62
126 / The Population Curve According to the Bible / 76
285 / Seven Abomination Changing Christianity / 88-92
To my Relatives and Friends Recently many politicized TV stations announced an increase of a deadly Corona-Virus. Perhaps one should study the history of Mankind connected to Prophecy of the Torah-Bible (Rev. 20:12) ending much Life in nature caused by an atheistic hi-tech culture of our civilization. Like the Gospel mentioned five (5) foolish virgins to hear a voice behind a locked door: Truly, I say to you, I do not know you (Matt. 25:1-13), depart from me. (Matt. 25:41) Therefore you might be interested to pass on this information to a friend or pastor since our 21st Century Civilization was predicted to perish. Thus, a few Pearls were amended to reveal the hidden Truth of the Torah-Bible now exposed in Babushka Egg Concept Book #15 with forbidden knowledge forgotten in many Christian churches, synagogues & universities. -

2011-2012 PIPS Policy Brief Book

The Project on International Peace and Security The College of William and Mary POLICY BRIEFS 2011-2012 The Project on International Peace and Security (PIPS) at the College of William and Mary is a highly selective undergraduate think tank designed to bridge the gap between the academic and policy communities in the area of undergraduate education. The goal of PIPS is to demonstrate that elite students, working with faculty and members of the policy community, can make a meaningful contribution to policy debates. The briefs enclosed are a testament to that mission and the talent and creativity of William and Mary’s undergraduates. Amy Oakes, Director Dennis A. Smith, Director The Project on International Peace and Security The Institute for Theory and Practice of International Relations Department of Government The College of William and Mary P.O. Box 8795 Williamsburg, VA 23187-8795 757.221.5086 [email protected] © The Project on International Peace and Security (PIPS), 2012
TABLE OF CONTENTS
ALLISON BAER, “COMBATING RADICALISM IN PAKISTAN: EDUCATIONAL REFORM AND INFORMATION TECHNOLOGY.”
BENJAMIN BUCH AND KATHERINE MITCHELL, “THE ACTIVE DENIAL SYSTEM: OBSTACLES AND PROMISE.”
PETER KLICKER, “A NEW ‘FREEDOM’ FIGHTER: BUILDING ON THE T-X COMPETITION.” -

Dominant Land Forces for 21St Century Warfare

No. 73 SEPTEMBER 2009 Dominant Land Forces for 21st Century Warfare Edmund J. Degen A National Security Affairs Paper published on occasion by THE INSTITUTE OF LAND WARFARE ASSOCIATION OF THE UNITED STATES ARMY Arlington, Virginia Dominant Land Forces for 21st Century Warfare by Edmund J. Degen The Institute of Land Warfare ASSOCIATION OF THE UNITED STATES ARMY AN INSTITUTE OF LAND WARFARE PAPER The purpose of the Institute of Land Warfare is to extend the educational work of AUSA by sponsoring scholarly publications, to include books, monographs and essays on key defense issues, as well as workshops and symposia. A work selected for publication as a Land Warfare Paper represents research by the author which, in the opinion of ILW’s editorial board, will contribute to a better understanding of a particular defense or national security issue. Publication as an Institute of Land Warfare Paper does not indicate that the Association of the United States Army agrees with everything in the paper, but does suggest that the Association believes the paper will stimulate the thinking of AUSA members and others concerned about important defense issues. LAND WARFARE PAPER NO. 73, September 2009 Dominant Land Forces for 21st Century Warfare by Edmund J. Degen Colonel Edmund J. Degen recently completed the senior service college at the Joint Forces Staff College and moved to the Republic of Korea, where he served as the U.S. Forces Korea (USFK) J35, Chief of Future Operations. He is presently the Commander of the 3d Battlefield Coordination Detachment–Korea. He previously served as Special Assistant to General William S. -

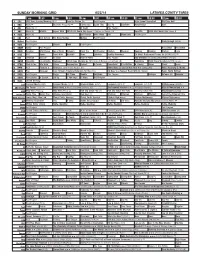

Sunday Morning Grid 6/22/14 Latimes.Com/Tv Times

SUNDAY MORNING GRID 6/22/14 LATIMES.COM/TV TIMES 7 am 7:30 8 am 8:30 9 am 9:30 10 am 10:30 11 am 11:30 12 pm 12:30 2 CBS CBS News Sunday Morning (N) Å Face the Nation (N) Paid Program High School Basketball PGA Tour Golf 4 NBC News Å Meet the Press (N) Å Conference Justin Time Tree Fu LazyTown Auto Racing Golf 5 CW News (N) Å In Touch Paid Program 7 ABC News (N) Wildlife Exped. Wild 2014 FIFA World Cup Group H Belgium vs. Russia. (N) SportCtr 2014 FIFA World Cup: Group H 9 KCAL News (N) Joel Osteen Mike Webb Paid Woodlands Paid Program 11 FOX Paid Joel Osteen Fox News Sunday Midday Paid Program 13 MyNet Paid Program Crazy Enough (2012) 18 KSCI Paid Program Church Faith Paid Program 22 KWHY Como Paid Program RescueBot RescueBot 24 KVCR Painting Wild Places Joy of Paint Wyland’s Paint This Oil Painting Kitchen Mexican Cooking Cooking Kitchen Lidia 28 KCET Hi-5 Space Travel-Kids Biz Kid$ News LinkAsia Healthy Hormones Ed Slott’s Retirement Rescue for 2014! (TVG) Å 30 ION Jeremiah Youssef In Touch Hour of Power Paid Program Into the Blue ›› (2005) Paul Walker. (PG-13) 34 KMEX Conexión En contacto Backyard 2014 Copa Mundial de FIFA Grupo H Bélgica contra Rusia. (N) República 2014 Copa Mundial de FIFA: Grupo H 40 KTBN Walk in the Win Walk Prince Redemption Harvest In Touch PowerPoint It Is Written B. Conley Super Christ Jesse 46 KFTR Paid Fórmula 1 Fórmula 1 Gran Premio Austria. -

Taming the Trolls: the Need for an International Legal Framework to Regulate State Use of Disinformation on Social Media

Taming the Trolls: The Need for an International Legal Framework to Regulate State Use of Disinformation on Social Media ASHLEY C. NICOLAS* INTRODUCTION Consider a hypothetical scenario in which hundreds of agents of the Russian GRU arrive in the United States months prior to a presidential election.1 The Russian agents spend the weeks leading up to the election going door to door in vulnerable communities, spreading false stories intended to manipulate the population into electing a candidate with policies favorable to Russian positions. The agents set up television stations, use radio broadcasts, and usurp the resources of local newspapers to expand their reach and propagate falsehoods. The presence of GRU agents on U.S. soil is an incursion into territorial integrity, a clear invasion of sovereignty.2 At every step, Russia would be required to expend tremendous resources, overcome traditional media barriers, and risk exposure, making this hypothetical grossly unrealistic. Compare the hypothetical with the actual actions of the Russians during the 2016 U.S. presidential election. Sitting behind computers in St. Petersburg, without ever setting foot in the United States, Russian agents were able to manipulate the U.S. population in the most sacred of domestic affairs: an election. Russian “trolls” targeted vulnerable populations through social media, reaching millions of users at a minimal cost and without reliance on established media institutions.3 Without using
* Georgetown Law, J.D. expected 2019; United States Military Academy, B.S. 2009; Loyola Marymount University M.Ed. 2016. © 2018, Ashley C. Nicolas. The author is a former U.S. Army Intelligence Officer. -

Applying Traditional Military Principles to Cyber Warfare

2012 4th International Conference on Cyber Conflict, C. Czosseck, R. Ottis, K. Ziolkowski (Eds.), 2012 © NATO CCD COE Publications, Tallinn. Permission to make digital or hard copies of this publication for internal use within NATO and for personal or educational use when for non-profit or non-commercial purposes is granted providing that copies bear this notice and a full citation on the first page. Any other reproduction or transmission requires prior written permission by NATO CCD COE. Applying Traditional Military Principles to Cyber Warfare. Samuel Liles, Cyber Integration and Information Operations Department, National Defense University iCollege, Washington, DC, [email protected]; Marcus Rogers, Computer and Information Technology Department, Purdue University, West Lafayette, IN, [email protected]; J. Eric Dietz, Purdue Homeland Security Institute, Purdue University, West Lafayette, IN, [email protected]; Dean Larson, Larson Performance Engineering, Munster, IN, [email protected]. Abstract: Utilizing a variety of resources, the conventions of land warfare will be analyzed for their cyber impact by using the principles designated by the United States Army. The analysis will discuss in detail the factors impacting security of the network enterprise for command and control, the information conduits found in the technological enterprise, and the effects upon the adversary and combatant commander. Keywords: cyber warfare, military principles, combatant controls, mechanisms, strategy 1. INTRODUCTION Adams informs us that rapid changes due to technology have increasingly affected the affairs of the military. This effect, whether economic, political, or otherwise, has sometimes been extreme. Technology has also made substantial impacts on the prosecution of war. Adams also informs us that information technology is one of the primary change agents in the military of today and likely of the future [1].