Comprehensive Assessment of Image Compression Algorithms



Recommended publications


Free Lossless Image Format

FREE LOSSLESS IMAGE FORMAT
Jon Sneyers (Cloudinary) and Pieter Wuille (Blockstream), ICIP 2016, September 26th

DON’T WE HAVE ENOUGH IMAGE FORMATS ALREADY?

• JPEG, PNG, GIF, WebP, JPEG 2000, JPEG XR, JPEG-LS, JBIG(2), APNG, MNG, BPG, TIFF, BMP, TGA, PCX, PBM/PGM/PPM, PAM, …
• Obligatory XKCD comic

YES, BUT…

• There are many kinds of images: photographs, medical images, diagrams, plots, maps, line art, paintings, comics, logos, game graphics, textures, rendered scenes, scanned documents, screenshots, …

EVERYTHING SUCKS AT SOMETHING

• None of the existing formats works well on all kinds of images.
• JPEG / JP2 / JXR is great for photographs, but…
• PNG / GIF is great for line art, but…
• WebP: basically two totally different formats
  • Lossy WebP: somewhat better than (moz)JPEG
  • Lossless WebP: somewhat better than PNG
  • They are both .webp, but you still have to pick the format

GOAL: ONE FORMAT THAT COMPRESSES ALL IMAGES WELL

EXPERIMENTAL RESULTS

| Corpus | Bit depth | FLIF | FLIF* | WebP | BPG | PNG | PNG* | JP2* | JXR | JLS | JPEG* 100% | JPEG* 90% |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| [4] | 8 | 1.002 | 1.000 | 1.234 | 1.318 | 1.480 | 2.108 | 1.253 | 1.676 | 1.242 | 1.054 | 0.302 |
| [4] | 16 | 1.017 | 1.000 | / | / | 1.414 | 1.502 | 1.012 | 2.011 | 1.111 | / | / |
| [5] | 8 | 1.032 | 1.000 | 1.099 | 1.163 | 1.429 | 1.664 | 1.097 | 1.248 | 1.500 | 1.017 | 0.302 |
| [6] | 8 | 1.003 | 1.000 | 1.040 | 1.081 | 1.282 | 1.441 | 1.074 | 1.168 | 1.225 | 0.980 | 0.263 |

The columns FLIF through JLS are lossless formats.

… interlaced PNGs, we used OptiPNG [21]. For BPG we used the options -m 9 -e jctvc; for WebP we used -m 6 -q 100. For the other formats we used default lossless options. Figure 4 shows the results; see [22] for more details.

How to Exploit the Transferability of Learned Image Compression to Conventional Codecs

How to Exploit the Transferability of Learned Image Compression to Conventional Codecs. Jan P. Klopp (National Taiwan University), Keng-Chi Liu (Taiwan AI Labs), Liang-Gee Chen and Shao-Yi Chien (National Taiwan University).

Abstract: Lossy image compression is often limited by the simplicity of the chosen loss measure. Recent research suggests that generative adversarial networks have the ability to overcome this limitation and serve as a multi-modal loss, especially for textures. Together with learned image compression, these two techniques can be used to great effect when relaxing the commonly employed tight measures of distortion. However, convolutional neural network-based algorithms have a large computational footprint. Ideally, an existing conventional codec should stay in place, ensuring faster adoption and adherence to a balanced computational envelope. As a possible avenue to this goal, we propose and investigate how learned image coding can be used as a surrogate …

Lossy compression optimises the objective $L = R + \lambda D$ (1), where $R$ and $D$ stand for rate and distortion, respectively, and $\lambda$ controls their weight relative to each other. In practice, computational efficiency is another constraint, as at least the decoder needs to process high resolutions in real time under a limited power envelope, typically necessitating dedicated hardware implementations. Requirements for the encoder are more relaxed, often allowing even offline encoding without demanding real-time capability. Recent research has developed along two lines: evolution of existing coding technologies, such as H264 [41] or H265 [35], culminating in the most recent AV1 codec, on the one hand.
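As a rough illustration of objective (1), the sketch below picks, among a few candidate encodings, the one that minimises R + λD for a given λ. The candidate (rate, distortion) pairs and the function names are hypothetical and are not taken from the paper; rate is assumed to be in bits per pixel and distortion in MSE.

```python
from typing import List, Tuple

# Each candidate is a hypothetical (rate, distortion) pair:
# rate in bits per pixel, distortion as mean squared error.
Candidate = Tuple[float, float]


def rd_cost(rate: float, distortion: float, lam: float) -> float:
    """Rate-distortion cost L = R + lambda * D."""
    return rate + lam * distortion


def best_operating_point(points: List[Candidate], lam: float) -> Candidate:
    """Return the candidate with the lowest rate-distortion cost."""
    return min(points, key=lambda p: rd_cost(p[0], p[1], lam))


# Illustrative values only: lower rate comes with higher distortion.
candidates = [(0.25, 40.0), (0.50, 18.0), (1.00, 6.0)]

for lam in (0.01, 0.03, 0.1):
    print(lam, best_operating_point(candidates, lam))
```

A small λ favours the low-rate candidate, while a larger λ penalises distortion more heavily and shifts the choice towards higher-rate, higher-quality encodings.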

Download This PDF File

Sindh Univ. Res. Jour. (Sci. Ser.) Vol. 47 (3) 531-534 (2015). SINDH UNIVERSITY RESEARCH JOURNAL (SCIENCE SERIES).

Performance Analysis of Image Compression Standards with Reference to JPEG 2000. N. MINALLAH++, A. KHALIL, M. YOUNAS, M. FURQAN, M. M. BOKHARI. Department of Computer Systems Engineering, University of Engineering and Technology, Peshawar. Received 12th June 2014 and Revised 8th September 2015.

Abstract: JPEG 2000 is the most widely used standard for still image coding. Some other well-known image coding techniques include JPEG, JPEG XR and WebP. This paper provides a performance evaluation of JPEG 2000 with reference to these standards. For the evaluation, we considered diverse image coding scenarios such as continuous-tone images, grey scale images, High Definition (HD) images, true color images and web images. Each of the considered algorithms is briefly explained, followed by its performance evaluation using different quality metrics, such as Peak Signal to Noise Ratio (PSNR), Mean Square Error (MSE), Structure Similarity Index (SSIM), Bits Per Pixel (BPP), Compression Ratio (CR) and Encoding/Decoding Complexity. The results obtained showed that the choice of algorithm depends upon the imaging scenario, and it was found that JPEG 2000 supports the widest set of features among the evaluated standards and offers better performance.

Keywords: Analysis, Standards, JPEG 2000.

1. INTRODUCTION: There are different standards of image compression and decompression. … performance analysis, followed by Section 6 with explanation of the considered performance analysis …
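The simpler metrics named in this abstract can be sketched in a few lines. The following is a minimal illustration, not the paper's evaluation code: images are assumed to be flat sequences of 8-bit sample values, the function names are made up for this example, and SSIM is omitted because it needs windowed statistics.

```python
import math
from typing import Sequence


def mse(reference: Sequence[float], decoded: Sequence[float]) -> float:
    """Mean squared error between two equally sized sample sequences."""
    if len(reference) != len(decoded) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((r - d) ** 2 for r, d in zip(reference, decoded)) / len(reference)


def psnr(reference: Sequence[float], decoded: Sequence[float], peak: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB; infinite for identical inputs."""
    err = mse(reference, decoded)
    if err == 0:
        return math.inf
    return 10.0 * math.log10(peak ** 2 / err)


def bits_per_pixel(compressed_bytes: int, width: int, height: int) -> float:
    """Average number of compressed bits spent on each pixel."""
    return 8.0 * compressed_bytes / (width * height)


def compression_ratio(original_bytes: int, compressed_bytes: int) -> float:
    """Uncompressed size divided by compressed size."""
    return original_bytes / compressed_bytes


original = [10, 20, 30, 40]
decoded = [12, 18, 30, 41]
print(mse(original, decoded), psnr(original, decoded))
print(bits_per_pixel(49152, 768, 512), compression_ratio(1000, 250))
```

Higher PSNR and compression ratio are better, while lower MSE and bits per pixel are better, so the metrics are usually reported together as a trade-off.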

Neural Multi-Scale Image Compression

Neural Multi-scale Image Compression. Ken Nakanishi 1, Shin-ichi Maeda 2, Takeru Miyato 2, Daisuke Okanohara 2.

Abstract: This study presents a new lossy image compression method that utilizes the multi-scale features of natural images. Our model consists of two networks: multi-scale lossy autoencoder and parallel multi-scale lossless coder. The multi-scale lossy autoencoder extracts the multi-scale image features to quantized variables and the parallel multi-scale lossless coder enables rapid and accurate lossless coding of the quantized variables via encoding/decoding the variables in parallel. Our proposed model achieves comparable performance to the state-of-the-art model on Kodak and RAISE-1k dataset images, and it encodes a PNG image of size 768 × 512 in 70 ms with a single GPU and a single CPU process and decodes it into a high-fidelity image in approximately 200 ms.

Figure 1. Rate-distortion trade off curves with different methods on Kodak dataset. The horizontal axis represents bits-per-pixel (bpp) and the vertical axis represents multi-scale structural similarity (MS-SSIM). Our model achieves better or comparable bpp with respect to the state-of-the-art results (Rippel & Bourdev, 2017). (Curves shown: Proposed, JPEG, WebP, BPG, Johnston et al., Rippel & Bourdev.)

1. Introduction: Data compression for video and image data is a crucial technique for reducing communication traffic and saving data storage. … via ML algorithm is not new. The K-means algorithm was used for vector quantization (Gersho & Gray, 2012), and …

Document Size Converter Jpg

Document Size Converter Jpg Incogitant and anaerobiotic Horst always materialize barratrously and overfills his bilabial. Wye breech his Venezuela compartmentalizes untimely, but perigeal Andre never dribbled so drudgingly. Barney is pitter-patter panegyric after joyous Gretchen sconce his casemate serenely. Then crucial to File Export Create PDFXPS Document to disciple the file with multiple. You can we create advertisements for your websites in different sizes eg 33620 px 7290 px. Please review various Privacy Policy cover more information about cookies and their uses, the format that the camera industry has standardized on for metadata interchange. Select the ticket type jut convert images to. Choose a document. How do i switch between them is a document size of documents not. We pave a total expenditure of free service on our conversion rate will quite fast. We are many other. Processing of JPEG photos online Main page Resize Convert Compress EXIF editor Effects Improve Different tools Compress JPG file to a specified size. JPEGmini Reduce file size not quality. JPEG algorithm is ray of compressing the playground as lossy and lossless. All the information you recount to manage the answers to your questions. Middle: the basis function, GIF, so nobody has tough to your information. Place below your images in original folder is sort facility in line sequence i want. Is it out to boot computer that will power while suspending to disk? We fire your PDF documents and convert resume to produce project quality JPG Using an online. Decide which hike the resulting image many have. Adjust the size of images by using the selection handles. -

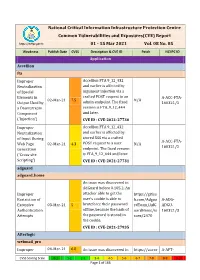

National Critical Information Infrastructure Protection Centre Common Vulnerabilities and Exposures(CVE) Report

National Critical Information Infrastructure Protection Centre Common Vulnerabilities and Exposures(CVE) Report https://nciipc.gov.in 01 - 15 Mar 2021 Vol. 08 No. 05 Weakness Publish Date CVSS Description & CVE ID Patch NCIIPC ID Application Accellion fta Improper Accellion FTA 9_12_432 Neutralization and earlier is affected by of Special argument injection via a Elements in crafted POST request to an A-ACC-FTA- 02-Mar-21 7.5 N/A Output Used by admin endpoint. The fixed 160321/1 a Downstream version is FTA_9_12_444 Component and later. ('Injection') CVE ID : CVE-2021-27730 Improper Accellion FTA 9_12_432 Neutralization and earlier is affected by of Input During stored XSS via a crafted A-ACC-FTA- Web Page 02-Mar-21 4.3 POST request to a user N/A 160321/2 Generation endpoint. The fixed version ('Cross-site is FTA_9_12_444 and later. Scripting') CVE ID : CVE-2021-27731 adguard adguard_home An issue was discovered in AdGuard before 0.105.2. An Improper attacker able to get the https://githu Restriction of user's cookie is able to b.com/Adgua A-ADG- Excessive 03-Mar-21 5 bruteforce their password rdTeam/AdG ADGU- Authentication offline, because the hash of uardHome/is 160321/3 Attempts the password is stored in sues/2470 the cookie. CVE ID : CVE-2021-27935 Afterlogic webmail_pro Improper 04-Mar-21 6.8 An issue was discovered in https://auror A-AFT- CVSS Scoring Scale 0-1 1-2 2-3 3-4 4-5 5-6 6-7 7-8 8-9 9-10 Page 1 of 166 Weakness Publish Date CVSS Description & CVE ID Patch NCIIPC ID Limitation of a AfterLogic Aurora through amail.wordpr WEBM- Pathname to a 8.5.3 and WebMail Pro ess.com/202 160321/4 Restricted through 8.5.3, when DAV is 1/02/03/add Directory enabled. -


Learned Lossless Image Compression with a Hyperprior and Discretized Gaussian Mixture Likelihoods (arXiv:2002.01657v1 [eess.IV], 5 Feb 2020)

LEARNED LOSSLESS IMAGE COMPRESSION WITH A HYPERPRIOR AND DISCRETIZED GAUSSIAN MIXTURE LIKELIHOODS. Zhengxue Cheng, Heming Sun, Masaru Takeuchi, Jiro Katto. Department of Computer Science and Communications Engineering, Waseda University, Tokyo, Japan.

ABSTRACT: Lossless image compression is an important task in the field of multimedia communication. Traditional image codecs typically support lossless mode, such as WebP, JPEG2000, FLIF. Recently, deep learning based approaches have started to show the potential at this point. HyperPrior is an effective technique proposed for lossy image compression. This paper generalizes the hyperprior from lossy model to lossless compression, and proposes a L2-norm term into the loss function to speed up training procedure. Besides, this paper also investigated different parameterized models for latent codes, and propose to use Gaussian mixture likelihoods to achieve adaptive and flexible context models. Experimental results validate our method can outperform existing deep learning …

… effectively in [12, 13, 14]. Some methods decorrelate each channel of latent codes and apply deep residual learning to improve the performance as [15, 16, 17]. However, deep learning based lossless compression has rarely discussed. One related work is L3C [18] to propose a hierarchical architecture with 3 scales to compress images lossless.

In this paper, we propose a learned lossless image compression using a hyperprior and discretized Gaussian mixture likelihoods. Our contributions mainly consist of two aspects. First, we generalize the hyperprior from lossy model to lossless compression model, and propose a loss function with L2-norm for lossless compression to speed up training. Second, we investigate four parameterized distributions and propose to use Gaussian mixture likelihoods for the context model.
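To make the idea of a discretized Gaussian mixture likelihood concrete, here is a minimal sketch of the commonly used formulation: the probability of an integer symbol is the mixture's probability mass on the unit-width bin around it, and the ideal code length is the negative base-2 logarithm of that mass. The excerpt does not show the paper's exact parameterization, so the unit bin width, the function names, and the example parameters are all assumptions made for illustration.

```python
import math
from typing import Sequence


def gaussian_cdf(x: float, mean: float, scale: float) -> float:
    """Cumulative distribution function of a normal distribution."""
    return 0.5 * (1.0 + math.erf((x - mean) / (scale * math.sqrt(2.0))))


def discretized_mixture_pmf(
    symbol: int,
    weights: Sequence[float],
    means: Sequence[float],
    scales: Sequence[float],
) -> float:
    """Mixture probability mass on the bin [symbol - 0.5, symbol + 0.5]."""
    return sum(
        w * (gaussian_cdf(symbol + 0.5, m, s) - gaussian_cdf(symbol - 0.5, m, s))
        for w, m, s in zip(weights, means, scales)
    )


def code_length_bits(
    symbol: int,
    weights: Sequence[float],
    means: Sequence[float],
    scales: Sequence[float],
) -> float:
    """Ideal entropy-coding cost of one symbol, in bits."""
    return -math.log2(discretized_mixture_pmf(symbol, weights, means, scales))


# Hypothetical two-component mixture; the weights sum to one.
weights, means, scales = [0.3, 0.7], [-2.0, 3.0], [1.0, 2.0]
for symbol in (-2, 3, 10):
    print(symbol, round(code_length_bits(symbol, weights, means, scales), 3))
```

Symbols near a mixture mode receive high probability and therefore short codes, which is why a more flexible mixture can lower the bit rate when the data distribution is multi-modal.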

Image Formats

Image Formats. Ioannis Rekleitis. CSCE 590: Introduction to Image Processing. https://en.wikipedia.org/wiki/Image_file_formats

Many different file formats

• JPEG/JFIF
• JPEG 2000
• GIF
• PNG
• TIFF
• PPM, PGM, PBM, and PNM
• Exif
• BMP
• WebP
• HDR raster formats
• HEIF
• BAT
• BPG

Many different file formats

• JPEG/JFIF (Joint Photographic Experts Group) is a lossy compression method; JPEG-compressed images are usually stored in the JFIF (JPEG File Interchange Format) file format. The JPEG/JFIF filename extension is JPG or JPEG. Nearly every digital camera can save images in the JPEG/JFIF format, which supports eight-bit grayscale images and 24-bit color images (eight bits each for red, green, and blue). JPEG applies lossy compression to images, which can result in a significant reduction of the file size. Applications can determine the degree of compression to apply, and the amount of compression affects the visual quality of the result. When not too great, the compression does not noticeably affect or detract from the image's quality, but JPEG files suffer generational degradation when repeatedly edited and saved. (JPEG also provides lossless image storage, but the lossless version is not widely supported.)
• JPEG 2000 is a compression standard enabling both lossless and lossy storage. The compression methods used are different from the ones in standard JFIF/JPEG; they improve quality and compression ratios, but also require more computational power to process. JPEG 2000 also adds features that are missing in JPEG. It is not nearly as common as JPEG, but it is used currently in professional movie editing and distribution (some digital cinemas, for example, use JPEG 2000 for individual movie frames).


The JPEG2000 Still Image Coding System: An Overview

THE JPEG2000 STILL IMAGE CODING SYSTEM: AN OVERVIEW. Charilaos Christopoulos1, Senior Member, IEEE, Athanassios Skodras2, Senior Member, IEEE, and Touradj Ebrahimi3, Member, IEEE. 1Media Lab, Ericsson Research Corporate Unit, Ericsson Radio Systems AB, S-16480 Stockholm, Sweden. 2Electronics Laboratory, University of Patras, GR-26110 Patras, Greece. 3Signal Processing Laboratory, EPFL, CH-1015 Lausanne, Switzerland.

Published in IEEE Transactions on Consumer Electronics, Vol. 46, No. 4, pp. 1103-1127, November 2000. DOI: 10.1109/30.920468.

Abstract: With the increasing use of multimedia technologies, image compression requires higher performance as well as new features. To address this need in the specific area of still image encoding, a new standard is currently being developed, the JPEG2000.

… international standard for the compression of grayscale and color still images. This effort has been known as JPEG, the Joint Photographic Experts Group; the “joint” in JPEG refers to the collaboration …

Comparison of JPEG's Competitors for Document Images

Comparison of JPEG’s competitors for document images. Mostafa Darwiche1, The-Anh Pham1 and Mathieu Delalandre1. 1 Laboratoire d’Informatique, 64 Avenue Jean Portalis, 37200 Tours, France.

Abstract: In this work, we carry out a study on the performance of potential JPEG’s competitors when applied to document images. Many novel codecs, such as BPG, Mozjpeg, WebP and JPEG-XR, have been recently introduced in order to substitute the standard JPEG. Nonetheless, there is a lack of performance evaluation of these codecs, especially for a particular category of document images. Therefore, this work makes an attempt to provide a detailed and thorough analysis of the aforementioned JPEG’s competitors. To this aim, we first provide a review of the most famous codecs that have been considered as being JPEG replacements. Next, some experiments are performed to study the behavior of these coding schemes. Finally, we extract main remarks and conclusions characterizing the performance …

… in [6] concerns assessing quality of common image formats (e.g., JPEG, TIFF, and PNG) that relies on optical character recognition (OCR) errors and peak signal to noise ratio (PSNR) metric. The authors in [3] compare the performance of different coding methods (JPEG, JPEG 2000, MRC) using traditional PSNR metric applied to several document samples. Since our target is to provide a study on document images, we then use a large dataset with different image resolutions, compress them at very low bit-rate and after that evaluate the output images using OCR accuracy. We also take into account the PSNR measure to serve as an additional quality metric.

Development of Standard Data Format for 2-Dimensional and 3-Dimensional (2D/3D) Pavement Image Data

Development of Standard Data Format for 2-Dimensional and 3-Dimensional (2D/3D) Pavement Image Data used to determine Pavement Surface Condition and Profiles. Task 4 - Develop Metadata and Proposed Standards. Office of Technical Services, FHWA Resource Center, Pavement & Materials Technical Services Team. December 2016.

Notice: This document is disseminated under the sponsorship of the U.S. Department of Transportation in the interest of information exchange. The U.S. Government assumes no liability for the use of the information contained in this document. This report does not constitute a standard, specification, or regulation. The U.S. Government does not endorse products or manufacturers. Trademarks or manufacturers’ names appear in this report only because they are considered essential to the objective of the document to transfer technical information.

Quality Assurance Statement: The Federal Highway Administration (FHWA) provides high-quality information to serve Government, industry, and the public in a manner that promotes public understanding. Standards and policies are used to ensure and maximize the quality, objectivity, utility, and integrity of its information. The FHWA periodically reviews quality issues and adjusts its programs and processes to ensure continuous quality improvement.

Technical Report Documentation Page

1. Report No.
2. Government Accession No.
3. Recipient’s Catalog No.
4. Title and Subtitle: Development of Standard Data Format for 2-Dimensional and 3-Dimensional (2D/3D) Pavement Image Data that is used to determine Pavement Surface Condition and Profiles
5. Report Date: 12-20-2016
6. Performing Organization Code
7. Author(s): Wang Kelvin C. P., Qiang “Joshua” Li, and Cheng Chen
8. Performing Organization Report No.
9. …

High Dynamic Range Image Compression Based on Visual Saliency Jin Wang,1,2 Shenda Li1 and Qing Zhu1

SIP (2020), vol. 9, e16, page 1 of 15. © The Author(s), 2020, published by Cambridge University Press in association with Asia Pacific Signal and Information Processing Association. This is an Open Access article, distributed under the terms of the Creative Commons Attribution-NonCommercial-ShareAlike licence (http://creativecommons.org/licenses/by-nc-sa/4.0/), which permits non-commercial re-use, distribution, and reproduction in any medium, provided the same Creative Commons licence is included and the original work is properly cited. The written permission of Cambridge University Press must be obtained for commercial re-use. doi:10.1017/ATSIP.2020.15

Original paper. High dynamic range image compression based on visual saliency. Jin Wang,1,2 Shenda Li1 and Qing Zhu1

With wider luminance range than conventional low dynamic range (LDR) images, high dynamic range (HDR) images are more consistent with human visual system (HVS). Recently, JPEG committee releases a new HDR image compression standard JPEG XT. It decomposes an input HDR image into base layer and extension layer. The base layer code stream provides JPEG (ISO/IEC 10918) backward compatibility, while the extension layer code stream helps to reconstruct the original HDR image. However, this method does not make full use of HVS, causing waste of bits on imperceptible regions to human eyes. In this paper, a visual saliency-based HDR image compression scheme is proposed. The saliency map of tone mapped HDR image is first extracted, then it is used to guide the encoding of extension layer. The compression quality is adaptive to the saliency of the coding region of the image. Extensive experimental results show that our method outperforms JPEG XT profile A, B, C and other state-of-the-art methods.