Linux Audio Conference 2019

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Proceedings 2005

LAC2005 Proceedings
3rd International Linux Audio Conference
April 21 – 24, 2005
ZKM | Zentrum für Kunst und Medientechnologie, Karlsruhe, Germany

Published by ZKM | Zentrum für Kunst und Medientechnologie, Karlsruhe, Germany, April 2005. All copyright remains with the authors. www.zkm.de/lac/2005

Content

Preface
Staff

Thursday, April 21, 2005 – Lecture Hall
11:45 AM Peter Brinkmann
    MidiKinesis – MIDI controllers for (almost) any purpose
01:30 PM Victor Lazzarini
    Extensions to the Csound Language: from User-Defined to Plugin Opcodes and Beyond
02:15 PM Albert Gräf
    Q: A Functional Programming Language for Multimedia Applications
03:00 PM Stéphane Letz, Dominique Fober and Yann Orlarey
    jackdmp: Jack server for multi-processor machines
03:45 PM John ffitch
    On The Design of Csound5
04:30 PM Pau Arumí and Xavier Amatriain
    CLAM, an Object Oriented Framework for Audio and Music

Friday, April 22, 2005 – Lecture Hall
11:00 AM Ivica Ico Bukvic
    “Made in Linux” – The Next Step
11:45 AM Christoph Eckert
    Linux Audio Usability Issues
01:30 PM Marije Baalman
    Updates of the WONDER software interface for using Wave Field Synthesis
02:15 PM Georg Bönn
    Development of a Composer’s Sketchbook

Saturday, April 23, 2005 – Lecture Hall
11:00 AM Jürgen Reuter
    SoundPaint – Painting Music
11:45 AM Michael Schüepp, Rene Widtmann, Rolf “Day” Koch and Klaus Buchheim
    System design for audio record and playback with a computer using FireWire
01:30 PM John ffitch and Tom Natt
    Recording all Output from a Student Radio Station -

Moving Average Filters

The Scientist and Engineer's Guide to Digital Signal Processing

Chapter 15: Moving Average Filters

The moving average is the most common filter in DSP, mainly because it is the easiest digital filter to understand and use. In spite of its simplicity, the moving average filter is optimal for a common task: reducing random noise while retaining a sharp step response. This makes it the premier filter for time domain encoded signals. However, the moving average is the worst filter for frequency domain encoded signals, with little ability to separate one band of frequencies from another. Relatives of the moving average filter include the Gaussian, Blackman, and multiple-pass moving average. These have slightly better performance in the frequency domain, at the expense of increased computation time.

Implementation by Convolution

As the name implies, the moving average filter operates by averaging a number of points from the input signal to produce each point in the output signal. In equation form, this is written:

$$ y[i] = \frac{1}{M} \sum_{j=0}^{M-1} x[i+j] $$

Equation 15-1: Equation of the moving average filter. In this equation, x[ ] is the input signal, y[ ] is the output signal, and M is the number of points used in the moving average. This equation only uses points on one side of the output sample being calculated.

For example, in a 5 point moving average filter, point 80 in the output signal is given by:

$$ y[80] = \frac{x[80] + x[81] + x[82] + x[83] + x[84]}{5} $$

As an alternative, the group of points from the input signal can be chosen symmetrically around the output point:

$$ y[80] = \frac{x[78] + x[79] + x[80] + x[81] + x[82]}{5} $$

This corresponds to changing the summation in Eq. -
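The two windowing choices described above can be tried out with a few lines of Python. This is only a minimal sketch: the function names and the ramp test signal are hypothetical, not taken from the book, and the code evaluates the average directly rather than through a convolution routine.

```python
def moving_average_point(x, i, m, symmetric=False):
    """Return output sample y[i] of an m-point moving average over x.

    One-sided (default): averages x[i] .. x[i + m - 1], as in Equation 15-1.
    Symmetric: averages the m points centered on x[i]; m must be odd.
    """
    if symmetric:
        if m % 2 == 0:
            raise ValueError("symmetric averaging needs an odd m")
        start = i - (m - 1) // 2
    else:
        start = i
    if start < 0 or start + m > len(x):
        raise IndexError("window extends past the ends of the signal")
    return sum(x[start:start + m]) / m


def moving_average(x, m):
    """One-sided m-point moving average; the output has len(x) - m + 1 samples."""
    return [moving_average_point(x, i, m) for i in range(len(x) - m + 1)]


# Hypothetical test signal: a ramp, so the averages are easy to check by hand.
signal = [float(n) for n in range(100)]
one_sided = moving_average_point(signal, 80, 5)                  # mean of x[80..84]
centered = moving_average_point(signal, 80, 5, symmetric=True)   # mean of x[78..82]
print(one_sided, centered)  # prints 82.0 80.0
```

On a ramp, the one-sided average lands two samples ahead of the input value at the same index, while the symmetric average reproduces it; this is the relative shift between input and output that the symmetric form avoids.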

Discrete-Time Fourier Transform 7

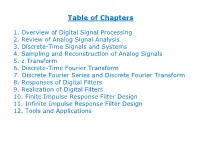

Table of Chapters
1. Overview of Digital Signal Processing
2. Review of Analog Signal Analysis
3. Discrete-Time Signals and Systems
4. Sampling and Reconstruction of Analog Signals
5. z Transform
6. Discrete-Time Fourier Transform
7. Discrete Fourier Series and Discrete Fourier Transform
8. Responses of Digital Filters
9. Realization of Digital Filters
10. Finite Impulse Response Filter Design
11. Infinite Impulse Response Filter Design
12. Tools and Applications

Chapter 1: Overview of Digital Signal Processing

Chapter Intended Learning Outcomes:
(i) Understand basic terminology in digital signal processing
(ii) Differentiate digital signal processing and analog signal processing
(iii) Describe basic digital signal processing application areas

H. C. So

Signal: anything that conveys information, e.g.,
- Speech
- Electrocardiogram (ECG)
- Radar pulse
- DNA sequence
- Stock price
- Code division multiple access (CDMA) signal
- Image
- Video

[Fig. 1.1: Speech. Waveform of the vowel "a"; horizontal axis: time (s).]

[Fig. 1.2: ECG. Vertical axis: ECG; horizontal axis: time (s).]

[Fig. 1.3: Transmitted & received radar waveforms. Panels: transmitted pulse, received pulse; horizontal axis: time.]

- A radar transceiver sends a 1-D sinusoidal pulse at time 0.
- It then receives the echo reflected by an object at a certain range.
- The reflected signal is noisy and has a time delay that corresponds to the round-trip propagation time of the radar pulse.
- Given the signal propagation speed, the time delay is simply related to the range by Eq. (1.1).
- As a result, the radar pulse contains the object range information.
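The relation between echo delay and range can be made concrete with a small calculation. The symbols of Eq. (1.1) did not survive in this excerpt, so the sketch below does not reproduce the slide's notation; it only assumes the standard round-trip argument (the pulse travels to the object and back, so the delay is twice the range divided by the propagation speed). The function names and the 15 km example are hypothetical.

```python
SPEED_OF_LIGHT = 299_792_458.0  # propagation speed of a radar pulse in vacuum, m/s


def round_trip_delay(range_m, speed=SPEED_OF_LIGHT):
    """Delay in seconds of an echo from an object range_m metres away.

    Assumption: the pulse covers the range twice (out and back).
    """
    return 2.0 * range_m / speed


def range_from_delay(delay_s, speed=SPEED_OF_LIGHT):
    """Invert the relation: object range in metres from the measured echo delay."""
    return speed * delay_s / 2.0


# Hypothetical example: an object 15 km away.
delay = round_trip_delay(15_000.0)
print(delay)                    # echo delay in seconds (about a tenth of a millisecond)
print(range_from_delay(delay))  # recovers the range in metres
```

In practice the delay has to be estimated from the noisy received pulse first, which is where the signal processing comes in.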

Finite Impulse Response (FIR) Digital Filters (II) Ideal Impulse Response Design Examples Yogananda Isukapalli

FIR Filter Design Problem

Given H(z) or H(e^{jω}), find the filter coefficients {b_0, b_1, b_2, …, b_{N-1}}, which are equal to {h_0, h_1, h_2, …, h_{N-1}} in the case of FIR filters.

[Figure: FIR filter structure. Tapped delay line of z^{-1} elements with input x[n], tap weights h_0 … h_{N-1}, and output y[n].]

Consider a general (infinite impulse response) definition:

$$ H(z) = \sum_{n=-\infty}^{\infty} h[n]\, z^{-n} $$

From complex variable theory, the inverse transform is:

$$ h[n] = \frac{1}{2\pi j} \oint_C H(z)\, z^{n-1}\, dz $$

where C is a counterclockwise closed contour in the region of convergence of H(z) and encircling the origin of the z-plane.

Evaluating H(z) on the unit circle (z = e^{jω}):

$$ H(e^{j\omega}) = \sum_{n=-\infty}^{\infty} h[n]\, e^{-jn\omega} $$

$$ h[n] = \frac{1}{2\pi} \int_{-\pi}^{\pi} H(e^{j\omega})\, e^{jn\omega}\, d\omega, \qquad \text{where } dz = j e^{j\omega}\, d\omega $$

Design of an ideal low pass FIR digital filter

[Figure: ideal low-pass frequency response H(e^{jω}) with gain K in the passband −ω_c ≤ ω ≤ ω_c; frequency axis marked from −2π to 2π.]

Find the ideal low pass impulse response {h[n]}:

$$ h_{LP}[n] = \frac{1}{2\pi} \int_{-\pi}^{\pi} H(e^{j\omega})\, e^{jn\omega}\, d\omega = \frac{1}{2\pi} \int_{-\omega_c}^{\omega_c} K e^{jn\omega}\, d\omega $$

Hence

$$ h_{LP}[n] = \frac{K}{n\pi} \sin(n\omega_c), \qquad n = 0, \pm 1, \pm 2, \ldots, \pm\infty $$

(at n = 0 the expression is taken as its limit, Kω_c/π).

Let K = 1, ω_c = π/4, n = 0, ±1, …, ±10. The impulse response coefficients are:

n = 0:    h[n] = 0.25
n = ±1:   h[n] = 0.225
n = ±2:   h[n] = 0.159
n = ±3:   h[n] = 0.075
n = ±4:   h[n] = 0
n = ±5:   h[n] = -0.045
n = ±6:   h[n] = -0.053
n = ±7:   h[n] = -0.032
n = ±8:   h[n] = 0
n = ±9:   h[n] = 0.025
n = ±10:  h[n] = 0.032

Non Causal FIR Impulse Response

The impulse response above is non-causal, because it is non-zero for negative n. We can make it causal if we shift h_LP[n] by 10 units to the right:

$$ h_{LP}[n] = \frac{K}{(n-10)\pi} \sin\big((n-10)\,\omega_c\big), \qquad n = 0, 1, 2, \ldots $$ -
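The coefficient values for K = 1 and ω_c = π/4 can be recomputed from the closed-form impulse response. The following is a minimal sketch rather than the slides' own code; the function names are hypothetical, and the n = 0 tap is evaluated as the limit Kω_c/π because the formula itself divides by zero there.

```python
import math


def ideal_lowpass(n, cutoff, gain=1.0):
    """Sample n of the ideal low-pass impulse response, K*sin(n*wc)/(n*pi)."""
    if n == 0:
        return gain * cutoff / math.pi  # limit of the formula as n -> 0
    return gain * math.sin(n * cutoff) / (n * math.pi)


def causal_truncated_lowpass(half_width, cutoff, gain=1.0):
    """Truncate to |n| <= half_width, then shift right by half_width samples.

    The result is a causal FIR filter with 2 * half_width + 1 taps.
    """
    return [ideal_lowpass(k - half_width, cutoff, gain)
            for k in range(2 * half_width + 1)]


taps = causal_truncated_lowpass(10, math.pi / 4)
for k, h in enumerate(taps):
    # k is the causal tap index; k - 10 is the index n of the non-causal response
    print(f"k = {k:2d}   n = {k - 10:3d}   h = {h: .3f}")
```

The taps are symmetric about the centre tap (k = 10), which is what gives the shifted filter a linear phase response.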

Computing and Computer Music 1: Computer Music (MAO) Setups (1)

This file is the “digital sound” course material for the Régisseur Son (sound engineer), Techniciens Polyvalent (multi-skilled technician) and MAO (computer-assisted music) training programmes at GRIM-EDIF in Lyon. These notes are put online only as an aid for those students and cannot be regarded as a course in themselves. They use illustrations collected over the years on the Internet, unfortunately without keeping the links. Please excuse me for this, or contact me... for any question: [email protected]

Part 4: Computing and Computer Music

1: Computer music setups (1)

[Diagram labels: audio interface with analog inputs/outputs; stereo or surround monitoring loudspeakers; microphone(s); computer running multitrack, editing, processing and synthesis software, plugins, etc. (+ CD/DVD/Blu-ray reader-burner); control surface; MIDI keyboard]

All operations are carried out inside the computer:
- the audio interface must allow low latencies for instrumental playing, but it does not need many analog inputs/outputs
- the RAM must be able to hold many plugins (and large quantities of samples)
- the processor must be able to compute many processing tasks in real time
- the storage space and its speed must be substantial
- the control peripherals are kept to a minimum, and the total cost is limited

DIGITAL SOUND - 4 - COMPUTING

2: Computer music setups (2)

[Diagram labels: stereo or surround monitoring loudspeakers; microphones; instruments; audio interface with many analog inputs/outputs; computer; effects; multitrack, editing and processing software, plugins (+ -

The Book of Audacity

THE BOOK OF AUDACITY
Record, Edit, Mix, and Master with the Free Audio Editor
by Carla Schroder
San Francisco

THE BOOK OF AUDACITY. Copyright © 2011 by Carla Schroder. All rights reserved. No part of this work may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopying, recording, or by any information storage or retrieval system, without the prior written permission of the copyright owner and the publisher.

ISBN-10: 1-59327-270-7
ISBN-13: 978-1-59327-270-8

Publisher: William Pollock
Production Editor: Serena Yang
Cover and Interior Design: Octopod Studios
Developmental Editor: Tyler Ortman
Technical Reviewer: Alvin Goats
Copyeditor: Kim Wimpsett
Compositor: Serena Yang
Proofreader: Paula L. Fleming
Indexer: Nancy Guenther

For information on book distributors or translations, please contact No Starch Press, Inc. directly: No Starch Press, Inc. 38 Ringold Street, San Francisco, CA 94103 phone: 415.863.9900; fax: 415.863.9950; [email protected]; www.nostarch.com

Library of Congress Cataloging-in-Publication Data
Schroder, Carla. The book of Audacity : record, edit, mix, and master with the free audio editor / by Carla Schroder. p. cm. Includes bibliographical references. ISBN-13: 978-1-59327-270-8 ISBN-10: 1-59327-270-7 1. Audacity (Computer file) 2. Digital audio editors. I. Title. ML74.4.A84S37 2010 781.3’4536-dc22 2010037594

No Starch Press and the No Starch Press logo are registered trademarks of No Starch Press, Inc. Other product and company names mentioned herein may be the trademarks of their respective owners. -

Photo Editing

All recommendations are from: http://www.mediabistro.com/10000words/7-essential-multimedia-tools-and-their_b376

Paid: Photoshop
Free: Splashup

Photoshop may be the industry leader when it comes to photo editing and graphic design, but Splashup, a free online tool, has many of the same capabilities at no cost. Splashup has lots of the tools you’d expect to find in Photoshop and has a similar layout, which is a bonus for those looking to get started right away.

- Splashup: requires free registration; Flash-based interface; resize; crop; layers; flip; sharpen; blur; color effects; special effects
- Fotoflexer/Photobucket: crop; resize; rotate; flip; hue/saturation/lightness; contrast; various Photoshop-like effects
- Photoshop Express: requires free registration; 2 GB storage; crop; rotate; resize; auto correct; exposure correction; red-eye removal; retouching; saturation; white balance; sharpen; color correction; various other effects
- Picnik: “Auto-fix”; rotate; crop; resize; exposure correction; color correction; sharpen; red-eye correction
- Pic Resize: resize; crop; rotate; brightness/contrast; conversion; other effects
- Snipshot: resize; crop; enhancement features; exposure, contrast, saturation, hue and sharpness correction; rotate; grayscale
- rsizr: for quick cropping and resizing
- EasyCropper: for quick cropping and resizing
- Pixenate: enhancement features; crop; resize; rotate; color effects
- FlauntR: requires free registration; resize; rotate; crop; various effects
- LunaPic: similar to Microsoft Paint; many features including crop, scale -

Porting Portaudio API on ASIO

GRAME - Computer Music Research Lab.
Technical Note - 01-11-06

Porting PortAudio API on ASIO

Stéphane Letz
November 2001

Grame - Computer Music Research Laboratory
9, rue du Garet BP 1185 69202 FR - LYON Cedex 01
[email protected]

Abstract

This document describes a port of the PortAudio API using the ASIO API on Macintosh and Windows. It explains the technical choices made, the buffer size adaptation techniques that guarantee minimal additional latency, the results and the limitations.

1 The ASIO API

ASIO (Audio Stream Input/Output) is an API defined and proposed by Steinberg. It addresses the areas of efficient audio processing, synchronization, low latency and extensibility on the hardware side. ASIO allows the handling of multi-channel professional audio cards, different sample rates (32 kHz to 96 kHz) and different sample formats (16, 24 or 32 bits, or 32/64-bit floating point formats). ASIO is available on MacOS and Windows.

2 PortAudio API

PortAudio is a library that provides streaming audio input and output. It is a cross-platform API that works on Windows, Macintosh, Linux, SGI, FreeBSD and BeOS. This means that programs that need to process or generate an audio signal can run on several different computers just by recompiling the source code. PortAudio is intended to promote the exchange of audio synthesis software between developers on different platforms.

3 Technical choices

Porting PortAudio to ASIO means that some technical choices have to be made. The life cycle of the ASIO driver must be “mapped” to the life cycle of a PortAudio application. Each PortAudio function will be implemented using one or more ASIO functions. -

Python-Sounddevice Release 0.4.2

python-sounddevice, Release 0.4.2
Matthias Geier
2021-07-18

Contents

1 Installation
2 Usage
    2.1 Playback
    2.2 Recording
    2.3 Simultaneous Playback and Recording
    2.4 Device Selection
    2.5 Callback Streams
    2.6 Blocking Read/Write Streams
3 Example Programs
    3.1 Play a Sound File
    3.2 Play a Very Long Sound File
    3.3 Play a Very Long Sound File without Using NumPy
    3.4 Play a Web Stream
    3.5 Play a Sine Signal
    3.6 Input to Output Pass-Through
    3.7 Plot Microphone Signal(s) in Real-Time
    3.8 Real-Time Text-Mode Spectrogram
    3.9 Recording with Arbitrary Duration
    3.10 Using a stream in an asyncio coroutine
    3.11 Creating an asyncio generator for audio blocks
4 Contributing
    4.1 Reporting Problems
    4.2 Development Installation
    4.3 Building the Documentation
5 API Documentation -

Scummvm Documentation

ScummVM Documentation
CadiH
May 10, 2021

The basics

1 Understanding the interface
    1.1 The Launcher
    1.2 The Global Main Menu
2 Handling game files
    2.1 Multi-disc games
    2.2 CD audio
    2.3 Macintosh games
3 Adding and playing a game
    3.1 Where to get the games
    3.2 Adding games to the Launcher
    3.3 A note about copyright
4 Saving and loading a game
    4.1 Saving a game
    4.2 Location of saved game files
    4.3 Loading a game
5 Keyboard shortcuts
6 Changing settings
    6.1 From the Launcher
    6.2 In the configuration file
7 Connecting a cloud service
8 Using the local web server
9 AmigaOS 4
    9.1 What you’ll need
    9.2 Installing ScummVM
    9.3 Transferring game files
    9.4 Controls
    9.5 Paths
    9.6 Settings
    9.7 Known issues
10 Android
    10.1 What you’ll need -

How to Create Music with GNU/Linux

How to create music with GNU/Linux
by Emmanuel Saracco
[email protected]

Copyright © 2005-2009 Emmanuel Saracco

Warning: WORK IN PROGRESS

Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or any later version published by the Free Software Foundation; with no Invariant Sections, no Front-Cover Texts, and no Back-Cover Texts. A copy of the license is available on the World Wide Web at http://www.gnu.org/licenses/fdl.html.

Revision History
Revision 0.0, 2009-01-30, revised by: es. Not yet versioned: it is still a work in progress.

Dedication

This howto is dedicated to all GNU/Linux users who refuse to use proprietary software to work with audio. Many thanks to all Free developers and Free composers who help us day by day to make this possible.

Table of Contents

Foreword
1. System settings and tuning
    1.1. My Studio
    1.2. File system
    1.3. Linux Kernel -

Qtractor – a Audio/MIDI Multi-Track Sequencer

Qtractor – A Audio/MIDI multi-track sequencer Rui Nuno CAPELA rncbc.org [email protected] Abstract sequencer for MIDI, thus currently being a Linux- only application. There are not known intentions Qtractor is a MIDI/Audio multi-track sequencer on support to any other platforms. application written in C++ around the Qt4 toolkit Qtractor is free open-source software, licensed using Qt Designer. The initial target platform will be Linux, where the Jack Audio Connection Kit under the GPL and is welcoming all collaboration (JACK) for audio, and the Advanced Linux Sound and review from the Linux Audio developer and Architecture (ALSA) for MIDI, are the main user community in particular and the public in infrastructures to evolve as a fairly-featured Linux general. Desktop Audio Workstation GUI, specially dedicated to the personal home-studio. 2 Installation Keywords 2.1 Requirements Linux, audio, MIDI, JACK, ALSA, multi-track, The software requirements for build and run- recording, sequencer time are listed as follows: 1 Introduction Mandatory: As a new Linux Audio offering, Qtractor targets ● Qt4 (core, gui, xml) [2] and positions itself comfortably tagged as for the ● JACK [3] techno-boy bedroom home-studio. However, in ● ALSA [4] general, it is just yet another digital audio and ● libsndfile[5] MIDI multi-track composition and arranger software application. The design and functionality Optional (opted-in at build time): model takes as fundamental the now usual multi- track composing techniques for modern music- ● libvorbis (enc, file) [6] making. It aims to be intuitive and easy to use. ● libmad [7] Unfortunately, for the classic and erudite ● libsamplerate [8] musician, there are and will be no plans to integrate any kind of score editor.