PETSc Users Manual Revision 3.4

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

Emacspeak — the Complete Audio Desktop User Manual

Emacspeak: The Complete Audio Desktop User Manual. T. V. Raman. Last Updated: 19 November 2016

Copyright © 1994–2016 T. V. Raman. All Rights Reserved. Permission is granted to make and distribute verbatim copies of this manual without charge provided the copyright notice and this permission notice are preserved on all copies.

Short Contents

Emacspeak
1 Copyright
2 Announcing Emacspeak Manual 2nd Edition As An Open Source Project
3 Background
4 Introduction
5 Installation Instructions
6 Basic Usage
7 The Emacspeak Audio Desktop
8 Voice Lock
9 Using Online Help With Emacspeak
10 Emacs Packages
11 Running Terminal Based Applications
12 Emacspeak Commands And Options
13 Emacspeak Keyboard Commands
14 TTS Servers
15 Acknowledgments
16 Concept Index
17 Key Index

Table of Contents

Emacspeak
1 Copyright -

The Journal of AUUG Inc. Volume 25 • Number 3 September 2004

The Journal of AUUG Inc. Volume 25 • Number 3 September 2004

Features:
• mychart Charting System for Recreational Boats
• Lions Commentary, part 1
• Managing Debian
• SEQUENT: Asynchronous Distributed Data Exchange Framework

News:
• Minutes to AUUG board meeting of 5 May 2004
• Liberal license for ancient UNIX sources
• First Australian UNIX Developer’s Symposium: CFP
• First Digital Pest Symposium

Regulars:
• Editorial
• President’s Column
• About AUUGN
• My Home Network
• AUUG Corporate Members
• A Hacker’s Diary
• Letters to AUUG
• Chapter Meetings and Contact Details
• AUUG Membership Application Form

ISSN 1035-7521. Print post approved by Australia Post - PP2391500002

AUUGN, the journal of AUUG Inc. Volume 25, Number 3, September 2004

Editorial

Greg Lehey <[email protected]>

After last quarter's spectacularly late delivery of AUUGN, things are gradually getting back to normal. I had hoped to have this on your desk by the end of September, but it wasn't to be. Given that that was only a couple of weeks after the “July” edition, this doesn’t seem to be such a problem. I'm fully expecting to get the December issue out in time to keep you from boredom over the Christmas break.

For those newcomers who don't recall the “Lions Book”, this is the “Commentary on the Sixth Edition UNIX Operating System” that John Lions wrote for classes at UNSW back in 1977. Supposedly they were the most-photocopied of all UNIX-related documents. I had mislaid my photocopy, poor as it was (weren't they all?) some time earlier, so I was delighted to have an easy to read ver- -

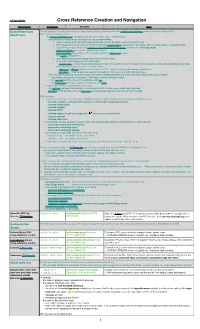

⅀ Xref Local PDF

⬉ Topic Index Cross Reference Creation and Navigation

Description | Keystroke | Function | Note

Cross References

Emacs provides several cross reference tools. Some tools are unified under the xref interface; others are independent. PEL supports several of them:

• The unified xref interface is available since Emacs version 25.1. This includes:
  • The xref unified interface can be used with various back-ends:
    1. major-mode specific load/interpretation backend (such as what is used for Emacs Lisp)
    2. Tags-based tools using an external TAGS file with the etags syntax supported by the etags utility and other etags-compatible tools:
      • etags (Emacs tags utility); see how to create TAGS files with etags, used by the xref-etag-mode.
      • Universal Ctags (successor of Exuberant Ctags)
      • GNU GLOBAL gtags utility with Universal Ctags and Pygments plugin.
      • The gxref xref back-end
    3. other specialized parser-based tools that do not use tags:
      • Programming language agnostic packages:
        • dumb-jump, a fast grep/ag/ripgrep-based engine to navigate in over 40 programming languages without tags/database index files.
      • Specialized packages for specific major modes:
        • rtag-xref, a RTags backend specialized for C/C++ code. It uses a client/server application.
        • info-xref, an internal package used to navigate into info document external references.
  • The xref unified interface can also be used with various front-end selectors when several entries are found for a search:
    • The default xref selector that uses a *xref* buffer to show all search results
    • The ivy-xref interface to select candidates with ivy
    • The helm-xref interface to select candidates with helm. -

How to Deal with Your Raspberry Spy

How To Deal With Your Raspberry Spy. Gavin L. Rebeiro. March 2, 2021

Cover

The artwork on the cover of this work is used under explicit written permission by the artist. Any copying, distributing or reproduction of this artwork without the same explicit permission is considered theft and/or misuse of intellectual and creative property.

• Artist: Cay
• Artist Contact Details: [email protected]
• Artist Portfolio: https://thecayart.wixsite.com/artwork/contact

These covers are here to spice things up a bit. Want to have your artwork showcased? Just send an email over to [email protected] and let us know! For encrypted communications, you can use the OpenPGP Key provided in chapter 6.

Copyright

• Author: Gavin L. Rebeiro
• Copyright Holder: Gavin L. Rebeiro, 2021
• Contact Author: [email protected]
• Publisher: E2EOPS PRESS LIMITED
• Contact Publisher: [email protected]

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License. For encrypted communications, you can use the OpenPGP Key provided in chapter 6.

Chapter 1 Acknowledgements

Techrights techrights.org (TR) deserves credit for coverage of the Raspberry Spy Foundation’s underhand tactics; a heartfelt thanks to everyone who participated and notified TR about the Raspberry Spy espionage. TR has been robbed of credit they deserved in the early days of news coverage. The following links go over some of the news coverage from TR:

• Raspberry Pi (at Least Raspbian GNU/Linux and/or Raspberry Pi Foundation) Appears to -

Experience with ANSI C Markup Language for a Cross-Referencer

Experience with ANSI C Markup Language for a cross-referencer

Hayato Kawashima and Katsuhiko Gondow, Department of Information Science, Japan Advanced Institute of Science and Technology (JAIST), 1-1 Asahidai Tatsunokuchi Nomi Ishikawa, 923-1292, JAPAN, {hayato-k, gondow}@jaist.ac.jp

Abstract

The purpose of this paper is twofold: (1) to examine the properties of our ANSI C Markup Language (ACML) as a domain-specific language (DSL); and (2) to show that ACML is useful as a DSL by implementing an ANSI C cross-referencer using ACML.

We have introduced ACML as a DSL for developing CASE tools. ACML is defined as a set of XML tags and attributes, and describes ANSI C program’s syntax trees, types, symbol tables, and so on. That is, ACML is the DSL which plays the role of intermediate representation among CASE tools. ACML-tagged documents are automatically generated from ANSI C programs, and then used as input of CASE tools.

duced ACML (ANSI C Markup Language) [6]. ACML is a kind of domain-specific language (DSL) to describe a common data format specific to ANSI C in the domain of CASE tools. That is, ACML is defined as a set of XML [1] tags and attributes, which describe ANSI C program’s syntax trees, types, symbol tables, and relationships among language constructs.

A DSL is a programming language dedicated to a particular domain or problem. For example, Lex and Yacc are used for lexers and parsers; VHDL for electronic hardware description. Furthermore, even markup languages can also be viewed as DSLs, because they can be good languages to describe some data’s structures, relationships and semantics specific to some particular domain, even though they are of -

Universal Ctags Documentation Release 0.3.0

Universal Ctags Documentation Release 0.3.0. Universal Ctags Team. 22 September 2021

Contents

1 Building ctags
1.1 Building with configure (*nix including GNU/Linux)
1.2 Building/hacking/using on MS-Windows
1.3 Building on Mac OS
2 Man pages
2.1 ctags
2.2 tags
2.3 ctags-optlib
2.4 ctags-client-tools
2.5 ctags-incompatibilities
2.6 ctags-faq
2.7 ctags-lang-inko
2.8 ctags-lang-iPythonCell
2.9 ctags-lang-julia
2.10 ctags-lang-python
2.11 ctags-lang-r
2.12 ctags-lang-sql
2.13 ctags-lang-tcl
2.14 ctags-lang-verilog
2.15 readtags
3 Parsers
3.1 Asm parser
3.2 CMake parser
3.3 The new C/C++ parser
3.4 The -

PETSc Users Manual Revision 3.7

ANL-95/11 Rev 3.7 PETSc Users Manual Revision 3.7 Mathematics and Computer Science Division About Argonne National Laboratory Argonne is a U.S. Department of Energy laboratory managed by UChicago Argonne, LLC under contract DE-AC02-06CH11357. The Laboratory’s main facility is outside Chicago, at 9700 South Cass Avenue, Argonne, Illinois 60439. For information about Argonne and its pioneering science and technology programs, see www.anl.gov. DOCUMENT AVAILABILITY Online Access: U.S. Department of Energy (DOE) reports produced after 1991 and a growing number of pre-1991 documents are available free via DOE's SciTech Connect (http://www.osti.gov/scitech/) Reports not in digital format may be purchased by the public from the National Technical Information Service (NTIS): U.S. Department of Commerce National Technical Information Service 5301 Shawnee Rd Alexandria, VA 22312 www.ntis.gov Phone: (800) 553-NTIS (6847) or (703) 605-6000 Fax: (703) 605-6900 Email: [email protected] Reports not in digital format are available to DOE and DOE contractors from the Office of Scientific and Technical Information (OSTI): U.S. Department of Energy Office of Scientific and Technical Information P.O. Box 62 Oak Ridge, TN 37831-0062 www.osti.gov Phone: (865) 576-8401 Fax: (865) 576-5728 Email: [email protected] Disclaimer This report was prepared as an account of work sponsored by an agency of the United States Government. Neither the United States Government nor any agency thereof, nor UChicago Argonne, LLC, nor any of their employees or officers, makes any warranty, express or implied, or assumes any legal liability or responsibility for the accuracy, completeness, or usefulness of any information, apparatus, product, or process disclosed, or represents that its use would not infringe privately owned rights. -

An Empirical Security Study of the Native Code in the JDK

An Empirical Security Study of the Native Code in the JDK

Gang Tan and Jason Croft, Boston College, [email protected], [email protected]

Abstract

It is well known that the use of native methods in Java defeats Java’s guarantees of safety and security, which is why the default policy of Java applets, for example, does not allow loading non-local native code. However, there is already a large amount of trusted native C/C++ code that comprises a significant portion of the Java Development Kit (JDK). We have carried out an empirical security study on a portion of the native code in Sun’s JDK 1.6. By applying static analysis tools and manual inspection, we have identified in this security-critical code previously undiscovered bugs. Based on our study, we describe a taxonomy to classify bugs. Our taxonomy provides guidance to construction of automated and accurate bug-finding tools. We also suggest systematic remedies that can mediate the threats posed by the native code.

1 Introduction

Since its birth in the mid 90s, Java has grown to be one of the most popular computing platforms. -

Java code

    class Vulnerable {
      //Declare a native method
      private native void bcopy(byte[] arr);
      public void byteCopy(byte[] arr) {
        //Call the native method
        bcopy(arr);
      }
      static {
        System.loadLibrary("Vulnerable");
      }
    }

C code

    #include <jni.h>
    #include "Vulnerable.h"
    JNIEXPORT void JNICALL Java_Vulnerable_bcopy
      (JNIEnv *env, jobject obj, jobject arr)
    {
      char buffer[512];
      jbyte *carr;
      carr = (*env)->GetByteArrayElements(env,arr,0);
      //Unbounded string copy to a local buffer
      strcpy(buffer, carr);

The Table of Equivalents / Replacements / Analogs of Windows Software in Linux

The table of equivalents / replacements / analogs of Windows software in Linux. Last update: 16.07.2003, 31.01.2005, 27.05.2005, 04.12.2006 You can always find the last version of this table on the official site: http://www.linuxrsp.ru/win-lin-soft/. This page on other languages: Russian, Italian, Spanish, French, German, Hungarian, Chinese. One of the biggest difficulties in migrating from Windows to Linux is the lack of knowledge about comparable software. Newbies usually search for Linux analogs of Windows software, and advanced Linux-users cannot answer their questions since they often don't know too much about Windows :). This list of Linux equivalents / replacements / analogs of Windows software is based on our own experience and on the information obtained from the visitors of this page (thanks!). This table is not static since new application names can be added to both left and the right sides. Also, the right column for a particular class of applications may not be filled immediately. In future, we plan to migrate this table to the PHP/MySQL engine, so visitors could add the program themselves, vote for analogs, add comments, etc. If you want to add a program to the table, send mail to winlintable[a]linuxrsp.ru with the name of the program, the OS, the description (the purpose of the program, etc), and a link to the official site of the program (if you know it). All comments, remarks, corrections, offers and bugreports are welcome - send them to winlintable[a]linuxrsp.ru. Notes: 1) By default all Linux programs in this table are free as in freedom. -

GNU Automake for Version 1.7.8, 7 September 2003

GNU Automake. For version 1.7.8, 7 September 2003. David MacKenzie and Tom Tromey

Copyright © 1995, 1996, 2000, 2001, 2002, 2003 Free Software Foundation, Inc. This is the first edition of the GNU Automake documentation, and is consistent with GNU Automake 1.7.8.

Published by the Free Software Foundation, 59 Temple Place - Suite 330, Boston, MA 02111-1307 USA

Permission is granted to make and distribute verbatim copies of this manual provided the copyright notice and this permission notice are preserved on all copies. Permission is granted to copy and distribute modified versions of this manual under the conditions for verbatim copying, provided that the entire resulting derived work is distributed under the terms of a permission notice identical to this one. Permission is granted to copy and distribute translations of this manual into another language, under the above conditions for modified versions, except that this permission notice may be stated in a translation approved by the Free Software Foundation.

1 Introduction

Automake is a tool for automatically generating ‘Makefile.in’s from files called ‘Makefile.am’. Each ‘Makefile.am’ is basically a series of make variable definitions, with rules being thrown in occasionally. The generated ‘Makefile.in’s are compliant with the GNU Makefile standards. The GNU Makefile Standards Document (see section “Makefile Conventions” in The GNU Coding Standards) is long, complicated, and subject to change. The goal of Automake is to remove the burden of Makefile maintenance from the back of the individual GNU maintainer (and put it on the back of the Automake maintainer). -

Manual.Pdf Will Be Located in the Latex Directory of the Distribution

Manual for version 1.8.7. Written by Dimitri van Heesch ©1997-2014

Contents

I User Manual
1 Introduction
2 Installation
2.1 Compiling from source on UNIX
2.2 Installing the binaries on UNIX
2.3 Known compilation problems for UNIX
2.4 Compiling from source on Windows
2.5 Installing the binaries on Windows
2.6 Tools used to develop doxygen
3 Getting Started
3.1 Step 0: Check if doxygen supports your programming language
3.2 Step 1: Creating a configuration file
3.3 Step 2: Running doxygen
3.3.1 HTML output
3.3.2 LaTeX output
3.3.3 RTF output
3.3.4 XML output
3.3.5 Man page output
3.3.6 DocBook output
3.4 Step 3: Documenting the sources
4 Documenting the code
4.1 Special comment blocks
4.1.1 Comment blocks for C-like languages (C/C++/C#/Objective-C/PHP/Java)
4.1.1.1 Putting documentation after members
4.1.1.2 -

GNU Automake for Version 1.15, 31 December 2014

GNU Automake. For version 1.15, 31 December 2014. David MacKenzie, Tom Tromey, Alexandre Duret-Lutz, Ralf Wildenhues, Stefano Lattarini

This manual is for GNU Automake (version 1.15, 31 December 2014), a program that creates GNU standards-compliant Makefiles from template files.

Copyright © 1995-2014 Free Software Foundation, Inc. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.3 or any later version published by the Free Software Foundation; with no Invariant Sections, with no Front-Cover texts, and with no Back-Cover Texts. A copy of the license is included in the section entitled “GNU Free Documentation License.”

Table of Contents

1 Introduction
2 An Introduction to the Autotools
2.1 Introducing the GNU Build System
2.2 Use Cases for the GNU Build System
2.2.1 Basic Installation
2.2.2 Standard Makefile Targets
2.2.3 Standard Directory Variables
2.2.4 Standard Configuration Variables
2.2.5 Overriding Default Configuration Setting with config.site
2.2.6 Parallel Build Trees (a.k.a. VPATH Builds)
2.2.7 Two-Part Installation
2.2.8 Cross-Compilation