A Method of Merging VMware Disk Images Through File System Unification by Sarah X

Total Pages: 16

File Type:pdf, Size:1020Kb


Recommended publications


Membrane: Operating System Support for Restartable File Systems Swaminathan Sundararaman, Sriram Subramanian, Abhishek Rajimwale, Andrea C

Membrane: Operating System Support for Restartable File Systems

Swaminathan Sundararaman, Sriram Subramanian, Abhishek Rajimwale, Andrea C. Arpaci-Dusseau, Remzi H. Arpaci-Dusseau, Michael M. Swift. Computer Sciences Department, University of Wisconsin, Madison

Abstract: We introduce Membrane, a set of changes to the operating system to support restartable file systems. Membrane allows an operating system to tolerate a broad class of file system failures and does so while remaining transparent to running applications; upon failure, the file system restarts, its state is restored, and pending application requests are serviced as if no failure had occurred. Membrane provides transparent recovery through a lightweight logging and checkpoint infrastructure, and includes novel techniques to improve performance and correctness of its fault-anticipation and recovery machinery. We tested Membrane with ext2, ext3, and VFAT. Through experimentation, we show that Membrane induces little performance overhead and can tolerate a wide range of file system crashes.

... and most complex code bases in the kernel. Further, file systems are still under active development, and new ones are introduced quite frequently. For example, Linux has many established file systems, including ext2 [34], ext3 [35], reiserfs [27], and still there is great interest in next-generation file systems such as Linux ext4 and btrfs. Thus, file systems are large, complex, and under development, the perfect storm for numerous bugs to arise. Because of the likely presence of flaws in their implementation, it is critical to consider how to recover from file system crashes as well. Unfortunately, we cannot directly apply previous work from the device-driver literature to improving file-system fault recovery. File systems, unlike device drivers, are extremely stateful, as they manage vast amounts of both in-memory and persistent data;
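The excerpt above says recovery relies on logging and checkpointing, so that state is restored after a restart and requests are serviced as if no failure had occurred. The following is only a toy sketch of that general checkpoint-plus-replay idea, not Membrane's actual mechanism: the class `RestartableStore` and all of its methods are hypothetical names invented for illustration, and an in-memory dictionary stands in for file system state.

```python
import copy


class RestartableStore:
    """Toy key-value 'file system' that recovers via checkpoint + log replay.

    Hypothetical illustration only; it does not model Membrane's kernel design.
    """

    def __init__(self):
        self.state = {}        # live in-memory state
        self._checkpoint = {}  # last consistent snapshot
        self._log = []         # operations applied since the snapshot

    def write(self, path, data):
        # Record the operation before applying it, so it can be replayed.
        self._log.append((path, data))
        self.state[path] = data

    def checkpoint(self):
        # Snapshot the current state; earlier log entries are now redundant.
        self._checkpoint = copy.deepcopy(self.state)
        self._log.clear()

    def crash(self):
        # Simulate a failure that destroys the live in-memory state.
        self.state = None

    def recover(self):
        # Restore the snapshot, then replay every operation logged after it.
        self.state = copy.deepcopy(self._checkpoint)
        for path, data in self._log:
            self.state[path] = data


store = RestartableStore()
store.write("/a", "one")
store.checkpoint()
store.write("/b", "two")   # logged, but not yet part of any checkpoint
store.crash()
store.recover()
print(store.state)         # both writes are visible again after recovery
```

The write made after the checkpoint survives the simulated crash only because it was logged; dropping either the snapshot or the log would lose part of the state.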

Darwin Release 3.0.1

Using Darwin Release 3.0.1. Thinking Machines Corporation. First printing, May 1998.

The information in this document is subject to change without notice and should not be construed as a commitment by Thinking Machines Corporation. Thinking Machines reserves the right to make changes to any product described herein. Although the information in this document has been reviewed and is believed to be reliable, Thinking Machines Corporation assumes no liability for errors in this document. Thinking Machines does not assume any liability arising from the application or use of any information or product described herein.

Thinking Machines and Darwin are registered trademarks of Thinking Machines Corporation. Note: "Darwin" is a registered trademark of Thinking Machines Corporation in the United States. "Darwin" is a registered trademark of Science in Finance Ltd. in the United Kingdom. Therefore "Darwin" is not available from Thinking Machines Corporation in the United Kingdom. In the United Kingdom, Thinking Machines Corporation sells its product under the name "LoyaltyStream." HP-UX and HP-UX 10.20 are trademarks of Hewlett-Packard Company. INFORMIX is a trademark of Informix Software, Inc. InstallShield is a trademark of InstallShield Corporation. INTERSOLV is a trademark of INTERSOLV, Inc. Microsoft, Windows, Windows NT, and Windows 95 are trademarks of Microsoft Corporation. Oracle is a trademark of Oracle Corporation. Open Windows is a trademark of Sun Microsystems, Inc. Sun, Solaris, Sun Ultra, Ultra, and Sun Workstation are trademarks of Sun Microsystems, Inc. All SPARC trademarks are used under license and are trademarks or registered trademarks of SPARC International, Inc., in the United States and other countries. Products bearing SPARC trademarks are based upon an architecture developed by Sun Microsystems, Inc.

Cygwin User's Guide

Cygwin User’s Guide

Copyright © Cygwin authors

Permission is granted to make and distribute verbatim copies of this documentation provided the copyright notice and this permission notice are preserved on all copies. Permission is granted to copy and distribute modified versions of this documentation under the conditions for verbatim copying, provided that the entire resulting derived work is distributed under the terms of a permission notice identical to this one. Permission is granted to copy and distribute translations of this documentation into another language, under the above conditions for modified versions, except that this permission notice may be stated in a translation approved by the Free Software Foundation.

Contents

- 1 Cygwin Overview
  - 1.1 What is it?
  - 1.2 Quick Start Guide for those more experienced with Windows
  - 1.3 Quick Start Guide for those more experienced with UNIX
  - 1.4 Are the Cygwin tools free software?
  - 1.5 A brief history of the Cygwin project
  - 1.6 Highlights of Cygwin Functionality
    - 1.6.1 Introduction
    - 1.6.2 Permissions and Security
    - 1.6.3 File Access
    - 1.6.4 Text Mode vs. Binary Mode
    - 1.6.5 ANSI C Library
    - 1.6.6 Process Creation
      - 1.6.6.1 Problems with process creation
    - 1.6.7 Signals
    - 1.6.8 Sockets
    - 1.6.9 Select
  - 1.7 What’s new and what changed in Cygwin
    - 1.7.1 What’s new and what changed in 3.2

DropDMG 3.6.2 Manual

DropDMG 3.6.2 Manual. C-Command Software, c-command.com. February 16, 2021.

Contents

- 1 Introduction
  - 1.1 Feature List
- 2 Installing and Updating
  - 2.1 Requirements
  - 2.2 Installing DropDMG
  - 2.3 Updating From a Previous Version
  - 2.4 Reinstalling a Fresh Copy
  - 2.5 Uninstalling DropDMG
  - 2.6 Security & Privacy Access
- 3 Using DropDMG
  - 3.1 Basics
  - 3.2 Making a Bootable Device Image of a Hard Drive
  - 3.3 Backing Up Your Files to CD/DVD
  - 3.4 Burning Backups of CDs/DVDs
  - 3.5 Restoring Files and Disks
  - 3.6 Making Images With Background Pictures
  - 3.7 Protecting Your Files With Encryption
  - 3.8 Transferring Files Securely
  - 3.9 Sharing Licenses and Layouts
  - 3.10 Splitting a File or Folder Into Pieces
  - 3.11 Creating a DropDMG Quick Action
- 4 Menus
  - 4.1 The DropDMG Menu
    - 4.1.1 About DropDMG
    - 4.1.2 Software

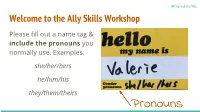

Welcome to the Ally Skills Workshop

@frameshiftllc

Welcome to the Ally Skills Workshop. Please fill out a name tag & include the pronouns you normally use. Examples: she/her/hers, he/him/his, they/them/theirs.

Ally Skills Workshop. Valerie Aurora, http://frameshiftconsulting.com/ally-skills-workshop/ (CC BY-SA Frame Shift Consulting LLC, Dr. Sheila Addison, The Ada Initiative)

Format of the workshop

- 30 minute introduction
- 45 minute group discussion of scenarios
- 15 minute break
- 75 minute group discussion of scenarios
- 15 minute wrap-up

~3 hours total

SO LONG! 2 hour-long workshop: most common complaint was "Too short!" 3 hour-long workshop: only a few complaints that it was too short. (https://flic.kr/p/7NYUA3 CC BY-SA Toshiyuki IMAI)

Valerie Aurora. Founder, Frame Shift Consulting. Taught ally skills to 2500+ people in Spain, Germany, Australia, Ireland, Sweden, Mexico, New Zealand, etc. Linux kernel and file systems developer for 10+ years.

Let’s talk about technical privilege. We are more likely to listen to people who "are technical" … but we shouldn’t be. "Technical" is more likely to be granted to white men. I am using my technical privilege to end technical privilege! (https://frYERZelic.kr/p/ CC BY @sage_solar)

What is an ally? Some terminology first:

- Privilege: an unearned advantage given by society to some people but not all
- Oppression: systemic, pervasive inequality that is present throughout society, that benefits people with more privilege and harms those with fewer privileges

UNIX Text Processing Tools: Programs for Sorting, Comparing, and in Various Ways Examining the Contents of Text Files

UNIX® TEXT PROCESSING. DALE DOUGHERTY AND TIM O’REILLY and the staff of O’Reilly & Associates, Inc. CONSULTING EDITORS: Stephen G. Kochan and Patrick H. Wood. HAYDEN BOOKS, A Division of Howard W. Sams & Company, 4300 West 62nd Street, Indianapolis, Indiana 46268 USA.

Copyright © 1987 Dale Dougherty and Tim O’Reilly. FIRST EDITION. SECOND PRINTING, 1988. INTERNET "UTP Revival" RELEASE, 2004. The UTP Revival Release is distributed according to the terms of the Creative Commons Attribution License. A copy of the license is available at http://creativecommons.org/licenses/by/1.0

International Standard Book Number: 0-672-46291-5. Library of Congress Catalog Card Number: 87-60537.

Trademark Acknowledgements. All terms mentioned in this book that are known to be trademarks or service marks are listed below. Neither the authors nor the UTP Revival members can attest to the accuracy of this information. Use of a term in this book should not be regarded as affecting the validity of any trademark or service mark. Apple is a registered trademark and Apple LaserWriter is a trademark of Apple Computer, Inc. devps is a trademark of Pipeline Associates, Inc. Merge/286 and Merge/386 are trademarks of Locus Computing Corp. DDL is a trademark of Imagen Corp. Helvetica and Times Roman are registered trademarks of Allied Corp. IBM is a registered trademark of International Business Machines Corp. Interpress is a trademark of Xerox Corp. LaserJet is a trademark of Hewlett-Packard Corp. Linotronic is a trademark of Allied Corp. Macintosh is a trademark licensed to Apple Computer, Inc. Microsoft is a registered trademark of Microsoft Corp. MKS Toolkit is a trademark of Mortice Kern Systems, Inc.

Symantec Web Security Service Policy Guide

Web Security Service Policy Guide. Version 6.10.4.1/OCT.12.2018.

Copyrights. Copyright © 2018 Symantec Corp. All rights reserved. Symantec, the Symantec Logo, the Checkmark Logo, Blue Coat, and the Blue Coat logo are trademarks or registered trademarks of Symantec Corp. or its affiliates in the U.S. and other countries. Other names may be trademarks of their respective owners. This document is provided for informational purposes only and is not intended as advertising. All warranties relating to the information in this document, either express or implied, are disclaimed to the maximum extent allowed by law. The information in this document is subject to change without notice. THE DOCUMENTATION IS PROVIDED "AS IS" AND ALL EXPRESS OR IMPLIED CONDITIONS, REPRESENTATIONS AND WARRANTIES, INCLUDING ANY IMPLIED WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE OR NON-INFRINGEMENT, ARE DISCLAIMED, EXCEPT TO THE EXTENT THAT SUCH DISCLAIMERS ARE HELD TO BE LEGALLY INVALID. SYMANTEC CORPORATION SHALL NOT BE LIABLE FOR INCIDENTAL OR CONSEQUENTIAL DAMAGES IN CONNECTION WITH THE FURNISHING, PERFORMANCE, OR USE OF THIS DOCUMENTATION. THE INFORMATION CONTAINED IN THIS DOCUMENTATION IS SUBJECT TO CHANGE WITHOUT NOTICE. Symantec Corporation, 350 Ellis Street, Mountain View, CA 94043, www.symantec.com

Symantec Web Security Service Policy Guide. The Symantec Web Security Service solutions provide real-time protection against web-borne threats. As a cloud-based product, the Web Security Service leverages Symantec's proven security technology as well as the WebPulse™ cloud community of over 75 million users.

A Novel Scheduling Framework Leveraging Hardware Cache Partitioning for Cache-Side-Channel Elimination in Clouds

A Novel Scheduling Framework Leveraging Hardware Cache Partitioning for Cache-Side-Channel Elimination in Clouds

Read Sprabery (University of Illinois, Urbana-Champaign), Konstantin Evchenko (University of Illinois, Urbana-Champaign), Abhilash Raj (Oregon State University), Rakesh B. Bobba (Oregon State University), Sibin Mohan (University of Illinois, Urbana-Champaign), Roy H. Campbell (University of Illinois, Urbana-Champaign)

ABSTRACT: While there exist many isolation mechanisms that are available to cloud service providers, including virtual machines, containers, etc., the problem of side-channel increases in importance as a remaining security vulnerability – particularly in the presence of shared caches and multicore processors. In this paper we present a hardware-software mechanism that improves the isolation of cloud processes in the presence of shared caches on multicore chips. Combining the Intel CAT architecture that enables cache partitioning on the fly with novel scheduling techniques and state cleansing mechanisms, we enable cache-side-channel free computing for Linux-based containers and virtual machines, in particular,

... on the Last-Level-Cache (LLC) that is shared across multiple cores [18, 23, 43, 44, 48] – these make defenses much harder. Many defenses against cache-side-channel attacks in cloud environments have also been proposed (e.g., [6, 19, 21, 22, 24, 25, 28, 31, 32, 37–40, 45, 51]). However, the proposed solutions suffer from a variety of drawbacks: (a) some are probabilistic [25, 37, 51]; (b) others do not protect applications when SMT is enabled [51]; (c) some require developers to re-write applications [19, 22, 31], (d) while others require hardware changes [39, 40] impacting deployability; (e) some depend on violating x86 semantics by modifying the resolution, accuracy or availability of timing instructions [21, 24, 38] and consequently require significant changes to the applications.

Filesystems HOWTO

Filesystems HOWTO

Martin Hinner, http://martin.hinner.info

Table of Contents

- 1. Introduction
- 2. Volumes
- 3. DOS FAT 12/16/32, VFAT
- 4. High Performance FileSystem (HPFS)
- 5. New Technology FileSystem (NTFS)
- 6. Extended filesystems (Ext, Ext2, Ext3)
- 7. Macintosh Hierarchical Filesystem - HFS
- 8. ISO 9660 - CD-ROM filesystem
- 9. Other filesystems

SGI™ ProPack 1.3 for Linux™ Start Here

SGI™ ProPack 1.3 for Linux™ Start Here. Document Number 007-4062-005.

© 1999-2000 Silicon Graphics, Inc. All Rights Reserved. The contents of this document may not be copied or duplicated in any form, in whole or in part, without the prior written permission of Silicon Graphics, Inc.

LIMITED AND RESTRICTED RIGHTS LEGEND. Use, duplication, or disclosure by the Government is subject to restrictions as set forth in the Rights in Data clause at FAR 52.227-14 and/or in similar or successor clauses in the FAR, or in the DOD, DOE or NASA FAR Supplements. Unpublished rights reserved under the Copyright Laws of the United States. Contractor/manufacturer is SGI, 1600 Amphitheatre Pkwy., Mountain View, CA 94043-1351.

Silicon Graphics is a registered trademark and SGI and SGI ProPack for Linux are trademarks of Silicon Graphics, Inc. Intel is a trademark of Intel Corporation. Linux is a trademark of Linus Torvalds. NCR is a trademark of NCR Corporation. NFS is a trademark of Sun Microsystems, Inc. Oracle is a trademark of Oracle Corporation. Red Hat is a registered trademark and RPM is a trademark of Red Hat, Inc. SuSE is a trademark of SuSE Inc. TurboLinux is a trademark of TurboLinux, Inc. UNIX is a registered trademark in the United States and other countries, licensed exclusively through X/Open Company, Ltd.

Contents

- List of Tables
- About This Guide
  - Reader Comments
- 1. Release Features
  - Feature Overview
  - Qualified Drivers
  - Patches and Changes to Base Linux Distributions
- 2.

“Add-On-Packages” in R Installation and Administration

R Installation and Administration. Version 2.15.3 Patched (2013-03-03). R Core Team.

Permission is granted to make and distribute verbatim copies of this manual provided the copyright notice and this permission notice are preserved on all copies. Permission is granted to copy and distribute modified versions of this manual under the conditions for verbatim copying, provided that the entire resulting derived work is distributed under the terms of a permission notice identical to this one. Permission is granted to copy and distribute translations of this manual into another language, under the above conditions for modified versions, except that this permission notice may be stated in a translation approved by the R Core Team.

Copyright © 2001-2012 R Core Team. ISBN 3-900051-09-7.

Table of Contents

- 1 Obtaining R
  - 1.1 Getting and unpacking the sources
  - 1.2 Getting patched and development versions
    - 1.2.1 Using Subversion and rsync
- 2 Installing R under Unix-alikes
  - 2.1 Simple compilation
  - 2.2 Help options
  - 2.3 Making the manuals
  - 2.4 Installation
  - 2.5 Uninstallation
  - 2.6 Sub-architectures
    - 2.6.1 Multilib

Symantec Web Security Service Policy Guide

Web Security Service Policy Guide. Revision: NOV.07.2020.

Copyrights. Broadcom, the pulse logo, Connecting everything, and Symantec are among the trademarks of Broadcom. The term “Broadcom” refers to Broadcom Inc. and/or its subsidiaries. Copyright © 2020 Broadcom. All Rights Reserved. For more information, please visit www.broadcom.com. Broadcom reserves the right to make changes without further notice to any products or data herein to improve reliability, function, or design. Information furnished by Broadcom is believed to be accurate and reliable. However, Broadcom does not assume any liability arising out of the application or use of this information, nor the application or use of any product or circuit described herein, neither does it convey any license under its patent rights nor the rights of others.

Symantec WSS Policy Guide. The Symantec Web Security Service solutions provide real-time protection against web-borne threats. As a cloud-based product, the Web Security Service leverages Symantec's proven security technology, including the WebPulse™ cloud community. With extensive web application controls and detailed reporting features, IT administrators can use the Web Security Service to create and enforce granular policies that are applied to all covered users, including fixed locations and roaming users. If the WSS is the body, then the policy engine is the brain. While the WSS by default provides malware protection (blocks four categories: Phishing, Proxy Avoidance, Spyware Effects/Privacy Concerns, and Spyware/Malware Sources), the additional policy rules and options you create dictate exactly what content your employees can and cannot access, from global allows/denials to individual users at specific times from specific locations.