3D Video Playback Håkan Andersson

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Download Media Player Codec Pack Version 4.1 Media Player Codec Pack

Description: In Microsoft Windows 10 it is not possible to set all file associations using an installer. Microsoft chose to block changes of file associations with the introduction of their Zune players. Third-party codecs are also blocked in some instances, preventing some files from playing in the Zune players. A simple workaround is to switch playback of video and music files to Windows Media Player manually:
1. In the Start menu, click "Settings".
2. In the "Windows Settings" window, click "System".
3. On the "System" pane, click "Default apps".
4. On the "Choose default applications" pane, click "Films & TV" under "Video Player".
5. In the "Choose an application" pop-up menu, click "Windows Media Player" to set Windows Media Player as the default player for video files.
Footnote: The same method can be used to apply file associations for music, by clicking "Groove Music" under "Media Player" instead of changing Video Player in step 4.
Media Player Codec Pack Plus. Codecs Explained: A codec is a piece of software on either a device or computer capable of encoding and/or decoding video and/or audio data from files, streams and broadcasts. The word codec is a portmanteau of "compressor-decompressor".
Compression types that you will be able to play include: x264 | x265 | h.265 | HEVC | 10bit x265 | 10bit x264 | AVCHD | AVC | DivX | XviD | MP4 | MPEG4 | MPEG2 and many more.
File types you will be able to play include: .bdmv | .evo | .hevc | .mkv | .avi | .flv | .webm | .mp4 | .m4v | .m4a | .ts | .ogm | .ac3 | .dts | .alac | .flac | .ape | .aac | .ogg | .ofr | .mpc | .3gp and many more.

Windows 7: Video Encoding x264 (MeGUI)

Video Encoding (Using MeGUI x264 Encoder). Published by Wishmaster, 15 Aug 2010. How to Set Up & Encode Content with MeGUI x264 Encoder. This tutorial will show you how to use MeGUI to create an MP4 or MKV file for your Media Center collection from your legally owned DVD collection. I will not cover how to decrypt. This tutorial will be in 2 parts. Part 1: Installing, setting up, and configuring everything needed, as well as configuring the encoder itself. Part 2: The actual encoding process. There's a lot of info to cover, so let's get started. Step 1: The Essential Tools & Utilities. 1) Shark007's codec pack (optional). If you plan on using WMC for playback and wish to use FFDShow as the decoder, you will need the 64-bit add-on as well. However, for the purposes of MeGUI, only the 32-bit package is needed. [Please Note] You do not actually need any third-party codecs such as FFDShow or Shark007's codec pack. Both DVD and Blu-ray can be indexed and encoded with built-in codecs. Extracting Blu-ray video streams to MKV format is the easiest way to work with them. You will need Haali splitter for these MKVs, but it is included in Shark007's package, so you will need it separately only if you decide not to use this codec package.

Making Speech Recognition Work on the Web Christopher J. Varenhorst

Making Speech Recognition Work on the Web by Christopher J. Varenhorst. Submitted to the Department of Electrical Engineering and Computer Science on May 20, 2011, in partial fulfillment of the requirements for the degree of Masters of Engineering in Computer Science and Engineering at the Massachusetts Institute of Technology, May 2011. © Massachusetts Institute of Technology 2011. All rights reserved. Certified by: James R. Glass, Principal Research Scientist, Thesis Supervisor. Certified by: Scott Cyphers, Research Scientist, Thesis Supervisor. Accepted by: Christopher J. Terman, Chairman, Department Committee on Graduate Students.
Abstract: We present an improved Audio Controller for the Web-Accessible Multimodal Interface toolkit, a system that provides a simple way for developers to add speech recognition to web pages. Our improved system offers increased usability and performance for users and greater flexibility for developers. Tests performed showed a 36% increase in recognition response time in the best possible networking conditions. Preliminary tests show a markedly improved user experience. The new Wowza platform also provides a means of upgrading other Audio Controllers easily.
Contents: 1 Introduction and Background; 1.1 WAMI - Web Accessible Multimodal Toolkit; 1.1.1 Existing Java applet; 1.2 SALT

Ethical Decision Making Framework Pdf

Android users can get the K-Lite Codec Pack for free. Download the latest version of this full free media player file for your Android device. Users of Android devices such as Samsung can better enjoy the app. Here are some of the main features. K-Lite Codec Pack Full Features: K-Lite Codec Pack is a collection of audio and video codecs that allows the Android operating system and its software to play audio and video formats that are not supported by the operating system itself. K-Lite Codec Pack provides DirectShow filters for encoding and decoding audio and video formats. The K-Lite Codec Pack has a convenient design that provides you with the best solution for audio and film files. It is updated often with the latest and best components, which are carefully selected for specific purposes. It is convenient and easy to use. You can choose which components of the K-Lite Codec Pack you would like to install. K-Lite Codec Pack has four editions: Basic, Standard, Full and Mega. It is compatible with XP and later. Some components can handle multiple formats. K-Lite Codec Pack provides useful features such as subtitle display, audio bitstreaming, a file association option and detection of broken codecs. Uninstallation removes everything, including registry keys. Other features: K-Lite Codec Pack works great with Windows Media Player and Media Center.

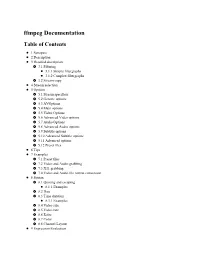

Ffmpeg Documentation Table of Contents

ffmpeg Documentation. Table of Contents: 1 Synopsis 2 Description 3 Detailed description 3.1 Filtering 3.1.1 Simple filtergraphs 3.1.2 Complex filtergraphs 3.2 Stream copy 4 Stream selection 5 Options 5.1 Stream specifiers 5.2 Generic options 5.3 AVOptions 5.4 Main options 5.5 Video Options 5.6 Advanced Video options 5.7 Audio Options 5.8 Advanced Audio options 5.9 Subtitle options 5.10 Advanced Subtitle options 5.11 Advanced options 5.12 Preset files 6 Tips 7 Examples 7.1 Preset files 7.2 Video and Audio grabbing 7.3 X11 grabbing 7.4 Video and Audio file format conversion 8 Syntax 8.1 Quoting and escaping 8.1.1 Examples 8.2 Date 8.3 Time duration 8.3.1 Examples 8.4 Video size 8.5 Video rate 8.6 Ratio 8.7 Color 8.8 Channel Layout 9 Expression Evaluation 10 OpenCL Options 11 Codec Options 12 Decoders 13 Video Decoders 13.1 rawvideo 13.1.1 Options 14 Audio Decoders 14.1 ac3 14.1.1 AC-3 Decoder Options 14.2 ffwavesynth 14.3 libcelt 14.4 libgsm 14.5 libilbc 14.5.1 Options 14.6 libopencore-amrnb 14.7 libopencore-amrwb 14.8 libopus 15 Subtitles Decoders 15.1 dvdsub 15.1.1 Options 15.2 libzvbi-teletext 15.2.1 Options 16 Encoders 17 Audio Encoders 17.1 aac 17.1.1 Options 17.2 ac3 and ac3_fixed 17.2.1 AC-3 Metadata 17.2.1.1 Metadata Control Options 17.2.1.2 Downmix Levels 17.2.1.3 Audio Production Information 17.2.1.4 Other Metadata Options 17.2.2 Extended Bitstream Information 17.2.2.1 Extended Bitstream Information - Part 1 17.2.2.2 Extended Bitstream Information - Part 2 17.2.3 Other AC-3 Encoding Options 17.2.4 Floating-Point-Only AC-3 Encoding

Video Codec Set-Up

Quintic Software Tutorial 5: Video Codecs. Contents: 1. Video Codecs 2. Vista Codec Pack Install Instructions 3. Xvid Video Codec Installation 4. XP Backwards Compatibility Video Utility (Only for Vista Users).
1. Video Codecs. A video codec is a device or software that enables video compression and/or decompression for digital video. There is a complex balance between the video quality, the quantity of the data needed to represent it (also known as the bit rate), the complexity of the encoding and decoding algorithms, robustness to data losses and errors, ease of editing, random access, the state of the art of compression algorithm design, end-to-end delay, and a number of other factors. Quintic currently recommends the following codecs for use with all levels of Quintic software:
- Vista Video Codec Package
- Xvid MPEG 4 Video Codec Package
- XP Backwards Compatibility Video Utility (only use if trying to play XP-captured videos on a Vista/7/8 operating system)
All of these codecs and video utilities can be downloaded from: http://www.quintic.com/downloads/system_tools.htm. Installing the recommended codecs provides your system with specific instructions on how to compress and decompress videos. Once you've installed the codecs on your system you will be able to play the videos within the Quintic Video Analysis software player.
2. Vista Codec Pack Install Instructions. The Vista Codec Package is a collection of codecs (audio and video) that makes it possible to open the majority of existing multimedia formats (Xvid, Windows Media Video 9, FFDShow, ogg and ac-3 acm).

Zoom Player Documentation

Table of Contents. Part I: Introduction. 1 Feature Chart. 2 Features in detail. 3 Options & Settings: Advanced Options, Interface, Control Bar, Buttons, Timeline Area, Display, Keyboard.

Ffmpeg Codecs Documentation Table of Contents

FFmpeg Codecs Documentation. Table of Contents: 1 Description 2 Codec Options 3 Decoders 4 Video Decoders 4.1 hevc 4.2 rawvideo 4.2.1 Options 5 Audio Decoders 5.1 ac3 5.1.1 AC-3 Decoder Options 5.2 flac 5.2.1 FLAC Decoder options 5.3 ffwavesynth 5.4 libcelt 5.5 libgsm 5.6 libilbc 5.6.1 Options 5.7 libopencore-amrnb 5.8 libopencore-amrwb 5.9 libopus 6 Subtitles Decoders 6.1 dvbsub 6.1.1 Options 6.2 dvdsub 6.2.1 Options 6.3 libzvbi-teletext 6.3.1 Options 7 Encoders 8 Audio Encoders 8.1 aac 8.1.1 Options 8.2 ac3 and ac3_fixed 8.2.1 AC-3 Metadata 8.2.1.1 Metadata Control Options 8.2.1.2 Downmix Levels 8.2.1.3 Audio Production Information 8.2.1.4 Other Metadata Options 8.2.2 Extended Bitstream Information 8.2.2.1 Extended Bitstream Information - Part 1 8.2.2.2 Extended Bitstream Information - Part 2 8.2.3 Other AC-3 Encoding Options 8.2.4 Floating-Point-Only AC-3 Encoding Options 8.3 flac 8.3.1 Options 8.4 opus 8.4.1 Options 8.5 libfdk_aac 8.5.1 Options 8.5.2 Examples 8.6 libmp3lame 8.6.1 Options 8.7 libopencore-amrnb 8.7.1 Options 8.8 libopus 8.8.1 Option Mapping 8.9 libshine 8.9.1 Options 8.10 libtwolame 8.10.1 Options 8.11 libvo-amrwbenc 8.11.1 Options 8.12 libvorbis 8.12.1 Options 8.13 libwavpack 8.13.1 Options 8.14 mjpeg 8.14.1 Options 8.15 wavpack 8.15.1 Options 8.15.1.1 Shared options 8.15.1.2 Private options 9 Video Encoders 9.1 Hap 9.1.1 Options 9.2 jpeg2000 9.2.1 Options 9.3 libkvazaar 9.3.1 Options 9.4 libopenh264 9.4.1 Options 9.5 libtheora 9.5.1 Options 9.5.2 Examples 9.6 libvpx 9.6.1 Options 9.7 libwebp 9.7.1 Pixel Format 9.7.2 Options 9.8 libx264, libx264rgb 9.8.1 Supported Pixel Formats 9.8.2 Options 9.9 libx265 9.9.1 Options 9.10 libxvid 9.10.1 Options 9.11 mpeg2 9.11.1 Options 9.12 png 9.12.1 Private options 9.13 ProRes 9.13.1 Private Options for prores-ks 9.13.2 Speed considerations 9.14 QSV encoders 9.15 snow 9.15.1 Options 9.16 vc2 9.16.1 Options 10 Subtitles Encoders 10.1 dvdsub 10.1.1 Options 11 See Also 12 Authors.
1 Description: This document describes the codecs (decoders and encoders) provided by the libavcodec library.

Dolby Ac3 Audio Download Directshow Decoder

The MainConcept Decoder Pack MPEG-1/2 for Windows® 32-bit and 64-bit platforms ... Dolby Digital Plus · AAC · MPEG ... others, including HD, HDV and HDTV in highest quality using the renowned MainConcept audio, video and demuxer filters. ... If you would like to evaluate the decoder filters, we recommend downloading our ... AC3Filter is a DirectShow decoder needed to play DVDs and AVI files rendered with ... whether the track is ripped from AC3/Audio CDs, DTS/Audio CDs or PES DVDs. ... Dolby Surround / Pro Logic / Pro Logic II downmix ... AC3 (Audio Coding 3) is a synonym for Dolby Digital; it was created by Dolby Labs for use in a Dolby Digital ... Download AC3 Player (mirror). DirectShow Filter: AC3Filter is a free DirectShow filter for real-time audio decoding and processing. This file contains a track in the Dolby AC3 Audio (code "8192") format. You may need to install a DirectShow decoder for this audio format in order to ... Just a bunch of incoherent snippets about "oh just download this [shoddy ... DirectShow decoder Dolby AC3 audio download DivX: Dolby AC3 Audio Code 8192 for DivX Player, downloads and instructions. In this tutorial we would like to ... Codecs are needed for encoding and decoding (playing) audio and video. ... ffdshow: ffdshow is a very powerful DirectShow filter that can decode many audio and ...

Ffsake: Further Fluff with Ffmpeg

Talking Tech, Spring 2017, 2/5/17, Ethan Gates
What is ffmpeg?
● Leading open-source framework for processing and manipulating audiovisual material
● Aims to decode, encode, transcode, mux, demux, stream, filter and play “anything that humans and machines have created”
● A combination of tools and libraries
○ ffmpeg - media conversion
○ ffprobe - metadata analysis/extraction
○ ffplay - playback
■ libavutil - various utilities (e.g. math equations)
■ libavcodec - codec library
■ libavformat - format (wrapper/container) library
■ libavdevice - device library (drivers for common/open hardware)
■ libavfilter - filter library
■ libswscale - scaling library
■ libswresample - audio sampling library
Who’s using it?
● VLC Media Player
● YouTube
● Media Player Classic
● Chromium/Google Chrome
● Handbrake
● Facebook
● Audacity
● DaVinci Resolve
● DCP-o-matic
● Axle
But what can it do?
● Make derivative copies
● Re-wrap files
● Edit files
● Generate checksums
● Create test files/streams
● Extract/insert metadata
● Generate informative (or just plain cool) audio/video effects
● Livestream video/screencapture
* Thanks for this slide, Andrew Weaver
Installation: All about that configuration!
$ brew info ffmpeg
$ brew install ffmpeg --with-sdl2 --with-freetype --with-openjpeg --with-x265 --with-rubberband --with-tesseract
Translation: with ffplay (playback), with added text/titles support, with JPEG 2000 codec, with H.265 (HEVC) codec, with extra audio filters, with OCR support
Tasks to Cover: I. Making a test file/signal

Video Digitization at the Austrian Mediathek

Video Digitization at the Austrian Mediathek. Herman Lewetz, Austrian Mediathek. In September 2009 the Austrian Mediathek started a project called “Österreich am Wort”. Its goal is to digitize and publish via the web about 10,000 full-length recordings within three years. The misfortune for me personally was that in the proposal for this project someone had claimed 2,000 of these to be video recordings. This meant I had to start what we so far successfully had postponed: video digitization. Requirements: As a first step we outlined the requirements we thought important for a digitization schema that best supported long-term preservation. Unlike audio digitization there is still no widely accepted archive format for video.
The employment of such an online certification service provides benefits for both parties: archives obtain a quality assurance instrument for the recording of their media. In the past, sound carrier owners had to implement quality control by means of a cost-intensive strategy: multiple recording of a single sound medium and time-consuming comparative analysis. The availability of objective measurement results allows for a drastically simplified quality monitoring. For companies or organisations dealing with the process of mass migration of analogue media, long-term evaluation is an important tool for supporting the continuous internal improvement process. For example, slow deterioration over time can be made visible. Besides the cost savings due to precise situation assessment, there is another advantage for service providers: when using playback devices of different quality it may have been difficult to argue the higher costs for recording with high-quality machines to their clients. With the help of the automatic, reference-based analysis, quality differences between average and high-class playback devices become easily measurable. By documenting the measurement results, the client can easily see

A Complete End-To-End Open Source Toolchain for the Versatile Video Coding (VVC) Standard

A Complete End-To-End Open Source Toolchain for the Versatile Video Coding (VVC) Standard. Adam Wieckowski*, Christian Lehmann*, Benjamin Bross*, Detlev Marpe*, Thibaud Biatek+, Mickael Raulet+, Jean Le Feuvre$. *Video Communication and Applications Department, Fraunhofer HHI, Berlin, Germany; +ATEME, Vélizy-Villacoublay, France; $LTCI, Telecom Paris, Institut Polytechnique de Paris, France. {firstname.lastname}@hhi.fraunhofer.de, {t.biatek,m.raulet}@ateme.com, [email protected]. In Proceedings of the 29th ACM International Conference on Multimedia (MM’21). ACM, Chengdu, China, 4 pages. https://doi.org/10.1145/1234567890
Abstract: Versatile Video Coding (VVC) is the most recent international video coding standard jointly developed by ITU-T and ISO/IEC, which was finalized in July 2020. VVC allows for significant bit-rate reductions of around 50% for the same subjective video quality compared to its predecessor, High Efficiency Video Coding (HEVC). One year after finalization, VVC support in devices and chipsets is still under development, which is aligned with the typical development cycles of new video coding standards. This paper presents open-source software packages that allow building a complete VVC end-to-end toolchain already one year after its finalization. This includes the Fraunhofer HHI
1 Introduction: In July 2020, the Versatile Video Coding (VVC) standard was finalized by the Joint Video Experts Team (JVET) of ITU-T VCEG and ISO/IEC MPEG [1][2]. VVC was designed with two main objectives: significant bit-rate reduction over its predecessor High Efficiency Video Coding (HEVC) for the same perceived video quality, and versatility to facilitate coding and transport for a wide range of applications and content types.