Ffsake: Further Fluff with Ffmpeg

Recommended publications

Download Media Player Codec Pack Version 4.1 Media Player Codec Pack

Description: In Microsoft Windows 10 it is not possible to set all file associations using an installer. Microsoft chose to block changes of file associations with the introduction of their Zune players. Third-party codecs are also blocked in some instances, preventing some files from playing in the Zune players. A simple workaround for this problem is to switch playback of video and music files to Windows Media Player manually: (1) In the Start menu, click "Settings". (2) In the "Windows Settings" window, click "System". (3) On the "System" pane, click "Default apps". (4) On the "Choose default applications" pane, click "Films & TV" under "Video Player". (5) On the "Choose an application" pop-up menu, click "Windows Media Player" to set Windows Media Player as the default player for video files. Footnote: The same method can be used to apply file associations for music, by clicking "Groove Music" under "Media Player" instead of changing Video Player in step 4. Media Player Codec Pack Plus. Codecs Explained: A codec is a piece of software on either a device or computer capable of encoding and/or decoding video and/or audio data from files, streams and broadcasts. The word codec is a portmanteau of "compressor-decompressor". Compression types that you will be able to play include: x264 | x265 | h.265 | HEVC | 10bit x265 | 10bit x264 | AVCHD | AVC | DivX | XviD | MP4 | MPEG4 | MPEG2 and many more. File types you will be able to play include: .bdmv | .evo | .hevc | .mkv | .avi | .flv | .webm | .mp4 | .m4v | .m4a | .ts | .ogm | .ac3 | .dts | .alac | .flac | .ape | .aac | .ogg | .ofr | .mpc | .3gp and many more.

Making Speech Recognition Work on the Web Christopher J. Varenhorst

Making Speech Recognition Work on the Web, by Christopher J. Varenhorst. Submitted to the Department of Electrical Engineering and Computer Science on May 20, 2011, in partial fulfillment of the requirements for the degree of Masters of Engineering in Computer Science and Engineering at the Massachusetts Institute of Technology, May 2011. © Massachusetts Institute of Technology 2011. All rights reserved. Certified by James R. Glass, Principal Research Scientist, Thesis Supervisor, and Scott Cyphers, Research Scientist, Thesis Supervisor. Accepted by Christopher J. Terman, Chairman, Department Committee on Graduate Students. Abstract: We present an improved Audio Controller for the Web-Accessible Multimodal Interface toolkit, a system that provides a simple way for developers to add speech recognition to web pages. Our improved system offers increased usability and performance for users and greater flexibility for developers. Tests performed showed a 36% increase in recognition response time in the best possible networking conditions. Preliminary tests show a markedly improved user experience. The new Wowza platform also provides a means of upgrading other Audio Controllers easily. Contents: 1 Introduction and Background 1.1 WAMI - Web Accessible Multimodal Toolkit 1.1.1 Existing Java applet 1.2 SALT

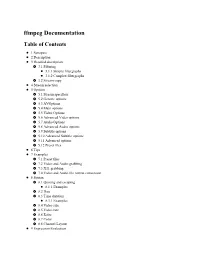

Ffmpeg Documentation Table of Contents

1 Synopsis 2 Description 3 Detailed description 3.1 Filtering 3.1.1 Simple filtergraphs 3.1.2 Complex filtergraphs 3.2 Stream copy 4 Stream selection 5 Options 5.1 Stream specifiers 5.2 Generic options 5.3 AVOptions 5.4 Main options 5.5 Video Options 5.6 Advanced Video options 5.7 Audio Options 5.8 Advanced Audio options 5.9 Subtitle options 5.10 Advanced Subtitle options 5.11 Advanced options 5.12 Preset files 6 Tips 7 Examples 7.1 Preset files 7.2 Video and Audio grabbing 7.3 X11 grabbing 7.4 Video and Audio file format conversion 8 Syntax 8.1 Quoting and escaping 8.1.1 Examples 8.2 Date 8.3 Time duration 8.3.1 Examples 8.4 Video size 8.5 Video rate 8.6 Ratio 8.7 Color 8.8 Channel Layout 9 Expression Evaluation 10 OpenCL Options 11 Codec Options 12 Decoders 13 Video Decoders 13.1 rawvideo 13.1.1 Options 14 Audio Decoders 14.1 ac3 14.1.1 AC-3 Decoder Options 14.2 ffwavesynth 14.3 libcelt 14.4 libgsm 14.5 libilbc 14.5.1 Options 14.6 libopencore-amrnb 14.7 libopencore-amrwb 14.8 libopus 15 Subtitles Decoders 15.1 dvdsub 15.1.1 Options 15.2 libzvbi-teletext 15.2.1 Options 16 Encoders 17 Audio Encoders 17.1 aac 17.1.1 Options 17.2 ac3 and ac3_fixed 17.2.1 AC-3 Metadata 17.2.1.1 Metadata Control Options 17.2.1.2 Downmix Levels 17.2.1.3 Audio Production Information 17.2.1.4 Other Metadata Options 17.2.2 Extended Bitstream Information 17.2.2.1 Extended Bitstream Information - Part 1 17.2.2.2 Extended Bitstream Information - Part 2 17.2.3 Other AC-3 Encoding Options 17.2.4 Floating-Point-Only AC-3 Encoding

© 2019 Jimi Jones

© 2019 Jimi Jones. So Many Standards, So Little Time: A History and Analysis of Four Digital Video Standards, by Jimi Jones. Dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Library and Information Science in the Graduate College of the University of Illinois at Urbana-Champaign, 2019. Urbana, Illinois. Doctoral Committee: Associate Professor Jerome McDonough, Chair; Associate Professor Lori Kendall; Assistant Professor Peter Darch; Professor Howard Besser, New York University. Abstract: This dissertation focuses on standards for digital video - the social aspects of their design and the sociotechnical forces that drive their development and adoption. This work is a history and analysis of how the MXF, JPEG 2000, FFV1 and Matroska standards have been adopted and/or adapted by libraries and archives of different sizes. Well-funded institutions often have the resources to develop tailor-made specifications for the digitization of their analog video objects. Digital video standards and specifications of this kind are often derived from the needs of the cinema production and television broadcast realms in the United States and may be unsuitable for smaller memory institutions that are resource-poor and/or lack staff with the knowledge to implement these technologies. This research seeks to provide insight into how moving image preservation professionals work with - and sometimes against - broadcast and film production industries in order to produce and/or implement standards governing video formats and encodings. This dissertation describes the transition of four digital video standards from niches to widespread use in libraries and archives. It also examines the effects these standards produce on cultural heritage video preservation by interviewing people who implement the standards as well as people who develop them.

Download Video Player for Pc 10 Best and Free Video Players for Windows 10 PC in 2021

10 Best and Free Video Players for Windows 10 PC in 2021. We all love to watch TV shows and movies on our computers. Since computers are more preferred nowadays, more and more streaming sites are popping up on the web. However, not everyone loves to stream videos, because video streaming can be expensive: we first need a proper internet connection and a subscription to the streaming service. In this case, downloading videos seems to be the best option, as we spend the internet data once rather than streaming the video again and again. But what comes after downloading the video? Is that enough to get the best media experience? Well, no! To get the best video experience, we need to use the best media player. The media player is the only thing that decides our media consumption experience, so having a good media player app is the most vital thing for media consumption. In this article, we will share the list of the best free video players for Windows. List of 10 Best and Free Video Players for Windows 10. These video players are free to download, and you can use them to get the most amazing video watching experience. So, let's explore the ten best free video players for Windows. 1. Media Player Classic. If you are searching for a powerful video player tool for the Windows 10 operating system, you need to give Media Player Classic a try. Guess what? The tool provides users with lots of customization options.

Movavi Screen Capture 7

Movavi Screen Capture 7. Don't know where to start? Read these tutorials: Recording screen: Capture any fragment of your screen or the full desktop. Capturing online video: Record video from online video websites such as YouTube or Vimeo. Capturing video from players: Record any video in your player. Capturing Skype calls: Record Skype calls with sound from both ends. Recording only audio: Record any sound from a device or music on your computer. Need more help? Write to us at [email protected] Table of Contents: Removing trial restrictions; Getting an activation key; Activating Screen Capture; Activating without Internet access; Tutorials

Lesson 5: Multimedia on the Web

Lesson 5: Multimedia on the Web. Objectives: By the end of this lesson, you will be able to: 1.5.7: Download and store files using a Web browser. 1.5.10: Install and upgrade common plug-ins, add-ons and viewers (e.g., Adobe Reader, Adobe Flash Player, Microsoft Silverlight, Windows Media Player, Apple QuickTime) and identify their common file name extensions. 1.5.11: Use document and multimedia file formats, including PDF, PNG, RTF, PostScript (PS), AVI, MPEG, MP3, MP4, Ogg. Convert between file formats when appropriate. Internet Business Associate. Pre-Assessment Questions: 1. Briefly describe C++. 2. Which statement about vector graphics is true? a. Vector graphics are saved as sequences of vector statements. b. Vector graphics have much larger file sizes than raster graphics. c. Vector graphics are pixel-based. d. GIFs and JPGs are vector graphics. 3. Name at least two examples of browser plug-ins. © 2014 Certification Partners, LLC. All Rights Reserved. Version 2.1. Introduction to Multimedia on the Web: Multimedia on the Web has expanded rapidly as broadband connections have allowed users to connect at faster speeds. Almost all Web sites, including corporate sites, feature multimedia content and interactive objects. For instance, employee orientation sessions, audio and video memos, and training materials are often placed on the Internet or corporate intranets. Nearly all network-connected devices, such as PCs, tablets, smartphones and smart TVs, can view online interactive multimedia. NOTE: Multimedia use on the Web has been hindered by bandwidth limitations. Until all Internet users have high-speed connections (broadband or

Free Mpc Download MPC-HC (Media Player Classic) 1.9.14

MPC-HC (Media Player Classic) 1.9.14. MPC-HC is a lightweight, open source media player. It supports most video and audio file formats out there. The player supports all common video and audio file formats available for playback. Most importantly, we're completely free: 0 spyware, 0 ads and no user tracking. It has a built-in DVD player with real-time zoom, support for AVI subtitles, QuickTime and RealVideo support (requires QuickTime and/or Real Player), and a built-in MPEG2/SVCD/DVD codec. Media Player Classic was created and is currently maintained by a programmer named "Gabest". This is a mod of Media Player Classic designed for home cinema usage. Watch movies on any SSE CPU, even on your old computer back from '99. With its wide array of options, MPC-HC can be customized to fit almost any needs. Among other things we added custom toolbars. MPC-HC can also be used as a DVB player. Note: The original MPC-HC was abandoned by developers in 2017 but this version carries on the legacy. Media Player Classic. Media Player Classic is an open-source media player for 32-bit and 64-bit versions of Windows operating systems. MPC-HC is capable of playing Video CD, Super Video CD, and other digital optical disc storage formats automatically; all necessary codecs have been installed in the program. The program and its source code are based on the K-lite Codec Pack and the Combined Community Codec Pack.

Recommended File Formats for Long-Term Archiving and for Web Dissemination in Phaidra

Edited by Gianluca Drago, May 2019. License: https://creativecommons.org/licenses/by-nc-sa/4.0/ Premise: This document is intended to provide an overview of the file formats to be used depending on two possible destinations of the digital document: (1) long-term archiving; (2) uploading to Phaidra and subsequent web dissemination. When the document uploaded to Phaidra is also the only saved file, the two destinations end up coinciding, but in general one will probably want to produce two different files, in two different formats, so as to meet the differences in requirements and use in the final destinations. In the following tables, the recommendations for long-term archiving are distinct from those for dissemination in Phaidra. There are no absolute criteria for choosing the file format. The choice is always dependent on different evaluations that the person who is carrying out the archiving will have to make on a case by case basis and will often result in a compromise between the best achievable quality and the limits imposed by the costs of production, processing and storage of files, as well as, for the preceding, by the opportunity of a conversion to a new format. This choice is particularly significant from the perspective of long-term archiving, for which a quality that respects the authenticity and integrity of the original document and a format that guarantees long-term access to data are desirable. This document should be seen more as an aid to the reasoned choice of the person carrying out the archiving than as a list of guidelines to be followed to the letter.

FFV1 Video Codec Specification

FFV1 Video Codec Specification by Michael Niedermayer [email protected] Contents: 1 Introduction 2 Terms and Definitions 2.1 Terms 2.2 Definitions 3 Conventions 3.1 Arithmetic operators 3.2 Assignment operators 3.3 Comparison operators 3.4 Order of operation precedence 3.5 Range 3.6 Bitstream functions 4 General Description 4.1 Border 4.2 Median predictor 4.3 Context 4.4 Quantization 4.5 Colorspace 4.5.1 JPEG2000-RCT 4.6 Coding of the sample difference 4.6.1 Range coding mode 4.6.2 Huffman coding mode 5 Bitstream 5.1 Configuration Record 5.1.1 In AVI File Format 5.1.2 In ISO/IEC 14496-12 (MP4 File Format) 5.1.3 In NUT File Format

Pragmatic Audiovisual Preservation

Pragmatic Audiovisual Preservation, by Ashley Blewer. DPC Technology Watch Report, October 2020 (DOI: http://doi.org/10.7207/twr20-10). © Digital Preservation Coalition 2020 and Ashley Blewer 2020. ISSN: 2048-7916. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, without prior permission in writing from the publisher. The moral rights of the author have been asserted. First published in Great Britain in 2020 by the Digital Preservation Coalition. Foreword: The Digital Preservation Coalition (DPC) is an advocate and catalyst for digital preservation, ensuring our members can deliver resilient long-term access to digital content and services. It is a not-for-profit membership organization whose primary objective is to raise awareness of the importance of the preservation of digital material and the attendant strategic, cultural and technological issues. It supports its members through knowledge exchange, capacity building, assurance, advocacy and partnership. The DPC's vision is to make our digital memory accessible tomorrow. The DPC Technology Watch Reports identify, delineate, monitor and address topics that have a major bearing on ensuring our collected digital memory will be for the future. They provide an advanced introduction in order to support those charged with ensuring a robust digital memory, and they are of general interest to a wide and international audience with interests in computing, information management, collections management and technology. The reports are commissioned after consultation among DPC members about shared priorities and challenges; they are commissioned from experts; and they are thoroughly scrutinized by peers before being released.

Ffmpeg Codecs Documentation Table of Contents

1 Description 2 Codec Options 3 Decoders 4 Video Decoders 4.1 hevc 4.2 rawvideo 4.2.1 Options 5 Audio Decoders 5.1 ac3 5.1.1 AC-3 Decoder Options 5.2 flac 5.2.1 FLAC Decoder options 5.3 ffwavesynth 5.4 libcelt 5.5 libgsm 5.6 libilbc 5.6.1 Options 5.7 libopencore-amrnb 5.8 libopencore-amrwb 5.9 libopus 6 Subtitles Decoders 6.1 dvbsub 6.1.1 Options 6.2 dvdsub 6.2.1 Options 6.3 libzvbi-teletext 6.3.1 Options 7 Encoders 8 Audio Encoders 8.1 aac 8.1.1 Options 8.2 ac3 and ac3_fixed 8.2.1 AC-3 Metadata 8.2.1.1 Metadata Control Options 8.2.1.2 Downmix Levels 8.2.1.3 Audio Production Information 8.2.1.4 Other Metadata Options 8.2.2 Extended Bitstream Information 8.2.2.1 Extended Bitstream Information - Part 1 8.2.2.2 Extended Bitstream Information - Part 2 8.2.3 Other AC-3 Encoding Options 8.2.4 Floating-Point-Only AC-3 Encoding Options 8.3 flac 8.3.1 Options 8.4 opus 8.4.1 Options 8.5 libfdk_aac 8.5.1 Options 8.5.2 Examples 8.6 libmp3lame 8.6.1 Options 8.7 libopencore-amrnb 8.7.1 Options 8.8 libopus 8.8.1 Option Mapping 8.9 libshine 8.9.1 Options 8.10 libtwolame 8.10.1 Options 8.11 libvo-amrwbenc 8.11.1 Options 8.12 libvorbis 8.12.1 Options 8.13 libwavpack 8.13.1 Options 8.14 mjpeg 8.14.1 Options 8.15 wavpack 8.15.1 Options 8.15.1.1 Shared options 8.15.1.2 Private options 9 Video Encoders 9.1 Hap 9.1.1 Options 9.2 jpeg2000 9.2.1 Options 9.3 libkvazaar 9.3.1 Options 9.4 libopenh264 9.4.1 Options 9.5 libtheora 9.5.1 Options 9.5.2 Examples 9.6 libvpx 9.6.1 Options 9.7 libwebp 9.7.1 Pixel Format 9.7.2 Options 9.8 libx264, libx264rgb 9.8.1 Supported Pixel Formats 9.8.2 Options 9.9 libx265 9.9.1 Options 9.10 libxvid 9.10.1 Options 9.11 mpeg2 9.11.1 Options 9.12 png 9.12.1 Private options 9.13 ProRes 9.13.1 Private Options for prores-ks 9.13.2 Speed considerations 9.14 QSV encoders 9.15 snow 9.15.1 Options 9.16 vc2 9.16.1 Options 10 Subtitles Encoders 10.1 dvdsub 10.1.1 Options 11 See Also 12 Authors. 1 Description: This document describes the codecs (decoders and encoders) provided by the libavcodec library.