A Rhetorical Take on Productivity Aids in Audio Engineering Software

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


32A Production Notes

JANEY PICTURES Production Notes 32A A FILM BY MARIAN QUINN Featuring Ailish McCarthy, Sophie Jo Wasson, Orla Long, Riona Smith with Aidan Quinn, Orla Brady and Jared Harris Winner of the Tiernan McBride Award for Screenwriting Written and Directed by Marian Quinn Produced by Tommy Weir Roshanak Behesht Nedjad, Co-Producer James Flynn, Executive Producer Adrian Devane, Line Producer PJ Dillon, Director of Photography Paki Smith, Production Designer Gerry Leonard, Composer Driscoll Calder, Costume Designer Lars Ginzel, Sound Designer Rune Schweitzer, Editor A Janey Pictures/Flying Moon co-production, 2007 89 minutes, certification TBD The Old Dispensary, Dromahaire, Leitrim, Ireland • telephone: +353.71.913.8801 • www.janeypictures.com Summary How does life measure up for a 13-year-old girl? Dublin 1979. The Northside. Maeve Brennan gets up and puts on her first bra. The summer holidays beckon for Maeve and her trio of friends, Ruth, Orla and Claire. But they still have to suffer the indignities of the vigilant Sister Una as she patrols the school for latecomers. At home, the put-upon Maeve washes her bra and fears it’s already lost its luster… Until an encounter with Brian Power, the local heart-throb, sets her on a collision course with her friends. -

An Incredible New Sound for Engineers

An Incredible New Sound for Engineers Bruce Swedien comments on the recording techniques and production HIStory of Michael Jackson's latest album by Daniel Sweeney "HIStory" In The Making Increasingly, the launch of a new Michael Jackson collection has taken on the dimensions of a world event. Lest this be doubted, the videos promoting the King of Pop's latest effort, "HIStory", depict him with patently obvious symbolism as a commander of armies presiding over monster rallies of impassioned followers. But whatever one makes of the hoopla surrounding the album, one can scarcely ignore its amazing production values and the skill with which truly vast musical resources have been brought to bear upon the project. Where most popular music makes do with the sparse instrumentation of a working band fleshed out with a bit of synth, "HIStory" brings together such renowned studio musicians and production talents as Slash, Steve Porcaro, Jimmy Jam, Nile Rodgers, plus a full sixty-piece symphony orchestra, several choirs including the Andrae Crouch Singers, star vocalists such as sister Janet Jackson and Boyz II Men, and the arrangements of Quincy Jones and Jeremy Lubbock. Indeed, the sheer richness of the instrumental and vocal scoring is probably unprecedented in the entire realm of popular recording. But the richness extends beyond the mere density of the mix to the overall spatial perspective of the recording. Just as Phil Spector's classic popular recordings of thirty years ago featured a signature "wall of sound" suggesting a large, perhaps overly reverberant recording space, so the recent recordings of Michael Jackson convey a no less distinctive though different sense of deep space, what for want of other words one might deem a "hall of sound". -

The Bruce Swedien Recording Method Free

FREE THE BRUCE SWEDIEN RECORDING METHOD PDF Bruce Swedien,Bill Gibson | 304 pages | 16 Oct 2013 | Hal Leonard Corporation | 9781458411198 | English | Milwaukee, United States The Bruce Swedien Recording Method - Recording - Harmony Central Bruce Swedien has been the engineer of choice for Michael Jackson and his producer Quincy Jones, among many others. By the age of 14 he was spending his holidays recording all comers, and even set up his own radio station to broadcast the results to the neighbourhood! At 19 he'd already worked for Tommy Dorsey and was setting up his own commercial studio in an old cinema in his home town of Minneapolis. Go The Bruce Swedien Recording Method what it sounds like in the studio and listen to the music. Off The Bruce Swedien Recording Method go to the studio, and Quincy hands over his score to the copyists. It still gives me the chills to think about it! Don't you believe it! One slice of hindsight on the subject from Quincy Jones has always particularly intrigued me: "We had to leave space for God to walk through the room. One of the original track sheets from the Thriller albums. However, despite the busting of the 'black box' myth, there are indeed fundamental ways in which the Acusonic approach affected the sound of Thriller, and indeed its predecessor. It was just incredible. As he comments in his new book, In The Studio With Michael Jackson: "These true stereo images add much to the depth and clarity of the final production. Stereo mics are vastly overrated. -

The Recording Academy® Producers & Engineers Wing® to Present Grammy® Soundtable, “Sonic Imprints: Songs That Changed My Life,” at 129th AES Convention

The Recording Academy® Producers & Engineers Wing 3030 Olympic Boulevard • Santa Monica, CA 90404 E-mail: p&[email protected] THE RECORDING ACADEMY® PRODUCERS & ENGINEERS WING® TO PRESENT GRAMMY® SOUNDTABLE, “SONIC IMPRINTS: SONGS THAT CHANGED MY LIFE,” AT 129TH AES CONVENTION Diverse Group of High Profile Producers and Engineers Will Gather Saturday Nov. 6 at 2:30PM in Room 134 To Dissect Songs That Have Left An Indelible Mark SANTA MONICA, Calif. (Oct. 15, 2010) — Some songs are hits, some we just love, and some have changed our lives. For the GRAMMY SoundTable titled “Sonic Imprints: Songs That Changed My Life,” on Saturday, Nov. 6 at 2:30PM in Room 134, a select group of producer/engineers will break down the DNA of their favorite tracks and explain what moved them, what grabbed them, and why these songs left a lifelong impression. Moderated by Sylvia Massy (Prince, Johnny Cash, Sublime) and featuring panelists Joe Barresi (Bad Religion, Queens of the Stone Age, Tool), Jimmy Douglass (Justin Timberlake, Jay Z, John Legend), Nathaniel Kunkel (Lyle Lovett, Everclear, James Taylor) and others, this event is sure to be lively, fun, and inspirational. More Participant Information (other panelists TBA): Sylvia Massy broke into the big time with 1993's “Undertow” by Los Angeles rock band Tool, and went on to engineer for a diverse group of artists including Aerosmith, Babyface, Big Daddy Kane, The Black Crowes, Bobby Brown, Prince, Julio Iglesias, Seal, Skunk Anansie, and Paula Abdul, among many others. Massy also engineered and mixed projects with producer Rick Rubin, including Johnny Cash's “Unchained” album which won a GRAMMY award for Best Country Album. -

June 14-20, 2018

JUNE 14-20, 2018 FACEBOOK.COM/WHATZUPFTWAYNE // WWW.WHATZUP.COM Feature • Al Di Meola: Master of the Guitar By Deborah Kennedy Like Mozart, New Jersey-born guitarist Al Di Meola has sometimes been criticized for playing too many notes. The criticism is actually a compliment in disguise. Di Meola, who will be at the Clyde Theater Wednesday, June 20 at 7 p.m., is able to blaze through songs so quickly, listeners are often left stunned by his passion, technique and precision. Di Meola grew up in Bergenfield, New Jersey and began playing guitar at eight years old. His love of Elvis inspired him to pick up the instrument. Later, he became an enthusiast for jazz, country and bluegrass. Still later, he enrolled at the prestigious Berklee College of Music in Boston, and his education continued when, at age 19, he was recruited by keyboarding and composing [...] [...]tar player. In the early 80s, he turned more toward the electric side of things, writing and recording Electric Rendezvous and Scenario, which included the keyboard work of Jan Hammer, who would go on to compose the theme to the television show Miami Vice. Guitar in all its forms is Di Meola’s primary passion. He also loves to collaborate with other artists, and in 1980, he teamed up with flamenco guitarist Paco de Lucia and British fusion guitarist John McLaughlin to release Friday Night in San Francisco, a beloved and popular live recording of three musicians at the top of their game. Since then, Di Meola has played with basically everyone, including Paul Simon, Carlos Santana, Steve Winwood, Herbie Hancock, Stevie Wonder, Les Paul, Jimmy Page, Frank Zappa and Luciano Pavarotti. -

Find Doc \ Bruce Swedien: in the Studio with Michael Jackson

Bruce Swedien: In the Studio with Michael Jackson (Paperback) Filesize: 8.29 MB Reviews Here is the finest publication I have read through until now. I am quite late in start reading this one, but better than never. I am just easily can get a pleasure of studying a created publication. (Morgan Bashirian) BRUCE SWEDIEN: IN THE STUDIO WITH MICHAEL JACKSON (PAPERBACK) Hal Leonard Corporation, United States, 2009. Paperback. Condition: New. Language: English. Brand New Book. (Book). No one was closer to Michael Jackson at the height of his creative powers than Bruce Swedien, the five-time Grammy winner who, with Jackson and producer Quincy Jones, formed the trio responsible for the sound of Jackson's records, records that topped the charts and shook the world. Friend, co-creator, and colleague, Bruce Swedien was a seasoned recording engineer plucked from a job at legendary Universal Audio in Chicago when he began working with Michael Jackson and Quincy Jones on the soundtrack to The Wiz, and he was the master technician who gave the records their sound as the trio progressed to Jackson's greatest triumphs, Off the Wall and the iconic, history-making Thriller, which revolutionized music and video and fixed Jackson in culture as the King of Pop. In the Studio with Michael Jackson is the chronicle of those times, when everything was about the music, the magic, and the amazing talent of a man who changed the face of pop music and culture forever. -

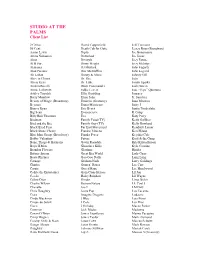

Client and Artist List

STUDIO AT THE PALMS Client List 2 Cellos David Copperfield Jeff Timmons 50 Cent Death Cab for Cutie Jersey Boys (Broadway) Aaron Lewis Diplo Joe Bonamassa Akina Nakamori Disturbed Joe Jonas Akon Divinyls Joey Fatone Al B Sure Dizzy Wright Joey McIntyre Alabama DJ Mustard John Fogarty Alan Parsons Doc McStuffins John Legend Ali Lohan Donny & Marie Johnny Gill Alice in Chains Dr. Dre JoJo Alicia Keys Dr. Luke Jordin Sparks Andrea Bocelli Duck Commander Josh Gracin Annie Leibovitz Eddie Levert Jose “Pepe” Quintano Ashley Tinsdale Ellie Goulding Journey Barry Manilow Elton John Jr. Sanchez Beauty of Magic (Broadway) Eminem (Grammy) Juan Montero Beyonce Ennio Morricone Juicy J Bianca Ryan Eric Benet Justin Timberlake Big Sean Evanescence K Camp Billy Bob Thornton Eve Katy Perry Birdman Family Feud (TV) Keith Galliher Bird and the Bee Family Guy (TV) Kelly Rowland Black Eyed Peas Far East Movement Kendrick Lamar Black Stone Cherry Frankie Moreno Keri Hilson Blue Man Group (Broadway) Franky Perez Keyshia Cole Bobby Valentino Future Kool & the Gang Bone, Thugs & Harmony Gavin Rossdale Kris Kristofferson Boyz II Men Ghostface Killa Kyle Cousins Brandon Flowers Gloriana Hinder Britney Spears Great Big World Lady Gaga Busta Rhymes Goo Goo Dolls Lang Lang Carnage Graham Nash Larry Goldings Charise Guns n’ Roses Lee Carr Cassie Gucci Mane Lee Hazelwood Cedric the Entertainer Gym Class Heroes Lil Jon Cee-lo Haley Reinhart Lil Wayne Celine Dion Hinder Limp Bizkit Charlie Wilson Human Nature LL Cool J Chevelle Ice-T LMFAO Chris Daughtry Icona Pop Los -

Michael Brauer Issue 64

FEATURE ‘BRAUERIZING’ THE MIX Michael Brauer has mixed countless singles and albums over the years, developing his own approach to compression along the way. ‘Brauerizing’ the mix, as he calls it, is his (trademarked) signature. Text: Paul Tingen Photography: Michael Brauer The New York-based star mixer, Michael H. Brauer, has a reputation for eclecticism, both in the wide variety of artists and genres he’s worked with, and in his approach to mixing. His credit list is certainly very impressive and exceptionally wide-ranging, featuring stellar names like The Rolling Stones, Coldplay, Prefab Sprout, Was (Not Was), James Brown, Aerosmith, Jeff Buckley, David Byrne, Tony Bennett, Billy Joel, Rod Stewart, Paul McCartney, Ben Folds, Pet Shop Boys, Bob Dylan, Willie Nelson, MeatLoaf, John Mayer, Martha Wainwright, Pete Murray and many, many others. As for his approach, Brauer has become legendary in the mix world for his liberal and innovative use of compression. [...] the ladder from the proverbial tea-boy beginnings to staff engineer at Media Sound Studios in NYC during 1976-84, picking up tricks of the trade from the likes of Bob Clearmountain, Mike Barbiero, Tony Bongiovi, Harvey Goldberg, and others. He subsequently went freelance and graduated to the role of occasional producer, but for the last 15 years or so he has preferred to almost exclusively work as a mixer. Ironically, given the absolutely massive amount of outboard in his studio, Brauer likes to de-emphasise the importance of technology and prefers to talk about feel and colours. When approached about giving us the lowdown on his mix of Coldplay’s hit Violet Hill, Brauer -

The Aesthetics of 5.1 Surround

Applying Aural Research: the aesthetics of 5.1 surround Jim Barbour School of Social and Behavioural Sciences, Swinburne University email: [email protected] Abstract Multi-channel surround sound audio offers a richer perceptual experience than traditional stereo reproduction, recreating three-dimensional aural soundfields. Understanding the limitations of human auditory perception and surround sound technology may assist the exploration of acoustic space as an expressive dimension. 1 Introduction Research and development into new digital audio software and hardware has resulted in multi-channel digital audio recording technology that allows the creation of immersive aural environments reproduced by multiple loudspeakers arranged around and above the listening position. The audio delivery platform offered by the Digital Versatile Disc has provided the facility for musicians, composers and sound designers to produce three-dimensional audio works in studio [...] art to illustrate some of the aural possibilities. Despite significant limitations inherent in the 5.1 surround sound specification, it is the position of this paper that it represents an opportunity to deliver an exciting and immersive aural experience to a consumer today. 2 Consumer Surround For many decades, it has been a goal of many audio practitioners to deliver the immersive, three-dimensional soundfield of the real world, or of their creative imagination, to listeners in their home environment. 2.1 Quad: 1970s The development of multi-track tape recorders in the 1970’s led to successful experiments with four channel quadraphonic recording, which succeeded in capturing and reproducing a high quality three-dimensional soundfield in a controlled studio environment. -

ENTERTAINMENT Page 17 Technique • Friday, March 24, 2000 • 17

ENTERTAINMENT page 17 Technique • Friday, March 24, 2000 Fu Manchu on the road: The next time you head off down the highway and are looking for some tunes, try King of the Road. Page 19 On shoulders of giants: Oasis returns after some troubling years with a new release and new hope for the future of the band. Page 21 Passion from the mouthpiece all the way to the back row By Alan Back Living at a 15-degree tilt Spend five minutes, or even five seconds, talking with trumpeter Arturo Sandoval and you get an idea of just how dedicated he is to his art. Whether working as a recording artist, composer, professor, or amateur actor, he knows full well where his inspiration comes from and does everything in his power to pass that spark along to anyone who will listen. [...] Havana, Cuba, in 1949, Sandoval began studying classical trumpet at the age of 12, and his early experience included a three-year enrollment at the Cuba National [...] of jazz, classical idioms, and traditional Cuban styles. During a 1977 visit to Cuba, bebop master and Latin music enthusiast Dizzy Gillespie heard the young upstart performing with Irakere and decided to begin mentoring him. In the following years, Sandoval left the group in order to tour with both Gillespie’s United Nation Orchestra and an ensemble of his own. The two formed a friendship that lasted until the older [...] Pull quote: “I don’t have to ask permission to anybody to do what I have to.” Arturo Sandoval -

8123 Songs, 21 Days, 63.83 GB

Page 1 of 247 Music 8123 songs, 21 days, 63.83 GB Name Artist The A Team Ed Sheeran A-List (Radio Edit) XMIXR Sisqo feat. Waka Flocka Flame A.D.I.D.A.S. (Clean Edit) Killer Mike ft Big Boi Aaroma (Bonus Version) Pru About A Girl The Academy Is... About The Money (Radio Edit) XMIXR T.I. feat. Young Thug About The Money (Remix) (Radio Edit) XMIXR T.I. feat. Young Thug, Lil Wayne & Jeezy About Us [Pop Edit] Brooke Hogan ft. Paul Wall Absolute Zero (Radio Edit) XMIXR Stone Sour Absolutely (Story Of A Girl) Ninedays Absolution Calling (Radio Edit) XMIXR Incubus Acapella Karmin Acapella Kelis Acapella (Radio Edit) XMIXR Karmin Accidentally in Love Counting Crows According To You (Top 40 Edit) Orianthi Act Right (Promo Only Clean Edit) Yo Gotti Feat. Young Jeezy & YG Act Right (Radio Edit) XMIXR Yo Gotti ft Jeezy & YG Actin Crazy (Radio Edit) XMIXR Action Bronson Actin' Up (Clean) Wale & Meek Mill f./French Montana Actin' Up (Radio Edit) XMIXR Wale & Meek Mill ft French Montana Action Man Hafdís Huld Addicted Ace Young Addicted Enrique Iglsias Addicted Saving abel Addicted Simple Plan Addicted To Bass Puretone Addicted To Pain (Radio Edit) XMIXR Alter Bridge Addicted To You (Radio Edit) XMIXR Avicii Addiction Ryan Leslie Feat. Cassie & Fabolous Music Page 2 of 247 Name Artist Addresses (Radio Edit) XMIXR T.I. Adore You (Radio Edit) XMIXR Miley Cyrus Adorn Miguel Adorn Miguel Adorn (Radio Edit) XMIXR Miguel Adorn (Remix) Miguel f./Wiz Khalifa Adorn (Remix) (Radio Edit) XMIXR Miguel ft Wiz Khalifa Adrenaline (Radio Edit) XMIXR Shinedown Adrienne Calling, The Adult Swim (Radio Edit) XMIXR DJ Spinking feat. -

The Role of Music Theory in Music Production and

THE ROLE OF MUSIC THEORY IN MUSIC PRODUCTION AND ENGINEERING by GEORGE A. WIEDERKEHR A THESIS Presented to the School of Music and Dance and the Graduate School of the University of Oregon in partial fulfillment of the requirements for the degree of Master of Arts September 2015 THESIS APPROVAL PAGE Student: George A. Wiederkehr Title: The Role of Music Theory in Music Production and Engineering This thesis has been accepted and approved in partial fulfillment of the requirements for the Master of Arts degree in the School of Music and Dance by: Jack Boss, Chair; Stephen Rodgers, Member; Lance Miller, Member; and Scott L. Pratt, Dean of the Graduate School. Original approval signatures are on file with the University of Oregon Graduate School. Degree awarded September 2015 © 2015 George A. Wiederkehr This work is licensed under a Creative Commons Attribution (United States) License. THESIS ABSTRACT George A. Wiederkehr Master of Arts School of Music and Dance September 2015 Title: The Role of Music Theory in Music Production and Engineering Due to technological advancements, the role of the musician has changed dramatically in the 20th and 21st centuries. For the composer or songwriter especially, it is becoming increasingly expected for them to have some familiarity with music production and engineering, so that they are able to provide a finished product to employers, clients, or listeners. One goal of a successful production or engineered recording is to most effectively portray the recorded material. Music theory, and specifically analysis, has the ability to reveal important or expressive characteristics in a musical work.