Composite Video Artifact Removal by Nonlinear Bilateral Filtering

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications

-

Analog/SDI to SDI/Optical Converter with TBC/Frame Sync User Guide

Analog/SDI to SDI/Optical Converter with TBC/Frame Sync User Guide ENSEMBLE DESIGNS Revision 6.0 SW v1.0.8 This user guide provides detailed information for using the BrightEye™1 Analog/SDI to SDI/Optical Converter with Time Base Corrector and Frame Sync. The information in this user guide is organized into the following sections: • Product Overview • Functional Description • Applications • Rear Connections • Operation • Front Panel Controls and Indicators • Using The BrightEye Control Application • Warranty and Factory Service • Specifications • Glossary BrightEye-1 BrightEye 1 Analog/SDI to SDI/Optical Converter with TBC/FS PRODUCT OVERVIEW The BrightEye™ 1 Converter is a self-contained unit that can accept both analog and digital video inputs and output them as optical signals. Analog signals are converted to digital form and are then frame synchronized to a user-supplied video reference signal. When the digital input is selected, it too is synchronized to the reference input. Time Base Error Correction is provided, allowing the use of non-synchronous sources such as consumer VTRs and DVD players. An internal test signal generator will produce Color Bars and the pathological checkfield test signals. The processed signal is output as a serial digital component television signal in accordance with ITU-R 601 in both electrical and optical form. Front panel controls permit the user to monitor input and reference status, proper optical laser operation, select video inputs and TBC/Frame Sync function, and adjust video level. Control and monitoring can also be done using the BrightEye PC or BrightEye Mac application from a personal computer with USB support. -

Advanced PAL Comb Filter-II (APCF-II) MC141627

MOTOROLA SEMICONDUCTOR TECHNICAL DATA. Order this document by MC141627/D. MC141627 Product Preview: Advanced PAL Comb Filter-II (APCF-II). FT suffix, QFP package, case 898. The Advanced PAL Comb Filter-II is a video signal processor for VCRs, LDPs, and TVs. It separates the Luminance Y and Chrominance C signal from the NTSC/PAL composite signal by using digital signal processing techniques which minimize dot-crawl and cross-color. The built-in 4xFSC PLL circuit allows a subcarrier signal input, which generates 4xFSC clock for video signal processing. This filter allows a video signal input of an extended frequency bandwidth by using a 4xFSC clock. The built-in vertical enhancer circuit reduces noise and dot crawl on the Luminance Y signal. The built-in A/D and D/A converters allow easy connection to analog video circuits. Ordering information: MC141627FT, Quad Flat Package (QFP). • Built-In High Speed 8-Bit A/D Converter • Four Line Memories (4540 Bytes) • Advanced Comb-II Process • Built-In Vertical Enhancer • Vertical Dot Reduction Process • Two Built-In High Speed 8-Bit D/A Converters • Built-In 4xFSC PLL Circuit • Built-In Clamp Circuit • Digital Interface Mode • On-Chip Reference Voltage for A/D Converter [Pin assignment diagram for the 48-pin QFP; NC = no connection.] This document contains information on a product under development. -

A Look at SÉCAM III

Viewer License Agreement You Must Read This License Agreement Before Proceeding. This Scroll Wrap License is the Equivalent of a Shrink Wrap ⇒ Click License, A Non-Disclosure Agreement that Creates a “Cone of Silence”. By viewing this Document you Permanently Release All Rights that would allow you to restrict the Royalty Free Use by anyone implementing in Hardware, Software and/or other Methods in whole or in part what is Defined and Originates here in this Document. This Agreement particularly Enjoins the viewer from: Filing any Patents (À La Submarine?) on said Technology & Claims and/or the use of any Restrictive Instrument that prevents anyone from using said Technology & Claims Royalty Free and without any Restrictions. This also applies to registering any Trademarks including but not limited to those being marked with “™” that Originate within this Document. Trademarks and Intellectual Property that Originate here belong to the Author of this Document unless otherwise noted. Transferring said Technology and/or Claims defined here without this Agreement to another Entity for the purpose of but not limited to allowing that Entity to circumvent this Agreement is Forbidden and will NOT release the Entity or the Transfer-er from Liability. Failure to Comply with this Agreement is NOT an Option if access to this content is desired. This Document contains Technology & Claims that are a Trade Secret: Proprietary & Confidential and cannot be transferred to another Entity without that Entity agreeing to this “Non-Disclosure Cone of Silence” V.L.A. Wrapper. Combining Other Technology with said Technology and/or Claims by the Viewer is an acknowledgment that [s]he is automatically placing Other Technology under the Licenses listed below making this License Self-Enforcing under an agreement of Confidentiality protected by this Wrapper. -

BrightEye 72 3G/HD/SD SDI to HDMI Converter User Guide

3G/HD/SD SDI to HDMI Converter User Guide, BrightEye™ 72. Revision 1.1, SW v1.0.0. www.ensembledesigns.com. Contents: PRODUCT OVERVIEW AND FUNCTIONAL DESCRIPTION (Use with any HDMI Monitor; SDI Input; Test Signal Generator; Video Proc Amp and Color Corrector; Audio Metering Overlay and Disembedding; Graticule Overlay; Closed Caption Decoding; Timecode Reader; Horizontal and Vertical Shift); BLOCK DIAGRAM; APPLICATIONS; REAR CONNECTORS (Power Connection; USB Connector; HDMI Out; Audio Out; 3G/HD/SD SDI In; 3G/HD/SD SDI Out); MODULE CONFIGURATION AND CONTROL (Front Panel Controls and Indicators; Status Indicators; Adjusting Parameters from the Front Panel; Front Panel Control and On-Screen Display; Examples of Using the Front Panel in Conjunction with On-Screen Display); USING THE BRIGHTEYE CONTROL APPLICATION (Software version requirement; Input Menu; Proc Menu; Color Correct Menu; Audio Menu; Config Menu; Captions Menu; Timecode Menu); SOFTWARE UPDATING; WARRANTY AND FACTORY SERVICE (Warranty; Factory Service); SPECIFICATIONS; GLOSSARY. PRODUCT OVERVIEW AND FUNCTIONAL DESCRIPTION: The BrightEye 72 turns any High Definition Multimedia Interface (HDMI) display into a monitoring solution with professional monitoring features. -

TVP5160 3DYC Operation

Application Report SLEA109, April 2011. TVP5160 3DYC Operation.

1 Introduction. The TVP5160 provides 3DYC functionality for both NTSC and PAL. This feature builds on the existing 2D 5-line comb filter and is designed to reduce the dot crawl and false color in composite video inputs by storing and processing multiple frames. By using multiple frames, the advantage of 3DYC is best seen on static images or areas within video where the scene has no motion. With areas of motion, the 2D comb filter is used; in still areas, the 3DYC is used.

2 Modes of Operation and Memory Requirements. 3DYC may operate independently or concurrently with 3DNR. The various modes of operation for the 3DNR and 3DYC are listed in Table 1.

Table 1. 3DYC and 3DNR Modes of Operation

| Mode | Operation | Memory Required |
|------|-----------|-----------------|
| Mode 0 | 3DYC + 3DNR | 4MB |
| Mode 1 | 3DYC only | 2MB |
| Mode 2 | 2D 5-line CF + 3DNR | 2MB |
| Mode 3 | 2D 5-line CF only (default) | None |

3 Technology Overview. 3DYC works in parallel with the 2D comb filter based on the user settings and the level of motion detected in the video. Motion is detected by comparison of luma and chroma from three frames of the input composite signal. The frame differences are converted to absolute numbers, and a threshold is subtracted from this absolute value. The resultant difference is limited to a lower limit, gained up, and limited to an upper limit. The lower limit is indicative of 3D comb output and the upper limit is indicative of 2D comb output. The output is termed Km. -
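The Km computation described in this excerpt (absolute value, threshold subtraction, lower limit, gain, upper limit) can be sketched as follows. This is only an illustration of the order of operations as the excerpt states them; the function name `motion_factor`, its parameter names, and the numeric values in the usage lines are assumptions and are not taken from the TVP5160 documentation.

```python
def motion_factor(frame_diff, threshold, lower, gain, upper):
    """Map a signed frame difference to a motion factor (Km in the excerpt).

    All names and value ranges here are hypothetical; only the sequence of
    steps follows the text.
    """
    magnitude = abs(frame_diff)        # convert the difference to an absolute number
    reduced = magnitude - threshold    # subtract the threshold
    floored = max(reduced, lower)      # limit to the lower limit (still area, 3D comb)
    scaled = floored * gain            # gain up
    return min(scaled, upper)          # limit to the upper limit (motion, 2D comb)


# Illustrative values only: threshold 4, lower limit 0, gain 2, upper limit 16.
still = motion_factor(0, 4, 0, 2, 16)     # stays at the lower limit
moving = motion_factor(100, 4, 0, 2, 16)  # saturates at the upper limit
```

A result at the lower limit corresponds to the still-area case and a result at the upper limit to the motion case; how intermediate values are used to mix the two comb outputs is not stated in the excerpt and is left out of the sketch.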

23PFL5522D/12 Philips Widescreen Flat TV with Digital Crystal Clear

Philips widescreen flat TV with Digital Crystal Clear 23" LCD integrated digital 23PFL5522D Turn up your viewing experience with Integrated Digital Tuner Here is a wonderful new TV that not only gives you a great picture and sound but also modern styling and quality finish. It features Digital Crystal Clear for natural, vivid images and Incredible Surround for superb audio. Vivid, natural and razor sharp images • HD LCD WXGA display, with a 1366 x 768p resolution • Integrated Digital Tuner for DVB-T reception • HD Ready for the highest quality display of HD signals • Digital Crystal Clear provides vivid cinema-like images • 3D combfilter separates colors for a razor-sharp image Exciting and lifelike sound • Incredible Surround™ for enhanced audio enjoyment Slim, stylish design to complement your interior • Compact and slim design that fits in every room Designed for your convenience • 1000 page Hypertext for instant fast access to teletext For advanced performance • 2 HDMI inputs for full digital HD connection in one cable Widescreen flat TV with Digital Crystal Clear 23PFL5522D/12 23" LCD integrated digital Specifications Picture/Display • Aspect ratio: Widescreen, 16:9 • Brightness: 500 cd/m² • Contrast ratio (typical): 700:1 • Response time (typical): 8 ms • Viewing angle (H / V): 160 / 160 degree • Diagonal screen size: 23 inch • Remote Control: TV • Remote control type: RC2034301/01 • Screen Format Adjustments: 4:3, Movie expand 14:9, Movie expand 16:9, Subtitle Zoom, Super Zoom, Widescreen • Picture in Picture: Text dual screen -

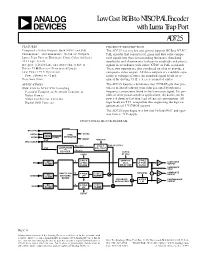

AD725 Data Sheet

Low Cost RGB to NTSC/PAL Encoder with Luma Trap Port AD725 FEATURES • Composite Video Output: Both NTSC and PAL • Chrominance and Luminance (S-Video) Outputs • Luma Trap Port to Eliminate Cross Color Artifacts • TTL Logic Levels • Integrated Delay Line and Auto-Tuned Filters • Drives 75 Ω Reverse-Terminated Loads • Low Power +5 V Operation • Power-Down to <1 µA • Very Low Cost APPLICATIONS • RGB/VGA to NTSC/PAL Encoding • Personal Computers/Network Computers • Video Games • Video Conference Cameras • Digital Still Cameras PRODUCT DESCRIPTION The AD725 is a very low cost general purpose RGB to NTSC/PAL encoder that converts red, green and blue color component signals into their corresponding luminance (baseband amplitude) and chrominance (subcarrier amplitude and phase) signals in accordance with either NTSC or PAL standards. These two outputs are also combined on-chip to provide a composite video output. All three outputs are available separately at voltages of twice the standard signal levels as required for driving 75 Ω, reverse-terminated cables. The AD725 features a luminance trap (YTRAP) pin that provides a means of reducing cross color generated by subcarrier frequency components found in the luminance signal. For portable or other power-sensitive applications, the device can be powered down to less than 1 µA of current consumption. All logic levels are TTL compatible thus supporting the logic requirements of 3 V CMOS systems. The AD725 is packaged in a low cost 16-lead SOIC and operates from a +5 V supply.
[FUNCTIONAL BLOCK DIAGRAM: sync separator and 4FSC clock with quadrature decoder; RGB-to-YUV encoding matrix with DC clamps on the red, green and blue inputs; luminance path with low-pass pre-filter, sampled-data delay line and low-pass post-filter; U and V low-pass filters, clamps and balanced modulators with burst; X2 luminance, chrominance and composite outputs; luminance trap.] REV. -

TM-1650SDU/TM-950DU SDI Monitor Brochure

16-INCH COLOR MONITOR TM-1650SDU: Built-In SDI and Multi-Application Color Monitor. 9-INCH COLOR MONITOR TM-950DU: Built-In SDI Monitor for Studio Application. Serial Digital Interface • TM-1650SDU Serial Digital Component Input • TM-950DU Serial Digital Component Input • 550 Lines of Horizontal Resolution • NTSC/PAL Compatible • 16:9/4:3 Selectable Aspect Ratio • 16:9 Display Capability • On-Screen Menu • Tally Lamp • Wired Remote Control • Tally/Remote • Big Screen, Small Cabinet Design • Rugged Metal Cabinet • Component/RGB Input Terminals • Underscan and Pulse Cross • Under Scan, Blue Check • Rack Mountable • NTSC/PAL Compatible. Packing the Power Demanded by Today’s [...] 16-INCH SDI COLOR MONITOR TM-1650SDU. The TM-1650SDU delivers the flexibility and the practicality needed in today’s fast-moving broadcast, production and editing environments. Flexibility is further enhanced by a Serial Digital Interface (SDI), which complements comprehensive input terminals for analog signals. With a big 16-inch screen in a compact body, the TM-1650SDU takes up little space, yet provides the picture quality of a top-of-the-line monitor. Packed with powerful features, the TM-1650SDU has a Flat Square CRT with 550 lines of horizontal resolution. Its intuitive on-screen menu allows quick and simple adjustment of a wide range of settings including Blue Check and Under Scan. • SDI Direct Input: Compatible to SMPTE 259M standard serial digital component signal. • 16-inch Monitor Delivers Crisp Color Pictures [...] 14-inch model and most manufacturer’s 13-inch monitor, yet is just 1.2-inch wider. That translates into space savings together with the big picture that comes with a 16-inch, high-resolution display. -

SD-P2600 Portable DVD Player

SD-P2600 Portable DVD Player KEY FEATURES • ColorStream Pro® Component Video Output • Digital Cinema Progressive (3:2 Pulldown) • Direct Digital Solution • Digital Picture Zoom • JPEG Viewer • 2-Channel DVD-Audio Compatibility • WMA & MP3 Playback • Spatializer® N-2-2 ULTRA™ 3-D Virtual Surround Sound and Natural Headphone™ • Bass Reflex Speakers with Titanium Drivers • 2 Headphone Output Jacks • Memory Card Slot (SD/MMC) • 3.5-Hour Rapid Charge Battery • Credit Card Size Remote Control • Car Cord Included ADVANTAGE TOSHIBA • 8.9" Widescreen, Poly-Silicon TFT LCD Display with 1024x600 Pixel Resolution and Direct Digital Solution for the best picture quality • ColorStream Pro® Progressive Scan Component Video Outputs compatible with today's finest analog and digital televisions for delivery of stunningly colorful images • 10-Bit/54 MHz Video digital-to-analog conversion for maximum color purity, detail, and resolution • 24-Bit/192 kHz PCM Audio Compatibility delivers warmer, more natural-sounding audio from many types of discs • DVD-Video/DVD-Audio (2 Ch)/CD/Video CD playback. DVD-R/CD-R/CD-RW* Compatible playback ensures discs made with personal computers can be played back in the home theater system, too • JPEG Viewer enables a user to display digital photographs on a television set in the JPEG digital format SD-P2600 TECHNOLOGY & BENEFITS VIDEO • 8.9" Widescreen TFT LCD Display - 16:9 Polysilicon TFT display is perfect for viewing standard, letterbox, and anamorphic DVD movies. • Direct Digital Solution - Toshiba's unique Direct Digital Solution sends the DVD video information to the LCD driver directly from MPEG decoder in original digital form, bypassing unnecessary [...]
• WMA & MP3 Playback - Recordable Compact Discs (CD-R & CD-RW) with music stored in either the Windows Media™ Audio or MP3 file formats can be enjoyed. • Spatializer® N-2-2 ULTRA™ 3-D Virtual Surround Sound and Natural Headphone™ - Provides a simulated surround sound effect from sources that contain only a stereo soundtrack. -

20PFL5522D/05 Philips Flat TV with Digital Crystal Clear

Philips Flat TV with Digital Crystal Clear 51 cm (20") LCD integrated digital 20PFL5522D Turn up your viewing experience with Integrated Digital Tuner Here is a wonderful new TV that not only gives you great picture and sound but also modern styling and quality finish. It features Digital Crystal Clear for natural, vivid images and Incredible Surround for superb audio. Vivid, natural and razor sharp images • Enhanced-definition LCD VGA display, 640 x 480p • Integrated Digital Tuner for DVB-T reception • Digital Crystal Clear provides vivid cinema-like images • 3D combfilter separates colors for a razor-sharp image Exciting and lifelike sound • Incredible Surround™ for enhanced audio enjoyment Slim, stylish design to complement your interior • Compact and slim design that fits in every room Designed for your convenience • 1000 page Hypertext for instant fast access to teletext For advanced performance • 2 HDMI inputs for full digital HD connection in one cable Flat TV 20PFL5522D/05 51 cm (20") LCD, integrated digital Highlights • LCD VGA display, 640 x 480p: This VGA display with state-of-the-art LCD screen technology has an enhanced resolution of 640 x 480p. • Integrated Digital DVB-T tuner: The integrated Digital DVB-T tuner lets you [...] • [...] color from the black-and-white information and remove both horizontally and vertically hanging dots, as well as dot crawl. The result is a razor sharp image. • Incredible Surround™: Incredible Surround is an audio technology [...] • Compact and slim design: This design style emphasizes a slim, compact look that saves space and fits in anywhere. • 2 HDMI inputs: HDMI makes an uncompressed digital RGB connection from the source to the screen. -

Detection of Noise in Composite Step Signal Pattern by Visualizing Signal Waveforms

European Journal of Sustainable Development Research, 2018, 2(2), 14 ISSN: 2542-4742 Detection of Noise in Composite Step Signal Pattern by Visualizing Signal Waveforms Sarika Malhotra 1, Chaman Verma 2* 1 Imperial College of Engineering and Research, JSPM, Wagholi, Pune, INDIA 2 Department of Media and Educational Informatics, Faculty of Informatics, Eötvös Loránd University, Budapest, HUNGARY *Corresponding Author: [email protected] & [email protected] Citation: Malhotra, S. and Verma, C. (2018). Detection of Noise in Composite Step Signal Pattern by Visualizing Signal Waveforms. European Journal of Sustainable Development Research, 2(2), 14. https://doi.org/10.20897/ejosdr/76013 Published: March 7, 2018 ABSTRACT The Step Composite Signals is the combination of vital informative signals that are compressed and coded to produce a predefined test image on a display device. It carries the desired sequence of information from source to destination. This information may be transmitted as digital signal, video information or data signal required as an input for the destination module. For testing of display panels, Composite Test Signals are the most important attribute of test signal transmission system. In the current research paper we present an approach for the noise detection in Composite Step Signal by analysing Composite Step Signal waveforms. The analysis of the signal waveforms reveals that the noise affected components of the signal and subsequently noise reduction process is initiated which targets noisy signal component only. Thus the quality of signal is not compromised during noise reduction process. Keywords: step composite signal, signal waveforms, noise detection, signal transmission, test signal INTRODUCTION The Composite Step Signal comprises of predefined test signal components which are fused together to generate a desired test pattern (van Roermund and Snijder, 1989). -

YCS 100 Video Decoder & Encoder: High Quality Digital Decoder with Time Base Stabilization

SIGNAL PROCESSORS YCS 100 VIDEO DECODER & ENCODER • High quality digital decoder with time base stabilization • Decodes composite video into S-video • Encodes S-video into composite video • Simultaneous composite video and S-video output • Buffered loop-throughs for local video monitoring • Compatible with NTSC, PAL, and SECAM video signals • Compact, rack-mountable metal enclosure The Extron YCS 100 is a video transcoder that decodes composite video into S-video, and encodes S-video into composite video. It is ideal for applications requiring video signal conversion, such as conference rooms, classrooms, video production facilities, and residential entertainment systems. www.extron.com DESCRIPTION The Extron YCS 100 combines a composite video to S-video decoder and an S-video to composite video encoder in a single enclosure. It automatically detects NTSC, PAL, and SECAM format video signals, and simultaneously outputs composite video and S-video to allow for recording and display at the same time. The YCS 100 is ideal for applications in conference rooms, classrooms, video production facilities, and residential entertainment systems. The YCS 100 features a high quality digital decoder with time base stabilization to optimize performance with composite video sources, including worn or multi-generation videotapes, consumer VCRs, and other devices with unstable [...] SPECIFICATIONS (CONT.) Minimum/maximum levels: Analog: 0.3 V to 1.5 Vp-p for Y with no offset at unity gain. Impedance: 75 ohms. Return loss: -27 dB for Y, -31 dB for C @ 5 MHz. Input coupling: AC coupled. Signal processing type: Analog encoding. VIDEO. Video output, encoder portion. Number/signal type ...............................