Context and Domain Knowledge Enhanced Entity Spotting in Informal Text

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


“Vogue” in Performances of the Rio Carnival: Strike a Pose!

ARTICLE. COMUN. MÍDIA CONSUMO, SÃO PAULO, V. 16, N. 46, P. 376-395, MAY/AUG. 2019. DOI 10.18568/CMC.V16I46.1901. Strike a pose! A mediação do videoclipe “Vogue” em performances do Carnaval carioca (Strike a Pose! Mediations of “vogue” music video in Performances of Brazilian Carnival). Simone Pereira de Sá, Rodolfo Viana de Paulo. Resumo (translated): The article addresses the mediations of the music video for Madonna’s song “Vogue”, seeking to discuss its agency over peripheral corporealities at two moments: in the 1980s, when the music video appropriates and widens the visibility of voguing, the dance practiced by New York’s LGBT cultural scene, and two decades later, when the music video is, in turn, one of the references for young gay dancers in their presentations in the wings of the samba schools of the Rio Carnival. We are thus interested in discussing the zones of dialogue and tension between local and global performances, drawing theoretically on the discussion of “aesthetic cosmopolitanism” (REGEV, 2013) and of pop divas as cultural icons (JENNEX, 2013), among other references. Keywords: Vogue; Madonna; performance; music video; samba schools. Abstract: The article discusses the mediations of Madonna’s music video “Vogue”, seeking to discuss its agency on peripheral bodies in two moments: the first, in the 80s, when the song and music video appropriate the steps and gestures of voguing created by the LGBT scene in New York; and the second, two decades later, when the music video is, in turn, one of the references for young, black and gay dancers who practice Vogue dance during their presentations in Rio de Janeiro’s samba schools in the present time.

PERFORMED IDENTITIES: HEAVY METAL MUSICIANS BETWEEN 1984 and 1991 Bradley C. Klypchak a Dissertation Submitted to the Graduate

PERFORMED IDENTITIES: HEAVY METAL MUSICIANS BETWEEN 1984 AND 1991. Bradley C. Klypchak. A Dissertation Submitted to the Graduate College of Bowling Green State University in partial fulfillment of the requirements for the degree of DOCTOR OF PHILOSOPHY, May 2007. Committee: Dr. Jeffrey A. Brown, Advisor; Dr. John Makay, Graduate Faculty Representative; Dr. Ron E. Shields; Dr. Don McQuarie. © 2007 Bradley C. Klypchak. All Rights Reserved.

ABSTRACT. Dr. Jeffrey A. Brown, Advisor. Between 1984 and 1991, heavy metal became one of the most publicly popular and commercially successful rock music subgenres. The focus of this dissertation is to explore the following research questions: How did the subculture of heavy metal music between 1984 and 1991 evolve and what meanings can be derived from this ongoing process? How did the contextual circumstances surrounding heavy metal music during this period impact the performative choices exhibited by artists, and from a position of retrospection, what lasting significance does this particular era of heavy metal merit today? A textual analysis of metal-related materials fostered the development of themes relating to the selective choices made and performances enacted by metal artists. These themes were then considered in terms of gender, sexuality, race, and age constructions as well as the ongoing negotiations of the metal artist within multiple performative realms. Occurring at the juncture of art and commerce, heavy metal music is a purposeful construction. Metal musicians made performative choices for serving particular aims, be it fame, wealth, or art. These same individuals worked within a greater system of influence. Metal bands were the contracted employees of record labels whose own corporate aims needed to be recognized.

A CHRISTMAS CAROL

Discovery Guide: A CHRISTMAS CAROL. Written by Charles Dickens. Adapted and Directed by Mark Cuddy. Music and Lyrics by Gregg Coffin. P.L.A.Y. (Performance = Literature + Art + You) Student Matinee Series, 2017-2018 Season.

About Scrooge’s Journey, from Director Mark Cuddy: “I’m a proponent of saying that the more you know, the more you realize you don’t know. It was important for me that, during Scrooge’s journey, he started to place himself in context, and that it was part of the lesson for him.

Dear Educators, Many of us know well this classic tale of Ebenezer Scrooge’s redemptive journey, second chance at life, and change of heart. It’s a story, we assume, most people know. Yet every year at our Teacher Workshop for A Christmas Carol, I am pleasantly reminded by our local educators that, quite often, our production is many a young student’s very first exposure to this story, and to live theatre as a whole. When you take a moment to let it sink in, isn’t that incredibly heartening and delightful? That this story, of all stories – one so full of compassion and forgiveness and hope – is so often the first theatre performance many of our children will experience? And, furthermore, that we get to watch them discover and enjoy it for the first time? What a gift! For many others, adults and kids alike, who consider it an annual tradition to attend A Christmas Carol, why do we return to this tale year after year, well after we know the outcome and could, perhaps, even recite all the words and songs ourselves? Some, I imagine,

The Jays Jay Brown NATION BUILDER

The Jays Jay Brown NATION BUILDER

Roc Nation co-founder and CEO Jay Brown has succeeded beyond his wildest dreams. He’s part of Jay-Z’s inner circle, along with longtime righthand man Tyran “Tata” Smith, Roc Nation COO Desiree Perez and her husband, Roc Nation Sports President “OG” Juan Perez. He’s a dedicated supporter of the arts, having joined the Hammer Museum’s board of directors in 2018, as well as a champion of philanthropic causes. In short, this avid fisherman keeps reeling in the big ones. Brown is making his mark, indelibly and with a deep-seated sense of purpose.

“I think the legacy you create…is built on the people you help,” Brown told CEO.com in 2018. “It’s not in how much money you make or what you buy or anything like that. It’s about how many people you touch. It’s in how many jobs you help people get and how many dreams you help them achieve.” By this definition, Brown’s legacy is

[…] “Pon de Replay,” L.A. told Jay-Z not to let Rihanna leave the building until the contract was signed. Jay-Z and his team closed a seven-album deal, and since then, she’s sold nearly 25 million albums in the U.S. alone, while the biggest of her seven tours, 2013’s Diamonds World Tour, grossed nearly $142 million on 90 dates.

[…] Tevin Campbell, The Winans, Patti Austin, Tamia, Tata Vega and Quincy himself – when he was 19. “He mentored me and taught me the business,” Brown says of Quincy. “He made sure if I was going to be in the business, I was going to learn every part of the business.”

1. Summer Rain by Carl Thomas 2. Kiss Kiss by Chris Brown Feat T Pain 3

1. Summer Rain by Carl Thomas
2. Kiss Kiss by Chris Brown feat. T Pain
3. You Know What's Up by Donell Jones
4. I Believe by Fantasia (Rhythm and Blues)
5. Pyramids (Explicit) by Frank Ocean
6. Under The Sea by The Little Mermaid
7. Do What It Do by Jamie Foxx
8. Slow Jamz by Twista feat. Kanye West and Jamie Foxx
9. Calling All Hearts by DJ Cassidy feat. Robin Thicke & Jessie J
10. I'd Really Love To See You Tonight by England Dan & John Ford Coley
11. I Wanna Be Loved by Eric Benet
12. Where Does The Love Go by Eric Benet with Yvonne Catterfeld
13. Freek'n You by Jodeci (Rhythm and Blues)
14. If You Think You're Lonely Now by K-Ci Hailey of Jodeci
15. All The Things (Your Man Don't Do) by Joe
16. All Or Nothing by Joe (Rhythm and Blues)
17. Do It Like A Dude by Jessie J
18. Make You Sweat by Keith Sweat
19. Forever, For Always, For Love by Luther Vandross
20. The Glow Of Love by Luther Vandross
21. Nobody But You by Mary J. Blige
22. I'm Going Down by Mary J. Blige
23. I Like by Montell Jordan feat. Slick Rick
24. If You Don't Know Me By Now by Patti LaBelle
25. There's A Winner In You by Patti LaBelle
26. When A Woman's Fed Up by R. Kelly
27. I Like by Shanice
28. Hot Sugar by Tamar Braxton (Rhythm and Blues); 3005 (clean) by Childish Gambino
29.

University of Oklahoma Graduate College Performing Gender: Hell Hath No Fury Like a Woman Horned a Thesis Submitted to the Gradu

UNIVERSITY OF OKLAHOMA GRADUATE COLLEGE. PERFORMING GENDER: HELL HATH NO FURY LIKE A WOMAN HORNED. A THESIS SUBMITTED TO THE GRADUATE FACULTY in partial fulfillment of the requirements for the Degree of MASTER OF ARTS. By GLENN FLANSBURG. Norman, Oklahoma, 2021.

PERFORMING GENDER: HELL HATH NO FURY LIKE A WOMAN HORNED. A THESIS APPROVED FOR THE GAYLORD COLLEGE OF JOURNALISM AND MASS COMMUNICATION BY THE COMMITTEE CONSISTING OF Dr. Ralph Beliveau, Chair; Dr. Meta Carstarphen; Dr. Casey Gerber. © Copyright by GLENN FLANSBURG 2021. All Rights Reserved.

TABLE OF CONTENTS
Abstract
Introduction
Heavy Metal Reigns…and Quickly Dies
Music as Discourse
The Hegemony of Heavy Metal
Theory
Encoding/Decoding Theory
Feminist Communication

Exhibits 5 Through 8 to Declaration of Katherine A. Moerke

10-PR-16-46 Filed in First Judicial District Court 12/5/2016 6:27:08 PM Carver County, MN EXHIBIT 5

Reed Smith LLP 599 Lexington Avenue New York, NY 10022-7650 Jordan W. Siev Tel +1 212 521 5400 Direct Phone: +1 212 205 6085 Fax +1 212 521 5450 Email: [email protected] reedsmith.com

October 17, 2016 By Email ([email protected]) Laura Halferty Stinson Leonard Street 150 South Fifth Street Suite 2300 Minneapolis, MN 55402 Re: Roc Nation LLC as Exclusive Rights Holder to Assets of the Estate of Prince Rogers Nelson

Dear Ms. Halferty: Roc Nation Musical Assets Artist Bremer May 27 Letter Nation, and its licensors, licensees and assigns, controls and administers certain specific rights in connection with various Artist Musical Assets. Roc Nation does so pursuant to agreements between the relevant parties including, but not limited to, that certain exclusive distribution agreement between Roc MPMusic SA., on the one hand, and NPG Records, Inc. NR NPG Distribution Agreement recordings and other intellectual property rights. The Distribution Agreement provides that the term of the Distribution Agreement is the longer of three years or full recoupment of monies advanced under the Distribution Agreement. As neither of these milestones has yet occurred, the Distribution Agreement remains in full force and effect. By way of background, and as highlighted in the May 27 Letter, Roc Nation and NPG have enjoyed a successful working relationship that has included, among other things, the Distribution involvement of Roc Nation in various aspects o -owned music streaming service.

Bad Habit Song List

BAD HABIT SONG LIST
Artist – Song
4 Non Blondes – What’s Up
Alanis Morissette – You Oughta Know
Alanis Morissette – Crazy
Alanis Morissette – You Learn
Alanis Morissette – Uninvited
Alanis Morissette – Thank You
Alanis Morissette – Ironic
Alanis Morissette – Hand In My Pocket
Alice Merton – No Roots
Billie Eilish – Bad Guy
Bobby Brown – My Prerogative
Britney Spears – Baby One More Time
Bruno Mars – Uptown Funk
Bruno Mars – 24K Magic
Bruno Mars – Treasure
Bruno Mars – Locked Out of Heaven
Chris Stapleton – Tennessee Whiskey
Christina Aguilera – Fighter
Corey Hart – Sunglasses at Night
Cyndi Lauper – Time After Time
David Guetta – Titanium
Deee-Lite – Groove Is In The Heart
Dishwalla – Counting Blue Cars
DNCE – Cake By the Ocean
Dua Lipa – One Kiss
Dua Lipa – New Rules
Dua Lipa – Break My Heart
Ed Sheeran – Blow
Elle King – Ex’s & Oh’s
En Vogue – Free Your Mind
Eurythmics – Sweet Dreams
Fall Out Boy – Beat It
George Michael – Faith
Guns N’ Roses – Sweet Child O’ Mine
Hailee Steinfeld – Starving
Halsey – Graveyard
Imagine Dragons – Whatever It Takes
Janet Jackson – Rhythm Nation
Jessie J – Price Tag
Jet – Are You Gonna Be My Girl
Jewel – Who Will Save Your Soul
Jo Dee Messina – Heads Carolina, Tails California
Jonas Brothers – Sucker
Journey – Separate Ways
Justin Timberlake – Can’t Stop The Feeling
Justin Timberlake – Say Something
Katy Perry – Teenage Dream
Katy Perry – Dark Horse
Katy Perry – I Kissed a Girl
Kings Of Leon – Sex On Fire
Lady Gaga – Born This Way
Lady Gaga – Bad Romance
Lady Gaga – Just Dance
Lady Gaga – Poker Face
Lady Gaga – Yoü and I
Lady Gaga – Telephone
Lady Gaga – Shallow
Letters to Cleo – Here and Now
Lizzo – Truth Hurts
Lorde – Royals
Madonna – Vogue
Madonna – Into The Groove
Madonna – Holiday
Madonna – Borderline
Madonna – Lucky Star
Madonna – Ray of Light
Meghan Trainor – All About That Bass
Michael Jackson – Dirty Diana
Michael Jackson – Billie Jean
Michael Jackson – Human Nature
Michael Jackson – Black Or White
Michael Jackson – Bad
Michael Jackson – Wanna Be Startin’ Something
Michael Jackson – P.Y.T.

Modeling Musical Influence Through Data

Modeling Musical Influence Through Data. Citable link: http://nrs.harvard.edu/urn-3:HUL.InstRepos:38811527. Terms of Use: This article was downloaded from Harvard University’s DASH repository, and is made available under the terms and conditions applicable to Other Posted Material, as set forth at http://nrs.harvard.edu/urn-3:HUL.InstRepos:dash.current.terms-of-use#LAA

Abstract: Musical influence is a topic of interest and debate among critics, historians, and general listeners alike, yet to date there has been limited work done to tackle the subject in a quantitative way. In this thesis, we address the problem of modeling musical influence using a dataset of 143,625 audio files and a ground truth expert-curated network graph of artist-to-artist influence consisting of 16,704 artists scraped from AllMusic.com. We explore two audio content-based approaches to modeling influence: first, we take a topic modeling approach, specifically using the Document Influence Model (DIM) to infer artist-level influence on the evolution of musical topics. We find the artist influence measure derived from this model to correlate with the ground truth graph of artist influence. Second, we propose an approach for classifying artist-to-artist influence using siamese convolutional neural networks trained on mel-spectrogram representations of song audio. We find that this approach is promising, achieving an accuracy of 0.7 on a validation set, and we propose an algorithm using our trained siamese network model to rank influences.

Song & Music in the Movement

Transcript: Song & Music in the Movement. A Conversation with Candie Carawan, Charles Cobb, Bettie Mae Fikes, Worth Long, Charles Neblett, and Hollis Watkins, September 19 – 20, 2017.

Tuesday, September 19, 2017. Song_2017.09.19_01TASCAM

Charlie Cobb: [00:41] So the recorders are on and the levels are okay. Okay. This is a fairly simple process here and informal. What I want to get, as you all know, is conversation about music and the Movement. And what I'm going to do – I'm not giving elaborate introductions. I'm going to go around the table and name who's here for the record, for the recorded record. Beyond that, I will depend on each one of you in your first, in this first round of comments to introduce yourselves however you wish. To the extent that I feel it necessary, I will prod you if I feel you've left something out that I think is important, which is one of the prerogatives of the moderator. [Laughs] Other than that, it's pretty loose going around the table – and this will be the order in which we'll also speak – Chuck Neblett, Hollis Watkins, Worth Long, Candie Carawan, Bettie Mae Fikes. I could say things like, from Carbondale, Illinois and Mississippi and

Worth Long: Atlanta.

Cobb: Durham, North Carolina. Tennessee and Alabama, I'm not gonna do all of that. You all can give whatever geographical description of yourself within the context of discussing the music. What I do want in this first round is, since all of you are important voices in terms of music and culture in the Movement – to talk about how you made your way to the Freedom Singers and freedom singing.

Party at the Limit Song List

Party At The Limit Song List
Walking on Sunshine by Katrina and the Waves
Shook Me All Night Long by AC/DC
Wagon Wheel by Darius Rucker
Route 66 by Nat King Cole
Rock Steady by The Whispers
Play that Funky Music by Wild Cherry
Humble and Kind by Tim McGraw
Hole in the Wall by Mel Waiters
Honey I’m Good by Andy Grammer
Got My Whiskey by Mel Waiters
Don’t Know Why by Norah Jones
Brown Eyed Girl by Van Morrison
Boogie Oogie Oogie by A Taste of Honey
Bad Girls by Donna Summer
Bang Bang by Ariana Grande, Nicki Minaj, Jessie J
At Last by Etta James
Anniversary by Tony! Toni! Toné!
All I Do is Win by DJ Khaled
Bad Boys for Life Intro
EWF Intro
Lose Yourself Intro
Blame it by Jamie Foxx
Cake by the Ocean by DNCE
Can’t Feel My Face by The Weeknd
Get Right Back to My Baby by Vivian Green
Free Falling by Tom Petty
Footsteps in the Dark by Isley Brothers
Hey Ya by Andre 3000
Hotline Bling by Drake
Just the Two of Us by Bill Withers
Isn’t She Lovely by Stevie Wonder
Ribbon in the Sky by Stevie Wonder
Natural Woman by Aretha Franklin
Livin La Vida Loca by Ricky Martin
Love on Top by Beyonce
Ordinary People by John Legend
On and On by Erykah Badu
Please Don’t Stop the Music by Rihanna
Real Love by Mary J Blige
Just Fine by Mary J Blige
Crazy in Love by Beyonce
Deja Vu by Beyonce
Stand by Me by Ben E King
Staying Alive by the Bee Gees
Stay With Me by Sam Smith
All of Me by John Legend
Starboy by The Weeknd
Smooth Operator by Sade
To Be Real by Chaka Khan
Sweet Love by Anita Baker
Chicken Fried by Zac Brown Band
I Gotta Feeling by Black Eyed Peas
OMG

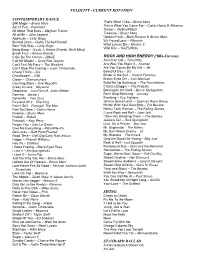

Velocity Current Rotation

VELOCITY - CURRENT ROTATION

CONTEMPORARY DANCE
24K Magic – Bruno Mars
Ain’t It Fun – Paramore
All About That Bass – Meghan Trainor
All of Me – John Legend
Applause – Lady Gaga
Blurred Lines – Robin Thicke/Pharrell
Born This Way – Lady Gaga
Bang Bang – Jessie J, Ariana Grande, Nicki Minaj
Break Free – Ariana Grande
Cake By The Ocean – DNCE
Call Me Maybe – Carly Rae Jepsen
Can't Feel My Face – The Weeknd
Can’t Stop The Feeling – Justin Timberlake
Cheap Thrills – Sia
Cheerleader – OMI
Closer – Chainsmokers
Counting Stars – OneRepublic
Crazy In Love – Beyoncé
Despacito – Luis Fonsi ft. Justin Bieber
Domino – Jessie J
Dynamite – Taio Cruz
Ex’s and Oh’s – Elle King
Feel It Still – Portugal. The Man
Feel So Close – Calvin Harris
Finesse – Bruno Mars
Fireball – Pitbull
Firework – Katy Perry
Forget You – Cee Lo Green
Give Me Everything – Pitbull/Ne-Yo
[…]
That's What I Like – Bruno Mars
This is What You Came For – Calvin Harris ft. Rihanna
Timber – Ke$ha/Pitbull
Treasure – Bruno Mars
Uptown Funk – Mark Ronson ft. Bruno Mars
We Found Love – Rihanna
What Lovers Do – Maroon 5
Wild One – Sia/Flo Rida

ROCK AND HIGH ENERGY (‘60s-Current)
American Girl – Tom Petty
Any Way You Want It – Journey
Are You Gonna Be My Girl – Jet
Beautiful Day – U2
Blister in the Sun – Violent Femmes
Brown Eyed Girl – Van Morrison
Build Me Up Buttercup – The Foundations
Chelsea Dagger – The Fratellis
Dancing In the Dark – Bruce Springsteen
Don’t Stop Believing – Journey
Everlong – Foo Fighters
Gimme Some Lovin’ – Spencer Davis Group
Hit Me With Your Best Shot – Pat Benatar
Honky Tonk Women – The Rolling Stones
I Love Rock and Roll – Joan Jett
I Saw Her Standing There – The Beatles
Jessie’s Girl – Rick Springfield
Livin’ On A Prayer – Bon Jovi
Mr.