Image Based Representation for 3D Content Delivery

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

Gwani Software TRAINING Department (Knowledge & Expertise) Corel Draw Curriculum

Gwani Software Training Department (Knowledge & Expertise) Corel Draw Curriculum, December 2011. © Gwani Software Ltd, 2011. All rights reserved.
Disclaimer: CorelDraw is a trademark of Corel Inc., and any other trademark used belongs to its rightful owner.
Warning: This document is the exclusive property of Gwani Software Ltd. Permission is granted to trainees, instructors, supervisors and affiliated academies of Gwani Software to print and copy this document, and likewise to anyone for non-commercial distribution. With the exception of the above permission, no part of this document may be printed, copied, modified or used by anyone unless prior written permission is obtained from Gwani Software. Contravening this provision may lead to legal proceedings in a court of law.
Proposed by Abubakar Muhammad [email protected] Approved for usage by Al-Ameen Abubakar, Director Training, this 5th day of Muharram, 1433, equivalent to 1/12/2011.
Gwani Software TRAINING DEPARTMENT, Corel Draw
General Description: This course is intended to provide basic and advanced skills in designing graphics in CorelDraw.
Aims: The aims of this course are:
1. To drill the trainee on the basic operations in Corel Draw.
2. To avail the trainee with the knowledge of Corel Draw's workspace and how to create art.
3. To drill the trainee on skills needed to create logo and banner.
4. To drill the trainee on skills needed to create layout.
5. To drill the trainee on recording and using macro in drawings.
6. To drill the trainee on how to work with tables & calendar in Corel Draw.
7. To drill the trainee on how to export drawings into other formats. -

R-Photo User's Manual

User's Manual © R-Tools Technology Inc 2020. All rights reserved. www.r-tt.com No part of this User's Manual may be copied, altered, or transferred to, any other media without written, explicit consent from R-tools Technology Inc. All brand or product names appearing herein are trademarks or registered trademarks of their respective holders. R-tools Technology Inc. has developed this User's Manual to the best of its knowledge, but does not guarantee that the program will fulfill all the desires of the user. No warranty is made in regard to specifications or features. R-tools Technology Inc. retains the right to make alterations to the content of this Manual without the obligation to inform third parties.
Table of Contents
I Start
II Quick Start Guide in 3 Steps
1 Step 1. Disk Selection
2 Step 2. Files Marking
3 Step 3. Recovery
III Features
1 File Sorting
2 File Search -

Designing and Developing a Model for Converting Image Formats Using Java API for Comparative Study of Different Image Formats

International Journal of Scientific and Research Publications, Volume 4, Issue 7, July 2014, ISSN 2250-3153. Designing and developing a model for converting image formats using Java API for comparative study of different image formats. Apurv Kantilal Pandya*, Dr. CK Kumbharana** * Research Scholar, Department of Computer Science, Saurashtra University, Rajkot. Gujarat, INDIA. Email: [email protected] ** Head, Department of Computer Science, Saurashtra University, Rajkot. Gujarat, INDIA. Email: [email protected]
Abstract- Image is one of the most important techniques to represent data very efficiently and effectively utilized since ancient times. But to represent data in image format has number of problems. One of the major issues among all these problems is size of image. The size of image varies from equipment to equipment i.e. change in the camera and lens puts tremendous effect on the size of image. High speed growth in network and communication technology has boosted the usage of image drastically and transfer of high quality image from one point to another point is the requirement of the time, hence image compression has remained the consistent need of the domain.
Different requirement of compression in different area of image has produced various compression algorithms or image file formats with time. These formats includes [2] ANI, ANIM, APNG, ART, BMP, BSAVE, CAL, CIN, CPC, CPT, DPX, ECW, EXR, FITS, FLIC, FPX, GIF, HDRi, HEVC, ICER, ICNS, ICO, ICS, ILBM, JBIG, JBIG2, JNG, JPEG, JPEG 2000, JPEG-LS, JPEG XR, MNG, MIFF, PAM, PCX, PGF, PICtor, PNG, PSD, PSP, QTVR, RAS, BE, JPEG-HDR, Logluv TIFF, SGI, TGA, TIFF, WBMP, WebP, XBM, XCF, XPM, XWD. Above mentioned formats can be used to store different kind of -

Custom File Type Detection Technical Documentation

Technical Article: DriveLock – Custom file type detection. Technical Documentation, DriveLock SE 2017.
Table of Contents
INTRODUCTION
CUSTOM FILE TYPE DEFINITIONS
CREATING CUSTOM FILE TYPE DEFINITIONS
CUSTOM DLL DEVELOPMENT
Introduction
DriveLock features access control to certain file types based on the content of these files. Using file filtering, an administrator can configure which file types are allowed to be read from and/or written to removable storage. Because file types in Windows are basically determined by a file extension, any user or malicious software can simply rename files to another file extension; for example, a user could rename a music collection from *.MP3 to *.DOC so that a file filter "thinks" it must be a collection of probably allowed Word documents. To counter this type of attack, DriveLock's file filter scans the content of each file to ensure the file content is from the type the file extension -
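The content-based check described in the DriveLock excerpt above can be illustrated with a minimal Python sketch. It is not DriveLock's implementation or API: the function names (`sniff_type`, `extension_matches_content`) and the small signature table are assumptions made for illustration, using a few widely known leading-byte signatures. The idea is simply to compare the type implied by a file's first bytes with the type claimed by its extension.

```python
from pathlib import Path
from typing import Optional

# A few well-known leading-byte ("magic") signatures. This table is a small
# illustrative subset chosen for this sketch, not DriveLock's definitions.
MAGIC_SIGNATURES = {
    "pdf": b"%PDF-",
    "png": b"\x89PNG\r\n\x1a\n",
    "gif": b"GIF8",
    "jpg": b"\xff\xd8\xff",
    "zip": b"PK\x03\x04",
    "doc": b"\xd0\xcf\x11\xe0\xa1\xb1\x1a\xe1",  # OLE2 container (legacy Word)
    "mp3": b"ID3",                               # MP3 carrying an ID3v2 tag
}

# Extensions that name the same format (hypothetical helper table).
EXTENSION_ALIASES = {"jpeg": "jpg"}


def sniff_type(header: bytes) -> Optional[str]:
    """Return the type whose signature starts the header, or None if unknown."""
    for name, magic in MAGIC_SIGNATURES.items():
        if header.startswith(magic):
            return name
    return None


def extension_matches_content(filename: str, header: bytes) -> bool:
    """True only if the extension's claimed type equals the sniffed type."""
    claimed = Path(filename).suffix.lower().lstrip(".")
    claimed = EXTENSION_ALIASES.get(claimed, claimed)
    detected = sniff_type(header)
    # Unknown content is treated as a mismatch (a deny-by-default choice).
    return detected is not None and detected == claimed


if __name__ == "__main__":
    # An MP3 renamed to .doc still starts with the ID3 tag, so it is rejected.
    print(extension_matches_content("song.doc", b"ID3\x04\x00\x00\x00"))
    print(extension_matches_content("report.pdf", b"%PDF-1.7\n"))
```

In this sketch a renamed file fails the check because its leading bytes still identify the original format. Treating unrecognized content as a mismatch is one possible policy; a real filter would need a far larger signature set and would inspect more than the first few bytes.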

The Webp Manual Was Written by Jeremy Wagner

Imprint
© 2018 Smashing Media AG, Freiburg, Germany. ISBN (PDF): 978-3-945749-67-8. Cover Design: Ricardo Gimenes. eBook Production: Cosima Mielke. Tools: Elja Friedman. Syntax Highlighting: Prism by Lea Verou. Idea & Concept: Smashing Media AG. The WebP Manual was written by Jeremy Wagner.
Table of Contents
Foreword
WebP Basics
Performance
Converting Images To WebP
Using WebP Images
In Closing
Appendix
Thanks
About The Author
Foreword
Performance matters now more than ever. What we send over the wire, how we send it, and how much of it we send matters. Because users matter. Web performance is a vast subject with many nooks and crannies, and all deserve their due attention. Of serious concern, however, is the role of media in performance, specifically images. Images are powerful. Engaging visuals evoke visceral feelings. -

Image and Video Compression Coding Theory Contents

Image and Video Compression Coding Theory
Contents
1 JPEG
1.1 The JPEG standard
1.2 Typical usage
1.3 JPEG compression
1.3.1 Lossless editing
1.4 JPEG files
1.4.1 JPEG filename extensions
1.4.2 Color profile
1.5 Syntax and structure
1.6 JPEG codec example
1.6.1 Encoding
1.6.2 Compression ratio and artifacts
1.6.3 Decoding
1.6.4 Required precision
1.7 Effects of JPEG compression
1.7.1 Sample photographs
1.8 Lossless further compression
1.9 Derived formats for stereoscopic 3D
1.9.1 JPEG Stereoscopic
1.9.2 JPEG Multi-Picture Format
1.10 Patent issues
1.11 Implementations
1.12 See also
1.13 References -

Srfax Files Supported

SRFax Supported File Formats
We support the following file formats for sending faxes by email or from our web site interface:
Compressed Files
• Zipped Files - zip
A compressed file can contain any number of files in the formats listed below.
Document Files
• Adobe Acrobat File - pdf
• Microsoft Word Document - doc, docx
• Microsoft Excel Spreadsheet - xls, xlsx
• Microsoft PowerPoint Presentation - ppt
• Microsoft Rich Text Format - rtf
• Microsoft WordPad Document - wri
• Text File - txt
Web Files
• HTML files - htm, html
Image Files
• Adobe Digital Negative - dng
• Adobe Encapsulated PostScript - eps, epi, ps, ai
• Adobe Photoshop Document - psd, pdd
• Adobe Portable Document Format - pdf
• Aldus Placeable metafiles - apm
• Alias Wavefront PIX - pix
• Amiga Interchange File - iff, lbm
• AT&T Multigen ICN - icn
• Biorad confocal image - pic
• CALS type 1 and type 2 - cal, cals
• Canon photo RAW CRW - crw
• Canon photo RAW CR2 - cr2
• Cineon Image File Format - dpx
• Compuserve RLE - rle
• Creo photo RAW - mos
• DCX - Multipaged PCX - dcx
• Dicom Medical Image - dcm, dicom, dic, acr
• Direct Draw Surface - dds
• Dr. Halo - cut
• Enhanced Metafiles - emf
• FlashPix Format - fpx, mix
• FUJI photo RAW - raf
• GEM Raster Image - gem, img
• Graphics Interchange Format - gif
• Group3 1D Fax File - g3
• Group4 Fax File - g4
• HDRI Radiance - hdr, rgbe, xyze
• ICA Image Object Content Architecture Format - ica, mda, psg
• JPEG Network Graphic - jng
• Joint Bi-level Image Experts Group - jbg, jbig
• Joint Bi-level Image Experts Group -

Autonomy Information Connectivity

Technical Brief: Information Connectivity. Autonomy Supports Virtually Any Format, Source and Language. Every enterprise has a complex mix of off-the-shelf and custom applications, databases, information technologies and file formats. Autonomy is capable of aggregating any form of structured, semi-structured and unstructured data, wherever it is located, regardless of language.
Supported Data Formats
Support for over 1000 formats including:
Archive
• 7-Zip (7Z) • Apple Disk Image (DMG) • BinHex (HQX) • Bzip2 Compressed Archive Format (BZIP2) • GZIP (GZ) • ISO-9660 CD Disc Image Format (ISO) • Java Archive (JAR) • Legato EMailXtender Archive (EMX) • MacBinary Container (BIN)
• Graphics Interchange Format (.gif) • HP Graphics Language (.hpg) • Joint Photographic Experts Group (.jpeg) • Lotus AMIDraw Graphics (.sdw) • Lotus Pic (.pic) • Macintosh Raster (.pic, .pict) • MacPaint (.pntg) • Microsoft Document Imaging (.mdi) • Microsoft Office Drawing (.mso) • Nero Encrypted File (.nef)
• UNIS Composer 669 Module (.669) • Six Channel Module (.6cm) • Eight Channel Module (.8cm) • Amiga OctaMed Music File (.8med) • Amiga 8-Bit Sound File (.8svx) • Advanced Audio Coding File (.aac) • ABC Music Notation (.abc) • Audio Codec 3 File (.ac3) • ADPCM Compressed Audio File (.act)
• Raw Audio CD Data (.cdr) • Audition Loop (.cel) • Creative Music Format (.cmf) • Sony Ericsson Protected Content File (.copy) • Cubase Project (.cpr) • Cubase Waveform File (.csh) • cVideo (.cvc) • Cakewalk SONAR Project (.cwp) • OPL2 FM Audio File (.d00) • OPL2 FM Audio File (.d01) -

Syllabi of the Course

Rayat Shikshan Sanstha's Yashavantrao Chavan Institute of Science, Satara. Undergraduate Programme B.Sc. I Animation Science (Entire). Syllabi of the course. Choice based credit system syllabus (to be implemented from academic year 2018-21). Department of Animation Science.
Index
1. Preamble
2. General Objectives of Programme
3. General Programme Outcomes
4. Programme Specific Objectives
5. Programme Outcomes (Subject)
6. Curriculum Structure and detailed Syllabi of B.Sc. I
Preamble: Animation is a leading course in today's world. It has very good prospects and gives a broad platform to student creativity. The course has wide scope. The syllabus is designed by considering the needs of different industries and the present scenario in the animation industry. While designing the syllabus, the intellectual level of UG students has been considered. Students who do not know about animation will be able to understand it and work independently in the industrial world after completing their graduate degree. Animation is not only the creation of cartoons; it also plays an important role in the automobile industry, the mechanical industry, web development, different coding, VFX, graphics designing, the film industry, etc. Bachelor of Animation is among the most demanded courses in today's world. In the very recent trend India is emerging in the field of "Animation", and this would create very large employment in India; many big companies, such as Disney, are outsourcing their animation work to India. Animation as a profession can be the best decision for those who are computer lovers, who can think differently and innovatively, and who have the capacity to present what they think. -

50 Held for 3Rd, 4Th, 5Th, and 6Th Grades at District, Area and State Levels

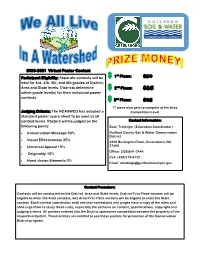

2020-2021 Virtual Poster Contest
Prizes: 1st Place: $50. 2nd Place: $35. 3rd Place: $25. 1st place also gets to compete at the Area Competition Level.
Participant Eligibility: Separate contests will be held for 3rd, 4th, 5th, and 6th grades at District, Area and State levels. Districts determine which grade level(s) for their individual poster contests.
Judging Criteria: The NCASWCD has adopted a standard poster score sheet to be used at all contest levels. Posters will be judged on the following points:
• Conservation Message 50%
• Visual Effectiveness 25%
• Universal Appeal 10%
• Originality 10%
• Hand-drawn Elements 5%
Contact Information: Sean Trollinger (Education Coordinator), Guilford County Soil & Water Conservation District, 3309 Burlington Road, Greensboro, NC 27405. Office: (336)641-2444. Cell: (336)279-6122. Email: [email protected]
Contest Procedure: Contests will be conducted on the District, Area and State levels. District First Place winners will be eligible to enter the Area contests, and Area First Place winners will be eligible to enter the State contest. Each contest coordinator shall see that contestants and judges have a copy of the rules and shall urge them to study these rules, especially the sections on content, specifications, copyright and judging criteria. All posters entered into the District sponsored competition become the property of the respective District. These entities are entitled to use these posters for promotion of the Conservation District program.
Theme: "We All Live In A Watershed"
Specifications:
• Posters should be designed along the following specifications. See Poster Pointers for tips.
• Posters shall be designed so that if they were to be printed on paper they would be no larger than 24 X 36 inches. -

Supported File Formats

Supported File Formats
Supported in ACDSee 12 and ACDSee Pro 3
Image read support formats
ABR - Adobe™ brush format
ANI - Windows animated cursor - Shows cursor animation
ART - AOL ART - Still images
ARW - Sony RAW
BMP - Windows Bitmap - Windows and OS/2; 2/8/16/24/32 bpp; RLE and uncompressed
CNV - Canvas - Thumbnails only
CR2 - Canon RAW CR2
cRAW - Sony cRAW
CRW - Canon RAW CRW
CUR - Windows cursor
CVX - Canvas - Thumbnails only
DCR - Kodak RAW DCR
DCX - Multiple-page PCX - All sub-types and multiple pages supported
DNG - Digital negative - An open-source archival format for RAW files
DJV - DjVu
EMF - Enhanced Metafile Format - Win32 enhanced placeable metafiles
ERF - Epson RAW
EPS - Encapsulated PostScript - Displays embedded thumbnail (TIFF formats) and first page only
FPX - FlashPix - Multiple resolution support
GIF - Graphics Interchange Format - Single page and animated; 87a and 89a
HDR - Creo RAW
ICN - AT&T ICN
ICO - Windows icon - Shows multiple resolutions as separate pages
IFF - EA/Amiga Interchange File Format - 1-24 bpp, including HAM and HAM8; multiple pages and animations not supported
JBR - Corel® brush format
JP2 - JPEG2000 - 48-bit support
JPC - JPEG2000 - 48-bit support
JPG - JPEG JFIF - JFIF and Adobe™ CMYK
KDC - Kodak™ KDC - Reads both the full image and the embedded thumbnail
MAG - MAG - All sub-types supported
MRW - Konica Minolta RAW
NEF - Nikon RAW
ORF - Olympus RAW
PBM - Portable BitMap
PBR - Corel® Paint Shop Pro® brush format
PCD - Kodak™ PhotoCD - Up to 3072x2048 resolution (16BASE)
PCX - ZSoft Publishers Paintbrush - All sub-types supported
PEF - Pentax RAW PEF, Samsung RAW
PGM - Portable GrayMap
PIC - SoftImage PIC - All sub-types supported
PCT - Macintosh PICT
PDF - Adobe Acrobat documents - Can read all pages. -

CE 874 - Secure Software Systems

CE 874 - Secure Software Systems: Fuzzing. Mehdi Kharrazi, Department of Computer Engineering, Sharif University of Technology. Spring 1398.
Acknowledgments: Some of the slides are fully or partially obtained from other sources. A reference is noted on the bottom of each slide, when the content is fully obtained from another source. Otherwise a full list of references is provided on the last slide.
Program Analysis
• How could we analyze a program (with source code) and look for problems?
• How accurate would our analysis be without executing the code?
• If we execute the code, what input values should we use to test/analyze the code?
• What if we don't have the source code?
https://www.viva64.com
Fuzzing. By: Joxean Koret
Vulnerability Discovery Methodologies – White Box [Sutton'06]
• Source code review
• Static analysis
• Pros: Coverage
• Cons: Dependencies; Are we testing reality? (Compiler issues, Implementation scenarios)
Vulnerability Discovery Methodologies – Black Box
• Reverse engineering (Static analysis)
• Pros: Complex vulnerabilities uncovered
• Cons: Time consuming; Deep knowledge required
• Fuzzing (Dynamic analysis)
• Pros: Relatively simple and realistic
• Cons: Coverage; Complex vulnerabilities missed