Author(S) Prasert, Sunyaruk Title Multi Angle

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


June 2015 Some Thoughts from Bill Wow! It Has Been a Busy Spring

The Sight-Loss Support Group of Central Pennsylvania, Inc. Turning Darkness into Light Since 1982 [email protected] www.slsg.org 814-238-0132 June 2015 Some Thoughts from Bill Wow! It has been a busy spring. We have accomplished so much it's hard to put it into a few words. We have closed our “bricks and mortar” office and started our virtual office, not an easy task after 32 years of record keeping. Thanks to all who helped in the move. I want to especially thank Roger Gaumond, who has gone above and beyond in: managing the move of our inventory to a storage area, researching and obtaining a mobile "hot spot" telephone system, obtaining Go-To-Meeting software so we can communicate with remote individuals and organizations, setting up our first Community Information Presentations, and generally keeping us all going. We started a new Outreach program to present who we are, what we do, and how we do it. This has been met with a lot of enthusiasm both internally and externally. So far, we have gone to several area retirement homes to give this talk and to present guest speakers on subjects like the Audio-Description program, the Puppy Raiser program, and North Central Sight Services. Thank you to Roger (again), Eileen Rivoir, Nanette Anslinger, Rana Arnold, Susan Kennedy, and Heather Engel. Susan developed a brief survey and a method of capturing data from those surveys taken after presentations, to monitor and measure how we are doing. This is vital, for if we do not measure we cannot report the results to United Way.

Spatial Analysis of Crime Hot Spots Using Gis in University Off- Campus Environments, Ondo State, Nigeria

Middle States Geographer; 2019, 52:70-81 SPATIAL ANALYSIS OF CRIME HOT SPOTS USING GIS IN UNIVERSITY OFF- CAMPUS ENVIRONMENTS, ONDO STATE, NIGERIA Abiodun Daniel Olabode Department of Geography and Planning Sciences, Adekunle Ajasin University, PMB 001, Akungba-Akoko, Ondo, Nigeria. ABSTRACT: The university students in this study experience high crime rate over the years and often subject to feeling of insecurities. Hence, the need for this study to develop a hot spot map for crime locations with a view to forming points of security alert for crime reduction in the study area. Primary and secondary data were used in this study. Primary data were collected directly from students who reside off-campus. Data were collected through the use of questionnaire focusing on type, location and crime occurrence in the study area. The coordinates of the study locations were obtained with handheld Global Positioning System (GPS). However, secondary data included base map of the study area. Purposive sampling technique was adopted to select 5 off-campus locations. Proportionate sampling was used to select 190 hostels, one-third of existing 570 hostels. Systematic random sampling was further adopted in selecting 190 students in the study location. Such that in each hostel, a student was administered with a copy of questionnaire and a total of 190 copies of questionnaire were administered in the study. Methods of simple percentages, 3-points likert scale and geo-spatial techniques were used for data analysis. Results reveal burglary, theft, rape and assault as major crimes. The causes of crimes are peer group influence, drug abuse, cultism, poverty, and overpopulation. -

Hot Spot Schedule 2018

HOT SPOT PRESENTED BY Free weekend events on the waterfront July– September, 2018 www.friendsofwaterfrontseattle.org/events The Thermals at 2017 KEXP Rocks the Dock Join Friends of Waterfront Seattle this summer for free community events for all ages at Waterfront Park on Pier 58! Friends of Waterfront Seattle is the nonprofit partner to the City of Seattle, helping to execute, steward, and program the waterfront park. Our mission is to build awareness, fundraise, and lead strategies that support the long-term health of the park, all with the goal to realize the park’s social, environmental, economic, and cultural potential. Hot Spot is a pilot program created by Friends that is enhancing Seattle’s central waterfront now with activities that provide a preview of our urban shoreline’s future as a vibrant park for all to enjoy. Hot Spot brings a dynamic mix of live music, performance, sports, and more to existing Waterfront Park during July through September weekends. July 2018 Events 2017 What’s Poppin’ Ladiez?! KEXP Rocks the Dock JUL 7 Awesome local bands kick off the Hot Spot season with the third annual KEXP Rocks the Dock concert hosted by KEXP 90.3, Seattle’s non-commercial independent radio station that produces an award-winning, 3 - 7 PM innovative blend of music for a worldwide audience. Featuring: Stas Thee Boss and Nappy Nina, Misundvrstood & Gypsy Temple, Breaks and Swells, and DJ & Emcee OCNotes Beach PARTY JUL 8 Start your summer with fun in the sun. Local bands bring reggae and surf music to Hot Spot.

View/Download Resume

MILES PHILLIPS AEA / SAG-AFTRA E-mail: [email protected] Lyric Baritone to G ADR Agency: 888-902-3414 NEW YORK Blond-Grey / Blue Website: milesphillips.com 5’10” / 190 MACBETT Banco TWELFTH NIGHT Feste QUEEN MARGARET Edward IV / Cardinal Beaufort STRANGE INTERLUDE Charlie Marsden WORDS WORDS WORDS & MUSIC Antipholus S. / Mark Antony / Guiderius / Feste THE MERCHANT OF VENICE / THE DOCTOR OF ROME Bassanio PRIDE RIVER CROSSING: A SPOON RIVER FOR A NEW CENTURY ♦ 10 Roles ELEGIES FOR ANGELS PUNKS AND RAGING QUEENS Bud BROADWAY MUSICALS OF 1930 Soloist MOMENTO MORI Doctor Karl / Father Karl CONCERTS / READINGS / WORKSHOPS CAMELOT Arthur CLASS OF ‘68 Daniel NIGHT FISHING Evan PINOCCHIO OF CHELSEA Gepetto THE AGE OF INNOCENCE Larry Lefferts / Ned Winsett SOUTHERN RAIN Jack / Captain Marshall MY FAIR LADY Higgins POESCRYPT Edgar Allan Poe REGIONAL A CHRISTMAS CAROL: THE MUSICAL ♦ Ebenezer Scrooge THE SOUND OF MUSIC Captain von Trapp PENNY SERENADE Roger NOISES OFF ♦ Garry CABARET Cliff / Emcee CAMELOT ♦ Mordred ACCOMPLICE Derek / Hal ROMANCE / ROMANCE Alfred / Sam YOU’RE GONNA LOVE TOMORROW Georges / Antony / Dionysos / Prince / Hinkley JOSEPH … AMAZING … DREAMCOAT Reuben BLOOD AND GUTS: THE ROCK ORESTEIA Orestes THE BALLAD OF THE EL GIMPO CAFÉ Abel HOUSE OF FLOWERS Merlin THE THREE SISTERS Kulygin THE CONTRAST Colonel Manly DEAR WORLD Julian FILM / TV KEEPERS Father Tom (Lead) K-PAX Prot (S.I. – Kevin Spacey) RECORDING SOLO: MILES PHILLIPS – might as well be… ORIGINAL CAST: BROADWAY MUSICALS OF 1930, BLOOD AND GUTS: THE ROCK ORESTEIA,

Saddlebred Sidewalk List 1989-2018

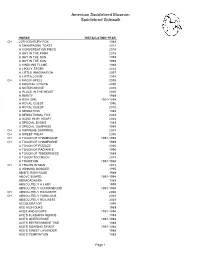

American Saddlebred Museum Saddlebred Sidewalk HORSE INSTALLATION YEAR CH 20TH CENTURY FOX 1989 A CHAMPAGNE TOAST 2011 A CONVERSATION PIECE 2014 A DAY IN THE PARK 2018 A DAY IN THE SUN 1999 A DAY IN THE SUN 1998 A KINDLING FLAME 1989 A LIKELY STORY 2014 A LITTLE IMAGINATION 2007 A LOTTA LOVIN' 1998 CH A MAGIC SPELL 2005 A MAGICAL CHOICE 2000 A NOTCH ABOVE 2016 A PLACE IN THE HEART 2000 A RARITY 1989 A RICH GIRL 1991-1994 A ROYAL QUEST 1996 A ROYAL QUEST 2010 A SENSATION 1989 A SENSATIONAL FOX 2002 A SONG IN MY HEART 2004 A SPECIAL EVENT 1989 A SPECIAL SURPRISE 1998 CH A SUPREME SURPRISE 2001 CH A SWEET TREAT 2000 CH A TOUCH OF CHAMPAGNE 1991-1994 CH A TOUCH OF CHAMPAGNE 1989 A TOUCH OF PIZZAZZ 2006 A TOUCH OF RADIANCE 1995 A TOUCH OF TENDERNESS 1989 A TOUCH TOO MUCH 2018 A TRADITION 1991-1994 CH A TRAVELIN' MAN 2012 A WINNING WONDER 1995 ABIE'S IRISH ROSE 1989 ABOVE BOARD 1991-1994 ABRACADABRA 1989 ABSOLUTELY A LADY 1999 ABSOLUTELY COURAGEOUS 1991-1994 CH ABSOLUTELY EXQUISITE 2005 CH ABSOLUTELY FABULOUS 2001 ABSOLUTELY NO LIMITS 2001 ACCELERATOR 1998 ACE HIGH DUKE 1989 ACES AND EIGHTS 1991-1994 ACE'S FLASHING GENIUS 1989 ACE'S QUEEN ROSE 1991-1994 ACE'S REFRESHMENT TIME 1989 ACE'S SOARING SPIRIIT 1991-1994 ACE'S SWEET LAVENDER 1989 ACE'S TEMPTATION 1989 Page 1 American Saddlebred Museum Saddlebred Sidewalk HORSE INSTALLATION YEAR ACTIVE SERVICE 1996 ADF STRICTLY CLASS 1989 ADMIRAL FOX 1996 ADMIRAL'S AVENGER 1991-1994 ADMIRAL'S BLACK FEATHER 1991-1994 ADMIRAL'S COMMAND 1989 CH ADMIRAL'S FLEET 1998 CH ADMIRAL'S MARK 1989 CH ADMIRAL'S MARK -

ARSC Journal

BROADWAY BABIES I LOVE NEW YORK - Vocal and instrumental 45rpm. (Struttin' Records STR 103) $.75 MARTIN CHARNIN'S MINI-ALBUM (Take Home Tunes THT 771) 7" album/$4.95; DR. JAZZ AND OTHER MUSICALS (Take Home Tunes THT 777) 12" album/$7.95; THE ROBBER BRIDEGROOM (Take Home Tunes THT 761) 7" album/$4.95; CANADA (Broadway Baby Records BBD 776) 12" album/$7.95; SNOW WHITE and BEAUTY AND THE BEAST (Take Home Tunes THT 775) 12" album/$7.95; THE SECOND SHEPHERD'S PLAY (Broadway Baby Records BBD 774) 12" album/$7.95; DOWNRIVER (Take Home Tunes THT 7811) 12" album/$7.95; THE BAKER'S WIFE (Take Home Tunes THT 772) 12" album/$8.95; NEFERTITI (Take Home Tunes THT 7810) 12" album/$8.95; TWO (Take Home Tunes THT 788) 12" album/$7.95. Available in some record stores or by mail from Take Home Tunes, Box 496, Georgetown, Connecticut. SMITHSONIAN AMERICAN THEATRE SERIES: George and Ira Gershwin's OH, KAY! (DPL 1-0310); SOUVENIRS OF HOT CHOCOLATES (P 14587). Available from Smithsonian Customer Service, P.O. Box 10230, Des Moines, Iowa 50336. $6.99 each plus 90 cents postage and handling charge. I had never heard of Steve Karmen until early this year, but I was very familiar with his disco-paean to the Empire State and its namesake city, I LOVE NEW YORK. Mr Karmen is by now a millionaire, I presume, and supporting the state he so loves, but in spite of the constant repetition of theme, a simple four-note structure, and the incessant blaring of numerous vocal versions on television and radio, I have yet to tire of hearing the piece.

Sondheim's" Into the Woods". Artsedge Curricula, Lessons And

DOCUMENT RESUME ED 477 984 CS 511 366 AUTHOR Bauernschub, Mary Beth TITLE Sondheim's "Into the Woods". ArtsEdge Curricula, Lessons and Activities. INSTITUTION John F. Kennedy Center for the Performing Arts, Washington, DC. SPONS AGENCY National Endowment for the Arts (NFAH), Washington, DC.; MCI WorldCom, Arlington, VA.; Department of Education, Washington, DC. PUB DATE 2002-00-00 NOTE 24p. AVAILABLE FROM For full text: http://artsedge.kennedy-center.org/ teaching_materials/curricula/curricula.cfm?subject_id=LNA. PUB TYPE Guides Classroom - Teacher (052) EDRS PRICE EDRS Price MF01/PC01 Plus Postage. DESCRIPTORS Class Activities; *Fairy Tales; Intermediate Grades; Lesson Plans; Middle Schools; *Music Activities; *Musical Composition; Scripts; Teacher Developed Materials; Units of Study IDENTIFIERS Musicals; National Arts Education Standards ABSTRACT This curriculum unit introduces intermediate grade and middle school students to the work of Stephen Sondheim, one of the most talented composers of the contemporary American musical theater, and teaches them about the process of writing an original musical. The unit notes that in his musical "Into the Woods" Sondheim incorporates (and distorts) elements of traditional fairy tales to create a lively and unique piece of theater. In the unit, students will create the libretto and script for an original musical based on the Grimm Brothers' fairy tale, "The Frog Prince." The unit's lesson--Sondheim's "Into the Woods": Fairy Tale Tunes--takes only two days to complete. Its classroom activities focus on improvisational techniques and small-group work. The unit provides a step-by-step detailed instructional plan for the teacher. (NKA) Reproductions supplied by EDRS are the best that can be made from the original document.

Apr – Nov 17 How to Book the Plays

Apr – Nov 17 How to book The plays Online Select your own seat online nationaltheatre.org.uk By phone 020 7452 3000 Mon – Sat: 9.30am – 8pm In person South Bank, London, SE1 9PX Mon – Sat: 9.30am – 11pm See p37 for Sunday and holiday opening times Other ways Friday Rush Follies Mosquitoes Oslo to get tickets £20 tickets are released online every Friday at 1pm Playing from 22 Aug 18 July – 28 Sep 5 – 23 Sep for the following week’s performances. For Angels in America see Ballot on p21 Day Tickets £18 / £15 tickets available in person on the day of the performance No booking fee online or in person. A £2.50 fee per transaction for phone bookings. If you choose to have your tickets sent by post, a £1 fee applies per transaction. Postage costs may vary for group and overseas bookings. Access symbols used in this brochure CAP Captioned TT Touch Tour AD Audio-Described Jane Eyre The Majority Salomé 26 Sep – 21 Oct 11 – 28 Aug 2 May – 15 July TRAVELEX £15 TICKETS The National Theatre Partner for Innovation Partner for Learning Sponsored by in partnership with Partner for Connectivity Outdoor Media Partner Official Airline Official Hotel Partner Common Barber Shop Ugly Lies the Bone 30 May – 5 Aug Chronicles Playing until 6 June 30 May – 8 July Workshops Partner The National Theatre’s Supporter for new writing Pouring Partner International Hotel Partner Image Image Partner for Lighting and Energy Sponsor of NT Live in the UK TBC TBC Angels in America Angels in America Amadeus Part One Part Two Returning 2018 Playing until 19 Aug Playing until 19 Aug 2 3 AUGUST Tue 22 7.30 Wed 23 7.30 Fri 25 7.30 Sat 26 7.30 Follies Tue 29 7.30 Wed 30 7.30 book by James Goldman Thu 31 7.30 music and lyrics by Stephen Sondheim SEPTEMBER Fri 1 7.30 Sat 2 2.00 7.30 Mon 4 7.30 Tue 5 2.00 7.30 Cast includes Wed 6 7.00 New York, 1971. -

Download Timewarp Inc's Press Kit & Rider (English)

TIMEWARP INC _ Soundsystem (Live) Timewarp inc. With many of his tracks featured in international Lounge / Freestyle / Downtempo famous compilations such as Black Coffee (Ecco Chamber), Sensuality Lounge and Campari Red Passion compilations (Sony Music), White Lounge (Music Park) and Ibiza Global Radio Moods (Irma), he rightfully won their participation in many festivals (Spring three in Vienna, where he played with Gilles Paterson & Quantic Soul Orchestra, in Croatia at San Pelegrino Festival with Dimitri from Paris and Smoove & Tuyrell and in Brasil's Tibe Festival). Timewarp inc has released 2 personal albums, two remix albums and three singles, while a great number of independent productions and remixes have been released worldwide either as maxi-singles or in various compilations and digital EPs. TIMEWARP INC _ Soundsystem (Live) Many Timewarp inc songs were included in compilations such as 'Urban Deluxe' (V2) and 'Vicious Games', "Campari Red Passion Lounge", "Sensuality Lounge" (Sony BMG, Sony Music). He has appeared as DJ Timewarp in famous 'must' clubs of Athens like Soul, Guru, Loop, Funky! Earth and other open-minded bars across Greece (B-live in Larissa along with Parov Stelar), and also with his five-member band Timewarp Inc during the annual Music Day Festival. Band Members: Angelos Stoumpos (arranger, composer, producer, dj, electronics, keys, mastering engineer) Salomi Vasiliadou (vocals) Tasos Fotiou (sax/flute) Dj Kommit (scratch) Irene G. (promotion - bookings - press) Live sets: http://www.timewarpmusic.org/timewarpinc.html TIMEWARP INC _ Soundsystem (Live) Technical Rider: 1 SHURE SM58S SHURE MICROPHONES 2x 2 MOTU 828 MKII MOTU 1x 3 ONE BIG STAGE TABLE (200X100cm) 4 AUDIX ADX10 FLP AUDIX MICROPHONES 1x 5 LAPTOP STAND (MAC BOOK PRO) 1x 6 KEYBOARD STAND 1x 7 CONGAS SET 1x 8 BONGOS SET 1x 9 Stage monitors Stage monitors 6x 10 Stage Digital Sound console 24 or 32 Channels with 8 Busses Accommodation / Catering (6 People) Hotel Room **** Dinner before the show.

WKT-Connection-Nov-Dec-2020.Pdf

BROADBAND: HELPING YOU SERVE NOVEMBER/DECEMBER 2020 CONNECTION Published for the members of West Kentucky & Tennessee Telecommunications Cooperative DEER, OH DEER Blood River Outfitters offers hunting experience SPREADING THE WORD Churches use technology to reach more people SERVICE AND SOLACE Broadband powers community outreach WK&T Sports showcases local athletes INDUSTRY NEWS Rural Connections By SHIRLEY BLOOMFIELD, CEO NTCA–The Rural Broadband Association Being thankful for broadband in 2020 When you’re making your list of things to be thankful for this season, make room for this: “access to broadband from a reliable, community-based provider.” This year has taught us many things, one being that broadband is vital to so many areas of our lives — work, school, health and more. I recently spoke with a journalist who has been covering the gaps in broadband connectivity across our country. She lives in a beautiful community in the mountains of Vermont and is lucky to be able to download emails — forget anything like streaming or VPN access. She has learned from working with NTCA and some of our members that building broadband is not a cheap proposition. There are physical hurdles (Vermont mountains?) that make the task even more formidable. Several months into a remote world, Hot spots rely on fast internet networks Wired up Connecting rural communities to reliable broadband networks represents a vital challenge for not only individual states but also the nation as a whole. Jobs, education, health care and more increasingly rely on fast internet access. As state and national policymakers consider strategies to expand broadband networks, weighing the benefits of an often misunderstood technology might prove beneficial.

Module 9: Suppression, Communication, and Mop-Up

Module 9: Suppression, Communication, and Mop-up Topic 1: Introduction Module introduction Narration Script: Just about everything you do in the wildland fire service is designed to prepare you for the moment when you get the call to report for duty. You grab your gear, drive to the base, and get your briefing and assignment by Command. Last week, you went to a grass fire next to a road. Today the call is a wildland fire in a remote area. It’s time to put your training to the real test. As you drive to the scene, you gather more information and begin thinking about your response. What kind of conditions are you going to find? Will you have enough water? Which attack strategy might be most appropriate? In other words, how are you going to handle suppression, communication, and mop-up? It’s time to do what you joined up for—to fight and manage fires—always remembering to provide for safety first. Fire suppression methods introduction Now it’s time to find out what you’ll actually be doing on the fireline. This module introduces you to the many suppression techniques you have at your disposal to control and extinguish wildland fire. This is a broad (and deep!) module where you’ll find information on: • Breaking the fire triangle • Fire suppression techniques and safety • Supporting heavy equipment operations and airdrops • Communications • Mop-up and patrol A wildland response can make for a long day, week, or even a month. This module will help you prepare for getting the job done. -

3 2021 CURRENTS Web2 Vb

Vote Tue. 4/6 School Board & State Ed. Super. Page 4 Info. Daylight Saving Spring ahead Sunday March 14 FREE! News You Can Use • Riverwest, Harambee and The East Side Vol 20 Issue 3 MARCH 2021 Kovac & Coggs are our Alderpersons in 2020 Follies never happened in 2020 Ballot Box Page 18 Easter March 23 FREE! News You Can Use • Riverwest, Harambee and The East Side Vol 19 Issue 3 March 2020 FREE! THE COMMUNITY VOICE OF MILWAUKEE’S LEFT BANK Vol 7 Issue 3 March 2008 Where have you put your eggs? Elections 2020 by Vince Bushell the vote the candidates receive, but a front runner will be hard to beat coming out of To avoid confusion, let me explain the Super Tuesday election with a big lead. metaphor in the title of this piece. The id- Right now, Bernie Sanders is highly favored iom, “Don’t put all your eggs in one basket”, for the Super Tuesday election, which in- Garden of the Month .............. 2 in this case, refers to risking the future of cludes big states like California and Texas. government in one office, the President of Trump will be the Republican nominee the United States. unless something highly unusual happens In the spring run off primary on Feb- between now and the November election. ruary 18th in the city of Milwaukee only 67 thousand of 289 thousand registered voters State Supreme Court Justice voted. That is 23 percent voted. Those are Change at the Supreme Court level in the numbers. the State of Wisconsin is slow.