F. Zecca (ed.), Il Cinema Della Convergenza. Industria

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

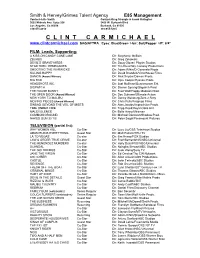

Hervey-Grimes-Resume.Pdf

CLINT CARMICHAEL www.clintcarmichael.com SAG/AFTRA Eyes: Blue/Green Hair: Salt/Pepper HT: 6’4” Smith & Hervey/Grimes Talent Agency, Contact-Julie Smith, 3002 Midvale Ave. Suite 206, Los Angeles, Ca 90034, 310-475-2010. E85 Management, Contact-Greg Strangis & Jacob Gallagher, 3406 W. Burbank Blvd, Burbank, Ca 91505, 310-955-5835. FILM: Leads, Supporting: A KISS ON CANDY CANE LANE Dir: Stephanie McBain ZBURBS Dir: Greg Zekowski DEVIL’S GRAVEYARDS Dir: Doug Glover/ Pilgrim Studios STAR TREK: RENEGADES Dir: Tim Russ/Sky Conway Productions SHOOTING THE WARWICKS Dir: Adam Rifkin/D-Generate Prods KILLING HAPPY Dir: David Brandvik/Victorhouse Films DAMON (Award Winner) Dir: Nick Snyder/Damon Prods. BIG BAD Dir: Opie Cooper/Eyevox Prods. HEADSHOTS INC. Dir: Joel Hoffman/Quarterwater Ent. DISPATCH Dir: Steven Sprung/Dispatch Prod. THE HOUSE BUNNY Dir: Fred Wolf/Happy Madison Prod. THE OPEN DOOR (Award Winner) Dir: Doc Duhame/Ultimate Action NEW YORK TO MALIBU Dir: Danny Weisberg/Zero 2 Sixty MOVING PIECES (Award Winner) Dir: Chris Pulis/Froglegs Films SINBAD: BEYOND THE VEIL OF MISTS Dir: Alan Jacobs/Improvision Prods. TIME UNDER FIRE Dir: Tripp Reed/Royal Oaks Ent. MALEVOLENCE Dir: Belle Avery/Miramax COMMON GROUND Dir: Michael Donovan/Shadow Prod. NAKED GUN 33 1/3 Dir: Peter Segal/Paramount Pictures TELEVISION (partial list): WHY WOMEN KILL Co-Star Dir: Lucy Liu/CBS Television Studios ADAM RUINS EVERYTHING Guest Star Dir: Matt Pollack/TRU TV LA TO VEGAS Co-star Dir: Jim Hensz/FOX Studios LAW & ORDER TRUE CRIME Co-star Dir: Fred -

GLAAD Media Institute Began to Track LGBTQ Characters Who Have a Disability

Studio Responsibility IndexDeadline 2021 STUDIO RESPONSIBILITY INDEX 2021 From the desk of the President & CEO, Sarah Kate Ellis In 2013, GLAAD created the Studio Responsibility Index (SRI) to track lesbian, gay, bisexual, transgender, and queer (LGBTQ) inclusion in major studio films and to drive acceptance and meaningful LGBTQ inclusion. To date, we’ve seen and felt the great impact our TV research has had and its continued impact, driving creators and industry executives to do more and better. After several years of issuing this study, progress presented itself with the release of outstanding movies like Love, Simon, Blockers, and Rocketman hitting big screens in recent years, and we remain hopeful with the announcements of upcoming queer-inclusive movies originally set for theatrical distribution in 2020 and beyond. But no one could have predicted the impact of the COVID-19 global pandemic, and the ways it would uniquely disrupt and halt the theatrical distribution business these past sixteen months. [...] theatrical release windows and studios are testing different release models and patterns. We know for sure the immense power of the theatrical experience. Data proves that audiences crave the return to theaters for that communal experience after more than a year of isolation. Nielsen reports that 63 percent of Americans say they are “very or somewhat” eager to go to a movie theater as soon as possible within three months of COVID restrictions being lifted. May polling from movie ticket company Fandango found that 96% of 4,000 users surveyed plan to see “multiple movies” in theaters this summer with 87% listing “going to the movies” as the top slot in their summer plans. And, an April poll from Morning Consult/The Hollywood Reporter found that over 50 percent of respondents would likely purchase a film ticket within a -

DVD Movie List by Genre – Dec 2020

Action

| # | Movie Name | Year | Director | Stars | Category | Runtime |
|---|---|---|---|---|---|---|
| 560 | 2012 | 2009 | Roland Emmerich | John Cusack, Thandie Newton, Chiwetel Ejiofor | Action | 158 min |
| 356 | 10,000 BC | 2008 | Roland Emmerich | Steven Strait, Camilla Belle, Cliff Curtis | Action | 109 min |
| 408 | 12 Rounds | 2009 | Renny Harlin | John Cena, Ashley Scott, Aidan Gillen | Action | 108 min |
| 766 | 13 hours | 2016 | Michael Bay | John Krasinski, Pablo Schreiber, James Badge Dale | Action | 144 min |
| 231 | A Knight's Tale | 2001 | Brian Helgeland | Heath Ledger, Mark Addy, Rufus Sewell | Action | 132 min |
| 272 | Agent Cody Banks | 2003 | Harald Zwart | Frankie Muniz, Hilary Duff, Andrew Francis | Action | 102 min |
| 761 | American Gangster | 2007 | Ridley Scott | Denzel Washington, Russell Crowe, Chiwetel Ejiofor | Action | 113 min |
| 817 | American Sniper | 2014 | Clint Eastwood | Bradley Cooper, Sienna Miller, Kyle Gallner | Action | 133 min |
| 409 | Armageddon | 1998 | Michael Bay | Bruce Willis, Billy Bob Thornton, Ben Affleck | Action | 151 min |
| 517 | Avengers - Infinity War | 2018 | Anthony & Joe Russo | Robert Downey Jr., Chris Hemsworth, Mark Ruffalo | Action | 149 min |
| 865 | Avengers - Endgame | 2019 | Anthony & Joe Russo | Robert Downey Jr., Chris Evans, Mark Ruffalo | Action | 181 min |
| 592 | Bait | 2000 | Antoine Fuqua | Jamie Foxx, David Morse, Robert Pastorelli | Action | 119 min |
| 478 | Battle of Britain | 1969 | Guy Hamilton | Michael Caine, Trevor Howard, Harry Andrews | Action | 132 min |
| 551 | Beowulf | 2007 | Robert Zemeckis | Ray Winstone, Crispin Glover, Angelina Jolie | Action | 115 min |
| 747 | Best of the Best | 1989 | Robert Radler | Eric Roberts, James Earl Jones, Sally Kirkland | Action | 97 min |
| 518 | Black Panther | 2018 | Ryan Coogler | Chadwick Boseman, Michael B. Jordan, Lupita Nyong'o | Action | 134 min |
| 526 | Blade | 1998 | Stephen Norrington | Wesley Snipes, Stephen Dorff, Kris Kristofferson | Action | 120 min |
| 531 | Blade 2 | 2002 | Guillermo del Toro | Wesley Snipes, Kris Kristofferson, Ron Perlman | Action | 117 min |
| 527 | Blade Trinity | 2004 | David S. - | | | |

Women in Business Awards Luncheon at the Hotel Irvine, Where Aston Martin Americas President Laura Schwab Delivered the Keynote Address

WOMEN IN BUSINESS NOMINEES START ON PAGE B-60 INSIDE 2019 WINNERS GO BIG IN IRVINE, LAND NEW PARTNERS, INVESTMENTS PAGE 30 PRESENTED BY DIAMOND SPONSOR PLATINUM SPONSORS GOLD SPONSOR SILVER SPONSORS 30 ORANGE COUNTY BUSINESS JOURNAL www.ocbj.com OCTOBER 5, 2020 Winning Execs Don’t Rest on Their Laurels $1B Cancer Center Underway; Military Wins; Spanish Drug Investment Orange County’s business community last year celebrated the Business Journal’s 25th annual Women in Business Awards luncheon at the Hotel Irvine, where Aston Martin Americas President Laura Schwab delivered the keynote address. The winners, selected from 200 nominees, have not been resting on their laurels, even in the era of the coronavirus. Here are updates on what the five winners have been doing. -Peter J. Brennan Avatar Partners: Shortly after Marlo Brooke won the Business Journal’s award for co-founding Huntington Beach-based Avatar Partners Inc., she was accepted into the Forbes Technology Council, an invitation-only community [...] (AR) quality assurance solution for the U.S. Navy for aircraft wiring maintenance for the Naval Air Systems Command’s Boeing V-22 Osprey aircraft. Then the Air Force is using Avatar’s solu- [...] City of Hope: As president of the City of Hope Orange County, Annette Walker is orchestrating a $1 billion project to build one of the biggest, and scientifically advanced, cancer research centers in the world. [...] employees down from Duarte. Area universities could partner with City of Hope. While the larger campus near the Orange County Great Park is being built, Walker in -

Knowing Good Sex Pays Off: the Image of the Journalist As a Famous, Exciting and Chic Sex Columnist Named Carrie Bradshaw in HBO’S Sex and the City

Knowing Good Sex Pays Off: The Image of the Journalist as a Famous, Exciting and Chic Sex Columnist Named Carrie Bradshaw in HBO’s Sex and the City By Bibi Wardak Abstract New York Star sex columnist Carrie Bradshaw lives the life of a celebrity in HBO’s Sex and the City. She mingles with the New York City elite at extravagant parties, dates the city’s most influential men and enjoys the adoration of fans. But Bradshaw echoes the image of many female journalists in popular culture when it comes to romance. Bradshaw is thirty-something, unmarried and unsure about having children. Despite having a successful career and loyal friends, she feels unfulfilled after each failed romantic relationship. I. Introduction “A wildly successful career and a relationship -- I was afraid…women only get one or the other.”1 That’s a fear Carrie Bradshaw just can’t shake. The sex columnist for the New York Star is unmarried, career-oriented and unsure if she will ever have a traditional family.2 Just like other modern sob sisters, she is romantically unfulfilled and has sacrificed aspects of her personal life for professional success.3 Bradshaw, played by Sarah Jessica Parker in HBO’s hit television show Sex and the City, portrays a stereotypical image of female journalists found in television and film.4 She and other female journalists in the series struggle to balance a successful career and satisfying romantic life. -

King, Michael Patrick (B

King, Michael Patrick (b. 1954) by Craig Kaczorowski Encyclopedia Copyright © 2015, glbtq, Inc. Entry Copyright © 2014 glbtq, Inc. Reprinted from http://www.glbtq.com Writer, director, and producer Michael Patrick King has been creatively involved in such television series as the ground-breaking, gay-themed Will & Grace, and the glbtq-friendly shows Sex and the City and The Comeback, among others. (Image: Michael Patrick King. Video capture from a YouTube video.) He was born on September 14, 1954, the only son among four children of a working-class, Irish-American family in Scranton, Pennsylvania. Among various other jobs, his father delivered coal, and his mother ran a Krispy Kreme donut shop. In a 2011 interview, King noted that he knew from an early age that he was "going to go into show business." Toward that goal, after graduating from a Scranton public high school, he applied to New York City's Juilliard School, one of the premier performing arts conservatories in the country. Unfortunately, his family could not afford Juilliard's high tuition, and King instead attended the more affordable Mercyhurst University, a Catholic liberal arts college in Erie, Pennsylvania. Mercyhurst had a small theater program at the time, which King later learned to appreciate, explaining that he received invaluable personal attention from the faculty and was allowed to explore and grow his talent at his own pace. However, he left college after three years without graduating, driven, he said, by a desire to move to New York and "make it as an actor." In New York, King worked multiple jobs, such as waiting on tables and unloading buses at night, while studying acting during the day at the famed Lee Strasberg Theatre Institute. -

Prohibiting Product Placement and the Use of Characters in Marketing to Children by Professor Angela J. Campbell Georgetown Univ

PROHIBITING PRODUCT PLACEMENT AND THE USE OF CHARACTERS IN MARKETING TO CHILDREN BY PROFESSOR ANGELA J. CAMPBELL [1], GEORGETOWN UNIVERSITY LAW CENTER (DRAFT September 7, 2005). [1] Professor Campbell thanks Natalie Smith for her excellent research assistance, Russell Sullivan for pointing out examples of product placements, and David Vladeck, Dale Kunkel, Jennifer Prime, and Marvin Ammori for their helpful suggestions. Contents: Introduction; I. Product Placements (A. The Practice of Product Placement; B. The Regulation of Product Placements); II. Character Marketing (A. The Practice of Celebrity Spokes-Character Marketing; B. The Regulation of Spokes-Character Marketing: 1. FCC Regulation of Host-Selling; 2. CARU Guidelines; 3. Federal Trade Commission) -

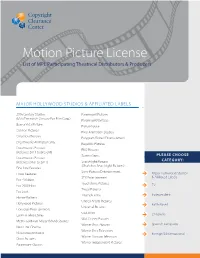

Motion Picture License List of MPL Participating Theatrical Distributors & Producers

Motion Picture License List of MPL Participating Theatrical Distributors & Producers. MAJOR HOLLYWOOD STUDIOS & AFFILIATED LABELS: 20th Century Studios (f/k/a Twentieth Century Fox Film Corp.); Buena Vista Pictures; Cannon Pictures; Columbia Pictures; Dreamworks Animation SKG; Dreamworks Pictures (Releases 2011 to present); Dreamworks Pictures (Releases prior to 2011); Fine Line Features; Focus Features; Fox - Walden; Fox 2000 Films; Fox Look; Hanna-Barbera; Hollywood Pictures; Lionsgate Entertainment; Lorimar Telepictures; Metro-Goldwyn-Mayer (MGM) Studios; New Line Cinema; Nickelodeon Movies; Orion Pictures; Paramount Classics; Paramount Pictures; Paramount Vantage; Picturehouse; Pixar Animation Studios; Polygram Filmed Entertainment; Republic Pictures; RKO Pictures; Screen Gems; Searchlight Pictures (f/k/a Fox Searchlight Pictures); Sony Pictures Entertainment; STX Entertainment; Touchstone Pictures; Tristar Pictures; Triumph Films; United Artists Pictures; Universal Pictures; USA Films; Walt Disney Pictures; Warner Bros. Pictures; Warner Bros. Television; Warner Horizon Television; Warner Independent Pictures. TV: 41 Entertainment LLC; A&E Networks Productions; Abso Lutely Productions; Agatha Christie Productions; Al Dakheel Inc; Alcon Television; All-In-Production Gmbh; Ambi Exclusive Acquisitions; Ditial Lifestyle Studios; DIY Network Productions; East West Documentaries Ltd; Elle Driver; Emporium Productions; Endor Productions; Fabrik Entertainment -

Tube Convolutional Neural Network (T-CNN) for Action Detection in Videos

Tube Convolutional Neural Network (T-CNN) for Action Detection in Videos Rui Hou, Chen Chen, Mubarak Shah Center for Research in Computer Vision (CRCV), University of Central Florida (UCF) Abstract Deep learning has been demonstrated to achieve excellent results for image classification and object detection. However, the impact of deep learning on video analysis has been limited due to complexity of video data and lack of annotations. Previous convolutional neural networks (CNN) based video action detection approaches usually consist of two major steps: frame-level action proposal generation and association of proposals across frames. Also, most of these methods employ two-stream CNN framework to handle spatial and temporal feature separately. In this paper, we propose an end-to-end deep network called Tube Convolutional Neural Network (T-CNN) for action detection in videos. The proposed architecture is a unified deep network that is able to recognize and localize action based on 3D convolution features. A video is first divided into equal length clips and next for each clip a set of tube proposals are generated based on 3D Convolutional Network (ConvNet) features. Finally, the tube proposals of different clips are linked together employing network flow and spatio-temporal action detection is performed using these linked video proposals. Extensive experiments on several video datasets demonstrate the superior performance of T-CNN for classifying and localizing actions in both trimmed [...] Figure 1: Overview of the proposed Tube Convolutional Neural Network (T-CNN). [...] tracking based approaches [32]. Moreover, in order to capture both spatial and temporal information of an action, two-stream networks (a spatial CNN and a motion CNN) are used. -
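
The first step the abstract describes, dividing a video into equal-length clips, can be illustrated with a minimal Python sketch. This is not the authors' code: the function name `split_into_clips`, the default clip length of 8 frames, and the choice to drop leftover trailing frames are all assumptions made for illustration.

```python
from typing import List, Sequence, TypeVar

Frame = TypeVar("Frame")


def split_into_clips(frames: Sequence[Frame], clip_len: int = 8) -> List[List[Frame]]:
    """Split a frame sequence into consecutive, non-overlapping clips of equal length.

    Assumption: trailing frames that do not fill a whole clip are dropped.
    """
    if clip_len <= 0:
        raise ValueError("clip_len must be positive")
    n_clips = len(frames) // clip_len
    # Each clip is a contiguous slice of clip_len frames.
    return [list(frames[i * clip_len:(i + 1) * clip_len]) for i in range(n_clips)]


# Integers stand in for decoded frames in this toy example.
video = list(range(20))
clips = split_into_clips(video, clip_len=8)
```

In a full pipeline each clip would then be passed to a 3D ConvNet to produce tube proposals; that stage is outside the scope of this sketch.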

Turner Classic Movies, Walt Disney World Resort and the Walt Disney Studios Team up to Share Stories Centered on Classic Film

Nov. 26, 2014 Turner Classic Movies, Walt Disney World Resort and The Walt Disney Studios Team Up to Share Stories Centered on Classic Film Features Include New TCM Integration in Theme Park Attraction and On-Air Showcase of Disney Treasures Turner Classic Movies (TCM) today announced new strategic relationships with Walt Disney World Resort and The Walt Disney Studios to broaden its reach in family entertainment with joint efforts centered on classic film. At Disney's Hollywood Studios, the "The Great Movie Ride" Attraction highlights some of the most famous film moments in silver screen history and is set to receive a TCM-curated refresh of the pre-show and the finale. TCM branding will be integrated into the attraction's marquee as well as banners, posters and display windows outside the attraction. In the queue line, families will enjoy new digital movie posters and will watch a new pre-ride video with TCM host Robert Osborne providing illuminating insights from the movies some of which guests will experience during the ride. The finale will feature an all-new montage of classic movie moments. After guests exit the attraction, they will have a photo opportunity with a classic movie theme. The TCM-curated refresh is set to launch by spring. As part of the relationship with The Walt Disney Studios, TCM will launch Treasures from the Disney Vault, a recurring on-air showcase that will include such live-action Disney features as Treasure Island (1950), Darby O'Gill and the Little People (1959) and Pollyanna (1960); animated films like The Three Caballeros (1944) and The Adventures of Ichabod and Mr. -

Render for CNN: Viewpoint Estimation in Images Using Cnns Trained with Rendered 3D Model Views

Render for CNN: Viewpoint Estimation in Images Using CNNs Trained with Rendered 3D Model Views Hao Su∗, Charles R. Qi∗, Yangyan Li, Leonidas J. Guibas Stanford University Abstract Object viewpoint estimation from 2D images is an essential task in computer vision. However, two issues hinder its progress: scarcity of training data with viewpoint annotations, and a lack of powerful features. Inspired by the growing availability of 3D models, we propose a framework to address both issues by combining render-based image synthesis and CNNs (Convolutional Neural Networks). We believe that 3D models have the potential in generating a large number of images of high variation, which can be well exploited by deep CNN with a high learning capacity. Towards this goal, we propose a scalable and overfit-resistant image synthesis pipeline, together with a novel CNN specifically tailored for the viewpoint estimation task. Experimentally, we show that the viewpoint estimation from our pipeline can significantly outperform state-of-the-art methods on PASCAL 3D+ benchmark. Figure 1. System overview. We synthesize training images by overlaying images rendered from large 3D model collections on top of real images. CNN is trained to map images to the ground truth object viewpoints. The training data is a combination of real images and synthesized images. The learned CNN is applied to estimate the viewpoints of objects in real images. Project homepage is at https://shapenet.cs.stanford.edu/projects/RenderForCNN 1. Introduction 3D recognition is a cornerstone problem in many vi- [...] However, this is contrary to the recent finding: features learned by task-specific supervision leads to much better task performance [16, 11, 14]. -
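
The synthesis step named in the Figure 1 caption, overlaying a rendered image on top of a real image, can be sketched as plain alpha compositing. This is an illustrative assumption rather than the paper's pipeline: the function name `overlay`, the nested-list image representation, and the use of a per-pixel alpha channel from the renderer are all hypothetical.

```python
from typing import List, Tuple

RGB = Tuple[float, float, float]
RGBA = Tuple[float, float, float, float]


def overlay(rendered: List[List[RGBA]], background: List[List[RGB]]) -> List[List[RGB]]:
    """Alpha-composite an RGBA rendering over an RGB background of the same size.

    Channel values and alpha are assumed to lie in [0, 1].
    """
    if len(rendered) != len(background) or any(
        len(fg_row) != len(bg_row) for fg_row, bg_row in zip(rendered, background)
    ):
        raise ValueError("rendered and background must have the same size")
    out: List[List[RGB]] = []
    for fg_row, bg_row in zip(rendered, background):
        row: List[RGB] = []
        for (r, g, b, a), (br, bg, bb) in zip(fg_row, bg_row):
            # Standard "over" operator: alpha * foreground + (1 - alpha) * background.
            row.append((a * r + (1 - a) * br, a * g + (1 - a) * bg, a * b + (1 - a) * bb))
        out.append(row)
    return out


# One opaque red rendered pixel and one fully transparent pixel over a two-pixel background.
rendered = [[(1.0, 0.0, 0.0, 1.0), (0.0, 0.0, 0.0, 0.0)]]
background = [[(0.0, 0.0, 1.0), (0.0, 1.0, 0.0)]]
composite = overlay(rendered, background)
```

Where the rendering is opaque the object pixel wins, and where it is transparent the real background shows through, which is what lets one rendered object be pasted onto many different real images.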

Wonderful! 143: Rare, Exclusive Gak Published July 29Th, 2020 Listen on Themcelroy.Family

Wonderful! 143: Rare, Exclusive Gak
Published July 29th, 2020
Listen on TheMcElroy.family
[theme music plays]
Rachel: I'm gonna get so sweaty in here.
Griffin: Are you?
Rachel: It is… hotototot.
Griffin: Okay. Is this the show? Are we in it?
Rachel: Hi, this is Rachel McElroy!
Griffin: Hi, this is Griffin McElroy.
Rachel: And this is Wonderful!
Griffin: It's gettin' sweaaatyyy!
Rachel: [laughs]
Griffin: It's not... it doesn't feel that bad to me.
Rachel: See, you're used to it.
Griffin: Y'know what it was? Mm, I had my big fat gaming rig pumping out pixels and frames. Comin' at me hot and heavy. Master Chief was there. Just so fuckin'... just poundin' out the bad guys, and it was getting hot and sweaty in here. So I apologize.
Rachel: Griffin has a very sparse office that has 700 pieces of electronic equipment in it.
Griffin: True. So then, one might actually argue it's not sparse at all. In fact, it is filled with electronic equipment. Yeah, that's true. I imagine if I get the PC running, I imagine if I get the 3D printer running, all at the same time, it's just gonna... it could be a sweat lodge. I could go on a real journey in here. But I don't think it's that bad, and we're only in here for a little bit, so let's…
Rachel: And I will also say that a lot of these electronics help you make a better podcast, which… is a timely thing.